Introduction

It turns out that the asymptotic theory of least squares estimation applies equally to the projection model and the linear CEF model. Therefore the results in this chapter will be stated for the broader projection model described in Section 2.18. Recall that the model is $Y = X'\beta + e$ with the linear projection coefficient $\beta = \left(\mathbb{E}[XX']\right)^{-1}\mathbb{E}[XY]$.

Maintained assumptions in this chapter will be random sampling (Assumption 1.2) and finite second moments (Assumption 2.1). We restate these here for clarity.

Assumption 7.1

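1. The variables $(Y_i, X_i)$, $i = 1, \dots, n$, are i.i.d.
2. $\mathbb{E}[Y^2] < \infty$.
3. $\mathbb{E}\|X\|^2 < \infty$.
4. $Q_{XX} = \mathbb{E}[XX']$ is positive definite.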

The distributional results will require a strengthening of these assumptions to finite fourth moments. We discuss the specific conditions in Section 7.3.

Consistency of Least Squares Estimator

In this section we use the weak law of large numbers (WLLN, Theorem 6.1 and Theorem 6.2) and continuous mapping theorem (CMT, Theorem 6.6) to show that the least squares estimator $\hat\beta$ is consistent for the projection coefficient $\beta$.

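This derivation is based on three key components. First, the OLS estimator can be written as a continuous function of a set of sample moments. Second, the WLLN shows that sample moments converge in probability to population moments. Third, the CMT states that continuous functions preserve convergence in probability. We now explain each step in brief and then in greater detail. First, observe that the OLS estimator

$$\hat\beta = \left(\frac{1}{n}\sum_{i=1}^n X_iX_i'\right)^{-1}\left(\frac{1}{n}\sum_{i=1}^n X_iY_i\right) = \widehat{Q}_{XX}^{-1}\widehat{Q}_{XY}$$

is a function of the sample moments $\widehat{Q}_{XX} = \frac{1}{n}\sum_{i=1}^n X_iX_i'$ and $\widehat{Q}_{XY} = \frac{1}{n}\sum_{i=1}^n X_iY_i$.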

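Second, by an application of the WLLN these sample moments converge in probability to their population expectations. Specifically, the fact that $(Y_i, X_i)$ are mutually i.i.d. implies that any function of $(Y_i, X_i)$ is i.i.d., including $X_iX_i'$ and $X_iY_i$. These variables also have finite expectations under Assumption 7.1. Under these conditions, the WLLN implies that as $n \to \infty$,

$$\widehat{Q}_{XX} = \frac{1}{n}\sum_{i=1}^n X_iX_i' \xrightarrow{p} \mathbb{E}[XX'] = Q_{XX} \tag{7.1}$$

and

$$\widehat{Q}_{XY} = \frac{1}{n}\sum_{i=1}^n X_iY_i \xrightarrow{p} \mathbb{E}[XY] = Q_{XY}.$$

Third, the CMT allows us to combine these equations to show that $\hat\beta$ converges in probability to $\beta$. Specifically, as $n \to \infty$,

$$\hat\beta = \widehat{Q}_{XX}^{-1}\widehat{Q}_{XY} \xrightarrow{p} Q_{XX}^{-1}Q_{XY} = \beta. \tag{7.2}$$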

We have shown that $\hat\beta \xrightarrow{p} \beta$ as $n \to \infty$. In words, the OLS estimator converges in probability to the projection coefficient vector $\beta$ as the sample size $n$ gets large.

To fully understand the application of the CMT we walk through it in detail. We can write

$$\hat\beta = g\left(\widehat{Q}_{XX}, \widehat{Q}_{XY}\right)$$

where $g(A, b) = A^{-1}b$ is a function of $A$ and $b$. The function $g(A, b)$ is a continuous function of $A$ and $b$ at all values of the arguments such that $A^{-1}$ exists. Assumption 7.1.4 specifies that $Q_{XX}$ is positive definite, which means that $Q_{XX}^{-1}$ exists. Thus $g(A, b)$ is continuous at $A = Q_{XX}$. This justifies the application of the CMT in (7.2).
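The decomposition $\hat\beta = g(\widehat{Q}_{XX}, \widehat{Q}_{XY})$ can be sketched numerically. The Python/NumPy sketch below forms the two sample moments, applies $g(A, b) = A^{-1}b$, and prints how far the estimate is from $\beta$ as $n$ grows. The data-generating process (a normal regressor, an intercept, Student-t errors) and the coefficient values are hypothetical choices for illustration only.

```python
import numpy as np

def ols_from_moments(X, Y):
    """beta_hat = g(Qxx_hat, Qxy_hat) with g(A, b) = A^{-1} b."""
    n = X.shape[0]
    Qxx_hat = X.T @ X / n   # (1/n) sum_i X_i X_i'
    Qxy_hat = X.T @ Y / n   # (1/n) sum_i X_i Y_i
    # solve() evaluates A^{-1} b without forming the inverse explicitly
    return np.linalg.solve(Qxx_hat, Qxy_hat)

rng = np.random.default_rng(0)
beta = np.array([0.5, 1.0])  # hypothetical (slope, intercept)
for n in (100, 10_000, 1_000_000):
    x = rng.normal(size=n)
    X = np.column_stack([x, np.ones(n)])
    e = rng.standard_t(df=5, size=n)  # mean zero, finite variance, independent of x
    Y = X @ beta + e
    b = ols_from_moments(X, Y)
    print(n, b, np.abs(b - beta).max())
```

Using `solve` rather than an explicit inverse is a numerical choice; mathematically it is the same map $g$.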

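For a slightly different demonstration of (7.2) recall that (4.6) implies that

$$\hat\beta - \beta = \widehat{Q}_{XX}^{-1}\widehat{Q}_{Xe} \tag{7.3}$$

where

$$\widehat{Q}_{Xe} = \frac{1}{n}\sum_{i=1}^n X_ie_i.$$

The WLLN and (2.25) imply

$$\widehat{Q}_{Xe} \xrightarrow{p} \mathbb{E}[Xe] = 0.$$

Therefore

$$\hat\beta - \beta = \widehat{Q}_{XX}^{-1}\widehat{Q}_{Xe} \xrightarrow{p} Q_{XX}^{-1}\,0 = 0$$

which is the same as $\hat\beta \xrightarrow{p} \beta$.

Theorem 7.1 Consistency of Least Squares. Under Assumption 7.1, $\widehat{Q}_{XX} \xrightarrow{p} Q_{XX}$, $\widehat{Q}_{XY} \xrightarrow{p} Q_{XY}$, $\widehat{Q}_{XX}^{-1} \xrightarrow{p} Q_{XX}^{-1}$, $\widehat{Q}_{Xe} \xrightarrow{p} 0$, and $\hat\beta \xrightarrow{p} \beta$ as $n \to \infty$.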

Theorem 7.1 states that the OLS estimator $\hat\beta$ converges in probability to $\beta$ as $n$ increases and thus $\hat\beta$ is consistent for $\beta$. In the stochastic order notation, Theorem 7.1 can be equivalently written as

$$\hat\beta = \beta + o_p(1). \tag{7.4}$$

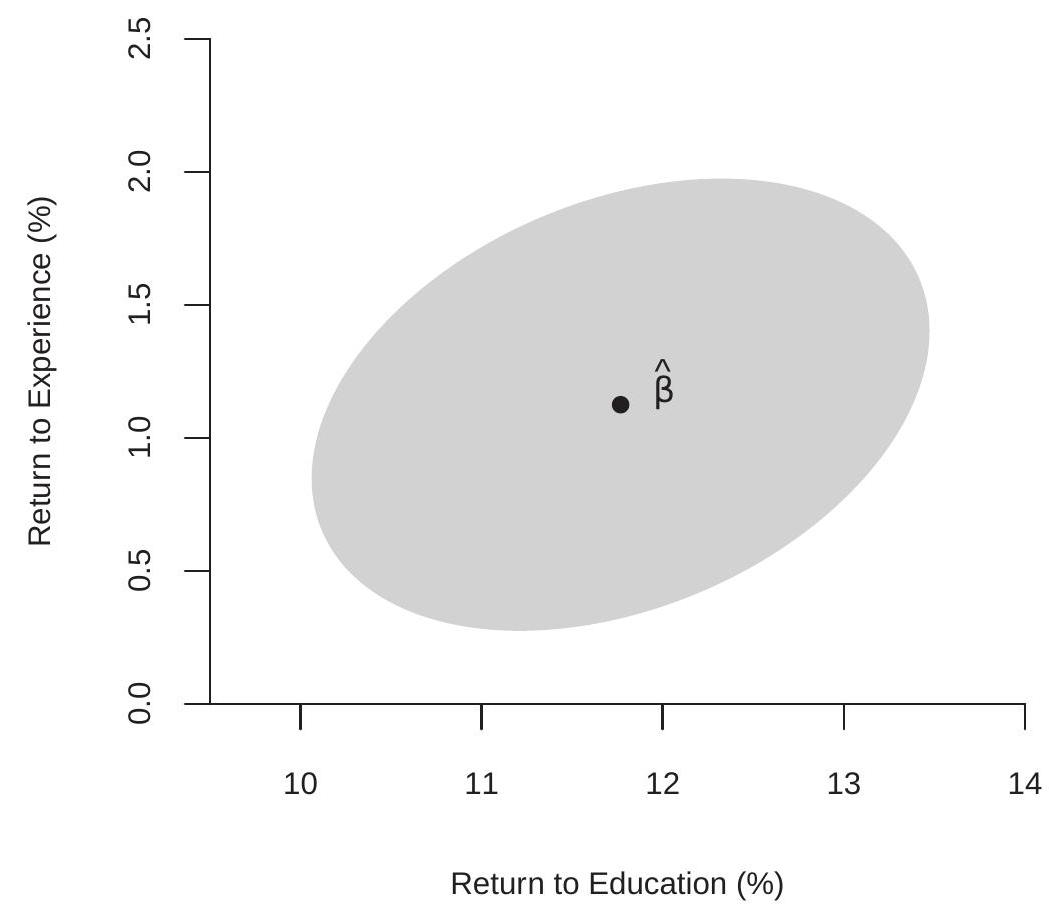

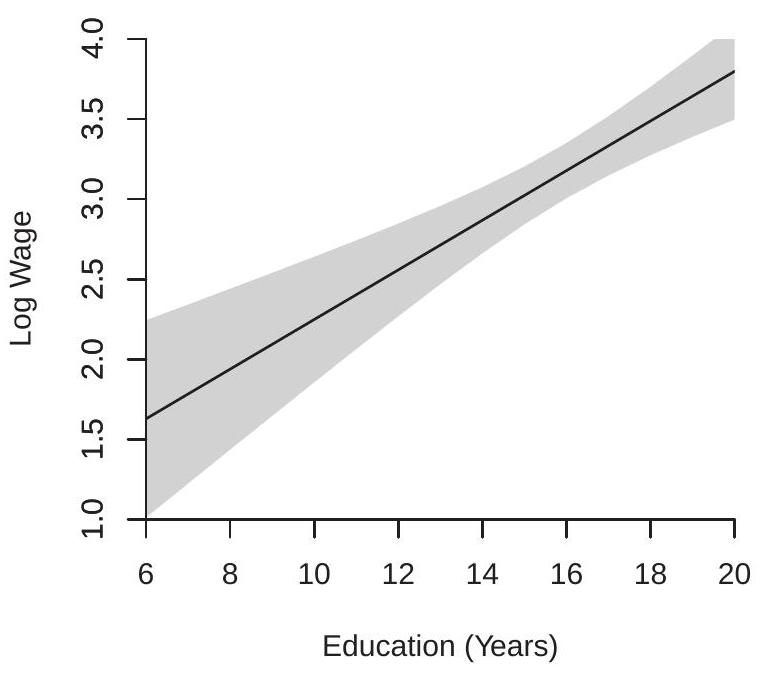

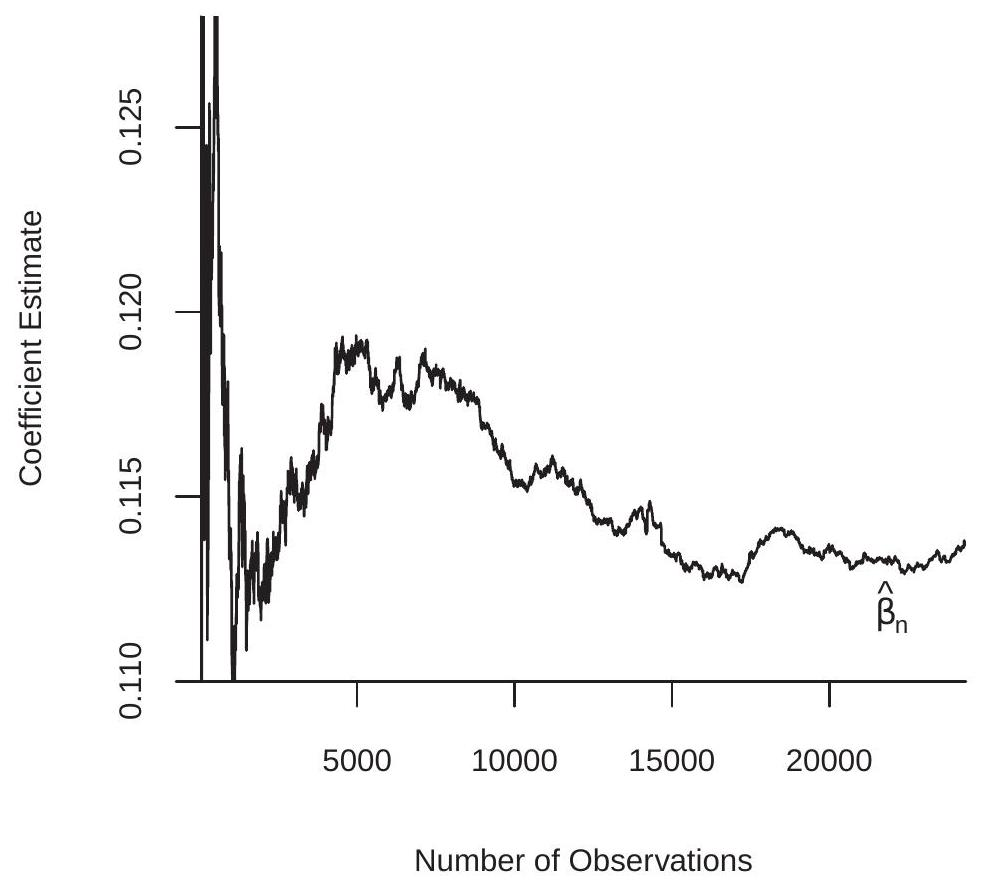

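To illustrate the effect of sample size on the least squares estimator consider the least squares regression

$$\log(wage) = \beta_1 \, education + \beta_2 \, experience + \beta_3 \, experience^2 + \beta_4 + e.$$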

We use the sample of 24,344 white men from the March 2009 CPS. We randomly sorted the observations and sequentially estimated the model by least squares starting with the first 5 observations and continuing until the full sample is used. The sequence of estimates is displayed in Figure 7.1. You can see how the least squares estimate changes with the sample size. As the number of observations increases it settles down to the full-sample estimate.

Figure 7.1: The Least-Squares Estimator as a Function of Sample Size
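A simulated analogue of Figure 7.1 can be produced by re-estimating the regression on the first $n$ observations for every $n$. This sketch does not use the CPS sample: the single-regressor design, its coefficients, and the sample size are hypothetical. Running sums of $X_iX_i'$ and $X_iY_i$ let each re-estimate reuse the previous work instead of refitting from scratch.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 5000
educ = rng.integers(8, 21, size=N).astype(float)        # hypothetical regressor
X = np.column_stack([educ, np.ones(N)])
Y = 0.1 * educ + 1.0 + rng.normal(scale=0.5, size=N)    # hypothetical DGP

# Running sums of X_i X_i' and X_i Y_i over the first n observations
XX_cum = np.cumsum(X[:, :, None] * X[:, None, :], axis=0)  # shape (N, 2, 2)
XY_cum = np.cumsum(X * Y[:, None], axis=0)                 # shape (N, 2)

ns = np.arange(5, N + 1)                                   # start with 5 observations
path = np.array([np.linalg.solve(XX_cum[n - 1], XY_cum[n - 1]) for n in ns])
slope_path = path[:, 0]
print(slope_path[[0, 95, 995, -1]])   # estimates at n = 5, 100, 1000, 5000
```

Plotting `slope_path` against `ns` gives a curve with the same qualitative shape as Figure 7.1: erratic for small $n$ and settling down as $n$ grows.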

Asymptotic Normality

We started this chapter discussing the need for an approximation to the distribution of the OLS estimator $\hat\beta$. In Section 7.2 we showed that $\hat\beta$ converges in probability to $\beta$. Consistency is a good first step, but in itself does not describe the distribution of the estimator. In this section we derive an approximation typically called the asymptotic distribution.

The derivation starts by writing the estimator as a function of sample moments. One of the moments must be written as a sum of zero-mean random vectors and normalized so that the central limit theorem can be applied. The steps are as follows.

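Take equation (7.3) and multiply it by $\sqrt{n}$. This yields the expression

$$\sqrt{n}\left(\hat\beta - \beta\right) = \left(\frac{1}{n}\sum_{i=1}^n X_iX_i'\right)^{-1}\left(\frac{1}{\sqrt{n}}\sum_{i=1}^n X_ie_i\right). \tag{7.5}$$

This shows that the normalized and centered estimator $\sqrt{n}(\hat\beta - \beta)$ is a function of the sample average $\frac{1}{n}\sum_{i=1}^n X_iX_i'$ and the normalized sample average $\frac{1}{\sqrt{n}}\sum_{i=1}^n X_ie_i$.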

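The random pairs $(Y_i, X_i)$ are i.i.d., meaning that they are independent across $i$ and identically distributed. Any function of $(Y_i, X_i)$ is also i.i.d. This includes $e_i = Y_i - X_i'\beta$ and the product $X_ie_i$. The latter is mean-zero ($\mathbb{E}[Xe] = 0$) and has $k \times k$ covariance matrix

$$\Omega = \mathbb{E}\left[(Xe)(Xe)'\right] = \mathbb{E}\left[XX'e^2\right]. \tag{7.6}$$

We show below that $\Omega$ has finite elements under a strengthening of Assumption 7.1. Since $X_ie_i$ is i.i.d., mean zero, and has finite variance, the central limit theorem (Theorem 6.3) implies

$$\frac{1}{\sqrt{n}}\sum_{i=1}^n X_ie_i \xrightarrow{d} \mathrm{N}(0, \Omega). \tag{7.7}$$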

We state the required conditions here.

Assumption 7.2

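1. The variables $(Y_i, X_i)$, $i = 1, \dots, n$, are i.i.d.
2. $\mathbb{E}[Y^4] < \infty$.
3. $\mathbb{E}\|X\|^4 < \infty$.
4. $Q_{XX} = \mathbb{E}[XX']$ is positive definite.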

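Assumption 7.2 implies that $\Omega < \infty$. To see this, take its $j\ell$-th element, $\mathbb{E}[X_jX_\ell e^2]$. Theorem 2.9.6 shows that $\mathbb{E}[e^4] < \infty$. By the expectation inequality (B.30), the $j\ell$-th element of $\Omega$ is bounded by

$$\left|\mathbb{E}\left[X_jX_\ell e^2\right]\right| \le \mathbb{E}\left|X_jX_\ell e^2\right| = \mathbb{E}\left[|X_j|\,|X_\ell|\,e^2\right].$$

By two applications of the Cauchy-Schwarz inequality (B.32), this is smaller than

$$\left(\mathbb{E}\left[X_j^2X_\ell^2\right]\right)^{1/2}\left(\mathbb{E}\left[e^4\right]\right)^{1/2} \le \left(\mathbb{E}\left[X_j^4\right]\right)^{1/4}\left(\mathbb{E}\left[X_\ell^4\right]\right)^{1/4}\left(\mathbb{E}\left[e^4\right]\right)^{1/2} < \infty$$

where the finiteness holds under Assumptions 7.2.2 and 7.2.3. Thus $\Omega < \infty$.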

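An alternative way to show that the elements of $\Omega$ are finite is by using a matrix norm $\|\cdot\|$ (see Appendix A.23). Then by the expectation inequality, the Cauchy-Schwarz inequality, Assumption 7.2.3, and $\mathbb{E}[e^4] < \infty$,

$$\|\Omega\| \le \mathbb{E}\left\|XX'e^2\right\| = \mathbb{E}\left[\|X\|^2e^2\right] \le \left(\mathbb{E}\|X\|^4\right)^{1/2}\left(\mathbb{E}\left[e^4\right]\right)^{1/2} < \infty.$$

This is a more compact argument (often described as more elegant) but such manipulations should not be done without understanding the notation and the applicability of each step of the argument.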

Regardless, the finiteness of the covariance matrix means that we can apply the multivariate CLT (Theorem 6.3).

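Theorem 7.2 Assumption 7.2 implies that

$$\Omega < \infty$$

and

$$\frac{1}{\sqrt{n}}\sum_{i=1}^n X_ie_i \xrightarrow{d} \mathrm{N}(0, \Omega)$$

as $n \to \infty$.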

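Putting together (7.1), (7.5), and (7.7),

$$\sqrt{n}\left(\hat\beta - \beta\right) \xrightarrow{d} Q_{XX}^{-1}\,\mathrm{N}(0, \Omega) = \mathrm{N}\left(0, Q_{XX}^{-1}\Omega Q_{XX}^{-1}\right)$$

as $n \to \infty$. The final equality follows from the property that linear combinations of normal vectors are also normal (Theorem 5.2).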

We have derived the asymptotic normal approximation to the distribution of the least squares estimator.

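Theorem 7.3 Asymptotic Normality of Least Squares Estimator. Under Assumption 7.2, as $n \to \infty$,

$$\sqrt{n}\left(\hat\beta - \beta\right) \xrightarrow{d} \mathrm{N}(0, V_\beta)$$

where $Q_{XX} = \mathbb{E}[XX']$, $\Omega = \mathbb{E}[XX'e^2]$, and

$$V_\beta = Q_{XX}^{-1}\Omega Q_{XX}^{-1}. \tag{7.8}$$

In the stochastic order notation, Theorem 7.3 implies that $\hat\beta = \beta + O_p(n^{-1/2})$, which is stronger than (7.4).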

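The matrix $V_\beta = Q_{XX}^{-1}\Omega Q_{XX}^{-1}$ is the variance of the asymptotic distribution of $\sqrt{n}(\hat\beta - \beta)$. Consequently, $V_\beta$ is often referred to as the asymptotic covariance matrix of $\hat\beta$. The expression $V_\beta = Q_{XX}^{-1}\Omega Q_{XX}^{-1}$ is called a sandwich form as the matrix $\Omega$ is sandwiched between two copies of $Q_{XX}^{-1}$. It is useful to compare the variance of the asymptotic distribution given in (7.8) and the finite-sample conditional variance in the CEF model as given in (4.10):

$$V_{\hat\beta} = \operatorname{var}\left[\hat\beta \mid \boldsymbol{X}\right] = \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}\left(\boldsymbol{X}'\boldsymbol{D}\boldsymbol{X}\right)\left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1} \tag{7.9}$$

where $\boldsymbol{D} = \operatorname{diag}(\sigma_1^2, \dots, \sigma_n^2)$ and $\sigma_i^2 = \mathbb{E}[e_i^2 \mid X_i]$.

Notice that $V_{\hat\beta}$ is the exact conditional variance of $\hat\beta$ and $V_\beta$ is the asymptotic variance of $\sqrt{n}(\hat\beta - \beta)$. Thus $V_\beta$ should be (roughly) $n$ times as large as $V_{\hat\beta}$, or $V_\beta \approx nV_{\hat\beta}$. Indeed, multiplying (7.9) by $n$ and distributing we find

$$nV_{\hat\beta} = \left(\frac{1}{n}\boldsymbol{X}'\boldsymbol{X}\right)^{-1}\left(\frac{1}{n}\boldsymbol{X}'\boldsymbol{D}\boldsymbol{X}\right)\left(\frac{1}{n}\boldsymbol{X}'\boldsymbol{X}\right)^{-1}$$

which looks like an estimator of $V_\beta$. Indeed, $nV_{\hat\beta} \xrightarrow{p} V_\beta$ as $n \to \infty$. The expression $V_{\hat\beta}$ is useful for practical inference (such as computation of standard errors and tests) as it is the variance of the estimator $\hat\beta$, while $V_\beta$ is useful for asymptotic theory as it is well defined in the limit as $n$ goes to infinity. We will make use of both symbols and it will be advisable to adhere to this convention.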
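A small Monte Carlo makes the sandwich formula concrete. In the hypothetical design below, $x \sim \mathrm{N}(0,1)$, $X = (x, 1)'$ and $\mathbb{E}[e^2 \mid x] = 1 + x^2$, so $Q_{XX} = I$, $\Omega = \operatorname{diag}(4, 2)$ and $V_\beta = \operatorname{diag}(4, 2)$. For contrast, $Q_{XX}^{-1}\mathbb{E}[e^2]$, which ignores the dependence of $e^2$ on $x$, would give $\operatorname{diag}(2, 2)$. The simulated $n\operatorname{var}(\hat\beta)$ should be close to $V_\beta$. The sample size, replication count and coefficients are arbitrary choices for this sketch.

```python
import numpy as np

rng = np.random.default_rng(2)
n, reps = 200, 4000
beta = np.array([0.5, 1.0])                      # hypothetical (slope, intercept)
draws = np.empty((reps, 2))
for r in range(reps):
    x = rng.normal(size=n)
    X = np.column_stack([x, np.ones(n)])
    e = rng.normal(size=n) * np.sqrt(1.0 + x**2)  # E[e^2 | x] = 1 + x^2
    Y = X @ beta + e
    draws[r] = np.linalg.lstsq(X, Y, rcond=None)[0]

mc_V = n * np.cov(draws, rowvar=False)           # Monte Carlo n * var(beta_hat)

Q = np.eye(2)                                    # E[XX'] for this design
Omega = np.diag([4.0, 2.0])                      # E[XX'(1 + x^2)]
Q_inv = np.linalg.inv(Q)
V_beta = Q_inv @ Omega @ Q_inv                   # sandwich form
V_homo = Q_inv * 2.0                             # Q^{-1} E[e^2], ignores heteroskedasticity
print(np.round(mc_V, 2))
print(V_beta)
print(V_homo)
```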

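There is a special case where $\Omega$ and $V_\beta$ simplify. Suppose that

$$\operatorname{cov}\left(XX', e^2\right) = 0. \tag{7.10}$$

Condition (7.10) holds in the homoskedastic linear regression model but is somewhat broader. Under (7.10) the asymptotic variance formulae simplify as

$$\Omega = \mathbb{E}[XX']\,\mathbb{E}[e^2] = Q_{XX}\sigma^2$$

$$V_\beta = Q_{XX}^{-1}\Omega Q_{XX}^{-1} = Q_{XX}^{-1}\sigma^2 \equiv V_\beta^0. \tag{7.11}$$

In (7.11) we define $V_\beta^0 = Q_{XX}^{-1}\sigma^2$ whether (7.10) is true or false. When (7.10) is true then $V_\beta = V_\beta^0$, otherwise $V_\beta \ne V_\beta^0$. We call $V_\beta^0$ the homoskedastic asymptotic covariance matrix.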

Theorem 7.3 states that the sampling distribution of the least squares estimator, after rescaling, is approximately normal when the sample size $n$ is sufficiently large. This holds true for all joint distributions of $(Y, X)$ which satisfy the conditions of Assumption 7.2. Consequently, asymptotic normality is routinely used to approximate the finite sample distribution of $\sqrt{n}(\hat\beta - \beta)$.

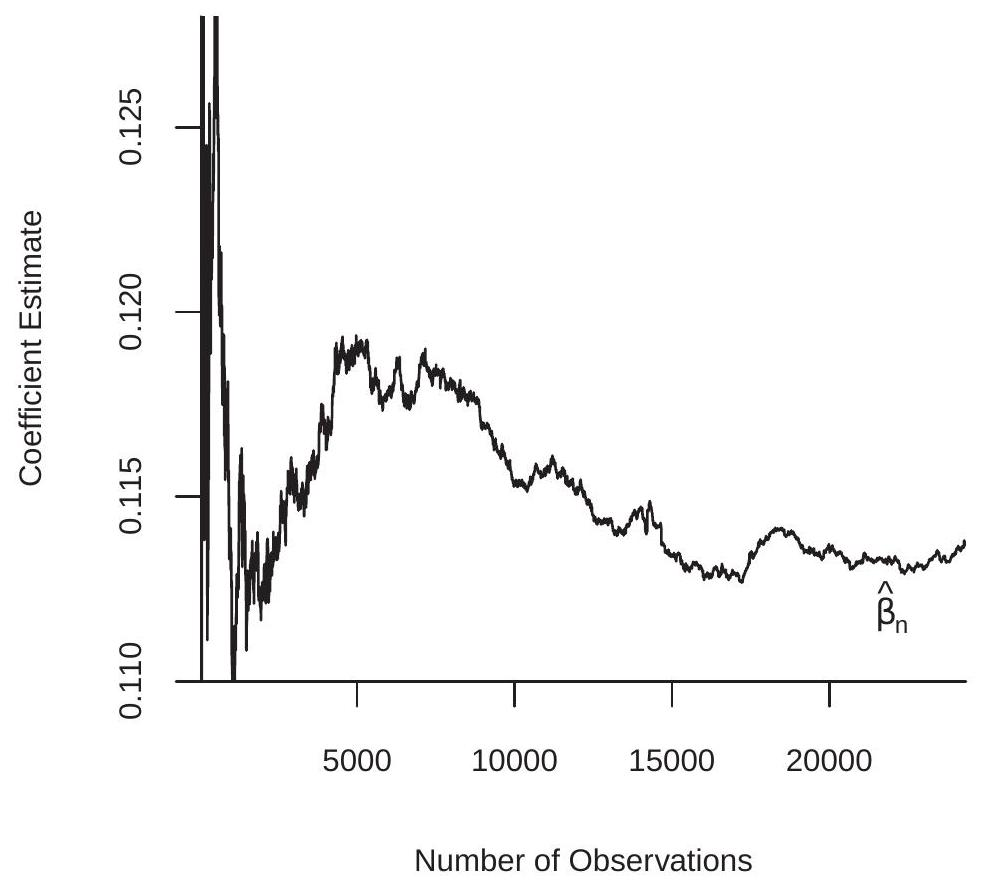

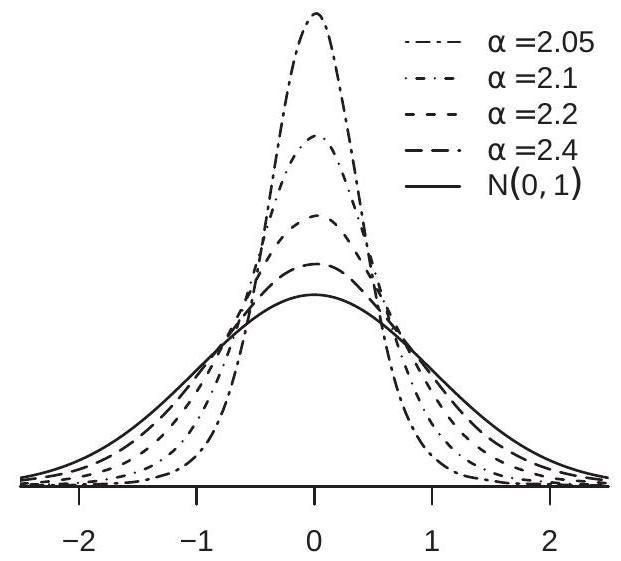

A difficulty is that for any fixed $n$ the sampling distribution of $\hat\beta$ can be arbitrarily far from the normal distribution. The normal approximation improves as $n$ increases, but how large should $n$ be in order for the approximation to be useful? Unfortunately, there is no simple answer to this reasonable question. The trouble is that no matter how large the sample size, the normal approximation is arbitrarily poor for some data distribution satisfying the assumptions. We illustrate this problem using a simulation. Let $Y = \beta_1X + \beta_2 + e$ where $X$ is $\mathrm{N}(0, 1)$ and $e$ is independent of $X$ with the Double Pareto density $f(e) = \frac{\alpha}{2}|e|^{-\alpha-1}$, $|e| \ge 1$. If $\alpha > 2$ the error $e$ has zero mean and variance $\alpha/(\alpha - 2)$. As $\alpha$ approaches 2, however, its variance diverges to infinity. In this context the normalized least squares slope estimator $\sqrt{n(\alpha - 2)/\alpha}\,(\hat\beta_1 - \beta_1)$ has the $\mathrm{N}(0, 1)$ asymptotic distribution for any $\alpha > 2$. In Figure 7.2(a) we display the finite sample densities of this normalized estimator, holding the sample size $n$ fixed and varying the parameter $\alpha$. For the largest value of $\alpha$ shown the density is very close to the $\mathrm{N}(0, 1)$ density. As $\alpha$ diminishes the density changes significantly, concentrating most of the probability mass around zero.

Another example is shown in Figure 7.2(b). Here the model is $Y = \beta + e$ where

$$e = \frac{u^k - \mathbb{E}[u^k]}{\left(\mathbb{E}[u^{2k}] - \left(\mathbb{E}[u^k]\right)^2\right)^{1/2}} \tag{7.12}$$

and $u \sim \mathrm{N}(0, 1)$. We show the sampling distribution of $\sqrt{n}(\hat\beta - \beta)$ for a fixed $n$, varying $k$ up to $k = 8$. As $k$ increases, the sampling distribution becomes highly skewed and non-normal. The lesson from Figure 7.2 is that the asymptotic approximation is never guaranteed to be accurate.

Figure 7.2: Density of Normalized OLS Estimator ((a) Double Pareto Error; (b) Error Process (7.12))
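The Double Pareto experiment can be sketched as follows. If $|e|$ is classical Pareto with scale 1, then $\mathbb{P}[|e| > t] = t^{-\alpha}$ for $t \ge 1$, and attaching a random sign gives the density $f(e) = \frac{\alpha}{2}|e|^{-\alpha-1}$. NumPy's `Generator.pareto` draws the Lomax (Pareto II) form, so 1 is added. The sample size $n = 100$, the replication count, the values of $\alpha$, and setting both coefficients to zero are assumptions of this sketch, not necessarily the settings behind Figure 7.2(a).

```python
import numpy as np

def double_pareto(alpha, size, rng):
    """Draws with density (alpha/2)|e|^(-alpha-1) on |e| >= 1."""
    # numpy's pareto() samples the Lomax form; adding 1 gives a classical
    # Pareto with scale 1, so P(|e| > t) = t^(-alpha) for t >= 1.
    magnitude = rng.pareto(alpha, size) + 1.0
    sign = rng.choice([-1.0, 1.0], size=size)
    return sign * magnitude

def normalized_slopes(alpha, n=100, reps=2000, seed=0):
    """Simulated sqrt(n (alpha-2)/alpha) * (beta1_hat - beta1) with beta1 = beta2 = 0."""
    rng = np.random.default_rng(seed)
    scale = np.sqrt(n * (alpha - 2.0) / alpha)   # var(e) = alpha / (alpha - 2)
    out = np.empty(reps)
    for r in range(reps):
        x = rng.normal(size=n)
        X = np.column_stack([x, np.ones(n)])
        Y = double_pareto(alpha, n, rng)          # Y = e since both coefficients are 0
        out[r] = scale * np.linalg.lstsq(X, Y, rcond=None)[0][0]
    return out

for alpha in (3.0, 2.5, 2.1):
    t = normalized_slopes(alpha)
    # Under N(0, 1) about 38% of the mass lies in [-0.5, 0.5]
    print(alpha, np.mean(np.abs(t) < 0.5))
```

As $\alpha$ moves toward 2 the share of draws near zero rises well above the normal benchmark, which is the concentration of mass described in the text.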

Joint Distribution

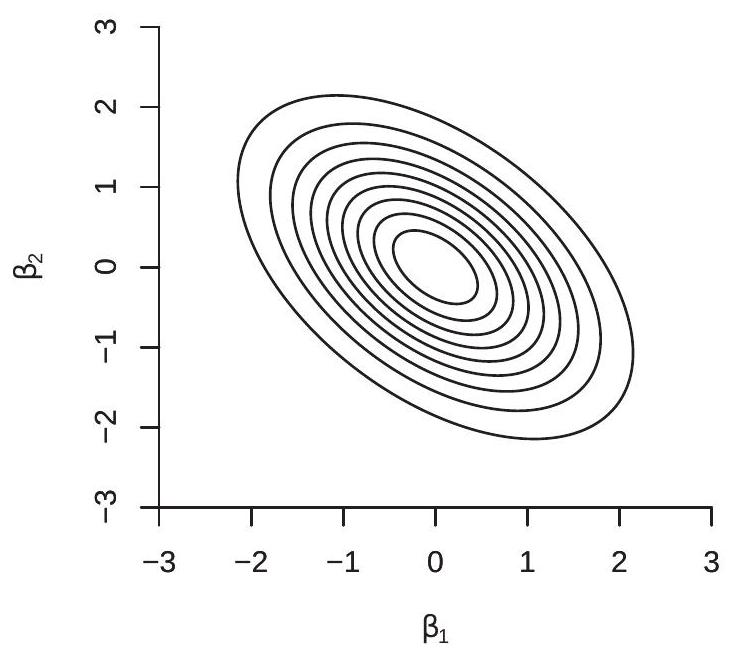

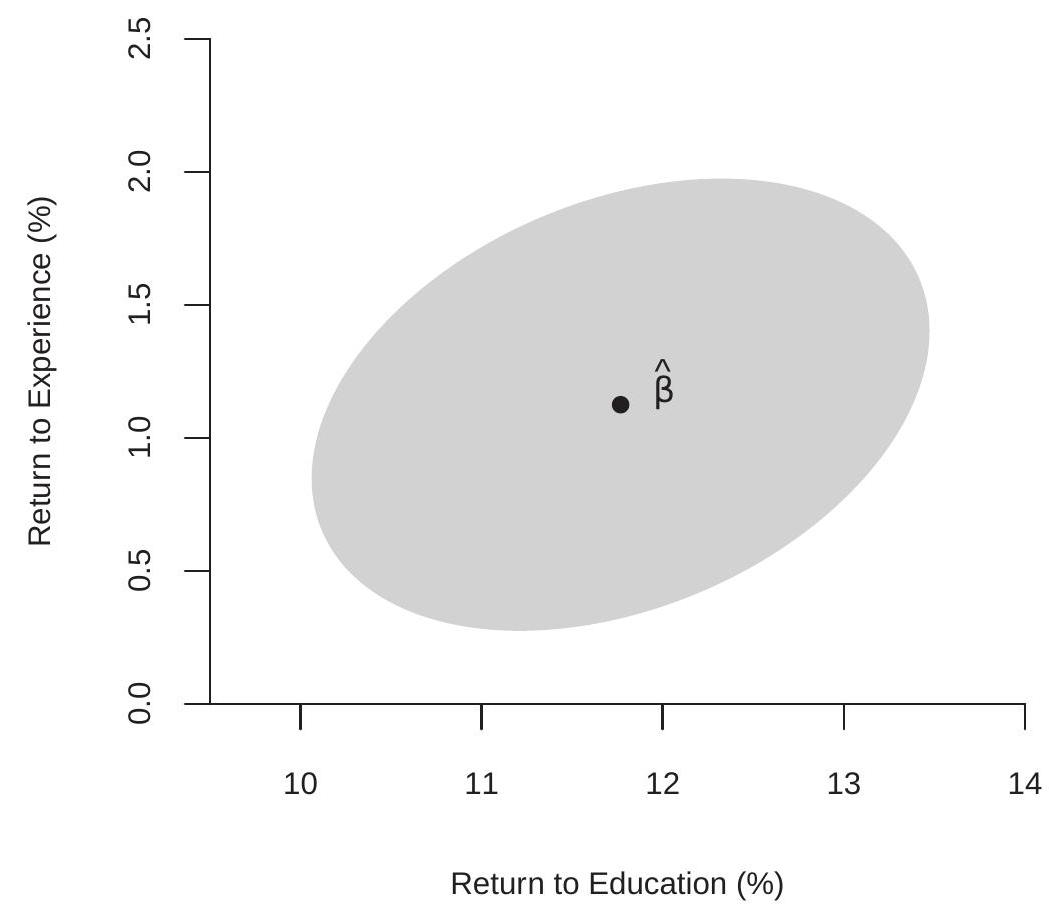

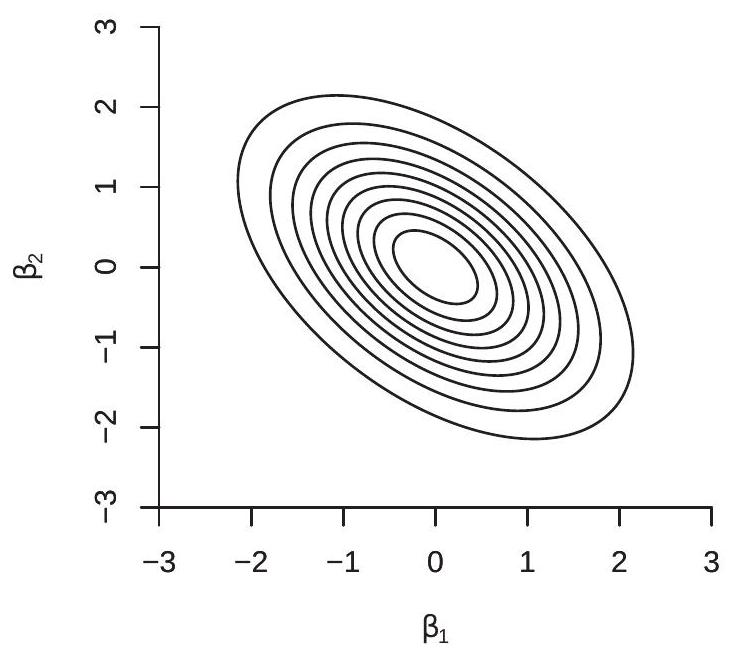

Theorem 7.3 gives the joint asymptotic distribution of the coefficient estimators. We can use the result to study the covariance between the coefficient estimators. For simplicity, take the case of two regressors, no intercept, and homoskedastic error. Assume the regressors are mean zero, variance one, with correlation $\rho$. Then using the formula for inversion of a $2 \times 2$ matrix,

$$V_\beta^0 = \sigma^2Q_{XX}^{-1} = \frac{\sigma^2}{1 - \rho^2}\begin{bmatrix} 1 & -\rho \\ -\rho & 1 \end{bmatrix}.$$

Thus if $X_1$ and $X_2$ are positively correlated ($\rho > 0$) then $\hat\beta_1$ and $\hat\beta_2$ are negatively correlated (and vice versa).
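As a quick numerical check of the $2 \times 2$ inversion, the sketch below inverts $Q_{XX}$ directly and compares it with the closed form; the function names are hypothetical. The implied correlation of the two coefficient estimators is $-\rho$.

```python
import numpy as np

def homoskedastic_avar(rho, sigma2=1.0):
    """sigma^2 * Q_XX^{-1} for two mean-zero, unit-variance regressors with correlation rho."""
    Q = np.array([[1.0, rho], [rho, 1.0]])
    return sigma2 * np.linalg.inv(Q)

def closed_form(rho, sigma2=1.0):
    """The 2 x 2 inversion formula sigma^2 / (1 - rho^2) * [[1, -rho], [-rho, 1]]."""
    return sigma2 / (1.0 - rho**2) * np.array([[1.0, -rho], [-rho, 1.0]])

V = homoskedastic_avar(0.5)
corr = V[0, 1] / np.sqrt(V[0, 0] * V[1, 1])
print(V)
print(corr)   # equals -rho, i.e. about -0.5
```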

For illustration, Figure 7.3(a) displays the probability contours of the joint asymptotic distribution of $\hat\beta_1 - \beta_1$ and $\hat\beta_2 - \beta_2$ when the regressors have correlation $\rho = 1/2$. The coefficient estimators are negatively correlated because the regressors are positively correlated. This means that if $\hat\beta_1$ is unusually negative, it is likely that $\hat\beta_2$ is unusually positive, or conversely. It is also unlikely that we will observe both $\hat\beta_1$ and $\hat\beta_2$ unusually large and of the same sign.

This finding, that the correlation of the coefficient estimates has the opposite sign to the correlation of the regressors, is sensitive to the assumption of homoskedasticity. If the errors are heteroskedastic then this relationship is not guaranteed.

This can be seen through a simple constructed example. Suppose that $X_1$ and $X_2$ only take the values $\{-1, +1\}$, symmetrically, with $\mathbb{P}[X_1 = X_2 = 1] = \mathbb{P}[X_1 = X_2 = -1] = 3/8$, and $\mathbb{P}[X_1 = 1, X_2 = -1] = \mathbb{P}[X_1 = -1, X_2 = 1] = 1/8$. You can check that the regressors are mean zero, unit variance and correlation $1/2$, which is identical with the setting displayed in Figure 7.3(a).

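Now suppose that the error is heteroskedastic. Specifically, suppose that $\mathbb{E}[e^2 \mid X_1 = X_2] = 5/4$ and $\mathbb{E}[e^2 \mid X_1 \ne X_2] = 1/4$. You can check that $\mathbb{E}[e^2] = 1$, $\mathbb{E}[X_1^2e^2] = \mathbb{E}[X_2^2e^2] = 1$, and $\mathbb{E}[X_1X_2e^2] = 7/8$. Therefore

$$V_\beta = Q_{XX}^{-1}\Omega Q_{XX}^{-1} = \frac{16}{9}\begin{bmatrix} 1 & -\frac{1}{2} \\ -\frac{1}{2} & 1 \end{bmatrix}\begin{bmatrix} 1 & \frac{7}{8} \\ \frac{7}{8} & 1 \end{bmatrix}\begin{bmatrix} 1 & -\frac{1}{2} \\ -\frac{1}{2} & 1 \end{bmatrix} = \frac{2}{3}\begin{bmatrix} 1 & \frac{1}{4} \\ \frac{1}{4} & 1 \end{bmatrix}.$$

Thus the coefficient estimators $\hat\beta_1$ and $\hat\beta_2$ are positively correlated (their correlation is $1/4$). The joint probability contours of their asymptotic distribution are displayed in Figure 7.3(b). We can see how the two estimators are positively associated.

Figure 7.3: Contours of Joint Distribution of $\hat\beta_1$ and $\hat\beta_2$ ((a) Homoskedastic Case; (b) Heteroskedastic Case)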

What we found through this example is that in the presence of heteroskedasticity there is no simple relationship between the correlation of the regressors and the correlation of the parameter estimators.
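The constructed example can be verified by computing $Q_{XX}$, $\Omega$ and the sandwich $V_\beta$ directly from the four support points. The sketch below (Python with NumPy) does only that arithmetic, and also computes $\sigma^2Q_{XX}^{-1}$ for contrast; the helper name `corr` is hypothetical.

```python
import numpy as np

# Support points (x1, x2), their probabilities, and E[e^2 | x1, x2] from the example
points = np.array([[1, 1], [-1, -1], [1, -1], [-1, 1]], dtype=float)
probs = np.array([3, 3, 1, 1]) / 8
cond_e2 = np.array([5/4, 5/4, 1/4, 1/4])   # 5/4 if x1 == x2, 1/4 otherwise

Q = sum(p * np.outer(x, x) for x, p in zip(points, probs))
Omega = sum(p * s * np.outer(x, x) for x, p, s in zip(points, probs, cond_e2))
Qinv = np.linalg.inv(Q)
V_beta = Qinv @ Omega @ Qinv          # sandwich form
V_homo = Qinv * (probs @ cond_e2)     # sigma^2 Q^{-1} with sigma^2 = E[e^2] = 1

def corr(V):
    return V[0, 1] / np.sqrt(V[0, 0] * V[1, 1])

print(V_beta)                          # approximately [[2/3, 1/6], [1/6, 2/3]]
print(corr(V_beta), corr(V_homo))      # approximately 0.25 and -0.5
```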

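We can extend the above analysis to study the covariance between coefficient sub-vectors. For example, partitioning $X' = (X_1', X_2')$ and $\beta' = (\beta_1', \beta_2')$, we can write the general model as

$$Y = X_1'\beta_1 + X_2'\beta_2 + e$$

and the coefficient estimates as $\hat\beta' = (\hat\beta_1', \hat\beta_2')$. Make the partitions

$$Q_{XX} = \begin{bmatrix} Q_{11} & Q_{12} \\ Q_{21} & Q_{22} \end{bmatrix}, \qquad \Omega = \begin{bmatrix} \Omega_{11} & \Omega_{12} \\ \Omega_{21} & \Omega_{22} \end{bmatrix}.$$

From (2.43)

$$Q_{XX}^{-1} = \begin{bmatrix} Q_{11\cdot 2}^{-1} & -Q_{11\cdot 2}^{-1}Q_{12}Q_{22}^{-1} \\ -Q_{22\cdot 1}^{-1}Q_{21}Q_{11}^{-1} & Q_{22\cdot 1}^{-1} \end{bmatrix}$$

where $Q_{11\cdot 2} = Q_{11} - Q_{12}Q_{22}^{-1}Q_{21}$ and $Q_{22\cdot 1} = Q_{22} - Q_{21}Q_{11}^{-1}Q_{12}$. Thus when the error is homoskedastic

$$\operatorname{cov}\left(\hat\beta_1, \hat\beta_2\right) = -\sigma^2Q_{11\cdot 2}^{-1}Q_{12}Q_{22}^{-1}$$

which is a matrix generalization of the two-regressor case.

In general you can show that (Exercise 7.5)

$$V_\beta = \begin{bmatrix} V_{11} & V_{12} \\ V_{21} & V_{22} \end{bmatrix}$$

where

$$V_{11} = Q_{11\cdot 2}^{-1}\left(\Omega_{11} - Q_{12}Q_{22}^{-1}\Omega_{21} - \Omega_{12}Q_{22}^{-1}Q_{21} + Q_{12}Q_{22}^{-1}\Omega_{22}Q_{22}^{-1}Q_{21}\right)Q_{11\cdot 2}^{-1} \tag{7.14}$$

$$V_{21} = Q_{22\cdot 1}^{-1}\left(\Omega_{21} - Q_{21}Q_{11}^{-1}\Omega_{11} - \Omega_{22}Q_{22}^{-1}Q_{21} + Q_{21}Q_{11}^{-1}\Omega_{12}Q_{22}^{-1}Q_{21}\right)Q_{11\cdot 2}^{-1}$$

$$V_{22} = Q_{22\cdot 1}^{-1}\left(\Omega_{22} - Q_{21}Q_{11}^{-1}\Omega_{12} - \Omega_{21}Q_{11}^{-1}Q_{12} + Q_{21}Q_{11}^{-1}\Omega_{11}Q_{11}^{-1}Q_{12}\right)Q_{22\cdot 1}^{-1}$$

Unfortunately, these expressions are not easily interpretable.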

Consistency of Error Variance Estimators

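Using the methods of Section 7.2 we can show that the estimators $\hat\sigma^2$ and $s^2$ are consistent for $\sigma^2$.

The trick is to write the residual $\hat e_i$ as equal to the error $e_i$ plus a deviation:

$$\hat e_i = Y_i - X_i'\hat\beta = e_i - X_i'\left(\hat\beta - \beta\right).$$

Thus the squared residual equals the squared error plus a deviation:

$$\hat e_i^2 = e_i^2 - 2e_iX_i'\left(\hat\beta - \beta\right) + \left(\hat\beta - \beta\right)'X_iX_i'\left(\hat\beta - \beta\right). \tag{7.17}$$

So when we take the average of the squared residuals we obtain the average of the squared errors, plus two terms which are (hopefully) asymptotically negligible. This average is:

$$\hat\sigma^2 = \frac{1}{n}\sum_{i=1}^n e_i^2 - 2\left(\frac{1}{n}\sum_{i=1}^n e_iX_i'\right)\left(\hat\beta - \beta\right) + \left(\hat\beta - \beta\right)'\left(\frac{1}{n}\sum_{i=1}^n X_iX_i'\right)\left(\hat\beta - \beta\right). \tag{7.18}$$

The WLLN implies that

$$\frac{1}{n}\sum_{i=1}^n e_i^2 \xrightarrow{p} \sigma^2, \qquad \frac{1}{n}\sum_{i=1}^n e_iX_i' \xrightarrow{p} \mathbb{E}[eX'] = 0, \qquad \frac{1}{n}\sum_{i=1}^n X_iX_i' \xrightarrow{p} \mathbb{E}[XX'] = Q_{XX}.$$

Theorem 7.1 shows that $\hat\beta \xrightarrow{p} \beta$. Hence (7.18) converges in probability to $\sigma^2$ as desired.

Finally, since $n/(n - k) \to 1$ as $n \to \infty$ it follows that $s^2 = \left(\frac{n}{n-k}\right)\hat\sigma^2 \xrightarrow{p} \sigma^2$. Thus both estimators are consistent.

Theorem 7.4 Under Assumption 7.1, $\hat\sigma^2 \xrightarrow{p} \sigma^2$ and $s^2 \xrightarrow{p} \sigma^2$ as $n \to \infty$.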

Homoskedastic Covariance Matrix Estimation

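Theorem 7.3 shows that $\sqrt{n}(\hat\beta - \beta)$ is asymptotically normal with asymptotic covariance matrix $V_\beta$. For asymptotic inference (confidence intervals and tests) we need a consistent estimator of $V_\beta$. Under homoskedasticity $V_\beta$ simplifies to $V_\beta^0 = Q_{XX}^{-1}\sigma^2$ and in this section we consider the simplified problem of estimating $V_\beta^0$.

The standard moment estimator of $Q_{XX}$ is $\widehat{Q}_{XX}$ defined in (7.1) and thus an estimator for $Q_{XX}^{-1}$ is $\widehat{Q}_{XX}^{-1}$. The standard estimator of $\sigma^2$ is the unbiased estimator $s^2$ defined in (4.31). Thus a natural plug-in estimator for $V_\beta^0 = Q_{XX}^{-1}\sigma^2$ is $\widehat{V}_\beta^0 = \widehat{Q}_{XX}^{-1}s^2$.

Consistency of $\widehat{V}_\beta^0$ for $V_\beta^0$ follows from consistency of the moment estimators $\widehat{Q}_{XX}$ and $s^2$ and an application of the continuous mapping theorem. Specifically, Theorem 7.1 established $\widehat{Q}_{XX} \xrightarrow{p} Q_{XX}$, and Theorem 7.4 established $s^2 \xrightarrow{p} \sigma^2$. The function $V_\beta^0 = Q_{XX}^{-1}\sigma^2$ is a continuous function of $Q_{XX}$ and $\sigma^2$ so long as $Q_{XX} > 0$, which holds true under Assumption 7.1.4. It follows by the CMT that

$$\widehat{V}_\beta^0 = \widehat{Q}_{XX}^{-1}s^2 \xrightarrow{p} Q_{XX}^{-1}\sigma^2 = V_\beta^0$$

so that $\widehat{V}_\beta^0$ is consistent for $V_\beta^0$.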

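Theorem 7.5 Under Assumption 7.1, $\widehat{V}_\beta^0 \xrightarrow{p} V_\beta^0$ as $n \to \infty$.

It is instructive to notice that Theorem 7.5 does not require the assumption of homoskedasticity. That is, $\widehat{V}_\beta^0$ is consistent for $V_\beta^0$ regardless of whether the regression is homoskedastic or heteroskedastic. However, $V_\beta^0 = V_\beta$ only under homoskedasticity (more generally, under (7.10)). Thus, in the general case $\widehat{V}_\beta^0$ is consistent for a well-defined but non-useful object.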
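A minimal sketch of $\widehat{V}_\beta^0 = \widehat{Q}_{XX}^{-1}s^2$, returned together with the finite-sample version $(\boldsymbol{X}'\boldsymbol{X})^{-1}s^2$. The function name and the simulated homoskedastic design (error standard deviation 2 and $Q_{XX} = I$, so $V_\beta^0 = 4I$) are hypothetical.

```python
import numpy as np

def homoskedastic_vcov(X, Y):
    """Return (Qxx_hat^{-1} s^2, (X'X)^{-1} s^2)."""
    n, k = X.shape
    beta_hat = np.linalg.lstsq(X, Y, rcond=None)[0]
    resid = Y - X @ beta_hat
    s2 = resid @ resid / (n - k)                  # unbiased estimator s^2
    V0_beta = np.linalg.inv(X.T @ X / n) * s2     # estimates V_beta^0 (asymptotic scale)
    V0_betahat = np.linalg.inv(X.T @ X) * s2      # estimates var(beta_hat | X)
    return V0_beta, V0_betahat

rng = np.random.default_rng(6)
n = 20_000
x = rng.normal(size=n)
X = np.column_stack([x, np.ones(n)])              # Q_XX = I in this design
Y = X @ np.array([1.0, 0.0]) + rng.normal(scale=2.0, size=n)
V0_beta, V0_betahat = homoskedastic_vcov(X, Y)
print(np.round(V0_beta, 2))                       # should be close to 4 * I
```

The two returned matrices differ exactly by the factor $n$, mirroring the $V_\beta$ versus $V_{\hat\beta}$ convention.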

Heteroskedastic Covariance Matrix Estimation

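Theorem 7.3 established that the asymptotic covariance matrix of $\sqrt{n}(\hat\beta - \beta)$ is $V_\beta = Q_{XX}^{-1}\Omega Q_{XX}^{-1}$. We now consider estimation of this covariance matrix without imposing homoskedasticity. The standard approach is to use a plug-in estimator which replaces the unknowns with sample moments.

As described in the previous section a natural estimator for $Q_{XX}^{-1}$ is $\widehat{Q}_{XX}^{-1}$ where $\widehat{Q}_{XX}$ is defined in (7.1). The moment estimator for $\Omega$ is

$$\widehat{\Omega} = \frac{1}{n}\sum_{i=1}^n X_iX_i'\hat e_i^2$$

leading to the plug-in covariance matrix estimator

$$\widehat{V}_\beta^{\mathrm{HC0}} = \widehat{Q}_{XX}^{-1}\widehat{\Omega}\widehat{Q}_{XX}^{-1}. \tag{7.19}$$

You can check that $\widehat{V}_\beta^{\mathrm{HC0}} = n\widehat{V}_{\hat\beta}^{\mathrm{HC0}}$ where $\widehat{V}_{\hat\beta}^{\mathrm{HC0}}$ is the HC0 covariance matrix estimator from (4.36).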

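As shown in Theorem 7.1, $\widehat{Q}_{XX}^{-1} \xrightarrow{p} Q_{XX}^{-1}$, so we just need to verify the consistency of $\widehat{\Omega}$. The key is to replace the squared residual $\hat e_i^2$ with the squared error $e_i^2$, and then show that the difference is asymptotically negligible.

Specifically, observe that

$$\widehat{\Omega} = \frac{1}{n}\sum_{i=1}^n X_iX_i'\hat e_i^2 = \frac{1}{n}\sum_{i=1}^n X_iX_i'e_i^2 + \frac{1}{n}\sum_{i=1}^n X_iX_i'\left(\hat e_i^2 - e_i^2\right).$$

The first term is an average of the i.i.d. random variables $X_iX_i'e_i^2$, and therefore by the WLLN converges in probability to its expectation, namely,

$$\frac{1}{n}\sum_{i=1}^n X_iX_i'e_i^2 \xrightarrow{p} \mathbb{E}\left[XX'e^2\right] = \Omega.$$

Technically, this requires that $\Omega$ has finite elements, which was shown following (7.6).

To establish that $\widehat{\Omega}$ is consistent for $\Omega$ it remains to show that

$$\frac{1}{n}\sum_{i=1}^n X_iX_i'\left(\hat e_i^2 - e_i^2\right) \xrightarrow{p} 0. \tag{7.20}$$

There are multiple ways to do this. A reasonably straightforward yet slightly tedious derivation is to start by applying the triangle inequality (B.16) using a matrix norm:

$$\left\|\frac{1}{n}\sum_{i=1}^n X_iX_i'\left(\hat e_i^2 - e_i^2\right)\right\| \le \frac{1}{n}\sum_{i=1}^n \left\|X_iX_i'\left(\hat e_i^2 - e_i^2\right)\right\| = \frac{1}{n}\sum_{i=1}^n \|X_i\|^2\left|\hat e_i^2 - e_i^2\right|. \tag{7.21}$$

Then recalling the expression for the squared residual (7.17), apply the triangle inequality (B.1) and then the Schwarz inequality (B.12) twice

$$\left|\hat e_i^2 - e_i^2\right| \le 2|e_i|\left|X_i'\left(\hat\beta - \beta\right)\right| + \left|\left(\hat\beta - \beta\right)'X_i\right|^2 \le 2|e_i|\,\|X_i\|\,\left\|\hat\beta - \beta\right\| + \|X_i\|^2\left\|\hat\beta - \beta\right\|^2. \tag{7.22}$$

Combining (7.21) and (7.22), we find

$$\left\|\frac{1}{n}\sum_{i=1}^n X_iX_i'\left(\hat e_i^2 - e_i^2\right)\right\| \le 2\left(\frac{1}{n}\sum_{i=1}^n \|X_i\|^3|e_i|\right)\left\|\hat\beta - \beta\right\| + \left(\frac{1}{n}\sum_{i=1}^n \|X_i\|^4\right)\left\|\hat\beta - \beta\right\|^2 = o_p(1).$$

The expression is $o_p(1)$ because $\|\hat\beta - \beta\| \xrightarrow{p} 0$ and both averages in parentheses are averages of random variables with finite expectation under Assumption 7.2 (and are thus $O_p(1)$). Indeed, by Hölder's inequality (B.31)

$$\mathbb{E}\left[\|X\|^3|e|\right] \le \left(\mathbb{E}\left[\left(\|X\|^3\right)^{4/3}\right]\right)^{3/4}\left(\mathbb{E}\left[e^4\right]\right)^{1/4} = \left(\mathbb{E}\|X\|^4\right)^{3/4}\left(\mathbb{E}\left[e^4\right]\right)^{1/4} < \infty.$$

We have established (7.20) as desired.

Theorem 7.6 Under Assumption 7.2, as $n \to \infty$, $\widehat{\Omega} \xrightarrow{p} \Omega$ and $\widehat{V}_\beta^{\mathrm{HC0}} \xrightarrow{p} V_\beta$.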

For an alternative proof of this result, see Section 7.20.
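The HC0 plug-in estimator (7.19) and its relation to the finite-sample HC0 estimator can be checked numerically. This sketch uses a hypothetical heteroskedastic design with $x \sim \mathrm{N}(0,1)$, $X = (x, 1)'$ and $\mathbb{E}[e^2 \mid x] = 1 + x^2$, for which $Q_{XX} = I$ and $\Omega = V_\beta = \operatorname{diag}(4, 2)$; the function name `hc0_vcov` is also hypothetical.

```python
import numpy as np

def hc0_vcov(X, Y):
    """Return (V_beta^HC0 on the asymptotic scale (7.19), finite-sample HC0)."""
    n = X.shape[0]
    beta_hat = np.linalg.lstsq(X, Y, rcond=None)[0]
    e2 = (Y - X @ beta_hat) ** 2
    meat = (X * e2[:, None]).T @ X                 # sum_i X_i X_i' e_hat_i^2
    Qxx_inv = np.linalg.inv(X.T @ X / n)
    V_beta = Qxx_inv @ (meat / n) @ Qxx_inv        # Qxx^{-1} Omega_hat Qxx^{-1}
    XtX_inv = np.linalg.inv(X.T @ X)
    V_betahat = XtX_inv @ meat @ XtX_inv           # (X'X)^{-1} (sum X X' e^2) (X'X)^{-1}
    return V_beta, V_betahat

rng = np.random.default_rng(7)
n = 50_000
x = rng.normal(size=n)
X = np.column_stack([x, np.ones(n)])
e = rng.normal(size=n) * np.sqrt(1.0 + x**2)      # heteroskedastic error
Y = X @ np.array([0.5, 1.0]) + e
V_beta, V_betahat = hc0_vcov(X, Y)
print(np.round(V_beta, 2))                         # should be close to diag(4, 2)
```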

Summary of Covariance Matrix Notation

The notation we have introduced may be somewhat confusing so it is helpful to write it down in one place.

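The exact variance of $\hat\beta$ (under the assumptions of the linear regression model) and the asymptotic variance of $\hat\beta$ (under the more general assumptions of the linear projection model) are

$$V_{\hat\beta} = \operatorname{var}\left[\hat\beta \mid \boldsymbol{X}\right] = \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}\left(\boldsymbol{X}'\boldsymbol{D}\boldsymbol{X}\right)\left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}$$

$$V_\beta = Q_{XX}^{-1}\Omega Q_{XX}^{-1}.$$

The HC0 estimators of these two covariance matrices are

$$\widehat{V}_{\hat\beta}^{\mathrm{HC0}} = \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}\left(\sum_{i=1}^n X_iX_i'\hat e_i^2\right)\left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}$$

$$\widehat{V}_\beta^{\mathrm{HC0}} = \widehat{Q}_{XX}^{-1}\widehat{\Omega}\widehat{Q}_{XX}^{-1}$$

and satisfy the simple relationship $\widehat{V}_\beta^{\mathrm{HC0}} = n\widehat{V}_{\hat\beta}^{\mathrm{HC0}}$.

Similarly, under the assumption of homoskedasticity the exact and asymptotic variances simplify to

$$V_{\hat\beta}^0 = \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}\sigma^2$$

$$V_\beta^0 = Q_{XX}^{-1}\sigma^2.$$

Their standard estimators are

$$\widehat{V}_{\hat\beta}^0 = \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}s^2$$

$$\widehat{V}_\beta^0 = \widehat{Q}_{XX}^{-1}s^2$$

which also satisfy the relationship $\widehat{V}_\beta^0 = n\widehat{V}_{\hat\beta}^0$.

The exact formulas and estimators are useful when constructing test statistics and standard errors. However, for theoretical purposes the asymptotic formulas (variances and their estimates) are more useful as these retain non-degenerate limits as the sample size diverges. That is why both sets of notation are useful.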

Alternative Covariance Matrix Estimators*

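In Section 7.7 we introduced $\widehat{V}_\beta^{\mathrm{HC0}}$ as an estimator of $V_\beta$. $\widehat{V}_\beta^{\mathrm{HC0}}$ is a scaled version of $\widehat{V}_{\hat\beta}^{\mathrm{HC0}}$ from Section 4.14, where we also introduced the alternative HC1, HC2, and HC3 heteroskedasticity-robust covariance matrix estimators. We now discuss the consistency properties of these estimators.

To do so we introduce their scaled versions, e.g. $\widehat{V}_\beta^{\mathrm{HC1}} = n\widehat{V}_{\hat\beta}^{\mathrm{HC1}}$, $\widehat{V}_\beta^{\mathrm{HC2}} = n\widehat{V}_{\hat\beta}^{\mathrm{HC2}}$, and $\widehat{V}_\beta^{\mathrm{HC3}} = n\widehat{V}_{\hat\beta}^{\mathrm{HC3}}$. These are (alternative) estimators of the asymptotic covariance matrix $V_\beta$. First, consider $\widehat{V}_\beta^{\mathrm{HC1}}$. Notice that $\widehat{V}_\beta^{\mathrm{HC1}} = n\widehat{V}_{\hat\beta}^{\mathrm{HC1}} = \frac{n}{n-k}\widehat{V}_\beta^{\mathrm{HC0}}$ where $\widehat{V}_\beta^{\mathrm{HC0}}$ was defined in (7.19) and shown consistent for $V_\beta$ in Theorem 7.6. If $k$ is fixed as $n \to \infty$, then $\frac{n}{n-k} \to 1$ and thus

$$\widehat{V}_\beta^{\mathrm{HC1}} = (1 + o(1))\,\widehat{V}_\beta^{\mathrm{HC0}} \xrightarrow{p} V_\beta.$$

Thus $\widehat{V}_\beta^{\mathrm{HC1}}$ is consistent for $V_\beta$.

The alternative estimators $\widehat{V}_\beta^{\mathrm{HC2}}$ and $\widehat{V}_\beta^{\mathrm{HC3}}$ take the form (7.19) but with $\widehat{\Omega}$ replaced by

$$\overline{\Omega} = \frac{1}{n}\sum_{i=1}^n \left(1 - h_{ii}\right)^{-1}X_iX_i'\hat e_i^2$$

and

$$\widetilde{\Omega} = \frac{1}{n}\sum_{i=1}^n \left(1 - h_{ii}\right)^{-2}X_iX_i'\hat e_i^2,$$

respectively, where $h_{ii} = X_i'(\boldsymbol{X}'\boldsymbol{X})^{-1}X_i$ are the leverage values. To show that these estimators are also consistent for $V_\beta$ given $\widehat{\Omega} \xrightarrow{p} \Omega$ it is sufficient to show that the differences $\overline{\Omega} - \widehat{\Omega}$ and $\widetilde{\Omega} - \widehat{\Omega}$ converge in probability to zero as $n \to \infty$.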

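The trick is the fact that the leverage values are asymptotically negligible:

$$h_n^* = \max_{1 \le i \le n} h_{ii} = o_p(1). \tag{7.24}$$

(See Section 7.21.) Then using the triangle inequality (B.16)

$$\left\|\overline{\Omega} - \widehat{\Omega}\right\| \le \frac{1}{n}\sum_{i=1}^n \left\|X_iX_i'\right\|\hat e_i^2\left|\left(1 - h_{ii}\right)^{-1} - 1\right| \le \left(\frac{1}{n}\sum_{i=1}^n \|X_i\|^2\hat e_i^2\right)\left|\left(1 - h_n^*\right)^{-1} - 1\right|.$$

The sum in parentheses can be shown to be $O_p(1)$ under Assumption 7.2 by the same argument as in the proof of Theorem 7.6. (In fact, it can be shown to converge in probability to $\mathbb{E}[\|X\|^2e^2]$.) The term in absolute values is $o_p(1)$ by (7.24). Thus the product is $o_p(1)$, which means that $\overline{\Omega} = \widehat{\Omega} + o_p(1) \xrightarrow{p} \Omega$.

Similarly,

$$\left\|\widetilde{\Omega} - \widehat{\Omega}\right\| \le \frac{1}{n}\sum_{i=1}^n \left\|X_iX_i'\right\|\hat e_i^2\left|\left(1 - h_{ii}\right)^{-2} - 1\right| \le \left(\frac{1}{n}\sum_{i=1}^n \|X_i\|^2\hat e_i^2\right)\left|\left(1 - h_n^*\right)^{-2} - 1\right| = o_p(1).$$

Theorem 7.7 Under Assumption 7.2, as $n \to \infty$, $\widetilde{\Omega} \xrightarrow{p} \Omega$, $\overline{\Omega} \xrightarrow{p} \Omega$, $\widehat{V}_\beta^{\mathrm{HC1}} \xrightarrow{p} V_\beta$, $\widehat{V}_\beta^{\mathrm{HC2}} \xrightarrow{p} V_\beta$, and $\widehat{V}_\beta^{\mathrm{HC3}} \xrightarrow{p} V_\beta$.

Theorem 7.7 shows that the alternative covariance matrix estimators are also consistent for the asymptotic covariance matrix.

To simplify notation, for the remainder of the chapter we will use the notation $\widehat{V}_\beta$ and $\widehat{V}_{\hat\beta}$ to refer to any of the heteroskedasticity-consistent covariance matrix estimators HC0, HC1, HC2, and HC3, as they all have the same asymptotic limits.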
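A compact sketch computing all four heteroskedasticity-consistent estimators on the asymptotic scale from the leverage values $h_{ii}$. The function name `robust_vcovs`, its dictionary output, and the simulated design are hypothetical. HC2 and HC3 reweight each squared residual by $(1 - h_{ii})^{-1}$ and $(1 - h_{ii})^{-2}$; with a large sample the maximal leverage is tiny, so the four estimates nearly coincide, in line with (7.24).

```python
import numpy as np

def robust_vcovs(X, Y):
    """Asymptotic-scale HC0-HC3 estimators, each equal to n times its finite-sample version."""
    n, k = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    beta_hat = XtX_inv @ X.T @ Y
    e_hat = Y - X @ beta_hat
    h = np.einsum("ij,jk,ik->i", X, XtX_inv, X)   # leverage h_ii = X_i'(X'X)^{-1}X_i
    Qxx_inv = n * XtX_inv                          # (X'X / n)^{-1}

    def sandwich(weights):
        # Qxx^{-1} [ (1/n) sum_i w_i X_i X_i' e_hat_i^2 ] Qxx^{-1}
        Omega = (X * (weights * e_hat**2)[:, None]).T @ X / n
        return Qxx_inv @ Omega @ Qxx_inv

    V0 = sandwich(np.ones(n))
    return {
        "HC0": V0,
        "HC1": V0 * n / (n - k),
        "HC2": sandwich(1.0 / (1.0 - h)),        # Omega-bar weights
        "HC3": sandwich(1.0 / (1.0 - h) ** 2),   # Omega-tilde weights
        "max_leverage": h.max(),
    }

rng = np.random.default_rng(8)
x = rng.normal(size=20_000)
X = np.column_stack([x, np.ones(x.size)])
Y = X @ np.array([0.5, 1.0]) + rng.normal(size=x.size) * np.sqrt(1.0 + x**2)
out = robust_vcovs(X, Y)
print(out["max_leverage"])
for name in ("HC0", "HC1", "HC2", "HC3"):
    print(name, np.round(np.diag(out[name]), 4))
```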

Functions of Parameters

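In most serious applications a researcher is actually interested in a specific transformation of the coefficient vector $\beta = (\beta_1, \dots, \beta_k)$. For example, the researcher may be interested in a single coefficient $\beta_j$ or a ratio $\beta_j/\beta_l$. More generally, interest may focus on a quantity such as consumer surplus which could be a complicated function of the coefficients. In any of these cases we can write the parameter of interest $\theta$ as a function of the coefficients, e.g. $\theta = r(\beta)$ for some function $r: \mathbb{R}^k \to \mathbb{R}^q$. The estimate of $\theta$ is

$$\hat\theta = r(\hat\beta).$$

By the continuous mapping theorem (Theorem 6.6) and the fact $\hat\beta \xrightarrow{p} \beta$ we can deduce that $\hat\theta$ is consistent for $\theta$ if the function $r(\cdot)$ is continuous.

Theorem 7.8 Under Assumption 7.1, if $r(\beta)$ is continuous at the true value of $\beta$ then as $n \to \infty$, $\hat\theta \xrightarrow{p} \theta$.

Furthermore, if the transformation is sufficiently smooth, by the Delta Method (Theorem 6.8) we can show that $\hat\theta$ is asymptotically normal.

Assumption 7.3 $r(\beta): \mathbb{R}^k \to \mathbb{R}^q$ is continuously differentiable at the true value of $\beta$ and $R = \frac{\partial}{\partial\beta}r(\beta)'$ has rank $q$.

Theorem 7.9 Asymptotic Distribution of Functions of Parameters. Under Assumptions 7.2 and 7.3, as $n \to \infty$,

$$\sqrt{n}\left(\hat\theta - \theta\right) \xrightarrow{d} \mathrm{N}(0, V_\theta) \tag{7.25}$$

where $V_\theta = R'V_\beta R$.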

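In many cases the function $r(\beta)$ is linear:

$$r(\beta) = R'\beta$$

for some $k \times q$ matrix $R$. In particular if $R$ is a "selector matrix"

$$R = \begin{pmatrix} I \\ 0 \end{pmatrix}$$

then we can partition $\beta = (\beta_1', \beta_2')'$ so that $R'\beta = \beta_1$. Then

$$V_\theta = \begin{pmatrix} I & 0 \end{pmatrix} V_\beta \begin{pmatrix} I \\ 0 \end{pmatrix} = V_{11},$$

the upper-left sub-matrix of $V_\beta$ given in (7.14). In this case (7.25) states that

$$\sqrt{n}\left(\hat\beta_1 - \beta_1\right) \xrightarrow{d} \mathrm{N}(0, V_{11}).$$

That is, subsets of $\hat\beta$ are approximately normal with variances given by the conformable subcomponents of $V_\beta$.

To illustrate the case of a nonlinear transformation take the example $\theta = \beta_j/\beta_l$ for $j \ne l$. Then

$$R = \frac{\partial}{\partial\beta}r(\beta) = \begin{pmatrix} \frac{\partial}{\partial\beta_1}(\beta_j/\beta_l) \\ \vdots \\ \frac{\partial}{\partial\beta_j}(\beta_j/\beta_l) \\ \vdots \\ \frac{\partial}{\partial\beta_l}(\beta_j/\beta_l) \\ \vdots \\ \frac{\partial}{\partial\beta_k}(\beta_j/\beta_l) \end{pmatrix} = \begin{pmatrix} 0 \\ \vdots \\ 1/\beta_l \\ \vdots \\ -\beta_j/\beta_l^2 \\ \vdots \\ 0 \end{pmatrix} \tag{7.26}$$

so

$$V_\theta = V_{jj}/\beta_l^2 + V_{ll}\beta_j^2/\beta_l^4 - 2V_{jl}\beta_j/\beta_l^3$$

where $V_{ab}$ denotes the $ab$-th element of $V_\beta$.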

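For inference we need an estimator of the asymptotic covariance matrix $V_\theta = R'V_\beta R$. For this it is typical to use the plug-in estimator

$$\widehat{R} = \frac{\partial}{\partial\beta}r(\hat\beta)'. \tag{7.27}$$

The derivative in (7.27) may be calculated analytically or numerically. By analytically, we mean working out the formula for the derivative and replacing the unknowns by point estimates. For example, if $\theta = \beta_j/\beta_l$ then $\frac{\partial}{\partial\beta}r(\beta)$ is (7.26). However in some cases the function $r(\beta)$ may be extremely complicated and a formula for the analytic derivative may not be easily available. In this case numerical differentiation may be preferable. Let $\delta_j = (0 \cdots 1 \cdots 0)'$ be the unit vector with the "1" in the $j$-th place. The $jl$-th element of a numerical derivative $\widehat{R}$ is

$$\widehat{R}_{jl} = \frac{r_l(\hat\beta + \delta_j\epsilon) - r_l(\hat\beta)}{\epsilon}$$

for some small $\epsilon$.

The estimator of $V_\theta$ is

$$\widehat{V}_\theta = \widehat{R}'\widehat{V}_\beta\widehat{R}. \tag{7.28}$$

Alternatively, the homoskedastic covariance matrix estimator could be used leading to a homoskedastic covariance matrix estimator for $\theta$:

$$\widehat{V}_\theta^0 = \widehat{R}'\widehat{V}_\beta^0\widehat{R} = \widehat{R}'\widehat{Q}_{XX}^{-1}\widehat{R}\,s^2. \tag{7.29}$$

Given (7.27), (7.28) and (7.29) are simple to calculate using matrix operations.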
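A minimal sketch of the plug-in estimator (7.28) with a forward-difference $\widehat{R}$, checked against the analytic derivative (7.26) for the ratio $\theta = \beta_1/\beta_2$. The values of $\hat\beta$ and $\widehat{V}_\beta$ and the function names are hypothetical, and the step $\epsilon = 10^{-6}$ is an arbitrary choice. A central difference would be more accurate; the forward difference is used here because it matches the formula above.

```python
import numpy as np

def numerical_R(r, beta_hat, eps=1e-6):
    """Forward-difference R_hat (k x q): R_hat[j, l] = (r_l(b + eps*delta_j) - r_l(b)) / eps."""
    beta_hat = np.asarray(beta_hat, dtype=float)
    r0 = np.atleast_1d(r(beta_hat))
    k, q = beta_hat.size, r0.size
    R = np.empty((k, q))
    for j in range(k):
        step = np.zeros(k)
        step[j] = eps                      # delta_j * eps
        R[j] = (np.atleast_1d(r(beta_hat + step)) - r0) / eps
    return R

def delta_method_vcov(r, beta_hat, V_beta, eps=1e-6):
    """Plug-in estimator R_hat' V_beta R_hat, as in (7.28)."""
    R = numerical_R(r, beta_hat, eps)
    return R.T @ V_beta @ R

def ratio(b):
    return b[0] / b[1]                     # theta = beta_1 / beta_2

# Hypothetical estimates and covariance matrix
beta_hat = np.array([2.0, 4.0, 1.0])
V_beta = np.array([[1.0, 0.3, 0.0],
                   [0.3, 2.0, 0.1],
                   [0.0, 0.1, 0.5]])
R_analytic = np.array([1 / beta_hat[1], -beta_hat[0] / beta_hat[1] ** 2, 0.0])  # (7.26)
V_numeric = delta_method_vcov(ratio, beta_hat, V_beta)
V_analytic = R_analytic @ V_beta @ R_analytic
print(V_numeric, V_analytic)
```

Both routes give $V_\theta = 1/16 + 2 \cdot 4/256 - 2 \cdot 0.3 \cdot 2/64 = 0.075$, matching the closed-form expression for the ratio.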

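As the primary justification for $\widehat{V}_\theta$ is the asymptotic approximation (7.25), $\widehat{V}_\theta$ is often called an asymptotic covariance matrix estimator.

The estimator $\widehat{V}_\theta$ is consistent for $V_\theta$ under the conditions of Theorem 7.9 because $\widehat{V}_\beta \xrightarrow{p} V_\beta$ by Theorem 7.6 and

$$\widehat{R} = \frac{\partial}{\partial\beta}r(\hat\beta)' \xrightarrow{p} \frac{\partial}{\partial\beta}r(\beta)' = R$$

because $\hat\beta \xrightarrow{p} \beta$ and the function $\frac{\partial}{\partial\beta}r(\beta)'$ is continuous in $\beta$.

Theorem 7.10 Under Assumptions 7.2 and 7.3, as $n \to \infty$, $\widehat{V}_\theta \xrightarrow{p} V_\theta$.

Theorem 7.10 shows that $\widehat{V}_\theta$ is consistent for $V_\theta$ and thus may be used for asymptotic inference. In practice we may set

$$\widehat{V}_{\hat\theta} = \widehat{R}'\widehat{V}_{\hat\beta}\widehat{R} = n^{-1}\widehat{R}'\widehat{V}_\beta\widehat{R}$$

as an estimator of the variance of $\hat\theta$.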

Asymptotic Standard Errors

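As described in Section 4.15, a standard error is an estimator of the standard deviation of the distribution of an estimator. Thus if $\widehat{V}_{\hat\beta}$ is an estimator of the covariance matrix of $\hat\beta$ then standard errors are the square roots of the diagonal elements of this matrix. These take the form

$$s(\hat\beta_j) = \sqrt{\widehat{V}_{\hat\beta_j}} = \sqrt{\left[\widehat{V}_{\hat\beta}\right]_{jj}}.$$

Standard errors for $\hat\theta$ are constructed similarly. Supposing that $\theta = r(\beta)$ is real-valued then the standard error for $\hat\theta$ is the square root of $\widehat{R}'\widehat{V}_{\hat\beta}\widehat{R}$:

$$s(\hat\theta) = \sqrt{\widehat{R}'\widehat{V}_{\hat\beta}\widehat{R}} = \sqrt{n^{-1}\widehat{R}'\widehat{V}_\beta\widehat{R}}.$$

When the justification is based on asymptotic theory we call $s(\hat\beta_j)$ or $s(\hat\theta)$ an asymptotic standard error for $\hat\beta_j$ or $\hat\theta$. When reporting your results it is good practice to report standard errors for each reported estimate and this includes functions and transformations of your parameter estimates. This helps users of the work (including yourself) assess the estimation precision.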

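We illustrate using the log wage regression

$$\log(wage) = \beta_1 \, education + \beta_2 \, experience + \beta_3 \, experience^2/100 + \beta_4 + e. \tag{7.31}$$

Consider the following three parameters of interest.

1. Percentage return to education:

   $$\theta_1 = 100\beta_1$$

   (100 times the partial derivative of the conditional expectation of log wages with respect to education.)

2. Percentage return to experience for individuals with 10 years of experience:

   $$\theta_2 = 100\beta_2 + 20\beta_3$$

   (100 times the partial derivative of the conditional expectation of log wages with respect to experience, evaluated at experience = 10.)

3. Experience level which maximizes expected log wages:

   $$\theta_3 = -50\beta_2/\beta_3$$

   (The level of experience at which the partial derivative of the conditional expectation of log(wage) with respect to experience equals 0.)

The $4 \times 1$ vector $R$ for these three parameters is

$$R = \begin{pmatrix} 100 \\ 0 \\ 0 \\ 0 \end{pmatrix}, \quad \begin{pmatrix} 0 \\ 100 \\ 20 \\ 0 \end{pmatrix}, \quad \begin{pmatrix} 0 \\ -50/\beta_3 \\ 50\beta_2/\beta_3^2 \\ 0 \end{pmatrix},$$

respectively.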

We use the subsample of married Black women (all experience levels) which has 982 observations. The point estimates and standard errors are

The standard errors are the square roots of the HC2 covariance matrix estimate

We calculate that

The calculations show that the estimate of the percentage return to education is per year with a standard error of 0.8. The estimate of the percentage return to experience for those with 10 years of experience is per year with a standard error of . The estimate of the experience level which maximizes expected log wages is 35 years with a standard error of 7 .

In Stata the `nlcom` command can be used after estimation to perform the same calculations. To illustrate, after estimation of (7.31) use the commands given below. In each case, Stata reports the coefficient estimate, asymptotic standard error, and confidence interval.

Stata Commands:

- `nlcom 100*_b[education]`
- `nlcom 100*_b[experience]+20*_b[exp2]`
- `nlcom -50*_b[experience]/_b[exp2]`

t-statistic

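Let $\theta = r(\beta): \mathbb{R}^k \to \mathbb{R}$ be a parameter of interest, $\hat\theta$ its estimator, and $s(\hat\theta)$ its asymptotic standard error. Consider the statistic

$$T(\theta) = \frac{\hat\theta - \theta}{s(\hat\theta)}. \tag{7.33}$$

Different writers call (7.33) a t-statistic, a t-ratio, a z-statistic, or a studentized statistic, sometimes using the different labels to distinguish between finite-sample and asymptotic inference. As the statistics themselves are always (7.33) we won't make this distinction, and will simply refer to $T(\theta)$ as a t-statistic or a t-ratio. We also often suppress the parameter dependence, writing it as $T$. The t-statistic is a function of the estimator, its standard error, and the parameter.

By Theorems 7.9 and 7.10, $\sqrt{n}(\hat\theta - \theta) \xrightarrow{d} \mathrm{N}(0, V_\theta)$ and $\widehat{V}_\theta \xrightarrow{p} V_\theta$. Thus

$$T(\theta) = \frac{\hat\theta - \theta}{s(\hat\theta)} = \frac{\sqrt{n}\left(\hat\theta - \theta\right)}{\sqrt{\widehat{V}_\theta}} \xrightarrow{d} \frac{\mathrm{N}(0, V_\theta)}{\sqrt{V_\theta}} = Z \sim \mathrm{N}(0, 1).$$

The last equality is the property that affine functions of normal variables are normal (Theorem 5.2).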

This calculation requires that $V_\theta > 0$, otherwise the continuous mapping theorem cannot be employed. In practice this is an innocuous requirement as it only excludes degenerate sampling distributions. Formally we add the following assumption.

Assumption 7.4 $V_\theta = R'V_\beta R > 0$.

Assumption 7.4 states that $V_\theta$ is positive definite. Since $R$ is full rank under Assumption 7.3 a sufficient condition is that $V_\beta > 0$. Since $Q_{XX} > 0$ a sufficient condition is $\Omega > 0$. Thus Assumption 7.4 could be replaced by the assumption $\Omega > 0$. Assumption 7.4 is weaker so this is what we use.

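Thus the asymptotic distribution of the t-ratio $T(\theta)$ is standard normal. Since this distribution does not depend on the parameters we say that $T(\theta)$ is asymptotically pivotal. In finite samples $T(\theta)$ is not necessarily pivotal but the property means that the dependence on unknowns diminishes as $n$ increases.

It is also useful to consider the distribution of the absolute t-ratio $|T(\theta)|$. Since $T(\theta) \xrightarrow{d} Z$ the continuous mapping theorem yields $|T(\theta)| \xrightarrow{d} |Z|$. Letting $\Phi(u) = \mathbb{P}[Z \le u]$ denote the standard normal distribution function we calculate that the distribution of $|Z|$ is

$$\mathbb{P}\left[|Z| \le u\right] = \mathbb{P}\left[-u \le Z \le u\right] = \mathbb{P}\left[Z \le u\right] - \mathbb{P}\left[Z < -u\right] = \Phi(u) - \left(1 - \Phi(u)\right) = 2\Phi(u) - 1. \tag{7.34}$$

Theorem 7.11 Under Assumptions 7.2, 7.3, and 7.4, $T(\theta) \xrightarrow{d} Z \sim \mathrm{N}(0, 1)$ and $|T(\theta)| \xrightarrow{d} |Z|$.

The asymptotic normality of Theorem 7.11 is used to justify confidence intervals and tests for the parameters.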
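The formula $\mathbb{P}[|Z| \le u] = 2\Phi(u) - 1$ in (7.34) can be checked by simulation. The sketch evaluates $\Phi$ with Python's `math.erf`, using $\Phi(u) = \tfrac{1}{2}\left(1 + \operatorname{erf}(u/\sqrt{2})\right)$, and compares it with the empirical frequency in a million standard normal draws; the function name is hypothetical.

```python
import math
import numpy as np

def abs_normal_cdf(u):
    """P(|Z| <= u) = 2*Phi(u) - 1, with Phi(u) = (1 + erf(u / sqrt(2))) / 2."""
    phi = 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))
    return 2.0 * phi - 1.0

z = np.random.default_rng(3).normal(size=1_000_000)
for u in (1.0, 1.645, 1.96):
    print(u, abs_normal_cdf(u), np.mean(np.abs(z) <= u))
```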

Confidence Intervals

The estimator $\hat\theta$ is a point estimator for $\theta$, meaning that $\hat\theta$ is a single value in $\mathbb{R}^q$. A broader concept is a set estimator $\widehat{C}$, which is a collection of values in $\mathbb{R}^q$. When the parameter $\theta$ is real-valued then it is common to focus on sets of the form $\widehat{C} = [\widehat{L}, \widehat{U}]$, which is called an interval estimator for $\theta$.

An interval estimator $\widehat{C}$ is a function of the data and hence is random. The coverage probability of the interval $\widehat{C} = [\widehat{L}, \widehat{U}]$ is $\mathbb{P}[\theta \in \widehat{C}]$. The randomness comes from $\widehat{C}$ as the parameter $\theta$ is treated as fixed. In Chapter 5 we introduced confidence intervals for the normal regression model which used the finite sample distribution of the t-statistic. When we are outside the normal regression model we cannot rely on the exact normal distribution theory but instead use asymptotic approximations. A benefit is that we can construct confidence intervals for general parameters of interest, not just regression coefficients.

An interval estimator $\widehat{C}$ is called a confidence interval when the goal is to set the coverage probability to equal a pre-specified target such as 90% or 95%. $\widehat{C}$ is called a $1 - \alpha$ confidence interval if $\inf_\theta \mathbb{P}_\theta[\theta \in \widehat{C}] = 1 - \alpha$.

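When $\hat\theta$ is asymptotically normal with standard error $s(\hat\theta)$ the conventional confidence interval for $\theta$ takes the form

$$\widehat{C} = \left[\hat\theta - c \times s(\hat\theta), \ \hat\theta + c \times s(\hat\theta)\right] \tag{7.35}$$

where $c$ equals the $1 - \alpha$ quantile of the distribution of $|Z|$. Using (7.34) we calculate that $c$ is equivalently the $1 - \alpha/2$ quantile of the standard normal distribution. Thus, $c$ solves

$$2\Phi(c) - 1 = 1 - \alpha.$$

This can be computed by, for example, `norminv(1-alpha/2)` in MATLAB. The confidence interval (7.35) is symmetric about the point estimator $\hat\theta$ and its length is proportional to the standard error $s(\hat\theta)$.

Equivalently, (7.35) is the set of parameter values for $\theta$ such that the t-statistic $T(\theta)$ is smaller (in absolute value) than $c$, that is

$$\widehat{C} = \left\{\theta : |T(\theta)| \le c\right\} = \left\{\theta : -c \le \frac{\hat\theta - \theta}{s(\hat\theta)} \le c\right\}.$$

The coverage probability of this confidence interval is

$$\mathbb{P}\left[\theta \in \widehat{C}\right] = \mathbb{P}\left[|T(\theta)| \le c\right] \longrightarrow \mathbb{P}\left[|Z| \le c\right] = 1 - \alpha$$

where the limit is taken as $n \to \infty$, and holds because $|T(\theta)|$ is asymptotically distributed as $|Z|$ by Theorem 7.11. We call the limit the asymptotic coverage probability and call $\widehat{C}$ an asymptotic $1 - \alpha$ confidence interval for $\theta$. Since the t-ratio is asymptotically pivotal the asymptotic coverage probability is independent of the parameter $\theta$.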
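The interval (7.35) and its t-statistic characterization can be sketched with Python's standard-library `statistics.NormalDist`, whose `inv_cdf` method evaluates $\Phi^{-1}$, so no external dependency is needed. The function names are hypothetical. The usage line plugs in the rounded estimate and standard error reported earlier for the experience level that maximizes expected log wages (35 years, standard error 7); with rounded inputs the endpoints are only approximate.

```python
from statistics import NormalDist

def critical_value(alpha=0.05):
    """c = Phi^{-1}(1 - alpha/2), the 1 - alpha quantile of |Z|."""
    return NormalDist().inv_cdf(1.0 - alpha / 2.0)

def asymptotic_ci(theta_hat, se, alpha=0.05):
    """Interval (7.35): [theta_hat - c*se, theta_hat + c*se]."""
    c = critical_value(alpha)
    return theta_hat - c * se, theta_hat + c * se

def t_stat(theta_hat, se, theta):
    """T(theta) = (theta_hat - theta) / se, as in (7.33)."""
    return (theta_hat - theta) / se

lo, hi = asymptotic_ci(35.0, 7.0)
print(round(critical_value(), 3), round(lo, 2), round(hi, 2))   # 1.96 21.28 48.72
```

At either endpoint $|T(\theta)| = c$, which is the set characterization $\{\theta : |T(\theta)| \le c\}$ in code form.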

It is useful to contrast the confidence interval (7.35) with (5.8) for the normal regression model. They are similar but there are differences. The normal regression interval (5.8) only applies to regression coefficients $\beta$, not to functions $\theta$ of the coefficients. The normal interval (5.8) also is constructed with the homoskedastic standard error, while (7.35) can be constructed with a heteroskedastic-robust standard error. Furthermore, the constants $c$ in (5.8) are calculated using the student $t$ distribution, while $c$ in (7.35) are calculated using the normal distribution. The difference between the student $t$ and normal values is typically small in practice (since sample sizes are large in typical economic applications). However, since the student $t$ values are larger, the resulting confidence intervals are slightly wider, which is reasonable. (A practical rule of thumb is that if the sample sizes are sufficiently small that it makes a difference then neither (5.8) nor (7.35) should be trusted.) Despite these differences the coincidence of the intervals means that inference on regression coefficients is generally robust to using either the exact normal sampling assumption or the asymptotic large sample approximation, at least in large samples.

Stata by default reports 95% confidence intervals for each coefficient where the critical values $c$ are calculated using the $t_{n-k}$ distribution. This is done for all standard error methods even though it is only exact for homoskedastic standard errors and under normality.

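The standard coverage probability for confidence intervals is 95%, leading to the choice $c = 1.96$ for the constant in (7.35). Rounding 1.96 to 2, we obtain the most commonly used confidence interval in applied econometric practice

$$\widehat{C} = \left[\hat\theta - 2s(\hat\theta), \ \hat\theta + 2s(\hat\theta)\right].$$

This is a useful rule of thumb. This asymptotic 95% confidence interval $\widehat{C}$ is simple to compute and can be roughly calculated from tables of coefficient estimates and standard errors. (Technically, it is an asymptotic 95.4% interval due to the substitution of 2.0 for 1.96, but this distinction is overly precise.)

Theorem 7.12 Under Assumptions 7.2, 7.3 and 7.4, for $\widehat{C}$ defined in (7.35) with $c = \Phi^{-1}(1 - \alpha/2)$, $\mathbb{P}[\theta \in \widehat{C}] \to 1 - \alpha$. For $c = 1.96$, $\mathbb{P}[\theta \in \widehat{C}] \to 0.95$.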

Confidence intervals are a simple yet effective tool to assess estimation uncertainty. When reading a set of empirical results look at the coefficient estimates and the standard errors. For a parameter of interest compute the confidence interval $\widehat{C}$ and consider the meaning of the spread of the suggested values. If the range of values in the confidence interval is too wide to learn about $\theta$ then do not jump to a conclusion about $\theta$ based on the point estimate alone.

For illustration, consider the three examples presented in Section 7.11 based on the log wage regression for married Black women.

Percentage return to education. A 95% asymptotic confidence interval is , 13.3]. This is reasonably tight.

Percentage return to experience (per year) for individuals with 10 years of experience. A 95% asymptotic confidence interval is . The interval is positive but broad. This indicates that the return to experience is positive, but of uncertain magnitude.

Experience level which maximizes expected log wages. An asymptotic 95% confidence interval is $35 \pm 1.96 \times 7$, roughly $[21, 49]$. This is rather imprecise, indicating that the estimates are not very informative regarding this parameter.

Regression Intervals

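In the linear regression model the conditional expectation of $Y$ given $X = x$ is

$$m(x) = \mathbb{E}[Y \mid X = x] = x'\beta.$$

In some cases we want to estimate $m(x)$ at a particular point $x$. Notice that this is a linear function of $\beta$. Letting $r(\beta) = x'\beta$ and $\theta = r(\beta)$ we see that $\hat m(x) = \hat\theta = x'\hat\beta$ and $R = x$, so $s(\hat\theta) = \sqrt{x'\widehat{V}_{\hat\beta}x}$. Thus an asymptotic 95% confidence interval for $m(x)$ is

$$\left[x'\hat\beta \pm 1.96\sqrt{x'\widehat{V}_{\hat\beta}x}\right].$$

It is interesting to observe that if this is viewed as a function of $x$ the width of the confidence interval is dependent on $x$.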
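A sketch of the pointwise regression interval evaluated over a grid of $x$ values. The data are simulated from a hypothetical education-style regressor with an intercept (not the sample behind (3.12)), the function name is hypothetical, and the covariance matrix is the HC0 estimator. The interval is narrowest near the center of the regressor's distribution and widens toward the edges, which is the dependence on $x$ noted above.

```python
import numpy as np

def regression_interval(x_points, beta_hat, V_betahat, c=1.96):
    """Pointwise x'beta_hat +/- c * sqrt(x' V x) for each row x of x_points."""
    fit = x_points @ beta_hat
    se = np.sqrt(np.einsum("ij,jk,ik->i", x_points, V_betahat, x_points))
    return fit - c * se, fit + c * se

# Simulated data (hypothetical design, not the sample used in the text)
rng = np.random.default_rng(4)
n = 500
educ = rng.integers(8, 21, size=n).astype(float)
X = np.column_stack([educ, np.ones(n)])
Y = 0.1 * educ + 1.0 + rng.normal(scale=0.5, size=n)
XtX_inv = np.linalg.inv(X.T @ X)
beta_hat = XtX_inv @ X.T @ Y
e_hat = Y - X @ beta_hat
V_betahat = XtX_inv @ ((X * e_hat[:, None] ** 2).T @ X) @ XtX_inv  # HC0

grid = np.column_stack([np.arange(8.0, 21.0), np.ones(13)])
lo, hi = regression_interval(grid, beta_hat, V_betahat)
width = hi - lo
print(grid[np.argmin(width), 0], width[0], width[-1])
```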

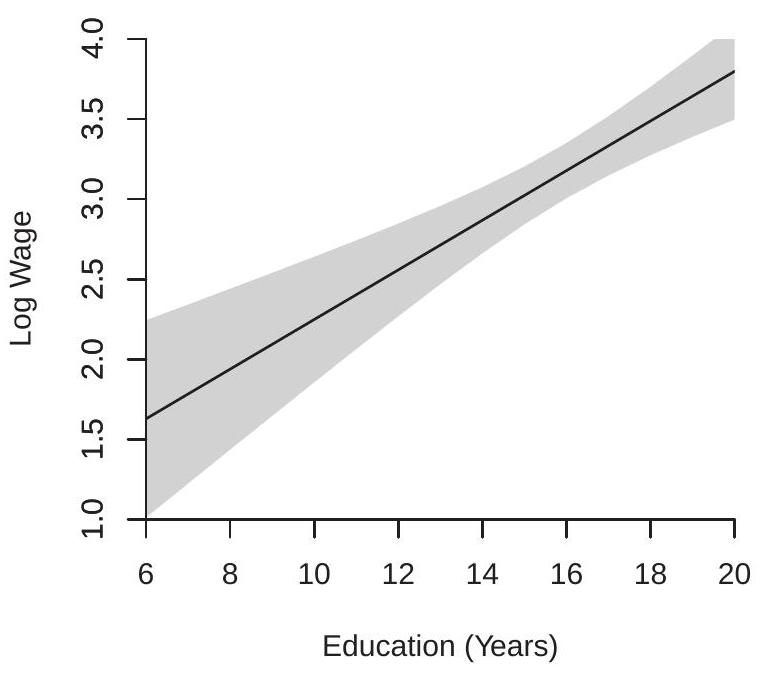

To illustrate we return to the log wage regression (3.12) of Section 3.7. The estimated regression equation is

where . The covariance matrix estimate from (4.43) is

Thus the confidence interval for the regression is

The estimated regression and 95% intervals are shown in Figure 7.4(a). Notice that the confidence bands take a hyperbolic shape. This means that the regression line is less precisely estimated for large and small values of education.

Plots of the estimated regression line and confidence intervals are especially useful when the regression includes nonlinear terms. To illustrate consider the log wage regression (7.31) which includes experience and its square and covariance matrix estimate (7.32). We are interested in plotting the regression estimate and regression intervals as a function of experience. Since the regression also includes education, to plot the estimates in a simple graph we fix education at a specific value. We select education = 12. This only affects the level of the estimated regression since education enters without an interaction. Define the points of evaluation

$$z(x) = \begin{pmatrix} 12 \\ x \\ x^2/100 \\ 1 \end{pmatrix}$$

where $x$ = experience.

Figure 7.4: Regression Intervals. (a) Wage on Education; (b) Wage on Experience.

The regression interval for education = 12 as a function of experience is

The estimated regression and 95% intervals are shown in Figure 7.4(b). The regression interval widens greatly for small and large values of experience, indicating considerable uncertainty about the effect of experience on mean wages for this population. The confidence bands take a more complicated shape than in Figure 7.4(a) due to the nonlinear specification.

Forecast Intervals

Suppose we are given a value of the regressor vector $X_{n+1}$ for an individual outside the sample and we want to forecast (guess) $Y_{n+1}$ for this individual. This is equivalent to forecasting $Y_{n+1}$ given $X_{n+1} = x$, which will generally be a function of $x$. A reasonable forecasting rule is the conditional expectation $m(x)$, as it is the mean-square minimizing forecast. A point forecast is the estimated conditional expectation $\widehat{m}(x) = x'\widehat{\beta}$. We would also like a measure of uncertainty for the forecast.

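The forecast error is $\widehat{e}_{n+1} = Y_{n+1} - \widehat{m}(x) = e_{n+1} - x'(\widehat{\beta} - \beta)$. The out-of-sample error $e_{n+1}$ is independent of the in-sample estimator $\widehat{\beta}$ and has conditional mean zero, so the cross term has zero expectation. The forecast error therefore has conditional variance

$$
\begin{aligned}
\mathbb{E}\left[\widehat{e}_{n+1}^{2} \mid X_{n+1} = x\right]
&= \mathbb{E}\left[e_{n+1}^{2} - 2x'(\widehat{\beta} - \beta)e_{n+1} + x'(\widehat{\beta} - \beta)(\widehat{\beta} - \beta)'x \mid X_{n+1} = x\right] \\
&= \mathbb{E}\left[e_{n+1}^{2} \mid X_{n+1} = x\right] + x'\,\mathbb{E}\left[(\widehat{\beta} - \beta)(\widehat{\beta} - \beta)'\right]x \\
&= \sigma^{2}(x) + x'V_{\widehat{\beta}}\,x.
\end{aligned}
\tag{7.36}
$$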

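Under homoskedasticity, $\mathbb{E}\left[e_{n+1}^{2} \mid X_{n+1}\right] = \sigma^{2}$. In this case a simple estimator of (7.36) is $\widehat{\sigma}^{2} + x'\widehat{V}_{\widehat{\beta}}\,x$, so a standard error for the forecast is $\widehat{s}(x) = \sqrt{\widehat{\sigma}^{2} + x'\widehat{V}_{\widehat{\beta}}\,x}$. Notice that this is different from the standard error for the conditional expectation, $\sqrt{x'\widehat{V}_{\widehat{\beta}}\,x}$, because it also includes the variance of the out-of-sample error.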

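The conventional 95% forecast interval for $Y_{n+1}$ uses a normal approximation and equals $\left[x'\widehat{\beta} \pm 1.96\,\widehat{s}(x)\right]$. It is difficult, however, to fully justify this choice. It would be correct if we had a normal approximation to the ratio

$$\frac{e_{n+1} - x'(\widehat{\beta} - \beta)}{\widehat{s}(x)}.$$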

The difficulty is that the equation error $e_{n+1}$ is generally non-normal, and asymptotic theory cannot be applied to a single observation. The only exception is the special case where $e_{n+1}$ has the exact distribution $\mathrm{N}(0, \sigma^{2})$, an assumption which is generally invalid.

An accurate forecast interval would use the conditional distribution of $e_{n+1}$ given $X_{n+1} = x$, which is more challenging to estimate. Due to this difficulty, many applied forecasters use the simple approximate interval despite the lack of a convincing justification.
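The forecast standard error $\widehat{s}(x)$ and the conventional normal-approximation interval discussed above can be computed as in the following sketch. It works under the homoskedasticity assumption, and `sigma2_hat` stands for $\widehat{\sigma}^{2}$. The helper names and the inputs in the test are hypothetical. The interval inherits the caveats just described.

```python
import math
from statistics import NormalDist

def quad_form(x, V):
    """Return x' V x for a vector x and a square matrix V (lists of lists)."""
    k = len(x)
    return sum(x[i] * V[i][j] * x[j] for i in range(k) for j in range(k))

def forecast_interval(x, beta_hat, V_hat, sigma2_hat, level=0.95):
    """Point forecast, two standard errors, and the conventional forecast interval.

    Assumes homoskedasticity, so (7.36) is estimated by sigma2_hat + x' V_hat x.
    """
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    point = sum(xi * bi for xi, bi in zip(x, beta_hat))         # x' beta_hat
    se_mean = math.sqrt(quad_form(x, V_hat))                     # s.e. of m_hat(x)
    se_forecast = math.sqrt(sigma2_hat + quad_form(x, V_hat))    # s_hat(x)
    interval = (point - z * se_forecast, point + z * se_forecast)
    return point, se_mean, se_forecast, interval
```

The term $x'\widehat{V}_{\widehat{\beta}}\,x$ shrinks at rate $n^{-1}$, while $\widehat{\sigma}^{2}$ does not. As a result, the forecast interval does not collapse as the sample grows, unlike the regression interval.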

Wald Statistic

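Let $\theta = r(\beta)$, with $r: \mathbb{R}^{k} \to \mathbb{R}^{q}$, be any parameter vector of interest, $\widehat{\theta}$ its estimator, and $\widehat{V}_{\widehat{\theta}}$ its covariance matrix estimator. Consider the quadratic form

$$W(\theta) = \left(\widehat{\theta} - \theta\right)'\widehat{V}_{\widehat{\theta}}^{-1}\left(\widehat{\theta} - \theta\right) = n\left(\widehat{\theta} - \theta\right)'\widehat{V}_{\theta}^{-1}\left(\widehat{\theta} - \theta\right)$$

where $\widehat{V}_{\theta} = n\widehat{V}_{\widehat{\theta}}$. When $q = 1$, $W(\theta) = T(\theta)^{2}$ is the square of the t-ratio. When $q > 1$, $W(\theta)$ is typically called a Wald statistic, as it was proposed by Wald (1943). We are interested in its sampling distribution.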
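Computing $W(\theta)$ only requires inverting $\widehat{V}_{\widehat{\theta}}$. Below is a minimal sketch for $q \le 2$. It assumes $\widehat{V}_{\widehat{\theta}}$ is given directly (already divided by $n$, so the first form of $W(\theta)$ applies). It uses the closed-form $2 \times 2$ inverse to stay within the Python standard library; for larger $q$ one would use a linear solver instead. The function name is hypothetical.

```python
def wald_statistic(theta_hat, theta0, V_hat):
    """W = (theta_hat - theta0)' V_hat^{-1} (theta_hat - theta0), for q = 1 or 2.

    V_hat is the covariance matrix estimate of theta_hat itself (already
    divided by n), given as a list of lists.
    """
    d = [a - b for a, b in zip(theta_hat, theta0)]
    q = len(d)
    if q == 1:
        return d[0] ** 2 / V_hat[0][0]                  # the squared t-ratio
    if q == 2:
        (v11, v12), (v21, v22) = V_hat
        det = v11 * v22 - v12 * v21
        inv = [[v22 / det, -v12 / det], [-v21 / det, v11 / det]]   # 2x2 inverse
        return sum(d[i] * inv[i][j] * d[j] for i in range(2) for j in range(2))
    raise ValueError("this sketch only handles q = 1 or q = 2")
```

With $q = 1$, `wald_statistic([0.5], [0.0], [[0.04]])` returns approximately 6.25. This is the square of the t-ratio $0.5/0.2 = 2.5$, as stated above.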

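The asymptotic distribution of $W(\theta)$ is simple to derive given Theorem and Theorem 7.10. They show that $\sqrt{n}\left(\widehat{\theta} - \theta\right) \xrightarrow{d} Z \sim \mathrm{N}(0, V_{\theta})$ and $\widehat{V}_{\theta} \xrightarrow{p} V_{\theta}$. It follows by the CMT that

$$W(\theta) = \sqrt{n}\left(\widehat{\theta} - \theta\right)'\widehat{V}_{\theta}^{-1}\sqrt{n}\left(\widehat{\theta} - \theta\right) \xrightarrow{d} Z'V_{\theta}^{-1}Z,$$

a quadratic in the normal random vector $Z$. As shown in Theorem the distribution of this quadratic form is $\chi^{2}_{q}$, a chi-square random variable with $q$ degrees of freedom.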

Theorem 7.13 Under Assumptions 7.2, 7.3, and 7.4, as $n \to \infty$, $W(\theta) \xrightarrow{d} \chi^{2}_{q}$.
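Theorem 7.13 rests on the fact that $Z'V_{\theta}^{-1}Z \sim \chi^{2}_{q}$ for any positive definite $V_{\theta}$. The Monte Carlo sketch below checks this for $q = 2$ using only the Python standard library. It draws $Z = Lu$, where $LL' = V$ is a Cholesky factor and $u$ is standard normal. It then evaluates the quadratic form with the explicit $2 \times 2$ inverse. The matrix `V`, the seed, and the number of replications are arbitrary choices for illustration.

```python
import math
import random

def simulate_quadratic_form(V, reps=20000, seed=1):
    """Return draws of Z' V^{-1} Z with Z ~ N(0, V), for a 2x2 positive definite V."""
    rng = random.Random(seed)
    v11, v12 = V[0]
    v22 = V[1][1]
    # Lower-triangular Cholesky factor L with L L' = V
    l11 = math.sqrt(v11)
    l21 = v12 / l11
    l22 = math.sqrt(v22 - l21 ** 2)
    det = v11 * v22 - v12 ** 2
    draws = []
    for _ in range(reps):
        u1, u2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        z1, z2 = l11 * u1, l21 * u1 + l22 * u2            # Z = L u ~ N(0, V)
        # Z' V^{-1} Z using V^{-1} = [[v22, -v12], [-v12, v11]] / det
        draws.append((v22 * z1 ** 2 - 2 * v12 * z1 * z2 + v11 * z2 ** 2) / det)
    return draws

if __name__ == "__main__":
    w = simulate_quadratic_form([[2.0, 0.6], [0.6, 1.0]])
    c90 = -2 * math.log(0.10)                      # 0.90 quantile of chi-square(2)
    print(sum(w) / len(w))                         # should be close to q = 2
    print(sum(x <= c90 for x in w) / len(w))       # should be close to 0.90
```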

Theorem 7.13 is used to justify multivariate confidence regions and multivariate hypothesis tests.

Homoskedastic Wald Statistic

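Under the conditional homoskedasticity assumption, we can construct the Wald statistic using the homoskedastic covariance matrix estimator $\widehat{V}^{0}_{\theta}$ defined in (7.29). This yields a homoskedastic Wald statistic

$$W^{0}(\theta) = n\left(\widehat{\theta} - \theta\right)'\left(\widehat{V}^{0}_{\theta}\right)^{-1}\left(\widehat{\theta} - \theta\right).$$

Under the assumption of conditional homoskedasticity it has the same asymptotic distribution as $W(\theta)$.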

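Theorem 7.14 Under Assumptions 7.2, 7.3, and $\mathbb{E}\left[e^{2} \mid X\right] = \sigma^{2} > 0$, as $n \to \infty$, $W^{0}(\theta) \xrightarrow{d} \chi^{2}_{q}$.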
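The robust and homoskedastic Wald statistics differ only in the covariance matrix estimate used. Below is a minimal sketch for the simplest case: a regression of $y$ on an intercept and one regressor, testing $H_0$: slope $= 0$ (so $q = 1$ and each statistic is a squared t-ratio). Several choices here are assumptions for illustration:

- The homoskedastic variance uses $s^{2} = (n-k)^{-1}\sum_{i}\widehat{e}_{i}^{\,2}$.
- The robust variance is the HC0 sandwich $\left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}\left(\sum_{i} X_iX_i'\widehat{e}_{i}^{\,2}\right)\left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}$ without a degrees-of-freedom correction.
- The data in the test are made up.

```python
def slope_wald_statistics(x, y):
    """OLS of y on (1, x); Wald statistics for H0: slope = 0.

    Returns (W_robust, W_homoskedastic). The robust version uses the HC0
    sandwich; the homoskedastic version uses s^2 (X'X)^{-1} with
    s^2 = SSE / (n - k).
    """
    n, k = len(x), 2
    sx, sxx = sum(x), sum(v * v for v in x)
    sy, sxy = sum(y), sum(a * b for a, b in zip(x, y))
    det = n * sxx - sx ** 2                                  # det of X'X = [[n, sx], [sx, sxx]]
    inv = [[sxx / det, -sx / det], [-sx / det, n / det]]     # (X'X)^{-1}
    b0 = (sxx * sy - sx * sxy) / det                         # intercept
    b1 = (n * sxy - sx * sy) / det                           # slope
    e = [yi - b0 - b1 * xi for xi, yi in zip(x, y)]          # OLS residuals
    s2 = sum(ei ** 2 for ei in e) / (n - k)
    var_homo = s2 * inv[1][1]
    # "Meat" of the sandwich: sum of X_i X_i' e_i^2 with X_i = (1, x_i)'
    m01 = sum(xi * ei ** 2 for xi, ei in zip(x, e))
    meat = [[sum(ei ** 2 for ei in e), m01],
            [m01, sum(xi ** 2 * ei ** 2 for xi, ei in zip(x, e))]]
    row = inv[1]                                             # slope row of (X'X)^{-1}
    var_robust = sum(row[i] * meat[i][j] * row[j] for i in range(2) for j in range(2))
    return b1 ** 2 / var_robust, b1 ** 2 / var_homo
```

On the made-up data `x = [1, 2, 3, 4, 5]`, `y = [1, 3, 2, 5, 4]` the slope is 0.8. The homoskedastic statistic is $0.64/0.12 \approx 5.33$ and the HC0 statistic is $0.64/0.0416 \approx 15.38$. In a sample this small the two can differ substantially, even though both have the same $\chi^{2}_{1}$ limit under homoskedasticity.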

Confidence Regions

A confidence region $\widehat{C}$ is a set estimator for $\theta \in \mathbb{R}^{q}$ when $q > 1$. A confidence region $\widehat{C}$ is a set in $\mathbb{R}^{q}$ intended to cover the true parameter value with a pre-selected probability $1 - \alpha$. Thus an ideal confidence region has the coverage probability $\mathbb{P}\left[\theta \in \widehat{C}\right] = 1 - \alpha$. In practice it is typically not possible to construct a region with exact coverage, but we can calculate its asymptotic coverage.

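When the parameter estimator satisfies the conditions of Theorem 7.13, a good choice for a confidence region is the ellipse

$$\widehat{C} = \left\{\theta : W(\theta) \le c_{1-\alpha}\right\}$$

with $c_{1-\alpha}$ the $1 - \alpha$ quantile of the $\chi^{2}_{q}$ distribution. (Thus $F_{q}(c_{1-\alpha}) = 1 - \alpha$, where $F_{q}$ is the $\chi^{2}_{q}$ distribution function.) It can be computed by, for example, chi2inv in MATLAB.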
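For $q = 2$ the $\chi^{2}_{2}$ distribution function has the closed form $F_{2}(c) = 1 - e^{-c/2}$. It follows that $c_{1-\alpha} = -2\log\alpha$, and no special software is needed. A small Python sketch follows; for general $q$ one would use a routine such as MATLAB's `chi2inv` or SciPy's `scipy.stats.chi2.ppf`. The function names are hypothetical.

```python
import math

def chi2_2_quantile(p):
    """Quantile of the chi-square distribution with 2 degrees of freedom.

    Inverts F_2(c) = 1 - exp(-c / 2), giving c = -2 log(1 - p).
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return -2.0 * math.log(1.0 - p)

def chi2_2_cdf(c):
    """Distribution function of the chi-square distribution with 2 degrees of freedom."""
    return 1.0 - math.exp(-c / 2.0)
```

For example, `chi2_2_quantile(0.90)` is about 4.605 and `chi2_2_quantile(0.95)` is about 5.991.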

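Theorem 7.13 implies

$$\mathbb{P}\left[\theta \in \widehat{C}\right] = \mathbb{P}\left[W(\theta) \le c_{1-\alpha}\right] \to \mathbb{P}\left[\chi^{2}_{q} \le c_{1-\alpha}\right] = 1 - \alpha,$$

which shows that $\widehat{C}$ has asymptotic coverage $1 - \alpha$.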

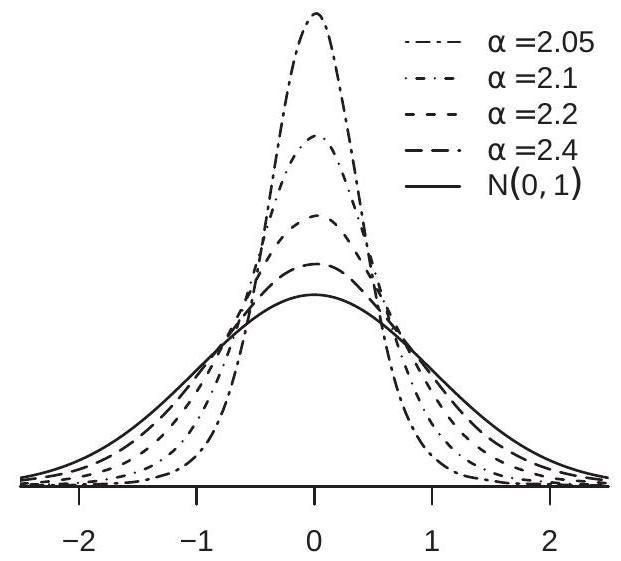

To illustrate the construction of a confidence region, consider the estimated regression (7.31) of

Suppose that the two parameters of interest are the percentage return to education and the percentage return to experience for individuals with 10 years experience . These two parameters are a linear transformation of the regression parameters with point estimates

and have the covariance matrix estimate

with inverse

Thus the Wald statistic is

The quantile of the distribution is (we use the distribution as the dimension of is two) so an asymptotic confidence region for the two parameters is the interior of the ellipse which is displayed in Figure 7.5. Since the estimated correlation of the two coefficient estimates is modest (about ) the region is modestly elliptical.
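The boundary of an ellipse such as the one in Figure 7.5 can be traced without solving a quadratic. If $LL' = \widehat{V}_{\widehat{\theta}}$, then every point $\theta = \widehat{\theta} + \sqrt{c}\,Lu$ with $\|u\| = 1$ satisfies $W(\theta) = c$, since $(\theta - \widehat{\theta})'\widehat{V}_{\widehat{\theta}}^{-1}(\theta - \widehat{\theta}) = c\,u'u$. Below is a sketch for $q = 2$. The function names are hypothetical, and the inputs in the test are not the estimates from (7.31).

```python
import math

def ellipse_boundary(theta_hat, V_hat, c, num=200):
    """Points on the boundary {theta : W(theta) = c} for q = 2.

    Uses theta = theta_hat + sqrt(c) * L u with L L' = V_hat (Cholesky factor)
    and u = (cos a, sin a) running around the unit circle.
    """
    v11, v12 = V_hat[0]
    v22 = V_hat[1][1]
    l11 = math.sqrt(v11)
    l21 = v12 / l11
    l22 = math.sqrt(v22 - l21 ** 2)
    r = math.sqrt(c)
    points = []
    for i in range(num):
        a = 2.0 * math.pi * i / num
        u1, u2 = math.cos(a), math.sin(a)
        points.append((theta_hat[0] + r * l11 * u1,
                       theta_hat[1] + r * (l21 * u1 + l22 * u2)))
    return points

def wald_2d(theta, theta_hat, V_hat):
    """W(theta) = (theta_hat - theta)' V_hat^{-1} (theta_hat - theta) for q = 2."""
    d1, d2 = theta_hat[0] - theta[0], theta_hat[1] - theta[1]
    v11, v12 = V_hat[0]
    v22 = V_hat[1][1]
    det = v11 * v22 - v12 ** 2
    return (v22 * d1 ** 2 - 2 * v12 * d1 * d2 + v11 * d2 ** 2) / det
```

A point $\theta$ lies inside the region exactly when `wald_2d(theta, theta_hat, V_hat) <= c`. The boundary points can be passed to any plotting routine.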

Figure 7.5: Confidence Region for Return to Experience and Return to Education

Edgeworth Expansion*

Theorem showed that the t-ratio $T$ is asymptotically normal. In practice this means that we use the normal distribution to approximate the finite sample distribution of $T$. How good is this approximation? Some insight into the accuracy of the normal approximation can be obtained from an Edgeworth expansion, which is a higher-order approximation to the distribution of $T$. The following result is an application of Theorem of Probability and Statistics for Economists.

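Theorem 7.15 Under Assumptions 7.2, 7.3, has five continuous derivatives in a neighborhood of $\beta$, and , as $n \to \infty$,

$$\mathbb{P}\left[T \le x\right] = \Phi(x) + n^{-1/2}p_{1}(x)\phi(x) + n^{-1}p_{2}(x)\phi(x) + o(n^{-1})$$

uniformly in $x$, where $p_{1}(x)$ is an even polynomial of order 2 and $p_{2}(x)$ is an odd polynomial of degree 5 with coefficients depending on the moments of $e$ and $X$ up to order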

Theorem 7.15 shows that the finite sample distribution of the t-ratio can be approximated up to $o(n^{-1})$ by the sum of three terms: the first is the standard normal distribution, the second an $O(n^{-1/2})$ adjustment, and the third an $O(n^{-1})$ adjustment.

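Consider a one-sided confidence interval $\widehat{C} = \left[\widehat{\theta} - z_{1-\alpha}s(\widehat{\theta}), \infty\right)$, where $z_{1-\alpha}$ is the $1 - \alpha$ quantile of $Z \sim \mathrm{N}(0, 1)$; thus $\Phi(z_{1-\alpha}) = 1 - \alpha$. Then

$$
\begin{aligned}
\mathbb{P}\left[\theta \in \widehat{C}\right] &= \mathbb{P}\left[T \le z_{1-\alpha}\right] \\
&= \Phi(z_{1-\alpha}) + n^{-1/2}p_{1}(z_{1-\alpha})\phi(z_{1-\alpha}) + O(n^{-1}) \\
&= 1 - \alpha + O(n^{-1/2}).
\end{aligned}
$$

This means that the actual coverage is within $O(n^{-1/2})$ of the desired level.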

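Now consider a two-sided interval $\widehat{C} = \left[\widehat{\theta} - z_{1-\alpha/2}s(\widehat{\theta}),\ \widehat{\theta} + z_{1-\alpha/2}s(\widehat{\theta})\right]$. It has coverage

$$
\begin{aligned}
\mathbb{P}\left[\theta \in \widehat{C}\right] &= \mathbb{P}\left[|T| \le z_{1-\alpha/2}\right] \\
&= 2\Phi(z_{1-\alpha/2}) - 1 + n^{-1}2p_{2}(z_{1-\alpha/2})\phi(z_{1-\alpha/2}) + o(n^{-1}) \\
&= 1 - \alpha + O(n^{-1}).
\end{aligned}
$$

This means that the actual coverage is within $O(n^{-1})$ of the desired level. The accuracy is better than for the one-sided interval because the $O(n^{-1/2})$ term in the Edgeworth expansion has offsetting effects in the two tails of the distribution. Since $p_{1}$ and $\phi$ are both even functions, the $n^{-1/2}$ terms evaluated at $z_{1-\alpha/2}$ and $-z_{1-\alpha/2}$ cancel.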

Asymptotic Leverage*

Recall the definition of leverage from (3.40), $h_{ii} = X_i'\left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}X_i$. These are the diagonal elements of the projection matrix $\boldsymbol{P}$. They appear in the formula for leave-one-out prediction errors and in the HC2 and HC3 covariance matrix estimators. We can show that under i.i.d. sampling the leverage values are uniformly asymptotically small.

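Let $\lambda_{\min}(\boldsymbol{A})$ and $\lambda_{\max}(\boldsymbol{A})$ denote the smallest and largest eigenvalues of a symmetric square matrix $\boldsymbol{A}$, and note that $\lambda_{\max}(\boldsymbol{A}^{-1}) = \left(\lambda_{\min}(\boldsymbol{A})\right)^{-1}$. Since $\frac{1}{n}\boldsymbol{X}'\boldsymbol{X} \xrightarrow{p} \boldsymbol{Q}_{XX} > 0$, by the CMT $\lambda_{\min}\left(\frac{1}{n}\boldsymbol{X}'\boldsymbol{X}\right) \xrightarrow{p} \lambda_{\min}(\boldsymbol{Q}_{XX}) > 0$. (The latter is positive since $\boldsymbol{Q}_{XX}$ is positive definite and thus all its eigenvalues are positive.) Then by the Quadratic Inequality (B.18)

$$
\begin{aligned}
h_{ii} &= X_i'\left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}X_i \\
&\le \lambda_{\max}\left(\left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}\right)\left(X_i'X_i\right) \\
&= \left(\lambda_{\min}\left(\tfrac{1}{n}\boldsymbol{X}'\boldsymbol{X}\right)\right)^{-1}\frac{1}{n}\|X_i\|^{2} \\
&\le \left(\lambda_{\min}(\boldsymbol{Q}_{XX}) + o_p(1)\right)^{-1}\frac{1}{n}\max_{1 \le i \le n}\|X_i\|^{2}.
\end{aligned}
\tag{7.42}
$$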

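Theorem shows that $\mathbb{E}\|X\|^{r} < \infty$ implies $\max_{1 \le i \le n}\|X_i\| = o_p(n^{1/r})$, and thus (7.42) is $o_p(n^{2/r - 1})$.

Theorem 7.17 If $X_i$ is i.i.d., $\boldsymbol{Q}_{XX} > 0$, and $\mathbb{E}\|X\|^{r} < \infty$ for some $r \ge 2$, then $\max_{1 \le i \le n} h_{ii} = o_p(n^{2/r - 1})$.

For any $r \ge 2$, then, $h_{ii} = o_p(1)$ (uniformly in $i \le n$). Larger $r$ implies a faster rate of convergence. For example, $r = 4$ implies $h_{ii} = o_p(n^{-1/2})$.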
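Theorem 7.17 can be illustrated by simulation. The sketch below assumes a regression on an intercept and a single standard normal regressor, so all moments are finite. It computes every $h_{ii}$ from the closed form for $k = 2$ and reports the largest one; the seed and sample sizes are arbitrary. The leverages always sum to $k = 2$, so their average is $2/n$, and the maximum shrinks toward zero as $n$ grows. The function names are hypothetical.

```python
import random

def leverages(x):
    """Leverage values h_ii = X_i'(X'X)^{-1} X_i for regressors X_i = (1, x_i)'."""
    n = len(x)
    sx = sum(x)
    sxx = sum(v * v for v in x)
    det = n * sxx - sx ** 2            # determinant of X'X = [[n, sx], [sx, sxx]]
    # (X'X)^{-1} = [[sxx, -sx], [-sx, n]] / det, so h_ii is a quadratic in x_i
    return [(sxx - 2 * sx * xi + n * xi * xi) / det for xi in x]

def max_leverage(n, seed=0):
    """Largest leverage value in a simulated sample with standard normal x_i."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return max(leverages(x))

if __name__ == "__main__":
    for n in (50, 500, 5000):
        print(n, max_leverage(n))
```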

Theorem 7.17 implies that under random sampling with finite variances and large samples, no individual observation should have a large leverage value. Consequently, individual observations should not be influential unless one of these conditions is violated.

Exercises

Exercise 7.1 Take the model with . Suppose that is estimated by regressing on only. Find the probability limit of this estimator. In general, is it consistent for ? If not, under what conditions is this estimator consistent for ?

Exercise 7.2 Take the model with . Define the ridge regression estimator

where is a fixed constant. Find the probability limit of as . Is consistent for ?

Exercise 7.3 For the ridge regression estimator (7.43), set where is fixed as . Find the probability limit of as .

Exercise 7.4 Verify some of the calculations reported in Section 7.4. Specifically, suppose that and only take the values , symmetrically, with

Verify the following: (a) (b) (c) (d) (e) (f) .

Exercise 7.5 Show (7.13)-(7.16).

Exercise 7.6 The model is

Find the method of moments estimators for .

Exercise 7.7 Of the variables only the pair are observed. In this case we say that is a latent variable. Suppose

where is a measurement error satisfying

Let denote the OLS coefficient from the regression of on .

(a) Is the coefficient from the linear projection of on ?

(b) Is consistent for as ?

Find the asymptotic distribution of as .

Exercise 7.8 Find the asymptotic distribution of as .

Exercise 7.9 The model is with and . Consider the two estimators

Under the stated assumptions are both estimators consistent for ?

Are there conditions under which either estimator is efficient?

Exercise 7.10 In the homoskedastic regression model with and suppose is the OLS estimator of with covariance matrix estimator based on a sample of size . Let be the estimator of . You wish to forecast an out-of-sample value of given that . Thus the available information is the sample, the estimates , the residuals , and the out-of-sample value of the regressors .

Find a point forecast of .

Find an estimator of the variance of this forecast.

Exercise 7.11 Take a regression model with i.i.d. observations with

Let be the OLS estimator of with residuals . Consider the estimators of

Find the asymptotic distribution of as .

Find the asymptotic distribution of as .

How do you use the regression assumption in your answer to (b)?

Exercise 7.12 Consider the model

with both and scalar. Assuming and suppose the parameter of interest is the area under the regression curve (e.g. consumer surplus), which is .

Let be the least squares estimators of so that and let be a standard estimator for .

Given the above, describe an estimator of .

Construct an asymptotic confidence interval for .

Exercise 7.13 Consider an i.i.d. sample where and are scalar. Consider the reverse projection model with and define the parameter of interest as .

Propose an estimator of .

Propose an estimator of .

Find the asymptotic distribution of .

Find an asymptotic standard error for .

Exercise 7.14 Take the model

with both and , and define the parameter .

What is the appropriate estimator for ?

Find the asymptotic distribution of under standard regularity conditions.

Show how to calculate an asymptotic confidence interval for .

Exercise 7.15 Take the linear model with and . Consider the estimator

Find the asymptotic distribution of as .

Exercise 7.16 From an i.i.d. sample of size you randomly take half the observations. You estimate a least squares regression of on using only this sub-sample. Is the estimated slope coefficient consistent for the population projection coefficient? Explain your reasoning.

Exercise 7.17 An economist reports a set of parameter estimates, including the coefficient estimates , and standard errors and . The author writes “The estimates show that is larger than

Write down the formula for an asymptotic 95% confidence interval for , expressed as a function of and , where is the estimated correlation between and .

(b) Can be calculated from the reported information?

(c) Is the author correct? Does the reported information support the author’s claim?

Exercise 7.18 Suppose an economic model suggests

where . You have a random sample .

Describe how to estimate at a given value .

Describe (be specific) an appropriate confidence interval for .

Exercise 7.19 Take the model with and suppose you have observations . (The number of observations is .) You randomly split the sample in half, (each has observations), calculate by least squares on the first sample, and by least squares on the second sample. What is the asymptotic distribution of ?

Exercise 7.20 The variables are a random sample. The parameter is estimated by minimizing the criterion function

That is .

Find an explicit expression for .

What population parameter is estimating? Be explicit about any assumptions you need to impose. Do not make more assumptions than necessary.

Find the probability limit for as .

Find the asymptotic distribution of as .

Exercise 7.21 Take the model

where is a (vector) function of . The sample is with i.i.d. observations. Assume that for all . Suppose you want to forecast given and for an out-of-sample observation . Describe how you would construct a point forecast and a forecast interval for .

Exercise 7.22 Take the model

where is a vector and is scalar. Your goal is to estimate the scalar parameter . You use a two-step estimator:

- Estimate by least squares of on .

- Estimate by least squares of on .

Show that is consistent for .

Find the asymptotic distribution of when

Exercise 7.23 The model is with and . Consider the estimator

Find conditions under which is consistent for as .

Exercise 7.24 The parameter is defined in the model where is independent of , . The observables are where and is random scale measurement error, independent of and . Consider the least squares estimator for .

Find the plim of expressed in terms of and moments of .

Can you find a non-trivial condition under which is consistent for ? (By non-trivial we mean something other than .)

Exercise 7.25 Take the projection model with . For a positive function let . Consider the estimator

Find the probability limit (as ) of . Do you need to add an assumption? Is consistent for ? If not, under what assumption is consistent for ?

Exercise 7.26 Take the regression model

with . Assume that . Consider the infeasible estimator

This is a WLS estimator using the weights .

Find the asymptotic distribution of .

Contrast your result with the asymptotic distribution of infeasible GLS.

Exercise 7.27 The model is with . An econometrician is worried about the impact of some unusually large values of the regressors. The model is thus estimated on the subsample for which for some fixed . Let denote the OLS estimator on this subsample. It equals

Show that .

Find the asymptotic distribution of .

Exercise 7.28 As in Exercise 3.26, use the cps09mar dataset and the subsample of white male Hispanics. Estimate the regression

Report the coefficient estimates and robust standard errors.

Let be the ratio of the return to one year of education to the return to one year of experience for experience . Write as a function of the regression coefficients and variables. Compute from the estimated model.

Write out the formula for the asymptotic standard error for as a function of the covariance matrix for . Compute from the estimated model.

Construct a asymptotic confidence interval for from the estimated model.

Compute the regression function at education and experience . Compute a 95% confidence interval for the regression function at this point.

Consider an out-of-sample individual with 16 years of education and 5 years experience. Construct an forecast interval for their log wage and wage. [To obtain the forecast interval for the wage, apply the exponential function to both endpoints.]
