Introduction

Economists traditionally use the term panel data to refer to data structures consisting of observations on individuals for multiple time periods. Other fields such as statistics typically call this structure longitudinal data. The observed “individuals” can be, for example, people, households, workers, firms, schools, production plants, industries, regions, states, or countries. The distinguishing feature relative to cross-sectional data sets is the presence of multiple observations for each individual. More broadly, panel data methods can be applied to any context with cluster-type dependence.

There are several distinct advantages of panel data relative to cross-section data. One is the possibility of controlling for unobserved time-invariant endogeneity without the use of instrumental variables. A second is the possibility of allowing for broader forms of heterogeneity. A third is modeling dynamic relationships and effects.

There are two broad categories of panel data sets in economic applications: micro panels and macro panels. Micro panels are typically surveys or administrative records on individuals and are characterized by a large number of individuals (often in the 1000’s or higher) and a relatively small number of time periods (often 2 to 20 years). Macro panels are typically national or regional macroeconomic variables and are characterized by a moderate number of individuals (e.g. 7-20) and a moderate number of time periods (20-60 years).

Panel data was once relatively esoteric in applied economic practice. Now, it is a dominant feature of applied research.

A typical maintained assumption for micro panels (which we follow in this chapter) is that the individuals are mutually independent while the observations for a given individual are correlated across time periods. This means that the observations follow a clustered dependence structure. Because of this, current econometric practice is to use cluster-robust covariance matrix estimators when possible. Similar assumptions are often used for macro panels though the assumption of independence across individuals (e.g. countries) is much less compelling.

The application of panel data methods in econometrics started with the pioneering work of Mundlak (1961) and Balestra and Nerlove (1966).

Several excellent monographs and textbooks have been written on panel econometrics, including Arellano (2003), Hsiao (2003), Wooldridge (2010), and Baltagi (2013). This chapter will summarize some of the main themes but for a more in-depth treatment see these references.

One challenge arising in panel data applications is that the computational methods can require meticulous attention to detail. It is therefore advised to use established packages for routine applications. For most panel data applications in economics Stata is the standard package.

Time Indexing and Unbalanced Panels

It is typical to index observations by both the individual $i$ and the time period $t$, thus $Y_{it}$ denotes a variable for individual $i$ in period $t$. We index individuals as $i = 1, \ldots, N$ and time periods as $t = 1, \ldots, T$. Thus $N$ is the number of individuals in the panel and $T$ is the number of time series periods.

Panel data sets can involve data at any time series frequency though the typical application involves annual data. The observations in a data set will be indexed by calendar time which for the case of annual observations is the year. For notational convenience it is customary to denote the time periods as $t = 1, \ldots, T$, so that $t = 1$ is the first time period observed and $T$ is the final time period.

When observations are available on all individuals for the same time periods we say that the panel is balanced. In this case there are an equal number of observations for each individual and the total number of observations is $n = NT$.

When different time periods are available for the individuals in the sample we say that the panel is unbalanced. This is the most common type of panel data set. It does not pose a problem for applications but does make the notation cumbersome and also complicates computer programming.

To illustrate, consider the data set Invest1993 on the textbook webpage. This is a sample of 1962 U.S. firms extracted from Compustat, assembled by Bronwyn Hall, and used in the empirical work in Hall and Hall (1993). In Table 17.1 we display a set of variables from the data set for the first 13 observations. The first variable is the firm code number. The second variable is the year of the observation. These two variables are essential for any panel data analysis. In Table 17.1 you can see that the first firm (#32) is observed for the years 1970 through 1977. The second firm (#209) is observed for 1987 through 1991. You can see that the years vary considerably across the firms so this is an unbalanced panel.

For unbalanced panels the time index $t = 1, \ldots, T$ denotes the full set of time periods. For example, in the data set Invest1993 there are observations for the years 1960 through 1991, so the total number of time periods is $T = 32$. Each individual is observed for a subset of $T_i$ periods. The set of time periods for individual $i$ is denoted as $S_i$ so that individual-specific sums (over time periods) are written as $\sum_{t \in S_i}$.

The observed time periods for a given individual are typically contiguous (for example, in Table 17.1, firm #32 is observed for each year from 1970 through 1977) but in some cases are non-contiguous (if, for example, 1973 was missing for firm #32). The total number of observations in the sample is $n = \sum_{i=1}^{N} T_i$.
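The bookkeeping for an unbalanced panel can be sketched in a few lines of Python. This is only an illustration: the index below is rebuilt from the two firms described in the text (firm #32 for 1970 through 1977, firm #209 for 1987 through 1991), and the variable names `S`, `T_i`, `balanced`, and `contiguous` are our own.

```python
# (firm, year) index for the 13 observations described in the text.
rows = [(32, y) for y in range(1970, 1978)] + [(209, y) for y in range(1987, 1992)]

# S[i] is the set of observed periods for individual i, and T_i[i] = len(S[i]).
S = {}
for firm, year in rows:
    S.setdefault(firm, set()).add(year)

T_i = {firm: len(years) for firm, years in S.items()}
N = len(S)                    # number of individuals
n = sum(T_i.values())         # total observations: n = sum over i of T_i

# Balanced: every individual is observed in exactly the same periods.
balanced = len({frozenset(years) for years in S.values()}) == 1

# Contiguous: an individual's observed years contain no gaps.
contiguous = {f: max(ys) - min(ys) + 1 == len(ys) for f, ys in S.items()}

print(N, n, T_i, balanced, contiguous)
```

Storing the observed periods as a set per individual mirrors the notation for the set of observed periods, so sums over time for one individual become loops over `S[i]`.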

Table 17.1: Observations from Investment Data Set

| Firm Code | Year | $I_{it}$ | $\bar{I}_i$ | $\dot{I}_{it}$ | $Q_{it}$ | $\bar{Q}_i$ | $\dot{Q}_{it}$ | $\hat{e}_{it}$ |
|---|---|---|---|---|---|---|---|---|
| 32 | 1970 | | | | | | | . |
| 32 | 1971 | | | | | | | |
| 32 | 1972 | | | | | | | |
| 32 | 1973 | | | | | | | |
| 32 | 1974 | | | | | | | |
| 32 | 1975 | | | | | | | |
| 32 | 1976 | | | | | | | |
| 32 | 1977 | | | | | | | |
| 209 | 1987 | | | | | | | . |
| 209 | 1988 | | | | | | | |
| 209 | 1989 | | | | | | | |
| 209 | 1990 | | | | | | | |
| 209 | 1991 | | | | | | | |

Notation

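This chapter focuses on panel data regression models whose observations are pairs $(Y_{it}, X_{it})$ where $Y_{it}$ is the dependent variable and $X_{it}$ is a $k$-vector of regressors. These are the observations on individual $i$ for time period $t$.

It will be useful to cluster the observations at the level of the individual. We borrow the notation for clustered observations used earlier in the text to write $\boldsymbol{Y}_i$ as the $T_i \times 1$ stacked observations on $Y_{it}$ for $t \in S_i$, stacked in chronological order. Similarly, we write $\boldsymbol{X}_i$ as the $T_i \times k$ matrix of stacked $X_{it}'$ for $t \in S_i$, stacked in chronological order.

We will also sometimes use matrix notation for the full sample. To do so, let $\boldsymbol{Y} = (\boldsymbol{Y}_1', \ldots, \boldsymbol{Y}_N')'$ denote the $n \times 1$ vector of stacked $\boldsymbol{Y}_i$, and set $\boldsymbol{X} = (\boldsymbol{X}_1', \ldots, \boldsymbol{X}_N')'$ similarly.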

Pooled Regression

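The simplest model in panel regression is pooled regression

$$Y_{it} = X_{it}'\beta + e_{it}, \qquad \mathbb{E}[X_{it} e_{it}] = 0 \tag{17.1}$$

where $\beta$ is a $k \times 1$ coefficient vector and $e_{it}$ is an error. The model can be written at the level of the individual as

$$\boldsymbol{Y}_i = \boldsymbol{X}_i \beta + \boldsymbol{e}_i$$

where $\boldsymbol{e}_i$ is $T_i \times 1$. The equation for the full sample is $\boldsymbol{Y} = \boldsymbol{X}\beta + \boldsymbol{e}$ where $\boldsymbol{e}$ is $n \times 1$.

The standard estimator of $\beta$ in the pooled regression model is least squares, which can be written as

$$\widehat{\beta}_{\text{pool}} = \left(\sum_{i=1}^{N} \sum_{t \in S_i} X_{it} X_{it}'\right)^{-1} \left(\sum_{i=1}^{N} \sum_{t \in S_i} X_{it} Y_{it}\right) = \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\boldsymbol{Y}_i\right) = \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1} \left(\boldsymbol{X}'\boldsymbol{Y}\right).$$

In the context of panel data $\widehat{\beta}_{\text{pool}}$ is called the pooled regression estimator. The vector of residuals for the $i$th individual is $\widehat{\boldsymbol{e}}_i = \boldsymbol{Y}_i - \boldsymbol{X}_i \widehat{\beta}_{\text{pool}}$.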

The pooled regression model is ideally suited for the context where the errors $e_{it}$ satisfy strict mean independence:

$$\mathbb{E}[e_{it} \mid \boldsymbol{X}_i] = 0. \tag{17.2}$$

This occurs when the errors $e_{it}$ are mean independent of all regressors $X_{ij}$ for all time periods $j = 1, \ldots, T$. Strict mean independence is stronger than pairwise mean independence $\mathbb{E}[e_{it} \mid X_{it}] = 0$ as well as projection (17.1). Strict mean independence requires that neither lagged nor future values of $X_{it}$ help to forecast $e_{it}$. It excludes lagged dependent variables (such as $Y_{it-1}$) from $X_{it}$ (otherwise $e_{it}$ would be predictable given $X_{it+1}$). It also requires that $X_{it}$ is exogenous in the sense discussed in Chapter 12.

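We now describe some statistical properties of $\widehat{\beta}_{\text{pool}}$ under (17.2). First, notice that by linearity and the cluster-level notation we can write the estimator as

$$\widehat{\beta}_{\text{pool}} = \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'(\boldsymbol{X}_i\beta + \boldsymbol{e}_i)\right) = \beta + \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\boldsymbol{e}_i\right).$$

Using (17.2)

$$\mathbb{E}\left[\widehat{\beta}_{\text{pool}} \mid \boldsymbol{X}\right] = \beta + \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\,\mathbb{E}[\boldsymbol{e}_i \mid \boldsymbol{X}_i]\right) = \beta$$

so $\widehat{\beta}_{\text{pool}}$ is unbiased for $\beta$.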

Under the additional assumption that the error is serially uncorrelated and homoskedastic the covariance estimator takes a classical form and the classical homoskedastic variance estimator can be used. If the error is heteroskedastic but serially uncorrelated then a heteroskedasticity-robust covariance matrix estimator can be used.

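In general, however, we expect the errors $e_{it}$ to be correlated across time $t$ for a given individual. This does not necessarily violate (17.2) but invalidates classical covariance matrix estimation. The conventional solution is to use a cluster-robust covariance matrix estimator which allows arbitrary within-cluster dependence. Cluster-robust covariance matrix estimators for pooled regression equal

$$\widehat{V}_{\text{pool}} = \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\widehat{\boldsymbol{e}}_i \widehat{\boldsymbol{e}}_i'\boldsymbol{X}_i\right) \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}.$$

As in (4.55) this can be multiplied by a degree-of-freedom adjustment. The adjustment used by the Stata regress command is

$$\widehat{V}_{\text{pool}} = \left(\frac{n-1}{n-k}\right)\left(\frac{N}{N-1}\right) \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\widehat{\boldsymbol{e}}_i \widehat{\boldsymbol{e}}_i'\boldsymbol{X}_i\right) \left(\boldsymbol{X}'\boldsymbol{X}\right)^{-1}.$$

The pooled regression estimator with cluster-robust standard errors can be obtained using the Stata command regress with the option cluster(id), where id indicates the individual.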
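To make the sandwich form concrete, the following Python sketch computes the pooled estimator and its cluster-robust variance for a single regressor without an intercept, so that every matrix reduces to a scalar. The data are made up, and the adjustment factor is written in the form $(n-1)/(n-k) \times N/(N-1)$ as an assumption about the degree-of-freedom correction; it is not a substitute for an established package.

```python
# Toy panel (made-up numbers): individual id -> list of (x, y) observations.
panel = {
    1: [(1.0, 1.2), (2.0, 1.9), (3.0, 3.4)],
    2: [(1.5, 2.5), (2.5, 3.6)],
    3: [(0.5, 0.1), (1.0, 0.7), (2.0, 1.8), (3.0, 2.6)],
}
k = 1                                            # number of regressors
N = len(panel)                                   # number of individuals
n = sum(len(obs) for obs in panel.values())      # total observations

# Pooled least squares: (sum_i X_i'X_i)^{-1} (sum_i X_i'Y_i).
sxx = sum(x * x for obs in panel.values() for x, _ in obs)
sxy = sum(x * y for obs in panel.values() for x, y in obs)
beta_pool = sxy / sxx

# Cluster scores X_i' e_i; the middle of the sandwich sums their squares.
scores = {i: sum(x * (y - x * beta_pool) for x, y in obs) for i, obs in panel.items()}
meat = sum(s * s for s in scores.values())
V_cluster = meat / sxx ** 2

# Degree-of-freedom adjustment (assumed form, see the lead-in).
adj = (n - 1) / (n - k) * N / (N - 1)
V_adj = adj * V_cluster

print(beta_pool, V_cluster ** 0.5, V_adj ** 0.5)
```

The residuals are summed within each individual before squaring, which is what allows arbitrary correlation across time periods for the same individual.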

When strict mean independence (17.2) fails the pooled least squares estimator $\widehat{\beta}_{\text{pool}}$ is not necessarily consistent for $\beta$. Since strict mean independence is a strong and undesirable restriction it is typically preferred to adopt one of the alternative estimators described in the following sections.

To illustrate the pooled regression estimator consider the data set Invest1993 described earlier. We consider a simple investment model

$$I_{it} = \beta_1 Q_{it-1} + \beta_2 D_{it-1} + \beta_3 CF_{it-1} + \beta_4 T_i + e_{it} \tag{17.3}$$

where $I$ is investment/assets, $Q$ is market value/assets, $D$ is long term debt/assets, $CF$ is cash flow/assets, and $T$ is a dummy variable indicating if the corporation’s stock is traded on the NYSE or AMEX. The regression also includes 19 dummy variables indicating an industry code. The theory of investment suggests that $\beta_1 > 0$ while $\beta_2 = \beta_3 = 0$. Theories of liquidity constraints suggest that $\beta_2 < 0$ and $\beta_3 > 0$. We will be using this example throughout this chapter. The values of $I_{it}$ and $Q_{it}$ for the first 13 observations are also displayed in Table 17.1.

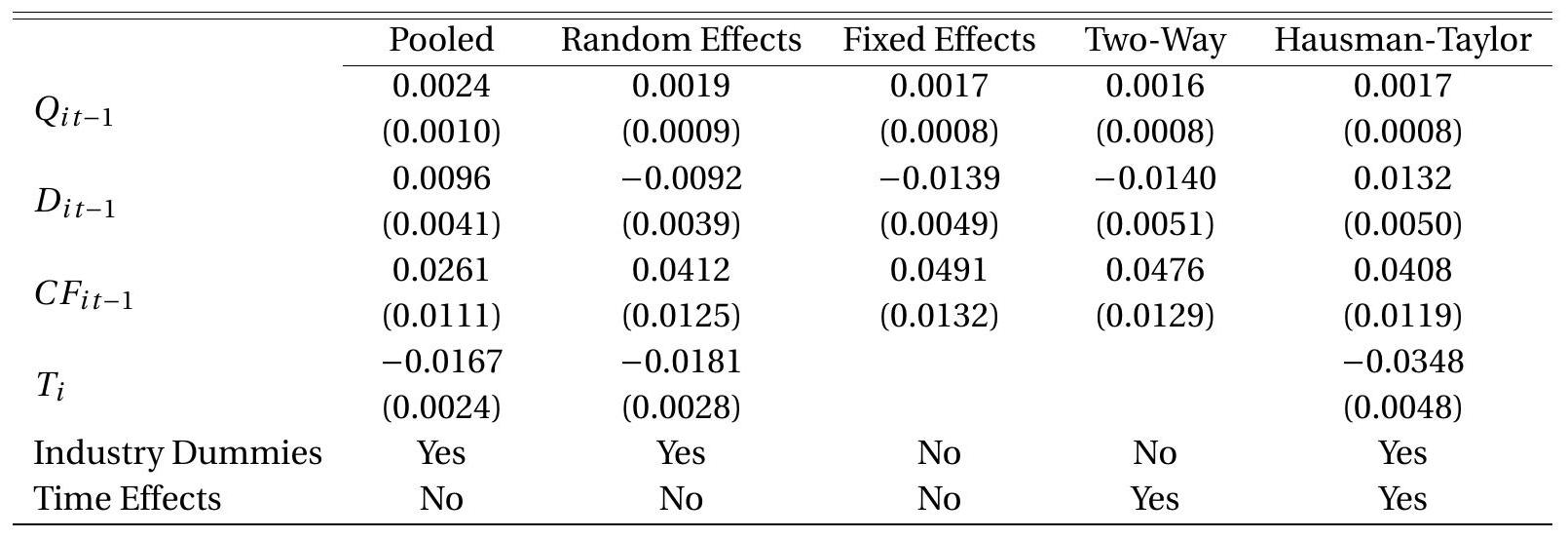

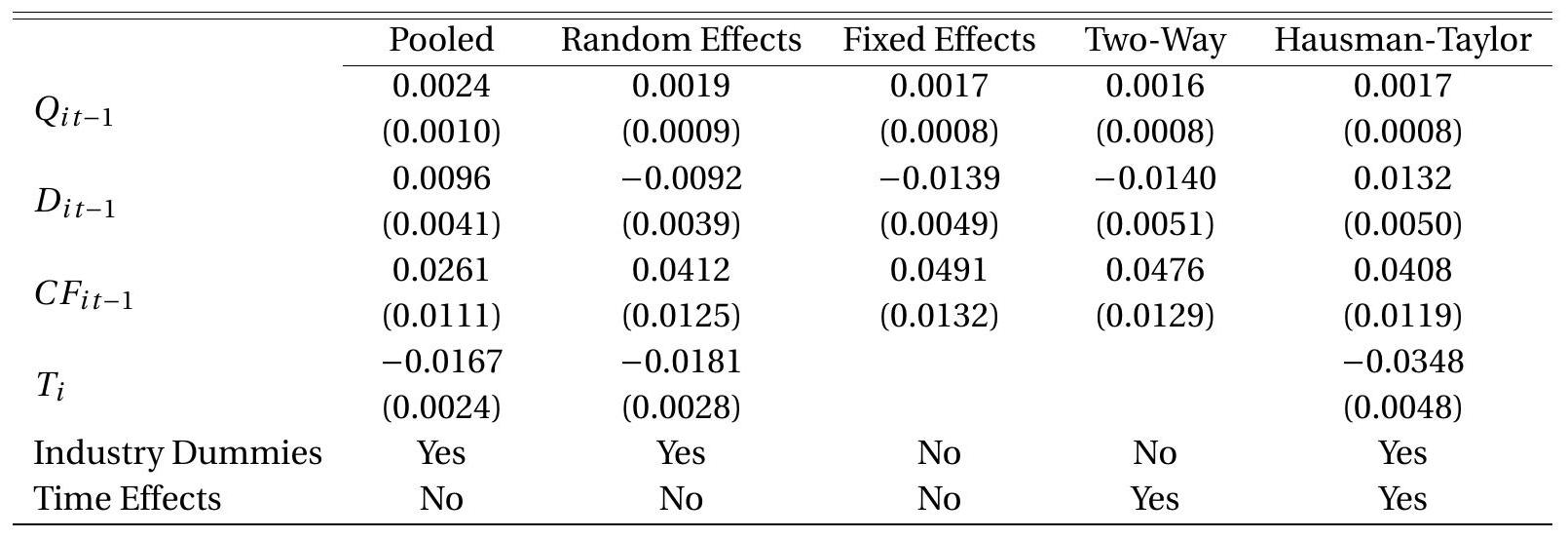

In Table 17.2 we present the pooled regression estimates of (17.3) in the first column with cluster-robust standard errors.

One-Way Error Component Model

One approach to panel data regression is to model the correlation structure of the regression error $e_{it}$. The most common choice is an error-components structure. The simplest takes the form

$$e_{it} = u_i + \varepsilon_{it} \tag{17.4}$$

where $u_i$ is an individual-specific effect and $\varepsilon_{it}$ are idiosyncratic (i.i.d.) errors. This is known as a one-way error component model.

Table 17.2: Estimates of Investment Equation

Cluster-robust standard errors in parentheses.

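In vector notation we can write $\boldsymbol{e}_i = \boldsymbol{1}_i u_i + \boldsymbol{\varepsilon}_i$ where $\boldsymbol{1}_i$ is a $T_i \times 1$ vector of 1’s.

The one-way error component regression model is

$$Y_{it} = X_{it}'\beta + u_i + \varepsilon_{it}$$

written at the level of the observation, or $\boldsymbol{Y}_i = \boldsymbol{X}_i\beta + \boldsymbol{1}_i u_i + \boldsymbol{\varepsilon}_i$ written at the level of the individual.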

To illustrate why an error-component structure such as (17.4) might be appropriate, examine Table 17.1. In the final column we have included the pooled regression residuals $\hat{e}_{it}$ for these observations. (There is no residual for the first year for each firm due to the lack of lagged regressors for this observation.) What is quite striking is that the residuals for the second firm (#209) are all negative and cluster around a common value. While informal, this suggests that it may be appropriate to model these errors using (17.4), expecting that firm #209 has a large negative value for its individual effect $u_i$.

Random Effects

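The random effects model assumes that the errors $u_i$ and $\varepsilon_{it}$ in (17.4) are conditionally mean zero, uncorrelated, and homoskedastic.

Assumption 17.1 Random Effects. Model (17.4) holds with

$$\mathbb{E}[\varepsilon_{it} \mid \boldsymbol{X}_i] = 0 \tag{17.5}$$

$$\mathbb{E}[\varepsilon_{it}^2 \mid \boldsymbol{X}_i] = \sigma_\varepsilon^2 \tag{17.6}$$

$$\mathbb{E}[\varepsilon_{ij}\varepsilon_{it} \mid \boldsymbol{X}_i] = 0 \tag{17.7}$$

$$\mathbb{E}[u_i \mid \boldsymbol{X}_i] = 0 \tag{17.8}$$

$$\mathbb{E}[u_i^2 \mid \boldsymbol{X}_i] = \sigma_u^2 \tag{17.9}$$

$$\mathbb{E}[u_i \varepsilon_{it} \mid \boldsymbol{X}_i] = 0 \tag{17.10}$$

where (17.7) holds for all $j \ne t$.

Assumption 17.1 is known as a random effects specification. It implies that the vector of errors $\boldsymbol{e}_i$ for individual $i$ has the covariance structure

$$\mathbb{E}[\boldsymbol{e}_i \mid \boldsymbol{X}_i] = 0$$

$$\mathbb{E}[\boldsymbol{e}_i \boldsymbol{e}_i' \mid \boldsymbol{X}_i] = \boldsymbol{1}_i \boldsymbol{1}_i' \sigma_u^2 + \boldsymbol{I}_i \sigma_\varepsilon^2 = \sigma_\varepsilon^2 \Omega_i,$$

say, where $\boldsymbol{I}_i$ is an identity matrix of dimension $T_i$ and $\Omega_i = \boldsymbol{I}_i + \boldsymbol{1}_i \boldsymbol{1}_i' \sigma_u^2 / \sigma_\varepsilon^2$. The matrix $\Omega_i$ depends on $i$ since its dimension depends on the number of observed time periods $T_i$.

Assumptions 17.1.1 and 17.1.4 state that the idiosyncratic error $\varepsilon_{it}$ and individual-specific error $u_i$ are strictly mean independent so the combined error $e_{it}$ is strictly mean independent as well.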

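The random effects model is equivalent to an equi-correlation model. That is, suppose that the error $e_{it}$ satisfies

$$\mathbb{E}[e_{it} \mid \boldsymbol{X}_i] = 0, \qquad \mathbb{E}[e_{it}^2 \mid \boldsymbol{X}_i] = \sigma^2,$$

and

$$\mathbb{E}[e_{ij} e_{it} \mid \boldsymbol{X}_i] = \rho \sigma^2$$

for $j \ne t$. These conditions imply that $e_{it}$ can be written as (17.4) with the components satisfying Assumption 17.1 with $\sigma_u^2 = \rho\sigma^2$ and $\sigma_\varepsilon^2 = (1-\rho)\sigma^2$. Thus random effects and equi-correlation are identical.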
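The mapping between the two parameterizations can be checked numerically. The sketch below builds the covariance matrix implied by a one-way error component structure for illustrative (assumed) variance values, reads off the common variance and correlation, and maps them back to the variance components.

```python
# Assumed variance components (illustrative values only) and panel length.
sigma2_u, sigma2_eps, T = 0.6, 0.4, 4

# Covariance of e_i = 1*u_i + eps_i: sigma2_u in every entry,
# plus sigma2_eps added on the diagonal.
cov = [[sigma2_u + (sigma2_eps if s == t else 0.0) for t in range(T)]
       for s in range(T)]

sigma2 = cov[0][0]            # common variance of e_it
rho = cov[0][1] / sigma2      # common correlation across periods

# Mapping back from the equi-correlation parameters (sigma2, rho).
back_u = rho * sigma2
back_eps = (1 - rho) * sigma2

print(sigma2, rho, back_u, back_eps)
```

Every off-diagonal entry equals the variance of the individual effect, which is why the implied correlation is the same for every pair of periods.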

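The random effects regression model is

$$Y_{it} = X_{it}'\beta + u_i + \varepsilon_{it}$$

or $\boldsymbol{Y}_i = \boldsymbol{X}_i\beta + \boldsymbol{1}_i u_i + \boldsymbol{\varepsilon}_i$ where the errors satisfy Assumption 17.1.

Given the error structure the natural estimator for $\beta$ is GLS. Suppose $\sigma_u^2$ and $\sigma_\varepsilon^2$ are known. The GLS estimator of $\beta$ is

$$\widehat{\beta}_{\text{gls}} = \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\boldsymbol{Y}_i\right).$$

A feasible GLS estimator replaces the unknown $\sigma_u^2$ and $\sigma_\varepsilon^2$ with estimators. See Section .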

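We now describe some statistical properties of the estimator under Assumption 17.1. By linearity

$$\widehat{\beta}_{\text{gls}} - \beta = \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\boldsymbol{e}_i\right).$$

Thus

$$\mathbb{E}\left[\widehat{\beta}_{\text{gls}} - \beta \mid \boldsymbol{X}\right] = \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\,\mathbb{E}[\boldsymbol{e}_i \mid \boldsymbol{X}_i]\right) = 0.$$

Thus $\widehat{\beta}_{\text{gls}}$ is conditionally unbiased for $\beta$. The conditional variance of $\widehat{\beta}_{\text{gls}}$ is

$$V_{\text{gls}} = \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\boldsymbol{X}_i\right)^{-1} \sigma_\varepsilon^2. \tag{17.11}$$

Now let’s compare $\widehat{\beta}_{\text{gls}}$ with the pooled estimator $\widehat{\beta}_{\text{pool}}$. Under Assumption 17.1 the latter is also conditionally unbiased for $\beta$ and has conditional variance

$$V_{\text{pool}} = \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i\boldsymbol{X}_i\right) \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\boldsymbol{X}_i\right)^{-1} \sigma_\varepsilon^2.$$

Using the algebra of the Gauss-Markov Theorem we deduce that

$$V_{\text{gls}} \le V_{\text{pool}}$$

and thus the random effects estimator is more efficient than the pooled estimator under Assumption 17.1. (See Exercise 17.1.) The two variance matrices are identical when there is no individual-specific effect (when $\sigma_u^2 = 0$) for then $V_{\text{gls}} = V_{\text{pool}} = (\boldsymbol{X}'\boldsymbol{X})^{-1}\sigma_\varepsilon^2$.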
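For a single regressor the GLS formulas reduce to scalars, which makes the efficiency comparison easy to verify. The sketch below assumes known variance components and uses the closed-form inverse of the error covariance (identity minus a multiple of the all-ones matrix, a standard Sherman-Morrison result); the function name `re_gls` and the data are hypothetical.

```python
def re_gls(panel, sigma2_u, sigma2_eps):
    """Random effects GLS and pooled OLS for one regressor (no intercept).

    Returns (beta_gls, beta_pool, V_gls, V_pool) under the random effects
    assumptions with known variance components.
    """
    a = b = sxx = sxy = mid = 0.0
    c = sigma2_u / sigma2_eps                 # Omega_i = I + c * 1 1'
    for obs in panel.values():
        T = len(obs)
        sx = sum(x for x, _ in obs)
        sy = sum(y for _, y in obs)
        xx = sum(x * x for x, _ in obs)
        xy = sum(x * y for x, y in obs)
        # Omega_i^{-1} = I - theta * 1 1', theta = sigma2_u / (sigma2_eps + T sigma2_u)
        theta = sigma2_u / (sigma2_eps + T * sigma2_u)
        a += xx - theta * sx * sx             # X_i' Omega_i^{-1} X_i
        b += xy - theta * sx * sy             # X_i' Omega_i^{-1} Y_i
        mid += xx + c * sx * sx               # X_i' Omega_i X_i
        sxx += xx
        sxy += xy
    return b / a, sxy / sxx, sigma2_eps / a, sigma2_eps * mid / sxx ** 2

# Made-up unbalanced panel: id -> list of (x, y).
panel = {
    1: [(1.0, 1.4), (2.0, 2.1), (3.0, 3.3)],
    2: [(1.5, 0.9), (2.5, 2.2)],
    3: [(0.5, 1.0), (1.0, 1.6), (2.0, 2.4), (3.0, 3.5)],
}
b_gls, b_pool, V_gls, V_pool = re_gls(panel, sigma2_u=0.5, sigma2_eps=1.0)
b0_gls, b0_pool, V0_gls, V0_pool = re_gls(panel, sigma2_u=0.0, sigma2_eps=1.0)
print(b_gls, b_pool, V_gls, V_pool)
```

With the individual-effect variance set to zero the GLS weights collapse to the identity, so the second call returns identical GLS and pooled results.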

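If the random effects model is viewed as a useful approximation but not literally true then we may consider a cluster-robust covariance matrix estimator such as

$$\widehat{V}_{\text{gls}} = \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\boldsymbol{X}_i\right)^{-1} \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\widehat{\boldsymbol{e}}_i\widehat{\boldsymbol{e}}_i'\Omega_i^{-1}\boldsymbol{X}_i\right) \left(\sum_{i=1}^{N} \boldsymbol{X}_i'\Omega_i^{-1}\boldsymbol{X}_i\right)^{-1} \tag{17.14}$$

where $\widehat{\boldsymbol{e}}_i = \boldsymbol{Y}_i - \boldsymbol{X}_i\widehat{\beta}_{\text{gls}}$. This may be re-scaled by a degree of freedom adjustment if desired.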

The random effects estimator can be obtained using the Stata command xtreg. The default covariance matrix estimator is (17.11). For the cluster-robust covariance matrix estimator (17.14) use the command xtreg vce(robust). (The xtset command must be used first to declare the group identifier. For example, cusip is the group identifier in Table 17.1.)

To illustrate, in the second column of Table 17.2 we present the random effect regression estimates of the investment model (17.3) with cluster-robust standard errors (17.14). The point estimates are reasonably different from the pooled regression estimator. The coefficient on debt switches from positive to negative (the latter consistent with theories of liquidity constraints) and the coefficient on cash flow increases significantly in magnitude. These changes appear to be greater in magnitude than would be expected if Assumption 17.1 were correct. In the next section we consider a less restrictive specification.

Fixed Effect Model

Consider the one-way error component regression model

$$Y_{it} = X_{it}'\beta + u_i + \varepsilon_{it} \tag{17.15}$$

or

$$\boldsymbol{Y}_i = \boldsymbol{X}_i\beta + \boldsymbol{1}_i u_i + \boldsymbol{\varepsilon}_i. \tag{17.16}$$

In many applications it is useful to interpret the individual-specific effect $u_i$ as a time-invariant unobserved missing variable. For example, in a wage regression $u_i$ may be the unobserved ability of individual $i$. In the investment model (17.3) $u_i$ may be a firm-specific productivity factor.

When $u_i$ is interpreted as an omitted variable it is natural to expect it to be correlated with the regressors $X_{it}$. This is especially the case when $X_{it}$ includes choice variables.

To illustrate, consider the entries in Table 17.1. The final column displays the pooled regression residuals $\hat{e}_{it}$ for the first 13 observations which we interpret as estimates of the error $e_{it} = u_i + \varepsilon_{it}$. As described before, what is particularly striking about the residuals is that they are all strongly negative for firm #209 and cluster around a common value. We can interpret this value as an estimate of $u_i$ for this firm. Examining the values of the regressor $Q_{it}$ for the two firms we can see that firm #209 has very large values (in all time periods) for $Q_{it}$. (The average value $\bar{Q}_i$ for the two firms appears in the seventh column.) Thus it appears (though we are only looking at two observations) that $u_i$ and $Q_{it}$ are correlated. It is not reasonable to infer too much from these limited observations, but the relevance is that such correlation violates strict mean independence.

In the econometrics literature if the stochastic structure of $u_i$ is treated as unknown and possibly correlated with $X_{it}$ then $u_i$ is called a fixed effect.

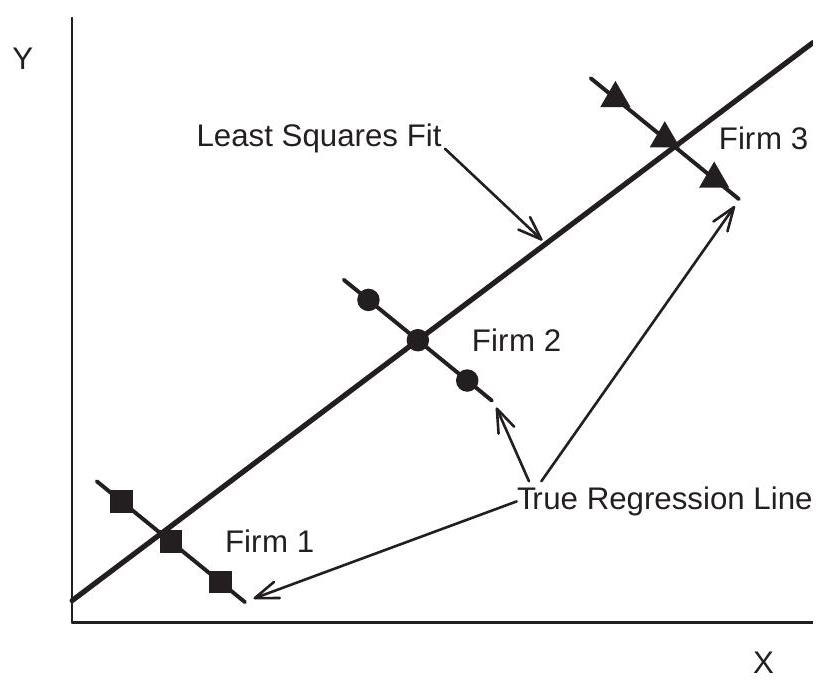

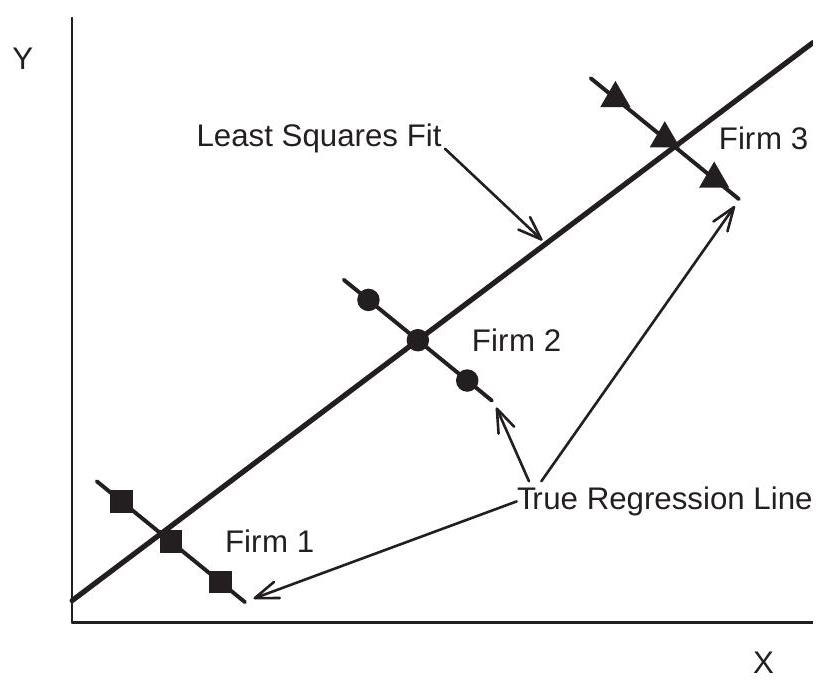

Correlation between $u_i$ and $X_{it}$ will cause both pooled and random effect estimators to be biased. This is due to the classic problems of omitted variables bias and endogeneity. To see this in a generated example view Figure 17.1. This shows a scatter plot of three observations from each of three firms. The true model is $Y_{it} = 9 - X_{it} + u_i$. (The true slope coefficient is $-1$.) The variables $u_i$ and $X_{it}$ are highly correlated so the fitted pooled regression line through the nine observations has a slope close to $+1$. (The random effects estimator is identical.) The apparent positive relationship between $Y$ and $X$ is driven entirely by the positive correlation between $X_{it}$ and $u_i$. Conditional on $u_i$, however, the slope is $-1$. Thus regression techniques which do not control for $u_i$ will produce biased and inconsistent estimators.

Figure 17.1: Scatter Plot and Pooled Regression Line
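A small numerical version of this kind of example can be generated directly. The sketch below uses hypothetical data (not the data behind Figure 17.1): three firms with three observations each, an assumed model $Y_{it} = 9 - X_{it} + u_i$, and individual effects chosen to rise with the firm's level of $X$. The pooled slope comes out positive while the slope within firms is $-1$.

```python
# Hypothetical data: individual effects rise with the firm's level of X.
u = {1: 0.0, 2: 6.0, 3: 12.0}
X = {1: [1.0, 2.0, 3.0], 2: [4.0, 5.0, 6.0], 3: [7.0, 8.0, 9.0]}
Y = {i: [9.0 - x + u[i] for x in X[i]] for i in X}   # assumed true slope is -1

def slope(xs, ys):
    """Least squares slope of ys on xs with an intercept."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

# Pooled regression through all nine observations.
all_x = [x for i in X for x in X[i]]
all_y = [y for i in X for y in Y[i]]
pooled_slope = slope(all_x, all_y)

# Same regression after subtracting each firm's own means.
xd = [x - sum(X[i]) / len(X[i]) for i in X for x in X[i]]
yd = [y - sum(Y[i]) / len(Y[i]) for i in X for y in Y[i]]
within_slope = sum(a * b for a, b in zip(xd, yd)) / sum(a * a for a in xd)

print(pooled_slope, within_slope)
```

The pooled line picks up the between-firm association of $X$ with the individual effect, while the demeaned data only use variation inside each firm.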

The presence of the unstructured individual effect $u_i$ means that it is not possible to identify $\beta$ under a simple projection assumption such as $\mathbb{E}[X_{it}\varepsilon_{it}] = 0$. It turns out that a sufficient condition for identification is the following.

Definition 17.1 The regressor $X_{it}$ is strictly exogenous for the error $\varepsilon_{it}$ if

$$\mathbb{E}[X_{is}\varepsilon_{it}] = 0 \tag{17.17}$$

for all $s = 1, \ldots, T$.

Strict exogeneity is a strong projection condition, meaning that if $X_{is}$ for any $s \ne t$ is added to (17.15) it will have a zero coefficient. Strict exogeneity is a projection analog of strict mean independence

$$\mathbb{E}[\varepsilon_{it} \mid \boldsymbol{X}_i] = 0. \tag{17.18}$$

(17.18) implies (17.17) but not conversely. While (17.17) is sufficient for identification and asymptotic theory we will also use the stronger condition (17.18) for finite sample analysis.

While (17.17) and (17.18) are strong assumptions they are much weaker than (17.2) or Assumption 17.1, which require that the individual effect $u_i$ is also strictly mean independent. In contrast, (17.17) and (17.18) make no assumptions about $u_i$.

Strict exogeneity (17.17) is typically inappropriate in dynamic models. In Section we discuss estimation under the weaker assumption of predetermined regressors.

Within Transformation

In the previous section we showed that if $u_i$ and $X_{it}$ are correlated then pooled and random-effects estimators will be biased and inconsistent. If we leave the relationship between $u_i$ and $X_{it}$ fully unstructured then the only way to consistently estimate the coefficient $\beta$ is by an estimator which is invariant to $u_i$. This can be achieved by transformations which eliminate $u_i$.

One such transformation is the within transformation. In this section we describe this transformation in detail.

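Define the mean of a variable for a given individual as

$$\bar{Y}_i = \frac{1}{T_i} \sum_{t \in S_i} Y_{it}.$$

We call this the individual-specific mean since it is the mean of a given individual. Alternatively, some authors call this the time-average or time-mean since it is the average over the time periods.

Subtracting the individual-specific mean from the variable we obtain the deviations

$$\dot{Y}_{it} = Y_{it} - \bar{Y}_i.$$

This is known as the within transformation. We also refer to $\dot{Y}_{it}$ as the demeaned values or deviations from individual means. Some authors refer to $\dot{Y}_{it}$ as deviations from time means. What is important is that the demeaning has occurred at the individual level.

Some algebra may also be useful. We can write the individual-specific mean as $\bar{Y}_i = (\boldsymbol{1}_i'\boldsymbol{1}_i)^{-1}\boldsymbol{1}_i'\boldsymbol{Y}_i$. Stacking the observations for individual $i$ we can write the within transformation using the notation

$$\dot{\boldsymbol{Y}}_i = \boldsymbol{Y}_i - \boldsymbol{1}_i\bar{Y}_i = \boldsymbol{Y}_i - \boldsymbol{1}_i(\boldsymbol{1}_i'\boldsymbol{1}_i)^{-1}\boldsymbol{1}_i'\boldsymbol{Y}_i = \boldsymbol{M}_i\boldsymbol{Y}_i \tag{17.19}$$

where $\boldsymbol{M}_i = \boldsymbol{I}_i - \boldsymbol{1}_i(\boldsymbol{1}_i'\boldsymbol{1}_i)^{-1}\boldsymbol{1}_i'$ is the individual-specific demeaning operator. Notice that $\boldsymbol{M}_i$ is an idempotent matrix.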

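Similarly for the regressors we define the individual-specific means and demeaned values:

$$\bar{X}_i = \frac{1}{T_i}\sum_{t \in S_i} X_{it}, \qquad \dot{X}_{it} = X_{it} - \bar{X}_i, \qquad \dot{\boldsymbol{X}}_i = \boldsymbol{M}_i\boldsymbol{X}_i.$$

We illustrate demeaning in Table 17.1. In the fourth and seventh columns we display the firm-specific means $\bar{I}_i$ and $\bar{Q}_i$ and in the fifth and eighth columns the demeaned values $\dot{I}_{it}$ and $\dot{Q}_{it}$.

We can also define the full-sample within operator. Define $\boldsymbol{D} = \operatorname{diag}\{\boldsymbol{1}_1, \ldots, \boldsymbol{1}_N\}$ and $\boldsymbol{M}_D = \boldsymbol{I}_n - \boldsymbol{D}(\boldsymbol{D}'\boldsymbol{D})^{-1}\boldsymbol{D}'$. Note that $\boldsymbol{M}_D = \operatorname{diag}\{\boldsymbol{M}_1, \ldots, \boldsymbol{M}_N\}$. Thus

$$\boldsymbol{M}_D\boldsymbol{Y} = \dot{\boldsymbol{Y}} = \begin{pmatrix} \dot{\boldsymbol{Y}}_1 \\ \vdots \\ \dot{\boldsymbol{Y}}_N \end{pmatrix}, \qquad \boldsymbol{M}_D\boldsymbol{X} = \dot{\boldsymbol{X}} = \begin{pmatrix} \dot{\boldsymbol{X}}_1 \\ \vdots \\ \dot{\boldsymbol{X}}_N \end{pmatrix}.$$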

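Now apply these operations to equation (17.15). Taking individual-specific averages we obtain

$$\bar{Y}_i = \bar{X}_i'\beta + u_i + \bar{\varepsilon}_i$$

where $\bar{\varepsilon}_i = \frac{1}{T_i}\sum_{t \in S_i}\varepsilon_{it}$. Subtracting from (17.15) we obtain

$$\dot{Y}_{it} = \dot{X}_{it}'\beta + \dot{\varepsilon}_{it} \tag{17.21}$$

where $\dot{\varepsilon}_{it} = \varepsilon_{it} - \bar{\varepsilon}_i$. The individual effect $u_i$ has been eliminated!

We can alternatively write this in vector notation. Applying the demeaning operator $\boldsymbol{M}_i$ to (17.16) we obtain

$$\dot{\boldsymbol{Y}}_i = \dot{\boldsymbol{X}}_i\beta + \dot{\boldsymbol{\varepsilon}}_i. \tag{17.22}$$

The individual effect $u_i$ is eliminated because $\boldsymbol{M}_i\boldsymbol{1}_i = 0$. Equation (17.22) is a vector version of (17.21).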

The equation (17.21) is a linear equation in the transformed (demeaned) variables. As desired the individual effect $u_i$ has been eliminated. Consequently estimators constructed from (17.21) (or equivalently (17.22)) will be invariant to the values of $u_i$. This means that the endogeneity bias described in the previous section will be eliminated.

Another consequence, however, is that all time-invariant regressors are also eliminated. That is, if the original model (17.15) had included any regressors $X_{it} = X_i$ which are constant over time for each individual then for these regressors the demeaned values are identically 0. What this means is that if equation (17.21) is used to estimate $\beta$ it will be impossible to estimate (or identify) a coefficient on any regressor which is time invariant. This is not a consequence of the estimation method but rather a consequence of the model assumptions. In other words, if the individual effect $u_i$ has no known structure then it is impossible to disentangle the effect of any time-invariant regressor $X_i$. The two have observationally equivalent effects and cannot be separately identified.

The within transformation can greatly reduce the variance of the regressors. This can be seen in Table 17.1 where you can see that the variation between the elements of the transformed variables $\dot{I}_{it}$ and $\dot{Q}_{it}$ is less than that of the untransformed variables, as much of the variation is captured by the firm-specific means.

It is not typically necessary to program the within transformation directly, but if desired the following Stata commands do so.

    * x is the original variable
    * id is the group identifier
    * xdot is the within-transformed variable
    egen xmean = mean(x), by(id)
    gen xdot = x - xmean
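For readers working outside Stata, the same steps can be sketched in plain Python. The helper `within` and the toy data below are hypothetical. The example also checks two properties discussed above: demeaning twice changes nothing (the operator is idempotent), and a time-invariant regressor is reduced to zeros.

```python
def within(values, ids):
    """Subtract each individual's mean from that individual's observations."""
    sums, counts = {}, {}
    for v, i in zip(values, ids):
        sums[i] = sums.get(i, 0.0) + v
        counts[i] = counts.get(i, 0) + 1
    return [v - sums[i] / counts[i] for v, i in zip(values, ids)]

ids = [1, 1, 1, 2, 2]                  # group identifier (unbalanced panel)
x = [2.0, 4.0, 6.0, 10.0, 14.0]        # time-varying variable
z = [5.0, 5.0, 5.0, 8.0, 8.0]          # time-invariant variable

xdot = within(x, ids)                  # deviations from individual means
zdot = within(z, ids)                  # identically zero
xdot_twice = within(xdot, ids)         # demeaning again leaves xdot unchanged

print(xdot, zdot, xdot_twice)
```

Accumulating sums and counts per identifier handles unbalanced panels without any special casing.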

Fixed Effects Estimator

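Consider least squares applied to the demeaned equation (17.21) or equivalently (17.22). This is

$$\widehat{\beta}_{\text{fe}} = \left(\sum_{i=1}^{N}\sum_{t \in S_i}\dot{X}_{it}\dot{X}_{it}'\right)^{-1}\left(\sum_{i=1}^{N}\sum_{t \in S_i}\dot{X}_{it}\dot{Y}_{it}\right) = \left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\dot{\boldsymbol{X}}_i\right)^{-1}\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\dot{\boldsymbol{Y}}_i\right) = \left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{M}_i\boldsymbol{X}_i\right)^{-1}\left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{M}_i\boldsymbol{Y}_i\right).$$

This is known as the fixed-effects or within estimator of $\beta$. It is called the fixed-effects estimator because it is appropriate for the fixed effects model (17.15). It is called the within estimator because it is based on the variation of the data within each individual.

The above definition implicitly assumes that the matrix $\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\dot{\boldsymbol{X}}_i$ is full rank. This requires that all components of $X_{it}$ have time variation for at least some individuals in the sample.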

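The fixed effects residuals are

$$\widehat{\varepsilon}_{it} = \dot{Y}_{it} - \dot{X}_{it}'\widehat{\beta}_{\text{fe}}, \qquad \widehat{\boldsymbol{\varepsilon}}_i = \dot{\boldsymbol{Y}}_i - \dot{\boldsymbol{X}}_i\widehat{\beta}_{\text{fe}}. \tag{17.23}$$

Let us describe some of the statistical properties of the estimator under strict mean independence (17.18). By linearity and the fact $\boldsymbol{M}_i\boldsymbol{1}_i = 0$, we can write

$$\widehat{\beta}_{\text{fe}} - \beta = \left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{M}_i\boldsymbol{X}_i\right)^{-1}\left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{M}_i\boldsymbol{\varepsilon}_i\right).$$

Then (17.18) implies

$$\mathbb{E}\left[\widehat{\beta}_{\text{fe}} - \beta \mid \boldsymbol{X}\right] = \left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{M}_i\boldsymbol{X}_i\right)^{-1}\left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{M}_i\,\mathbb{E}[\boldsymbol{\varepsilon}_i \mid \boldsymbol{X}_i]\right) = 0.$$

Thus $\widehat{\beta}_{\text{fe}}$ is unbiased for $\beta$ under (17.18).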

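Let $\Sigma_i = \mathbb{E}[\boldsymbol{\varepsilon}_i\boldsymbol{\varepsilon}_i' \mid \boldsymbol{X}_i]$ denote the $T_i \times T_i$ conditional covariance matrix of the idiosyncratic errors. The variance of $\widehat{\beta}_{\text{fe}}$ is

$$V_{\text{fe}} = \operatorname{var}\left[\widehat{\beta}_{\text{fe}} \mid \boldsymbol{X}\right] = \left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\dot{\boldsymbol{X}}_i\right)^{-1}\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\Sigma_i\dot{\boldsymbol{X}}_i\right)\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\dot{\boldsymbol{X}}_i\right)^{-1}. \tag{17.24}$$

This expression simplifies when the idiosyncratic errors are homoskedastic and serially uncorrelated:

$$\mathbb{E}[\varepsilon_{it}^2 \mid \boldsymbol{X}_i] = \sigma_\varepsilon^2 \tag{17.25}$$

$$\mathbb{E}[\varepsilon_{ij}\varepsilon_{it} \mid \boldsymbol{X}_i] = 0 \tag{17.26}$$

for all $j \ne t$. In this case, $\Sigma_i = \boldsymbol{I}_i\sigma_\varepsilon^2$ and (17.24) simplifies to

$$V_{\text{fe}}^0 = \sigma_\varepsilon^2\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\dot{\boldsymbol{X}}_i\right)^{-1}. \tag{17.27}$$

It is instructive to compare the variances of the fixed-effects and pooled estimators under (17.25)-(17.26) and the assumption that there is no individual-specific effect ($u_i = 0$). In this case we see that

$$V_{\text{fe}}^0 = \sigma_\varepsilon^2\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\dot{\boldsymbol{X}}_i\right)^{-1} \ge \sigma_\varepsilon^2\left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{X}_i\right)^{-1} = V_{\text{pool}}.$$

The inequality holds since the demeaned variables $\dot{\boldsymbol{X}}_i$ have reduced variation relative to the original observations $\boldsymbol{X}_i$. (See Exercise 17.28.) This shows the cost of using fixed effects relative to pooled estimation. The estimation variance increases due to reduced variation in the regressors. This reduction in efficiency is a necessary by-product of the robustness of the estimator to the individual effects $u_i$.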
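Both the robustness and its cost can be seen in a scalar sketch. Using made-up data and a hypothetical helper `fe_fit`, the code below shows that adding an arbitrary constant to every observation of an individual leaves the within estimate unchanged, and that the sum of squared demeaned regressors is smaller than the raw sum of squares, so the classical variance is larger.

```python
def fe_fit(panel):
    """Within estimator for one regressor; also returns the sum of squared
    demeaned regressor values."""
    num = den = 0.0
    for obs in panel.values():
        mx = sum(x for x, _ in obs) / len(obs)
        my = sum(y for _, y in obs) / len(obs)
        num += sum((x - mx) * (y - my) for x, y in obs)
        den += sum((x - mx) ** 2 for x, _ in obs)
    return num / den, den

# Made-up panel: id -> list of (x, y).
panel = {1: [(1.0, 2.1), (2.0, 2.9), (4.0, 5.2)], 2: [(3.0, 1.0), (5.0, 3.1)]}

# Add a different constant to each individual's y (a change in u_i).
shifted = {i: [(x, y + 100.0 * i) for x, y in obs] for i, obs in panel.items()}

beta_fe, sxx_within = fe_fit(panel)
beta_fe_shifted, _ = fe_fit(shifted)

# Classical variances with an assumed error variance of 1 and no individual effect.
sxx_pooled = sum(x * x for obs in panel.values() for x, _ in obs)
sigma2 = 1.0
V_fe0 = sigma2 / sxx_within
V_pool = sigma2 / sxx_pooled

print(beta_fe, beta_fe_shifted, V_fe0, V_pool)
```

The gap between the two variances is driven by the squared individual means, which the within transformation removes from the regressor variation.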

Differenced Estimator

The within transformation is not the only transformation which eliminates the individual-specific effect. Another important transformation which does the same is first-differencing.

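The first-differencing transformation is $\Delta Y_{it} = Y_{it} - Y_{it-1}$. This can be applied to all but the first observation (which is essentially lost). At the level of the individual this can be written as $\Delta\boldsymbol{Y}_i = \boldsymbol{D}_i\boldsymbol{Y}_i$ where $\boldsymbol{D}_i$ is the $(T_i - 1) \times T_i$ matrix differencing operator

$$\boldsymbol{D}_i = \begin{bmatrix} -1 & 1 & 0 & \cdots & 0 & 0 \\ 0 & -1 & 1 & \cdots & 0 & 0 \\ \vdots & & & \ddots & & \vdots \\ 0 & 0 & 0 & \cdots & -1 & 1 \end{bmatrix}.$$

Applying the transformation to (17.15) or (17.16) we obtain $\Delta Y_{it} = \Delta X_{it}'\beta + \Delta\varepsilon_{it}$ or

$$\Delta\boldsymbol{Y}_i = \Delta\boldsymbol{X}_i\beta + \boldsymbol{D}_i\boldsymbol{\varepsilon}_i. \tag{17.29}$$

We can see that the individual effect $u_i$ has been eliminated.

Least squares applied to the differenced equation (17.29) is

$$\widehat{\beta}_{\Delta} = \left(\sum_{i=1}^{N}\Delta\boldsymbol{X}_i'\Delta\boldsymbol{X}_i\right)^{-1}\left(\sum_{i=1}^{N}\Delta\boldsymbol{X}_i'\Delta\boldsymbol{Y}_i\right) = \left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{D}_i'\boldsymbol{D}_i\boldsymbol{X}_i\right)^{-1}\left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{D}_i'\boldsymbol{D}_i\boldsymbol{Y}_i\right). \tag{17.30}$$

(17.30) is called the differenced estimator. For $T = 2$, $\widehat{\beta}_{\Delta}$ equals the fixed effects estimator. See Exercise 17.6. They differ, however, for $T > 2$.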

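When the errors $\varepsilon_{it}$ are serially uncorrelated and homoskedastic then the error $\Delta\boldsymbol{\varepsilon}_i = \boldsymbol{D}_i\boldsymbol{\varepsilon}_i$ in (17.29) has covariance matrix $\boldsymbol{H}\sigma_\varepsilon^2$ where

$$\boldsymbol{H} = \boldsymbol{D}_i\boldsymbol{D}_i' = \begin{pmatrix} 2 & -1 & 0 & \cdots & 0 \\ -1 & 2 & -1 & & 0 \\ 0 & -1 & \ddots & \ddots & \vdots \\ \vdots & & \ddots & 2 & -1 \\ 0 & 0 & \cdots & -1 & 2 \end{pmatrix}.$$

We can reduce estimation variance by using GLS. When the errors $\varepsilon_{it}$ are i.i.d. (serially uncorrelated and homoskedastic), this is

$$\widetilde{\beta}_{\Delta} = \left(\sum_{i=1}^{N}\Delta\boldsymbol{X}_i'\boldsymbol{H}^{-1}\Delta\boldsymbol{X}_i\right)^{-1}\left(\sum_{i=1}^{N}\Delta\boldsymbol{X}_i'\boldsymbol{H}^{-1}\Delta\boldsymbol{Y}_i\right) = \left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{M}_i\boldsymbol{X}_i\right)^{-1}\left(\sum_{i=1}^{N}\boldsymbol{X}_i'\boldsymbol{M}_i\boldsymbol{Y}_i\right)$$

where $\boldsymbol{M}_i = \boldsymbol{D}_i'(\boldsymbol{D}_i\boldsymbol{D}_i')^{-1}\boldsymbol{D}_i$. Recall, the matrix $\boldsymbol{D}_i$ is $(T_i - 1) \times T_i$ with rank $T_i - 1$ and is orthogonal to the vector of ones $\boldsymbol{1}_i$. This means $\boldsymbol{M}_i$ projects orthogonally to $\boldsymbol{1}_i$ and thus equals the within transformation matrix. Hence $\widetilde{\beta}_{\Delta} = \widehat{\beta}_{\text{fe}}$, the fixed effects estimator!

What we have shown is that under i.i.d. errors, GLS applied to the first-differenced equation precisely equals the fixed effects estimator. Since the Gauss-Markov theorem shows that GLS has lower variance than least squares, this means that the fixed effects estimator is more efficient than first differencing under the assumption that $\varepsilon_{it}$ is i.i.d.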

This argument extends to any other transformation which eliminates the fixed effect. GLS applied after such a transformation is equal to the fixed effects estimator and is more efficient than least squares applied after the same transformation under i.i.d. errors. This shows that the fixed effects estimator is Gauss-Markov efficient in the class of estimators which eliminate the fixed effect, under these assumptions.
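These equivalences can be checked on small made-up panels with a single regressor. The sketch below compares the within estimator, least squares on first differences, and GLS on first differences. The GLS helper is written only for three periods per individual, where the covariance of the differenced errors is proportional to the matrix with rows (2, -1) and (-1, 2), whose inverse is one third of the matrix with rows (2, 1) and (1, 2). All function names are hypothetical.

```python
def fe(panel):
    """Within (fixed effects) estimator for one regressor."""
    num = den = 0.0
    for obs in panel.values():
        mx = sum(x for x, _ in obs) / len(obs)
        my = sum(y for _, y in obs) / len(obs)
        num += sum((x - mx) * (y - my) for x, y in obs)
        den += sum((x - mx) ** 2 for x, _ in obs)
    return num / den

def fd(panel):
    """Least squares on first differences."""
    num = den = 0.0
    for obs in panel.values():
        for (x0, y0), (x1, y1) in zip(obs, obs[1:]):
            num += (x1 - x0) * (y1 - y0)
            den += (x1 - x0) ** 2
    return num / den

def fd_gls_three_periods(panel):
    """GLS on first differences when every individual has exactly 3 periods."""
    num = den = 0.0
    for (x1, y1), (x2, y2), (x3, y3) in panel.values():
        d1, d2 = x2 - x1, x3 - x2          # differenced regressor
        e1, e2 = y2 - y1, y3 - y2          # differenced outcome
        # Quadratic forms with H^{-1} = (1/3) [[2, 1], [1, 2]].
        den += (2 * d1 * d1 + 2 * d1 * d2 + 2 * d2 * d2) / 3
        num += (2 * d1 * e1 + d1 * e2 + d2 * e1 + 2 * d2 * e2) / 3
    return num / den

two_periods = {1: [(1.0, 2.0), (3.0, 2.5)], 2: [(0.0, 1.0), (4.0, 6.0)]}
three_periods = {1: [(0.0, 0.0), (1.0, 1.5), (3.0, 2.0)],
                 2: [(1.0, 1.0), (2.0, 0.5), (4.0, 3.0)]}

print(fe(two_periods), fd(two_periods))
print(fe(three_periods), fd(three_periods), fd_gls_three_periods(three_periods))
```

With two periods the demeaned values are plus and minus half the difference, which is why the two estimators coincide in that case.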

Dummy Variables Regression

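An alternative way to estimate the fixed effects model is by least squares of $Y_{it}$ on $X_{it}$ and a full set of dummy variables, one for each individual in the sample. It turns out that this is algebraically equivalent to the within estimator.

To see this start with the error-component model without a regressor:

$$Y_{it} = u_i + \varepsilon_{it}. \tag{17.32}$$

Consider least squares estimation of the vector of fixed effects $u = (u_1, \ldots, u_N)'$. Since each fixed effect $u_i$ is an individual-specific mean and the least squares estimate of the intercept is the sample mean it follows that the least squares estimate of $u_i$ is $\widehat{u}_i = \bar{Y}_i$. The least squares residual is then $\widehat{\varepsilon}_{it} = Y_{it} - \bar{Y}_i = \dot{Y}_{it}$, the within transformation.

If you would prefer an algebraic argument, let $d_i$ be a vector of $N$ dummy variables where the $i$th element indicates the $i$th individual. Thus the $i$th element of $d_i$ is 1 and the remaining elements are zero. Notice that $u_i = d_i'u$ and (17.32) equals $Y_{it} = d_i'u + \varepsilon_{it}$. This is a regression with the regressors $d_i$ and coefficients $u$. We can also write this in vector notation at the level of the individual as $\boldsymbol{Y}_i = \boldsymbol{1}_i d_i'u + \boldsymbol{\varepsilon}_i$ or using full matrix notation as $\boldsymbol{Y} = \boldsymbol{D}u + \boldsymbol{\varepsilon}$ where $\boldsymbol{D} = \operatorname{diag}\{\boldsymbol{1}_1, \ldots, \boldsymbol{1}_N\}$.

The least squares estimate of $u$ is

$$\widehat{u} = (\boldsymbol{D}'\boldsymbol{D})^{-1}(\boldsymbol{D}'\boldsymbol{Y}) = \left(\bar{Y}_1, \ldots, \bar{Y}_N\right)'.$$

The least squares residuals are

$$\widehat{\boldsymbol{\varepsilon}} = \left(\boldsymbol{I}_n - \boldsymbol{D}(\boldsymbol{D}'\boldsymbol{D})^{-1}\boldsymbol{D}'\right)\boldsymbol{Y} = \dot{\boldsymbol{Y}}$$

as shown in (17.19). Thus the least squares residuals from the simple error-component model are the within transformed variables.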

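Now consider the error-component model with regressors, which can be written as

$$Y_{it} = X_{it}'\beta + d_i'u + \varepsilon_{it}$$

since $u_i = d_i'u$ as discussed above. In matrix notation

$$\boldsymbol{Y} = \boldsymbol{X}\beta + \boldsymbol{D}u + \boldsymbol{\varepsilon}. \tag{17.34}$$

We consider estimation of $(\beta, u)$ by least squares and write the estimates as $(\widehat{\beta}, \widehat{u})$. We call this the dummy variable estimator of the fixed effects model.

By the Frisch-Waugh-Lovell Theorem (Theorem 3.5) the dummy variable estimator $\widehat{\beta}$ and residuals $\widehat{\boldsymbol{\varepsilon}}$ may be obtained by the least squares regression of the residuals from the regression of $\boldsymbol{Y}$ on $\boldsymbol{D}$ on the residuals from the regression of $\boldsymbol{X}$ on $\boldsymbol{D}$. We learned above that the residuals from the regression on $\boldsymbol{D}$ are the within transformations. Thus the dummy variable estimator $\widehat{\beta}$ and residuals $\widehat{\boldsymbol{\varepsilon}}$ may be obtained from least squares regression of the within transformed $\dot{\boldsymbol{Y}}$ on the within transformed $\dot{\boldsymbol{X}}$. This is exactly the fixed effects estimator $\widehat{\beta}_{\text{fe}}$. Thus the dummy variable and fixed effects estimators of $\beta$ are identical.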

This is sufficiently important that we state this result as a theorem.

Theorem 17.1 The fixed effects estimator of $\beta$ algebraically equals the dummy variable estimator of $\beta$. The two estimators have the same residuals.

This may be the most important practical application of the Frisch-Waugh-Lovell Theorem. It shows that we can estimate the coefficients either by applying the within transformation or by inclusion of dummy variables (one for each individual in the sample). This is important because in some cases one approach is more convenient than the other and it is important to know that the two methods are algebraically equivalent.
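The equivalence is easy to confirm numerically on a small example. The sketch below, with made-up data, regresses the outcome on one regressor plus a dummy for each individual by solving the normal equations with a small hand-written solver (a hypothetical helper, used only to keep the example free of external libraries), and compares the slope with the within estimator.

```python
def solve(A, b):
    """Solve the linear system A z = b by Gauss-Jordan elimination."""
    m = len(b)
    M = [A[r][:] + [b[r]] for r in range(m)]
    for c in range(m):
        p = max(range(c, m), key=lambda r: abs(M[r][c]))   # partial pivoting
        M[c], M[p] = M[p], M[c]
        for r in range(m):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [a - f * d for a, d in zip(M[r], M[c])]
    return [M[r][m] / M[r][r] for r in range(m)]

# Made-up unbalanced panel with one regressor.
ids = [1, 1, 1, 2, 2, 3, 3, 3]
x = [1.0, 2.0, 4.0, 3.0, 5.0, 2.0, 2.5, 6.0]
y = [2.0, 2.5, 4.5, 0.5, 2.0, 7.0, 7.2, 9.9]
groups = sorted(set(ids))

# Dummy variable regression: columns are x and one dummy per individual.
Z = [[xi] + [1.0 if i == g else 0.0 for g in groups] for xi, i in zip(x, ids)]
p = len(Z[0])
ZtZ = [[sum(row[a] * row[c] for row in Z) for c in range(p)] for a in range(p)]
Zty = [sum(row[a] * yi for row, yi in zip(Z, y)) for a in range(p)]
coef = solve(ZtZ, Zty)
beta_dummy, u_hat = coef[0], coef[1:]

# Within estimator on demeaned data.
def demean(v):
    mean = {g: sum(a for a, i in zip(v, ids) if i == g) / ids.count(g) for g in groups}
    return [a - mean[i] for a, i in zip(v, ids)]

xd, yd = demean(x), demean(y)
beta_fe = sum(a * c for a, c in zip(xd, yd)) / sum(a * a for a in xd)

print(beta_dummy, beta_fe, u_hat)
```

The dummy regression has to build and solve a system whose size grows with the number of individuals, while the within calculation only needs the individual means.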

When $N$ is large it is advisable to use the within transformation rather than the dummy variable approach. This is because the latter requires considerably more computer memory. To see this consider the matrix $\boldsymbol{D}$ in (17.34) in the balanced case. It has $TN^2$ elements which must be created and stored in memory. When $N$ is large this can be excessive. For example, if $T = 10$ and $N = 10{,}000$, the matrix $\boldsymbol{D}$ has one billion elements! Whether or not a package can technically handle a matrix of this dimension depends on several particulars (system RAM, operating system, package version), but even if it can execute the calculation the computation time is slow. Hence for fixed effects estimation with large $N$ it is recommended to use the within transformation rather than dummy variable regression.

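The dummy variable formulation may add insight about how the fixed effects estimator achieves invariance to the fixed effects. Given the regression equation (17.34) we can write the least squares estimator of $\beta$ using the residual regression formula:

$$\widehat{\beta}_{\text{fe}} = \left(\boldsymbol{X}'\boldsymbol{M}_D\boldsymbol{X}\right)^{-1}\left(\boldsymbol{X}'\boldsymbol{M}_D\boldsymbol{Y}\right) = \left(\boldsymbol{X}'\boldsymbol{M}_D\boldsymbol{X}\right)^{-1}\left(\boldsymbol{X}'\boldsymbol{M}_D(\boldsymbol{X}\beta + \boldsymbol{\varepsilon})\right) \tag{17.35}$$

since $\boldsymbol{M}_D\boldsymbol{D} = 0$. The expression (17.35) is free of the vector $u$ and thus $\widehat{\beta}_{\text{fe}}$ is invariant to $u$. This is another demonstration that the fixed effects estimator is invariant to the actual values of the fixed effects, and thus its statistical properties do not rely on assumptions about $u_i$.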

Fixed Effects Covariance Matrix Estimation

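First consider estimation of the classical covariance matrix $V_{\text{fe}}^0$ as defined in (17.27). This is

$$\widehat{V}_{\text{fe}}^0 = \widehat{\sigma}_\varepsilon^2\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\dot{\boldsymbol{X}}_i\right)^{-1} \tag{17.36}$$

with

$$\widehat{\sigma}_\varepsilon^2 = \frac{1}{n - N - k}\sum_{i=1}^{N}\sum_{t \in S_i}\widehat{\varepsilon}_{it}^2 = \frac{1}{n - N - k}\sum_{i=1}^{N}\widehat{\boldsymbol{\varepsilon}}_i'\widehat{\boldsymbol{\varepsilon}}_i.$$

The $N + k$ degree of freedom adjustment is motivated by the dummy variable representation. You can verify that $\widehat{\sigma}_\varepsilon^2$ is unbiased for $\sigma_\varepsilon^2$ under assumptions (17.18), (17.25) and (17.26). See Exercise 17.8.

Notice that the assumptions (17.18), (17.25), and (17.26) are identical to (17.5)-(17.7) of Assumption 17.1. The assumptions (17.8)-(17.10) are not needed. Thus the fixed effect model weakens the random effects model by eliminating the assumptions on $u_i$ but retaining those on $\varepsilon_{it}$.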

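The classical covariance matrix estimator (17.36) for the fixed effects estimator is valid when the errors $\varepsilon_{it}$ are homoskedastic and serially uncorrelated but is invalid otherwise. A covariance matrix estimator which allows $\varepsilon_{it}$ to be heteroskedastic and serially correlated across $t$ is the cluster-robust covariance matrix estimator, clustered by individual

$$\widehat{V}_{\text{fe}}^{\text{cluster}} = \left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{X}}\right)^{-1}\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\widehat{\boldsymbol{\varepsilon}}_i\widehat{\boldsymbol{\varepsilon}}_i'\dot{\boldsymbol{X}}_i\right)\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{X}}\right)^{-1} \tag{17.38}$$

where $\widehat{\boldsymbol{\varepsilon}}_i$ are the fixed effects residuals as defined in (17.23). (17.38) was first proposed by Arellano (1987). As in (4.55) $\widehat{V}_{\text{fe}}^{\text{cluster}}$ can be multiplied by a degree-of-freedom adjustment. The adjustment recommended by the theory of C. Hansen (2007) is

$$\widehat{V}_{\text{fe}}^{\text{cluster}} = \left(\frac{N}{N-1}\right)\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{X}}\right)^{-1}\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\widehat{\boldsymbol{\varepsilon}}_i\widehat{\boldsymbol{\varepsilon}}_i'\dot{\boldsymbol{X}}_i\right)\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{X}}\right)^{-1} \tag{17.39}$$

and that corresponding to (4.55) is

$$\widehat{V}_{\text{fe}}^{\text{cluster}} = \left(\frac{n-1}{n-N-k}\right)\left(\frac{N}{N-1}\right)\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{X}}\right)^{-1}\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\widehat{\boldsymbol{\varepsilon}}_i\widehat{\boldsymbol{\varepsilon}}_i'\dot{\boldsymbol{X}}_i\right)\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{X}}\right)^{-1}. \tag{17.40}$$

These estimators are convenient because they are simple to apply and allow for unbalanced panels.

In typical micropanel applications $N$ is very large and $k$ is modest. Thus the adjustment in (17.39) is minor while that in (17.40) is approximately $\bar{T}/(\bar{T}-1)$ where $\bar{T} = n/N$ is the average number of time periods per individual. When $\bar{T}$ is small this can be a very large adjustment. Hence the difference between (17.38), (17.39), and (17.40) can be substantial.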
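A quick calculation shows how different the adjustments can be. The sketch below assumes the two adjustment factors take the forms $N/(N-1)$ and $\frac{n-1}{n-N-k}\cdot\frac{N}{N-1}$, and evaluates them for a hypothetical micro panel with 1000 individuals, 5 periods each, and 3 regressors.

```python
def adjustment_factors(n, N, k):
    """Return the two degree-of-freedom factors under the assumed forms:
    N/(N-1), and (n-1)/(n-N-k) * N/(N-1)."""
    small = N / (N - 1)
    large = (n - 1) / (n - N - k) * N / (N - 1)
    return small, large

# Hypothetical micro panel: many individuals, few periods.
N, T_bar, k = 1000, 5, 3
n = N * T_bar

small, large = adjustment_factors(n, N, k)
approx = T_bar / (T_bar - 1)          # large-N approximation to the second factor

print(small, large, approx)
```

With only two periods per individual the approximation equals 2, so the larger adjustment would roughly double the estimated variance.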

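To understand if the degree of freedom adjustment in (17.40) is appropriate, consider the simplified setting where the residuals are constructed with the true $\beta$ but estimated fixed effects $u_i$. This is a useful approximation since the number of estimated slope coefficients $\beta$ is small relative to the sample size $n$. Then $\widehat{\boldsymbol{\varepsilon}}_i = \dot{\boldsymbol{Y}}_i - \dot{\boldsymbol{X}}_i\beta = \dot{\boldsymbol{\varepsilon}}_i$ so $\dot{\boldsymbol{X}}_i'\widehat{\boldsymbol{\varepsilon}}_i = \dot{\boldsymbol{X}}_i'\dot{\boldsymbol{\varepsilon}}_i = \dot{\boldsymbol{X}}_i'\boldsymbol{\varepsilon}_i$ and (17.38) equals

$$\widehat{V}_{\text{fe}}^{\text{cluster}} = \left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{X}}\right)^{-1}\left(\sum_{i=1}^{N}\dot{\boldsymbol{X}}_i'\boldsymbol{\varepsilon}_i\boldsymbol{\varepsilon}_i'\dot{\boldsymbol{X}}_i\right)\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{X}}\right)^{-1}$$

which is the idealized estimator with the true errors rather than the residuals. Since $\mathbb{E}[\boldsymbol{\varepsilon}_i\boldsymbol{\varepsilon}_i' \mid \boldsymbol{X}_i] = \Sigma_i$ it follows that $\mathbb{E}[\widehat{V}_{\text{fe}}^{\text{cluster}} \mid \boldsymbol{X}] = V_{\text{fe}}$ and $\widehat{V}_{\text{fe}}^{\text{cluster}}$ is unbiased for $V_{\text{fe}}$! Thus no degree of freedom adjustment is required. This is despite the fact that $N$ fixed effects have been estimated. While this analysis concerns the idealized case where the residuals have been constructed with the true coefficients $\beta$, and so does not translate into a direct recommendation for the feasible estimator, it still suggests that the strong ad hoc adjustment in (17.40) is unwarranted.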
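The key step in this argument is that the cross product of the demeaned regressors with the demeaned errors equals their cross product with the raw errors, because the demeaned regressors sum to zero within each individual. A short numerical check for one individual, with made-up numbers:

```python
# One individual's regressor values and (hypothetical) true errors.
x = [1.0, 4.0, 7.0, 8.0]
eps = [0.3, -1.2, 0.5, 2.0]

x_bar = sum(x) / len(x)
eps_bar = sum(eps) / len(eps)
x_dot = [v - x_bar for v in x]            # demeaned regressor
eps_dot = [v - eps_bar for v in eps]      # demeaned error

# X-dot' eps-dot versus X-dot' eps: they agree because sum(x_dot) is zero,
# so the term involving eps_bar drops out.
with_demeaned_errors = sum(a * b for a, b in zip(x_dot, eps_dot))
with_raw_errors = sum(a * b for a, b in zip(x_dot, eps))

print(sum(x_dot), with_demeaned_errors, with_raw_errors)
```

This is why estimating the individual effects does not, by itself, shrink the cluster sums that enter the middle of the sandwich.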

This (crude) analysis suggests that for the cluster robust covariance estimator for fixed effects regression the adjustment recommended by C. Hansen (17.39) is the most appropriate. It is typically well approximated by the unadjusted estimator (17.38). Based on current theory there is no justification for the ad hoc adjustment (17.40). The main argument for the latter is that it produces the largest standard errors and is thus the most conservative choice.

In current practice the estimators (17.38) and (17.40) are the most commonly used covariance matrix estimators for fixed effects estimation.
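The three estimators can be compared numerically. The following Python sketch is illustrative only. It assumes that the adjustment factor in (17.39) is N/(N-1) and that the factor in (17.40) is ((n-1)/(n-N-k))(N/(N-1)), where n is the number of observations, N the number of individuals, and k the number of slope coefficients. The helper names `within` and `fe_cluster_cov` and the simulated data are hypothetical.

```python
import numpy as np

def within(a, ids):
    """Subtract individual-specific means; works for unbalanced panels."""
    a = np.asarray(a, dtype=float)
    out = a.copy()
    for g in np.unique(ids):
        m = ids == g
        out[m] -= a[m].mean(axis=0)
    return out

def fe_cluster_cov(y, X, ids):
    """Fixed effects slopes plus three cluster-robust covariance variants."""
    Xd, yd = within(X, ids), within(y, ids)
    A_inv = np.linalg.inv(Xd.T @ Xd)
    beta = A_inv @ (Xd.T @ yd)
    e = yd - Xd @ beta                       # fixed effects residuals
    groups = np.unique(ids)
    n, k, N = len(y), X.shape[1], len(groups)
    meat = np.zeros((k, k))
    for g in groups:
        m = ids == g
        s = Xd[m].T @ e[m]                   # score summed within one cluster
        meat += np.outer(s, s)
    V_unadj = A_inv @ meat @ A_inv           # unadjusted sandwich, as in (17.38)
    adj_small = N / (N - 1)                  # assumed factor for (17.39)
    adj_large = (n - 1) / (n - N - k) * N / (N - 1)  # assumed factor for (17.40)
    return beta, V_unadj, adj_small * V_unadj, adj_large * V_unadj, adj_large

# Simulated micro panel: many individuals, few time periods (hypothetical data)
rng = np.random.default_rng(0)
N, T = 500, 3
ids = np.repeat(np.arange(N), T)
u = rng.normal(size=N)[ids]
X = rng.normal(size=(N * T, 2)) + u[:, None]
y = X @ np.array([1.0, -0.5]) + u + rng.normal(size=N * T)

beta, V38, V39, V40, adj40 = fe_cluster_cov(y, X, ids)
print("factor for (17.40):", adj40, " T/(T-1):", T / (T - 1))
```

With three periods per individual the factor applied in (17.40) is close to T/(T-1) = 1.5, so the implied standard errors are a little over 20 percent larger than those from (17.38), while the factor for (17.39) is nearly one.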

In Sections and we discuss covariance matrix estimation under heteroskedasticity but no serial correlation.

To illustrate, in Table we present the fixed effect regression estimates of the investment model (17.3) in the third column with cluster-robust standard errors. The trading indicator and the industry dummies cannot be included as they are time-invariant. The point estimates are similar to the random effects estimates, though the coefficients on debt and cash flow increase in magnitude.

Fixed Effects Estimation in Stata

There are several methods to obtain the fixed effects estimator in Stata.

The first method is dummy variable regression. This can be obtained by the Stata regress command, for example reg y x i.id, cluster(id) where id is the group (individual) identifier. In most cases, as discussed in Section 17.11, this is not recommended due to the excessive computer memory requirements and slow computation. If this command is used it may be useful to suppress display of the full list of coefficient estimates. To do so, type quietly reg y x i.id, cluster(id) followed by estimates table, keep(x _cons) b se. The second command will report the coefficient(s) on x only, not those on the index variable id. (Other statistics can be reported as well.)

The second method is to manually create the within transformed variables as described in Section 17.8, and then use regress.

The third method is xtreg y x, fe, which is specifically written for panel data. This estimates the slope coefficients using the partialling-out approach. The default covariance matrix estimator is classical as defined in (17.36). The cluster-robust covariance matrix (17.38) can be obtained using the options vce(robust) or r.

The fourth method is areg y x, absorb(id). This command is an alternative implementation of partialling-out regression. The default covariance matrix estimator is the classical (17.36). The cluster-robust covariance matrix estimator (17.40) can be obtained using the cluster(id) option. The heteroskedasticity-robust covariance matrix is obtained when r or vce(robust) is specified but this is not recommended unless $T_i$ is large, as discussed in the section on heteroskedasticity-robust covariance matrix estimation.

An important difference between the Stata xtreg and areg commands is that they implement different cluster-robust covariance matrix estimators: (17.38) in the case of xtreg and (17.40) in the case of areg. As discussed in the previous section the adjustment used by areg is ad hoc and not well-justified but produces the largest and hence most conservative standard errors.

Another difference between the commands is how they report the equation $R^2$. This difference can be huge and stems from the fact that they are estimating distinct population counterparts. Full dummy variable regression and the areg command calculate $R^2$ the same way: the squared correlation between the dependent variable and the fitted regression with all predictors including the individual dummy variables. The xtreg command reports three values for $R^2$: within, between, and overall. The “within” $R^2$ is identical to what is obtained from a second stage regression using the within transformed variables (the second method described above). The “overall” $R^2$ is the squared correlation between the dependent variable and the fitted regression excluding the individual effects.

Which $R^2$ should be reported? The answer depends on the baseline model before regressors are added. If we view the baseline as an individual-specific mean, then the within calculation is appropriate. If the baseline is a single mean for all observations then the full regression (areg) calculation is appropriate. The latter (areg) calculation is typically much higher than the within calculation, as the fixed effects typically “explain” a large portion of the variance. In any event, as there is not a single definition of $R^2$ it is important to be explicit about the method if it is reported.

In current econometric practice both xtreg and areg are used, though areg appears to be the more popular choice. Since the latter typically produces a much higher value of $R^2$, reported $R^2$ values should be viewed skeptically unless their calculation method is documented by the author.
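The gap between the alternative calculations is easy to see in a small simulation. The Python sketch below is an illustration under assumed, simulated data. It computes a within value, a full dummy-variable value, and an overall value as squared correlations, following the verbal definitions in the text. It does not reproduce Stata output, and all variable names are hypothetical.

```python
import numpy as np

def within(a, ids):
    """Subtract individual-specific means."""
    a = np.asarray(a, dtype=float)
    out = a.copy()
    for g in np.unique(ids):
        m = ids == g
        out[m] -= a[m].mean(axis=0)
    return out

def r2(a, b):
    """Squared sample correlation between two vectors."""
    return np.corrcoef(a, b)[0, 1] ** 2

# Simulated panel with a large individual effect (hypothetical data)
rng = np.random.default_rng(1)
N, T = 200, 4
ids = np.repeat(np.arange(N), T)
u = 2.0 * rng.normal(size=N)[ids]
x = rng.normal(size=N * T) + 0.5 * u
y = 1.0 * x + u + rng.normal(size=N * T)

xd, yd = within(x, ids), within(y, ids)
beta = (xd @ yd) / (xd @ xd)          # fixed effects slope
resid = yd - beta * xd                # fixed effects residuals

r2_within = r2(yd, beta * xd)         # within-transformed regression
r2_full = r2(y, y - resid)            # fitted values include the individual dummies
r2_overall = r2(y, beta * x)          # fitted values exclude the individual effects

print(r2_within, r2_full, r2_overall)
```

Because the full calculation measures fit against total variation while the within calculation measures fit against within-individual variation only, the full value can never be smaller than the within value for the same fitted model.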

Between Estimator

The between estimator is calculated from the individual-mean equation (17.20)

Estimation can be done at the level of individuals or at the level of observations. Least squares applied to (17.41) at the level of the individuals is

Least squares applied to (17.41) at the level of observations is

In balanced panels the two estimators coincide, but they differ on unbalanced panels. The observation-level estimator equals weighted least squares applied at the level of individuals with weight $T_i$.

Under the random effects assumptions (Assumption 17.1) is unbiased for and has variance

where

is the variance of the error in (17.41). When the panel is balanced the variance formula simplifies to

Under the random effects assumption the between estimator is unbiased for but is less efficient than the random effects estimator . Consequently there seems little direct use for the between estimator in linear panel data applications.

Instead, its primary application is to construct an estimate of . First, consider estimation of

where is the harmonic mean of . (In the case of a balanced panel .) A natural estimator of is

where are the between residuals. (Either or can be used.)

From the relation and (17.42) we can deduce an estimator for . We have already described an estimator for in (17.37) for the fixed effects model. Since the fixed effects model holds under weaker conditions than the random effects model, is valid for the latter as well. This suggests the following estimator for

To summarize, the fixed effect estimator is used for , the between estimator for , and is constructed from the two.

It is possible for (17.43) to be negative. It is typical to use the constrained estimator

(17.44) is the most common estimator for in the random effects model.

The between estimator can be obtained using the Stata command xtreg be. The estimator can be obtained by xtreg be wls.
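The relationship between the individual-level and observation-level versions of the between estimator can be checked directly. The Python sketch below is a minimal illustration on simulated unbalanced data. It assumes an explicit intercept in the individual-mean regression, and the function names `group_means` and `between` are hypothetical.

```python
import numpy as np

def group_means(a, ids):
    """One row (or value) of individual-specific means per individual."""
    return np.array([a[ids == g].mean(axis=0) for g in np.unique(ids)])

def between(y, X, ids, weighted=False):
    """Between estimator at the individual level; optional weights T_i."""
    groups, counts = np.unique(ids, return_counts=True)
    ybar = group_means(y, ids)
    Xbar = np.column_stack([np.ones(len(groups)), group_means(X, ids)])
    w = np.sqrt(counts) if weighted else np.ones(len(groups))
    coef, *_ = np.linalg.lstsq(Xbar * w[:, None], ybar * w, rcond=None)
    return coef

# Simulated unbalanced panel: 2 to 6 periods per individual (hypothetical data)
rng = np.random.default_rng(2)
N = 100
T_i = rng.integers(2, 7, size=N)
ids = np.repeat(np.arange(N), T_i)
u = rng.normal(size=N)[ids]
X = rng.normal(size=(len(ids), 2))
y = 1.0 + X @ np.array([0.5, -1.0]) + u + rng.normal(size=len(ids))

b_ind = between(y, X, ids)                  # least squares on N individual means
b_wls = between(y, X, ids, weighted=True)   # weighted by T_i

# Observation-level version: repeat each individual's means T_i times
_, inv = np.unique(ids, return_inverse=True)
Xbar_obs = np.column_stack([np.ones(len(ids)), group_means(X, ids)[inv]])
ybar_obs = group_means(y, ids)[inv]
b_obs, *_ = np.linalg.lstsq(Xbar_obs, ybar_obs, rcond=None)

print(b_ind, b_wls, b_obs)
```

The observation-level coefficients agree with the weighted individual-level coefficients up to rounding, while the unweighted individual-level coefficients generally differ when the panel is unbalanced.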

Feasible GLS

The random effects estimator can be written as

where and . It is instructive to study these transformations.

Define so that . Thus while is the within operator, can be called the individual-mean operator since . We can write

where

Since the matrices and are idempotent and orthogonal we find that and

Therefore the transformation used by the GLS estimator is

which is a partial within transformation.

The transformation as written depends on which is unknown. It can be replaced by the estimator

where the estimators and are given in (17.37) and (17.44). We obtain the feasible transformations

and

The feasible random effects estimator is (17.45) using (17.49) and (17.50).

In the previous section we noted that it is possible for $\hat\sigma_u^2 = 0$. In this case $\hat\rho_i = 0$ and the feasible random effects estimator equals the pooled estimator.

What this shows is the following. The random effects estimator (17.45) is least squares applied to the transformed variables defined in (17.50) and (17.49). When $\hat\rho_i = 1$ these are the within transformations, and the random effects estimator is the fixed effects estimator. When $\hat\rho_i = 0$ the data are untransformed, and the random effects estimator is the pooled estimator. In general, the transformed variables can be viewed as partial within transformations.

Recalling the definition of $\hat\rho_i$ we see that when the idiosyncratic error variance $\hat\sigma_e^2$ is large relative to $T_i\hat\sigma_u^2$ then $\hat\rho_i \approx 0$ and the random effects estimator is close to the pooled estimator. Thus when the variance estimates suggest that the individual effect is relatively small the random effects estimator simplifies to the pooled estimator. On the other hand when the individual effect error variance $T_i\hat\sigma_u^2$ is large relative to $\hat\sigma_e^2$ then $\hat\rho_i \approx 1$ and the random effects estimator is close to the fixed effects estimator. Thus when the variance estimates suggest that the individual effect is relatively large the random effects estimator is close to the fixed effects estimator.
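The two limiting cases can be verified numerically. The Python sketch below is illustrative only. It assumes the standard form of the partial within weight, $\rho_i = 1 - \sigma_e / \sqrt{\sigma_e^2 + T_i \sigma_u^2}$, and takes the variance components as given inputs rather than estimating them. The regressors exclude an intercept so that the fixed effects limit is well defined. The function names are hypothetical.

```python
import numpy as np

def mean_expand(a, ids):
    """Array shaped like `a` holding each observation's individual mean."""
    a = np.asarray(a, dtype=float)
    out = np.empty_like(a)
    for g in np.unique(ids):
        m = ids == g
        out[m] = a[m].mean(axis=0)
    return out

def re_estimator(y, X, ids, s2_e, s2_u):
    """Least squares on partially within-transformed data (assumed rho_i form)."""
    _, inv, counts = np.unique(ids, return_inverse=True, return_counts=True)
    T_i = counts[inv]                                   # periods for each row's individual
    rho = 1.0 - np.sqrt(s2_e) / np.sqrt(s2_e + T_i * s2_u)
    y_t = y - rho * mean_expand(y, ids)
    X_t = X - rho[:, None] * mean_expand(X, ids)
    return np.linalg.lstsq(X_t, y_t, rcond=None)[0]

# Simulated balanced panel (hypothetical data)
rng = np.random.default_rng(3)
N, T = 80, 4
ids = np.repeat(np.arange(N), T)
u = rng.normal(size=N)[ids]
X = rng.normal(size=(N * T, 2)) + 0.5 * u[:, None]
y = X @ np.array([1.0, 2.0]) + u + rng.normal(size=N * T)

beta_pool = np.linalg.lstsq(X, y, rcond=None)[0]
beta_fe = np.linalg.lstsq(X - mean_expand(X, ids), y - mean_expand(y, ids), rcond=None)[0]

beta_rho0 = re_estimator(y, X, ids, s2_e=1.0, s2_u=0.0)    # rho_i = 0
beta_rho1 = re_estimator(y, X, ids, s2_e=1.0, s2_u=1e12)   # rho_i close to 1

print(beta_pool, beta_rho0)
print(beta_fe, beta_rho1)
```

Setting the individual-effect variance to zero reproduces the pooled estimator exactly, and letting it grow very large drives the estimator to the fixed effects estimator.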

Intercept in Fixed Effects Regression

The fixed effect estimator does not apply to any regressor which is time-invariant for all individuals. This includes an intercept. Yet some authors and packages (e.g. Amemiya (1971) and xtreg in Stata) report an intercept. To see how to construct an estimator of an intercept take the components regression equation adding an explicit intercept

We have already discussed estimation of by . Replacing in this equation with and then estimating by least squares, we obtain

where and are averages from the full sample. This is the estimator reported by xtreg.

Estimation of Fixed Effects

For most applications researchers are interested in the coefficients not the fixed effects . But in some cases the fixed effects themselves are interesting. This arises when we want to measure the distribution of to understand its heterogeneity. It also arises in the context of prediction. As discussed in Section the fixed effects estimate is obtained by least squares applied to the regression (17.33). To find their solution, replace in (17.33) with the least squares minimizer and apply least squares. Since this is the individual-specific intercept the solution is

Alternatively, using (17.34) this is

Thus the least squares estimates of the fixed effects can be obtained from the individual-specific means and does not require a regression with regressors.

If an intercept has been estimated (as discussed in the previous section) it should be subtracted from (17.51). In this case the estimated fixed effects are

With either estimator, when the number of time series observations $T_i$ is small the estimated fixed effect will be an imprecise estimator of the true fixed effect. Thus calculations based on the estimated fixed effects should be interpreted cautiously.

The fixed effects (17.52) may be obtained in Stata after xtreg, fe using the predict u command or after areg using the predict d command.
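The claim that the fixed effects can be recovered from individual-specific means, without running the full dummy variable regression, can be checked with a short Python sketch. The data are simulated and the variable names are hypothetical. The intercept is computed as the full-sample mean of the dependent variable minus the full-sample regressor means times the slope estimates, as described in the previous section.

```python
import numpy as np

# Simulated balanced panel (hypothetical data)
rng = np.random.default_rng(4)
N, T = 30, 5
ids = np.repeat(np.arange(N), T)
u_true = rng.normal(size=N)
X = rng.normal(size=(N * T, 2)) + u_true[ids][:, None]
y = X @ np.array([0.5, 1.5]) + u_true[ids] + rng.normal(size=N * T)

# Individual-specific means
ybar = np.array([y[ids == g].mean() for g in range(N)])
Xbar = np.array([X[ids == g].mean(axis=0) for g in range(N)])

# Slopes from the within-transformed regression
beta = np.linalg.lstsq(X - Xbar[ids], y - ybar[ids], rcond=None)[0]

# Fixed effects from means only: u_i = ybar_i - xbar_i' beta
u_hat = ybar - Xbar @ beta

# Check against the full dummy variable regression
D = (ids[:, None] == np.arange(N)[None, :]).astype(float)
coef_lsdv = np.linalg.lstsq(np.column_stack([X, D]), y, rcond=None)[0]

# Intercept from full-sample averages, then fixed effects net of the intercept
alpha_hat = y.mean() - X.mean(axis=0) @ beta
u_centered = u_hat - alpha_hat

print(np.max(np.abs(coef_lsdv[2:] - u_hat)))
```

The dummy coefficients from the full regression match the means-based calculation, and in a balanced panel the fixed effects net of the intercept average to zero.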

GMM Interpretation of Fixed Effects

We can also interpret the fixed effects estimator through the generalized method of moments.

Take the fixed effects model after applying the within transformation (17.21). We can view this as a system of equations, one for each time period . This is a multivariate regression model. Using the notation of Chapter 11 define the regressor matrix

If we treat each time period as a separate equation we have the moment conditions

This is an overidentified system of equations when $T \geq 3$ as there are $k$ coefficients and $Tk$ moments. (However, the moments are collinear due to the within transformation. There are $(T-1)k$ effective moments.) Interpreting this model in the context of multivariate regression, overidentification is achieved by the restriction that the coefficient vector is constant across time periods.

This model can be interpreted as a regression of on using the instruments . The 2SLS estimator using matrix notation is

Notice that

and

Thus the 2SLS estimator simplifies to

the fixed effects estimator!

This shows that if we treat each time period as a separate equation with its separate moment equation so that the system is over-identified, and then estimate by GMM using the 2SLS weight matrix, the resulting GMM estimator equals the simple fixed effects estimator. There is no change by adding the additional moment conditions.
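This equivalence can be confirmed numerically. The Python sketch below is a minimal check on a simulated balanced panel whose rows are sorted by individual and then by time. It builds an instrument matrix that places the within-transformed regressors for period t in the t-th block of columns, which is an assumed reading of the block structure described above. All names are hypothetical.

```python
import numpy as np

# Simulated balanced panel (hypothetical data)
rng = np.random.default_rng(5)
N, T, k = 60, 4, 2
ids = np.repeat(np.arange(N), T)
time = np.tile(np.arange(T), N)
u = rng.normal(size=N)[ids]
X = rng.normal(size=(N * T, k)) + u[:, None]
y = X @ np.array([1.0, 2.0]) + u + rng.normal(size=N * T)

# Within transformation (balanced panel, so reshape by individual)
Xd = X - X.reshape(N, T, k).mean(axis=1)[ids]
yd = y - y.reshape(N, T).mean(axis=1)[ids]

# Fixed effects estimator
beta_fe = np.linalg.solve(Xd.T @ Xd, Xd.T @ yd)

# Instruments: one block of k columns per time period
Z = np.zeros((N * T, T * k))
for t in range(T):
    rows = time == t
    Z[rows, t * k:(t + 1) * k] = Xd[rows]

# 2SLS with the weight matrix (Z'Z)^{-1}
ZX, Zy = Z.T @ Xd, Z.T @ yd
W = np.linalg.inv(Z.T @ Z)
beta_2sls = np.linalg.solve(ZX.T @ W @ ZX, ZX.T @ W @ Zy)

print(beta_fe, beta_2sls)
```

The two coefficient vectors agree up to rounding error, consistent with the algebra above.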

The 2SLS estimator is the appropriate GMM estimator when the equation error is serially uncorrelated and homoskedastic. If we use a two-step efficient weight matrix which allows for heteroskedasticity and serial correlation the GMM estimator is

where are the fixed effects residuals.

Notationally, this GMM estimator has been written for a balanced panel. For an unbalanced panel the sums over $i$ need to be replaced by sums over individuals observed during time period $t$. Otherwise no changes need to be made.

Identification in the Fixed Effects Model

The identification of the slope coefficient in fixed effects regression is similar to that in conventional regression but somewhat more nuanced.

It is most useful to consider the within-transformed equation, which can be written as or

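From regression theory we know that the coefficient is the linear effect of the within-transformed regressor on the within-transformed dependent variable. The within-transformed regressor is the deviation of the regressor from its individual-specific mean, and similarly for the dependent variable. Thus the fixed effects model does not identify the effect of the average level of the regressor on the average level of the dependent variable, but rather the effect of deviations in the regressor on deviations in the dependent variable.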

In any given sample the fixed effects estimator is only defined if is full rank. The population analog (when individuals are i.i.d.) is

Equation (17.54) is the identification condition for the fixed effects estimator. It requires that the regressor matrix is full-rank in expectation after application of the within transformation. The regressors cannot contain any variable which does not have time-variation at the individual level nor a set of regressors whose time-variation at the individual level is collinear.
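The sample analog of this rank condition is simple to inspect. The Python sketch below uses simulated data with hypothetical names. It shows that adding a time-invariant regressor leaves the within-transformed cross-product matrix rank deficient.

```python
import numpy as np

# Simulated balanced panel (hypothetical data)
rng = np.random.default_rng(6)
N, T = 20, 3
ids = np.repeat(np.arange(N), T)

x_vary = rng.normal(size=N * T)          # varies over time within individual
x_fixed = rng.normal(size=N)[ids]        # time-invariant for every individual
X = np.column_stack([x_vary, x_fixed])

# Within transformation (balanced panel)
Xd = X - X.reshape(N, T, 2).mean(axis=1)[ids]

rank_with_invariant = np.linalg.matrix_rank(Xd.T @ Xd)
rank_varying_only = np.linalg.matrix_rank(Xd[:, :1].T @ Xd[:, :1])

print(rank_with_invariant, rank_varying_only)
```

The two-regressor matrix has rank one rather than two, so the fixed effects estimator is not defined until the time-invariant regressor is dropped.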

Asymptotic Distribution of Fixed Effects Estimator

In this section we present an asymptotic distribution theory for the fixed effects estimator in balanced panels. Unbalanced panels are considered in the following section.

We use the following assumptions.

Assumption 17.2

for and with .

The variables , are independent and identically distributed.

for all .

.

.

Given Assumption 17.2 we can establish asymptotic normality for the fixed effects estimator.

Theorem 17.2 Under Assumption 17.2, as where and .

This asymptotic distribution is derived as the number of individuals $N$ diverges to infinity while the number of time periods $T$ is held fixed. Therefore the normalization is $\sqrt{N}$ rather than $\sqrt{n}$ (though either could be used since $T$ is fixed). This approximation is appropriate for the context of a large number of individuals. We could alternatively derive an approximation for the case where both $N$ and $T$ diverge to infinity but this would not be a stronger result. One way of thinking about this is that Theorem 17.2 does not require $T$ to be large.

Theorem 17.2 may appear standard given our arsenal of asymptotic theory but in a fundamental sense it is quite different from any other result we have introduced. Fixed effects regression is effectively estimating $N+k$ coefficients - the $k$ slope coefficients plus the $N$ fixed effects - and the theory specifies that $N \to \infty$. Thus the number of estimated parameters is diverging to infinity at the same rate as sample size yet the estimator obtains a conventional mean-zero sandwich-form asymptotic distribution. In this sense Theorem 17.2 is new and special.

We now discuss the assumptions.

Assumption 17.2.2 states that the observations are independent across individuals. This is commonly used for panel data asymptotic theory. An important implied restriction is that we exclude from the regressors any serially correlated aggregate time series variation. Assumption 17.2.3 imposes that the regressors are strictly exogenous for the idiosyncratic error. This is stronger than simple projection but is weaker than strict mean independence (17.18). It does not impose any condition on the individual-specific effects.

Assumption 17.2.4 is the identification condition discussed in the previous section.

Assumptions 17.2.5 and 17.2.6 are needed for the central limit theorem.

We now prove Theorem 17.2. The assumptions imply that the variables are i.i.d. across and have finite fourth moments. Thus by the WLLN

Assumption 17.2.3 implies

so they are mean zero. Assumptions 17.2.5 and 17.2.6 imply that has a finite covariance matrix . The assumptions for the CLT (Theorem 6.3) hold, thus

Together we find

as stated.

Asymptotic Distribution for Unbalanced Panels

In this section we extend the theory of the previous section to cover unbalanced panels under random selection. Our presentation is built on Section of Wooldridge (2010).

Think of an unbalanced panel as a shortened version of an idealized balanced panel where the shortening is due to “missing” observations due to random selection. Thus suppose that the underlying (potentially latent) variables are and . Let be a vector of selection indicators, meaning that if the time period is observed for individual and otherwise. Then we can describe the estimators algebraically as follows.

Let and , which is idempotent. The within transformations can be written as and . They have the property that if (so that time period is missing) then the element of and the row of are all zeros. The missing observations have been replaced by zeros. Consequently, they do not appear in matrix products and sums.

The fixed effects estimator of based on the observed sample is

Centered and normalized,

Notationally this appears to be identical to the case of a balanced panel but the difference is that the within operator incorporates the sample selection induced by the unbalanced panel structure.

To derive a distribution theory for the estimator we need to be explicit about the stochastic nature of the selection indicators. That is, why are some time periods observed and some not? We could take several approaches:

We could treat as fixed (non-random). This is the easiest approach but the most unsatisfactory.

We could treat as random but independent of . This is known as “missing at random” and is a common assumption used to justify methods with missing observations. It is justified when the reason why observations are not observed is independent of the observations. This is appropriate, for example, in panel data sets where individuals enter and exit in “waves”. The statistical treatment is not substantially different from the case of fixed .

We could treat as jointly random but impose a condition sufficient for consistent estimation of . This is the approach we take below. The condition turns out to be a form of mean independence. The advantage of this approach is that it is less restrictive than full independence. The disadvantage is that we must use a conditional mean restriction rather than uncorrelatedness to identify the coefficients.

The specific assumptions we impose are as follows.

Assumption 17.3

for with .

The variables , are independent and identically distributed.

.

.

.

.

The primary difference with Assumption 17.2 is that we have strengthened strict exogeneity to strict mean independence. This imposes that the regression model is properly specified and that selection does not affect the mean of the error. It is less restrictive than full independence since selection can affect other moments of the error and, more importantly, the assumption does not restrict the joint dependence between the selection indicators and the regressors.

Given the above development it is straightforward to establish asymptotic normality.

Theorem 17.3 Under Assumption 17.3, as where and .

We now prove Theorem 17.3. The assumptions imply that the variables are i.i.d. across and have finite fourth moments. By the WLLN

The random vectors are i.i.d. The matrix is a function of only. Assumption 17.3.3 and the law of iterated expectations implies

so that is mean zero. Assumptions 17.3.5 and 17.3.6 and the fact that is bounded implies that has a finite covariance matrix, which is . The assumptions for the CLT hold, thus

Together we obtain the stated result.

Heteroskedasticity-Robust Covariance Matrix Estimation

We have introduced two covariance matrix estimators for the fixed effects estimator. The classical estimator (17.36) is appropriate for the case where the idiosyncratic errors are homoskedastic and serially uncorrelated. The cluster-robust estimator (17.38) allows for heteroskedasticity and arbitrary serial correlation. In this and the following section we consider the intermediate case where is heteroskedastic but serially uncorrelated.

Assume that (17.18) and (17.26) hold but not necessarily (17.25). Define the conditional variances

Then . The covariance matrix (17.24) can be written as

A natural estimator of is . Replacing with in (17.56) and making a degree-of-freedom adjustment we obtain a White-type covariance matrix estimator

Following the insight of White (1980) it may seem appropriate to expect this estimator to be a reasonable estimator of the target covariance matrix. Unfortunately this is not the case, as discovered by Stock and Watson (2008). The problem is that the estimator is a function of the individual-specific means, whose estimation error is negligible only if the number of time series observations is large.

We can see this by a simple bias calculation. Assume that the sample is balanced and that the residuals are constructed with the true . Then

Using (17.26) and (17.55)

where . (See Exercise 17.10.) Using (17.57) and setting we obtain

Thus the estimator is biased of order $O(T^{-1})$. Unless $T \to \infty$ this bias will persist as $N \to \infty$. The estimator is unbiased in two contexts. The first is when the errors are homoskedastic. The second is when $T = 2$. (To show the latter requires some algebra so is omitted.)
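The source of the bias is the variance of the within-transformed error. The Python sketch below is a small exact check rather than a simulation. It assumes serially uncorrelated errors with period-specific variances and verifies that the variance of the demeaned error in period t equals ((T-2)/T) times the period-t variance plus the average variance divided by T. This is the assumed form of (17.57), and the variance values are hypothetical.

```python
import numpy as np

T = 5
sigma2 = np.array([0.5, 1.0, 2.0, 4.0, 8.0])   # hypothetical conditional variances

# Within (demeaning) operator for one individual with T periods
M = np.eye(T) - np.ones((T, T)) / T

# Exact variances of the demeaned errors: diagonal of M * diag(sigma2) * M
var_demeaned = np.diag(M @ np.diag(sigma2) @ M)

# Closed form assumed for (17.57)
formula = (T - 2) / T * sigma2 + sigma2.mean() / T

print(var_demeaned)
print(formula)
```

Each demeaned variance mixes the own-period variance with the average variance, so squared residuals are biased estimates of the period-specific variances unless the variances are equal or T is large.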

To correct the bias for the case $T > 2$, Stock and Watson (2008) proposed the estimator

You can check that and so is unbiased for . (See Exercise 17.11.)

Stock and Watson (2008) show that the corrected estimator is consistent with $T$ fixed and $N \to \infty$. In simulations they show that it has excellent performance.

Because of the Stock-Watson analysis Stata no longer calculates the heteroskedasticity-robust covariance matrix estimator when the fixed effects estimator is calculated using the xtreg command. Instead, the cluster-robust estimator is reported when robust standard errors are requested. However, fixed effects is often implemented using the areg command, which reports the biased heteroskedasticity-robust estimator if robust standard errors are requested. This leads to the practical recommendation that areg should be used with the cluster(id) option.

At present the corrected estimator (17.58) has not been programmed as a Stata option.

Heteroskedasticity-Robust Estimation - Unbalanced Case

A limitation with the bias-corrected robust covariance matrix estimator of Stock and Watson (2008) is that it was only derived for balanced panels. In this section we generalize their estimator to cover unbalanced panels.

The estimator is

where

To justify this estimator, as in the previous section make the simplifying assumption that the residuals are constructed with the true . We calculate that

You can show that under these assumptions, and thus is unbiased for . (See Exercise 17.12.)

In balanced panels the estimator simplifies to the Stock-Watson estimator (with ).

Hausman Test for Random vs Fixed Effects

The random effects model is a special case of the fixed effects model. Thus we can test the null hypothesis of random effects against the alternative of fixed effects. The Hausman test is typically used for this purpose. The statistic is a quadratic in the difference between the fixed effects and random effects estimators. The statistic is

where both and take the classical (non-robust) form.

The test can be implemented on a subset of the coefficients . In particular this needs to be done if the regressors contain time-invariant elements so that the random effects estimator contains more coefficients than the fixed effects estimator. In this case the test should be implemented only on the coefficients on the time-varying regressors.

An asymptotic level-$\alpha$ test rejects if the statistic exceeds the $1-\alpha$ quantile of the $\chi^2_q$ distribution where $q$ is the number of coefficients tested. If the test rejects, this is evidence that the individual effect is correlated with the regressors so the random effects model is not appropriate. On the other hand, if the test fails to reject, the random effects hypothesis cannot be rejected.
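The mechanics of the statistic can be sketched in a few lines of Python. The function name `hausman` and all numerical inputs below are hypothetical, chosen only so the arithmetic is easy to follow. The statistic is computed as the quadratic form in the coefficient difference with the difference of the two classical covariance matrices as the weight. The critical value 5.991 is the 95 percent quantile of the chi-square distribution with two degrees of freedom.

```python
import numpy as np

def hausman(b_fe, b_re, V_fe, V_re):
    """Quadratic form in (b_fe - b_re) weighted by inv(V_fe - V_re)."""
    d = np.asarray(b_fe, dtype=float) - np.asarray(b_re, dtype=float)
    V_diff = np.asarray(V_fe, dtype=float) - np.asarray(V_re, dtype=float)
    return float(d @ np.linalg.solve(V_diff, d))

# Hypothetical estimates on two time-varying regressors
b_fe = [1.0, 2.0]
b_re = [0.9, 2.1]
V_fe = np.diag([0.02, 0.03])    # classical covariance, fixed effects
V_re = np.diag([0.01, 0.02])    # classical covariance, random effects

H = hausman(b_fe, b_re, V_fe, V_re)

CRIT_5PCT_DF2 = 5.991           # chi-square(2) 95 percent quantile
reject = H > CRIT_5PCT_DF2
print(H, reject)
```

In this made-up example each coefficient difference contributes 0.01/0.01 = 1 to the statistic, so the statistic is about 2 and the test does not reject at the 5 percent level.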

It is tempting to use the Hausman test to select whether to use the fixed effects or random effects estimator. One could imagine using the random effects estimator if the Hausman test fails to reject the random effects hypothesis and using the fixed effects estimator otherwise. This is not, however, a wise approach. This procedure - selecting an estimator based on a test - is known as a pretest estimator and is biased. The bias arises because the result of the test is random and correlated with the estimators.

Instead, the Hausman test can be used as a specification test. If you are planning to use the random effects estimator (and believe that the random effects assumptions are appropriate in your context) the Hausman test can be used to check this assumption and provide evidence to support your approach.

Random Effects or Fixed Effects?

We have presented the random effects and fixed effects estimators of the regression coefficients. Which should be used in practice? How should we view the difference?

The basic distinction is that the random effects estimator requires the individual error to satisfy the conditional mean assumption (17.8). The fixed effects estimator does not require (17.8) and is robust to its violation. In particular, the individual effect can be arbitrarily correlated with the regressors. On the other hand the random effects estimator is efficient under random effects (Assumption 17.1). Current econometric practice is to prefer robustness over efficiency. Consequently, current practice is (nearly uniformly) to use the fixed effects estimator for linear panel data models. Random effects estimators are only used in contexts where fixed effects estimation is unknown or challenging (which occurs in many nonlinear models).

The labels “random effects” and “fixed effects” are misleading. These are labels which arose in the early literature and we are stuck with these labels today. In a previous era regressors were viewed as “fixed”. Viewing the individual effect as an unobserved regressor leads to the label of the individual effect as “fixed”. Today, we rarely refer to regressors as “fixed” when dealing with observational data. We view all variables as random. Consequently describing as “fixed” does not make much sense and it is hardly a contrast with the “random effect” label since under either assumption is treated as random. Once again, the labels are unfortunate but the key difference is whether is correlated with the regressors.

Time Trends

In general we expect that economic agents will experience common shocks during the same time period. For example, business cycle fluctuations, inflation, and interest rates affect all agents in the economy. Therefore it is often desirable to include time effects in a panel regression model.

The simplest specification is a linear time trend

For an introduction to time trends see Section 14.42. More flexible specifications (such as a quadratic) can also be used. For estimation it is appropriate to include the time trend as an element of the regressor vector and then apply fixed effects.

In some cases the time trends may be individual-specific. Series may be growing or declining at different rates. A linear time trend specification only extracts a common time trend. To allow for individual-specific time trends we need to include an interaction effect. This can be written as

In a fixed effects specification the coefficients are treated as possibly correlated with the regressors. To eliminate them from the model we treat them as unknown parameters and estimate all by least squares. By the FWL theorem the estimator for equals least squares of on where their elements are the residuals from the least squares regressions on a linear time trend fit separately for each individual and variable.
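The detrending recipe can be checked against the brute-force regression that includes a dummy and a dummy-by-trend interaction for every individual. The Python sketch below uses simulated data, and the function name `detrend_by_individual` is hypothetical. It requires at least three time periods per individual so that the individual-specific intercept and trend do not exhaust the data.

```python
import numpy as np

def detrend_by_individual(a, ids, t):
    """Residuals from regressing each individual's series on (1, t)."""
    a = np.asarray(a, dtype=float)
    out = np.empty_like(a)
    for g in np.unique(ids):
        m = ids == g
        Z = np.column_stack([np.ones(m.sum()), t[m]])
        coef, *_ = np.linalg.lstsq(Z, a[m], rcond=None)
        out[m] = a[m] - Z @ coef
    return out

# Simulated panel with individual-specific intercepts and trends (hypothetical)
rng = np.random.default_rng(7)
N, T, k = 15, 6, 2
ids = np.repeat(np.arange(N), T)
t = np.tile(np.arange(T, dtype=float), N)
u = rng.normal(size=N)[ids]
gamma = rng.normal(size=N)[ids]
X = rng.normal(size=(N * T, k)) + (u + gamma * t)[:, None]
y = X @ np.array([1.0, -2.0]) + u + gamma * t + rng.normal(size=N * T)

# Residual regression: detrend each variable for each individual, then regress
Xr = detrend_by_individual(X, ids, t)
yr = detrend_by_individual(y, ids, t)
beta_detrended = np.linalg.lstsq(Xr, yr, rcond=None)[0]

# Brute force: individual dummies and dummy-by-trend interactions
D = (ids[:, None] == np.arange(N)[None, :]).astype(float)
full = np.column_stack([X, D, D * t[:, None]])
beta_lsdv = np.linalg.lstsq(full, y, rcond=None)[0][:k]

print(beta_detrended, beta_lsdv)
```

The slope estimates from the two approaches coincide, as the FWL theorem implies, while the detrending approach avoids creating 2N nuisance regressors.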

Two-Way Error Components

In the previous section we discussed inclusion of time trends and individual-specific time trends. The functional forms imposed by linear time trends are restrictive. There is no economic reason to expect the “trend” of a series to be linear. Business cycle “trends” are cyclic. This suggests that it is desirable to be more flexible than a linear (or polynomial) specification. In this section we consider the most flexible specification where the trend is allowed to take any arbitrary shape but will require that it is common rather than individual-specific.

The model we consider is the two-way error component model

In this model is an unobserved individual-specific effect, is an unobserved time-specific effect, and is an idiosyncratic error.

The two-way model (17.63) can be handled either using random effects or fixed effects. In a random effects framework the errors and are modeled as in Assumption 17.1. When the panel is balanced the covariance matrix of the error vector is

When the panel is unbalanced a similar but cumbersome expression for (17.64) can be derived. This variance (17.64) can be used for GLS estimation of .

More typically (17.63) is handled using fixed effects. The two-way within transformation subtracts both individual-specific means and time-specific means to eliminate both and from the two-way model (17.63). For a variable we define the time-specific mean as follows. Let be the set of individuals for which the observation is included in the sample and let be the number of these individuals. Then the time-specific mean at time is

This is the average across all values of observed at time .

For the case of balanced panels the two-way within transformation is

where is the full-sample mean. If satisfies the two-way component model

then and . Hence

so the individual and time effects are eliminated.

The two-way within transformation applied to (17.63) yields

which is invariant to both and . The two-way within estimator is least squares applied to (17.66).
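For a balanced panel the two-way within transformation is a one-line array operation. The Python sketch below is an illustration on simulated data with rows sorted by individual and then by time. It subtracts individual means and time means and adds back the full-sample mean, then confirms that the resulting slopes match a regression with both individual and time dummies. The function name `two_way_within` is hypothetical.

```python
import numpy as np

# Simulated balanced panel with individual and time effects (hypothetical)
rng = np.random.default_rng(8)
N, T, k = 40, 5, 2
ids = np.repeat(np.arange(N), T)
time = np.tile(np.arange(T), N)
u = rng.normal(size=N)
v = rng.normal(size=T)
X = rng.normal(size=(N * T, k)) + u[ids][:, None] + v[time][:, None]
y = X @ np.array([1.0, 0.5]) + u[ids] + v[time] + rng.normal(size=N * T)

def two_way_within(a):
    """Subtract individual and time means, add back the overall mean."""
    A = a.reshape(N, T, -1)
    out = (A
           - A.mean(axis=1, keepdims=True)        # individual-specific means
           - A.mean(axis=0, keepdims=True)        # time-specific means
           + A.mean(axis=(0, 1), keepdims=True))  # full-sample mean
    return out.reshape(a.shape)

beta_two_way = np.linalg.lstsq(two_way_within(X), two_way_within(y), rcond=None)[0]

# Check: dummies for every individual and for all but one time period
D_i = (ids[:, None] == np.arange(N)[None, :]).astype(float)
D_t = (time[:, None] == np.arange(1, T)[None, :]).astype(float)
beta_lsdv = np.linalg.lstsq(np.column_stack([X, D_i, D_t]), y, rcond=None)[0][:k]

print(beta_two_way, beta_lsdv)
```

The agreement holds because the panel is balanced. With missing observations the simple double-demeaning formula no longer removes both sets of effects.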

For the unbalanced case there are two computational approaches to implement the estimator. Both are based on the realization that the estimator is equivalent to including dummy variables for all time periods. Let be a set of dummy variables where the indicates the time period. Thus the element of is 1 and the remaining elements are zero. Set as the vector of time fixed effects. Notice that . We can write the two-way model as

This is the dummy variable representation of the two-way error components model.

Model (17.67) can be estimated by one-way fixed effects with regressors and and coefficient vectors and . This can be implemented by standard one-way fixed effects methods including xtreg or areg in Stata. This produces estimates of the slopes as well as the time effects . To achieve identification one time dummy variable is omitted from so the estimated time effects are all relative to this baseline time period. This is the most common method in practice to estimate a two-way fixed effects model. As the number of time periods is typically modest this is a computationally attractive approach.

The second computational approach is to eliminate the time effects by residual regression. This is done by the following steps. First, subtract individual-specific means for (17.67). This yields

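Second, regress the within-transformed dependent variable on the within-transformed time dummies to obtain a residual, and regress each within-transformed regressor on the within-transformed time dummies to obtain residuals. Third, regress the residualized dependent variable on the residualized regressors to obtain the within estimator of the slope coefficients. These steps eliminate the fixed effects so the estimator is invariant to their value. What is important about this procedure is that the second step is not a within transformation across the time index but rather a standard regression.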
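The equivalence of the two computational approaches on an unbalanced panel can be verified with a short Python sketch. The data are simulated, the pattern of missing observations is an arbitrary deterministic choice, and the helper names `within` and `resid` are hypothetical.

```python
import numpy as np

def within(a, ids):
    """Subtract individual-specific means; works for unbalanced panels."""
    a = np.asarray(a, dtype=float)
    out = a.copy()
    for g in np.unique(ids):
        m = ids == g
        out[m] -= a[m].mean(axis=0)
    return out

def resid(a, B):
    """Least squares residuals of a on B (B may be rank deficient)."""
    coef, *_ = np.linalg.lstsq(B, a, rcond=None)
    return a - B @ coef

# Build an unbalanced panel by dropping some observations (hypothetical pattern)
rng = np.random.default_rng(9)
N, Tmax, k = 40, 5, 2
ids = np.repeat(np.arange(N), Tmax)
time = np.tile(np.arange(Tmax), N)
keep = ~((ids % 2 == 0) & (time == Tmax - 1)) & ~((ids % 3 == 0) & (time == 0))
ids, time = ids[keep], time[keep]

u = rng.normal(size=N)
v = rng.normal(size=Tmax)
X = rng.normal(size=(len(ids), k)) + u[ids][:, None] + v[time][:, None]
y = X @ np.array([1.0, -1.0]) + u[ids] + v[time] + rng.normal(size=len(ids))

# Step 1: subtract individual-specific means from y, X, and the time dummies
tau = (time[:, None] == np.arange(Tmax)[None, :]).astype(float)
yd, Xd, tau_d = within(y, ids), within(X, ids), within(tau, ids)

# Approach 1: one-way fixed effects with time dummies (one dummy omitted)
beta_dummies = np.linalg.lstsq(np.column_stack([Xd, tau_d[:, 1:]]), yd, rcond=None)[0][:k]

# Approach 2: residual regression on the within-transformed time dummies
beta_resid = np.linalg.lstsq(resid(Xd, tau_d), resid(yd, tau_d), rcond=None)[0]

print(beta_dummies, beta_resid)
```

Both approaches return the same slope estimates, so the choice between them is purely computational.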

If the two-way within estimator is used then the regressors cannot include any time-invariant variables or common time series variables . Both are eliminated by the two-way within transformation. Coefficients are only identified for regressors which have variation both across individuals and across time.

If desired, the relevance of the time effects can be tested by an exclusion test on the coefficients . If the test rejects the hypothesis of zero coefficients then this indicates that the time effects are relevant in the regression model.

The fixed effects estimator of (17.63) is invariant to the values of and , thus no assumptions need to be made concerning their stochastic properties.

To illustrate, the fourth column of Table presents fixed effects estimates of the investment equation, augmented to include year dummy indicators, and is thus a two-way fixed effects model. In this example the coefficient estimates and standard errors are not greatly affected by the inclusion of the year dummy variables.

Instrumental Variables

Take the fixed effects model

We say a regressor is exogenous for the idiosyncratic error if the two are uncorrelated, and endogenous if they are correlated. In Chapter 12 we discussed several economic examples of endogeneity and the same issues apply in the panel data context. The primary difference is that in the fixed effects model we only need to be concerned if the regressors are correlated with the idiosyncratic error, as correlation between the regressors and the individual effect is allowed.

As in Chapter 12 if the regressors are endogenous the fixed effects estimator will be biased and inconsistent for the structural coefficient . The standard approach to handling endogeneity is to specify instrumental variables which are both relevant (correlated with ) yet exogenous (uncorrelated with ).

Let $Z_{it}$ be an $\ell \times 1$ instrumental variable where $\ell \geq k$. As in the cross-section case, $Z_{it}$ may contain both included exogenous variables (variables in $X_{it}$ that are exogenous) and excluded exogenous variables (variables not in $X_{it}$). Let $\boldsymbol{Z}_i$ be the stacked instruments by individual and $\boldsymbol{Z}$ be the stacked instruments for the full sample.

The dummy variable formulation of the fixed effects model is $Y_{it} = X_{it}'\beta + d_i'u + \varepsilon_{it}$ where $d_i$ is an $N \times 1$ vector of dummy variables, one for each individual in the sample. The model in matrix notation for the full sample is

$$\boldsymbol{Y} = \boldsymbol{X}\beta + \boldsymbol{D}u + \boldsymbol{\varepsilon}. \tag{17.69}$$

An earlier theorem in this chapter shows that the fixed effects estimator for $\beta$ can be calculated by least squares estimation of (17.69). Thus the dummies $\boldsymbol{D}$ should be viewed as included exogenous variables. Consider 2SLS estimation of $\beta$ using the instruments $\boldsymbol{Z}$ for $\boldsymbol{X}$. Since $\boldsymbol{D}$ is an included exogenous variable it should also be used as an instrument. Thus 2SLS estimation of the fixed effects model (17.68) is algebraically 2SLS of the regression (17.69) of $\boldsymbol{Y}$ on $(\boldsymbol{X}, \boldsymbol{D})$ using the pair $(\boldsymbol{Z}, \boldsymbol{D})$ as instruments.

Since the dimension of $\boldsymbol{D}$ can be excessively large, as discussed in Section 17.11, it is advisable to use residual regression to compute the 2SLS estimator as we now describe.

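In Section 12.12, we described several alternative representations for the 2SLS estimator. The fifth (equation (12.32)) shows that the 2SLS estimator for $\beta$ equals

$$\widehat{\beta}_{\text{2sls}} = \left(\boldsymbol{X}'\boldsymbol{M}_D\boldsymbol{Z}\left(\boldsymbol{Z}'\boldsymbol{M}_D\boldsymbol{Z}\right)^{-1}\boldsymbol{Z}'\boldsymbol{M}_D\boldsymbol{X}\right)^{-1}\left(\boldsymbol{X}'\boldsymbol{M}_D\boldsymbol{Z}\left(\boldsymbol{Z}'\boldsymbol{M}_D\boldsymbol{Z}\right)^{-1}\boldsymbol{Z}'\boldsymbol{M}_D\boldsymbol{Y}\right)$$

where $\boldsymbol{M}_D = \boldsymbol{I}_n - \boldsymbol{D}(\boldsymbol{D}'\boldsymbol{D})^{-1}\boldsymbol{D}'$. The latter is the matrix within operator, thus $\boldsymbol{M}_D\boldsymbol{Y} = \dot{\boldsymbol{Y}}$, $\boldsymbol{M}_D\boldsymbol{X} = \dot{\boldsymbol{X}}$, and $\boldsymbol{M}_D\boldsymbol{Z} = \dot{\boldsymbol{Z}}$. It follows that the 2SLS estimator is

$$\widehat{\beta}_{\text{2sls}} = \left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{Z}}\left(\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Z}}\right)^{-1}\dot{\boldsymbol{Z}}'\dot{\boldsymbol{X}}\right)^{-1}\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{Z}}\left(\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Z}}\right)^{-1}\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Y}}\right).$$

This is convenient. It shows that the 2SLS estimator for the fixed effects model can be calculated by applying 2SLS to the within-transformed $Y_{it}$, $X_{it}$, and $Z_{it}$. The 2SLS residuals are $\widehat{\boldsymbol{e}} = \dot{\boldsymbol{Y}} - \dot{\boldsymbol{X}}\widehat{\beta}_{\text{2sls}}$.

This estimator can be obtained using the Stata command xtivreg with the fe option. It can also be obtained using the Stata command ivregress after making the within transformations.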
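The equivalence between the dummy variable formulation and the within-transformed formulation can be checked numerically. The sketch below uses simulated data with one endogenous regressor and two instruments; the design, the helper names `within` and `tsls`, and the balanced-panel layout are illustrative assumptions, not part of the text.

```python
import numpy as np

rng = np.random.default_rng(1)
N, T = 200, 5                              # hypothetical balanced panel
n = N * T
ids = np.repeat(np.arange(N), T)           # rows stacked by individual

u = rng.normal(size=N)[ids]                # individual effect
Z = rng.normal(size=(n, 2)) + u[:, None]   # instruments; correlation with u is allowed
e = rng.normal(size=n)                     # idiosyncratic error
# Regressor is endogenous: it loads on the idiosyncratic error e
X = (Z @ np.array([1.0, 0.5]) + u + 0.8 * e + rng.normal(size=n))[:, None]
Y = 2.0 * X[:, 0] + u + e                  # true beta = 2

def within(A, N, T):
    """Subtract individual-specific means (balanced panel, stacked by individual)."""
    B = A.reshape(N, T, -1)
    return (B - B.mean(axis=1, keepdims=True)).reshape(A.shape)

def tsls(Y, X, Z):
    """2SLS: least squares of Y on the projection of X onto the columns of Z."""
    Xhat = Z @ np.linalg.lstsq(Z, X, rcond=None)[0]
    return np.linalg.lstsq(Xhat, Y, rcond=None)[0]

# Route 1: 2SLS on within-transformed variables
beta_within = tsls(within(Y, N, T), within(X, N, T), within(Z, N, T))

# Route 2: 2SLS with individual dummies as both regressors and instruments
D = (ids[:, None] == np.arange(N)).astype(float)
beta_dummy = tsls(Y, np.hstack([X, D]), np.hstack([Z, D]))[:1]

print(beta_within, beta_dummy)
```

The second route builds an $n \times N$ dummy matrix, which is exactly the cost the residual-regression representation avoids.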

The presentation above focused for clarity on the one-way fixed effects model. There is no substantial change in the two-way fixed effects model

$$Y_{it} = X_{it}'\beta + u_i + v_t + \varepsilon_{it}.$$

The easiest way to estimate the two-way model is to add time-period dummies to the regression model and include these dummy variables as both regressors and instruments.

Identification with Instrumental Variables

To understand the identification of the structural slope coefficient $\beta$ in the fixed effects model it is necessary to examine the reduced form equation for the endogenous regressors $X_{it}$. This is

$$X_{it} = \Gamma' Z_{it} + W_i + \zeta_{it}$$

where $W_i$ is a $k \times 1$ vector of fixed effects for the $k$ regressors and $\zeta_{it}$ is an idiosyncratic error.

The coefficient matrix $\Gamma$ is the linear effect of $Z_{it}$ on $X_{it}$ holding the fixed effects $W_i$ constant. Thus $\Gamma$ has a similar interpretation as the coefficient $\beta$ in the fixed effects regression model. It is the effect of the variation in $Z_{it}$ about its individual-specific mean on $X_{it}$.

The 2SLS estimator is a function of the within-transformed variables. Applying the within transformation to the reduced form we find $\dot{X}_{it} = \Gamma'\dot{Z}_{it} + \dot{\zeta}_{it}$. This shows that $\Gamma$ is the effect of the within-transformed instruments on the regressors. If there is no time-variation in the within-transformed instruments, or there is no correlation between the instruments and the regressors after removing the individual-specific means, then the coefficient $\Gamma$ will be either not identified or singular. In either case the coefficient $\beta$ will not be identified.

Thus for identification of the fixed effects instrumental variables model we need

$$\operatorname{rank}\left(\mathbb{E}\left[\dot{\boldsymbol{Z}}_i'\dot{\boldsymbol{Z}}_i\right]\right) = \ell \tag{17.70}$$

and

$$\operatorname{rank}\left(\mathbb{E}\left[\dot{\boldsymbol{Z}}_i'\dot{\boldsymbol{X}}_i\right]\right) = k. \tag{17.71}$$

Condition (17.70) is the same as the condition for identification in fixed effects regression: the instruments must have full variation after the within transformation. Condition (17.71) is analogous to the relevance condition for identification of instrumental variable regression in the cross-section context but applies to the within-transformed instruments and regressors.

Condition (17.71) shows that to examine instrument validity in the context of fixed effects 2SLS it is important to estimate the reduced form equation using fixed effects (within) regression. Standard tests for instrument validity ($F$ tests on the excluded instruments) can be applied. However, since the correlation structure of the reduced form equation is in general unknown it is appropriate to use a cluster-robust covariance matrix, clustered at the level of the individual.

Asymptotic Distribution of Fixed Effects 2SLS Estimator

In this section we present an asymptotic distribution theory for the fixed effects 2SLS estimator. We provide a formal theory for the case of balanced panels and discuss an extension to the unbalanced case.

We use the following assumptions for balanced panels.

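Assumption 17.4

1. $Y_{it} = X_{it}'\beta + u_i + \varepsilon_{it}$ for $i = 1, \ldots, N$ and $t = 1, \ldots, T$ with $T \geq 2$.

2. The variables $(\boldsymbol{\varepsilon}_i, \boldsymbol{X}_i, \boldsymbol{Z}_i)$, $i = 1, \ldots, N$, are independent and identically distributed.

3. $\mathbb{E}[Z_{is}\varepsilon_{it}] = 0$ for all $s = 1, \ldots, T$.

4. $\boldsymbol{Q}_{ZZ} = \mathbb{E}\left[\dot{\boldsymbol{Z}}_i'\dot{\boldsymbol{Z}}_i\right] > 0$.

5. $\operatorname{rank}(\boldsymbol{Q}_{ZX}) = k$ where $\boldsymbol{Q}_{ZX} = \mathbb{E}\left[\dot{\boldsymbol{Z}}_i'\dot{\boldsymbol{X}}_i\right]$.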

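6. $\mathbb{E}\left[\varepsilon_{it}^4\right] < \infty$.

7. $\mathbb{E}\|X_{it}\|^2 < \infty$.

8. $\mathbb{E}\|Z_{it}\|^4 < \infty$.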

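Given Assumption 17.4 we can establish asymptotic normality for $\widehat{\beta}_{\text{2sls}}$.

Theorem 17.4 Under Assumption 17.4, as $N \to \infty$, $\sqrt{N}\left(\widehat{\beta}_{\text{2sls}} - \beta\right) \to_d \mathrm{N}(0, \boldsymbol{V}_\beta)$ where

$$\boldsymbol{V}_\beta = \left(\boldsymbol{Q}_{ZX}'\boldsymbol{Q}_{ZZ}^{-1}\boldsymbol{Q}_{ZX}\right)^{-1}\left(\boldsymbol{Q}_{ZX}'\boldsymbol{Q}_{ZZ}^{-1}\boldsymbol{\Omega}_{Z\varepsilon}\boldsymbol{Q}_{ZZ}^{-1}\boldsymbol{Q}_{ZX}\right)\left(\boldsymbol{Q}_{ZX}'\boldsymbol{Q}_{ZZ}^{-1}\boldsymbol{Q}_{ZX}\right)^{-1}, \qquad \boldsymbol{\Omega}_{Z\varepsilon} = \mathbb{E}\left[\dot{\boldsymbol{Z}}_i'\boldsymbol{\varepsilon}_i\boldsymbol{\varepsilon}_i'\dot{\boldsymbol{Z}}_i\right],$$

with $\boldsymbol{Q}_{ZZ}$ and $\boldsymbol{Q}_{ZX}$ the moment matrices of Assumptions 17.4.4 and 17.4.5.

The proof of the result is similar to that of the analogous theorem for fixed effects regression, so it is omitted. The key condition is Assumption 17.4.3, which states that the instruments are strictly exogenous for the idiosyncratic errors. The identification conditions are Assumptions 17.4.4 and 17.4.5, which were discussed in the previous section.

The theorem is stated for balanced panels. For unbalanced panels we can modify the theorem, as was done for fixed effects regression, by adding the selection indicators $\boldsymbol{s}_i$ and replacing Assumption 17.4.3 with $\mathbb{E}[\varepsilon_{it} \mid \boldsymbol{Z}_i, \boldsymbol{s}_i] = 0$, which states that the idiosyncratic errors are mean independent of the instruments and selection.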

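If the idiosyncratic errors are homoskedastic and serially uncorrelated then the covariance matrix simplifies to

$$\boldsymbol{V}_\beta = \left(\boldsymbol{Q}_{ZX}'\boldsymbol{Q}_{ZZ}^{-1}\boldsymbol{Q}_{ZX}\right)^{-1}\sigma_\varepsilon^2.$$

In this case a classical homoskedastic covariance matrix estimator can be used. Otherwise a cluster-robust covariance matrix estimator can be used, and takes the form

$$\widehat{\boldsymbol{V}}_{\widehat{\beta}} = \left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{Z}}\left(\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Z}}\right)^{-1}\dot{\boldsymbol{Z}}'\dot{\boldsymbol{X}}\right)^{-1}\dot{\boldsymbol{X}}'\dot{\boldsymbol{Z}}\left(\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Z}}\right)^{-1}\left(\sum_{i=1}^{N}\dot{\boldsymbol{Z}}_i'\widehat{\boldsymbol{\varepsilon}}_i\widehat{\boldsymbol{\varepsilon}}_i'\dot{\boldsymbol{Z}}_i\right)\left(\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Z}}\right)^{-1}\dot{\boldsymbol{Z}}'\dot{\boldsymbol{X}}\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{Z}}\left(\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Z}}\right)^{-1}\dot{\boldsymbol{Z}}'\dot{\boldsymbol{X}}\right)^{-1}.$$

As for the case of fixed effects regression, the heteroskedasticity-robust covariance matrix estimator is not recommended due to bias when $T$ is small, and a bias-corrected version has not been developed.

The Stata command xtivreg, fe by default reports the classical homoskedastic covariance matrix estimator. To obtain a cluster-robust covariance matrix use option vce(robust) or vce(cluster id).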
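A cluster-robust covariance matrix for fixed effects 2SLS can be computed directly from the within-transformed variables. The following is a minimal sketch on simulated data with serially correlated idiosyncratic errors; the design and variable names are illustrative assumptions, and no finite-sample adjustment is applied, so the numbers will generally differ somewhat from those reported by software that applies such adjustments.

```python
import numpy as np

rng = np.random.default_rng(2)
N, T = 300, 4                                # hypothetical balanced panel
n = N * T

u = np.repeat(rng.normal(size=N), T)         # individual effect, stacked by individual
Z = rng.normal(size=(n, 2)) + u[:, None]     # two instruments

# AR(1) idiosyncratic errors within each individual (serial correlation)
shocks = rng.normal(size=(N, T))
E = np.empty((N, T))
E[:, 0] = shocks[:, 0]
for t in range(1, T):
    E[:, t] = 0.6 * E[:, t - 1] + shocks[:, t]
e = E.ravel()

X = (Z @ np.array([1.0, 0.5]) + u + 0.8 * e + rng.normal(size=n))[:, None]
Y = 2.0 * X[:, 0] + u + e                    # true beta = 2

def within(A, N, T):
    """Subtract individual-specific means (balanced panel, stacked by individual)."""
    B = A.reshape(N, T, -1)
    return (B - B.mean(axis=1, keepdims=True)).reshape(A.shape)

Yd, Xd, Zd = within(Y, N, T), within(X, N, T), within(Z, N, T)

# 2SLS on within-transformed data
A = Xd.T @ Zd @ np.linalg.inv(Zd.T @ Zd)     # X'Z (Z'Z)^{-1}
bread = np.linalg.inv(A @ Zd.T @ Xd)         # (X'Z (Z'Z)^{-1} Z'X)^{-1}
beta = bread @ A @ Zd.T @ Yd
ehat = Yd - Xd @ beta                        # 2SLS residuals

# Sum over individuals of Z_i' e_i e_i' Z_i, built from per-individual scores
scores = (Zd * ehat[:, None]).reshape(N, T, -1).sum(axis=1)   # row i is Z_i' e_i
meat = scores.T @ scores

V_cluster = bread @ A @ meat @ A.T @ bread   # covariance estimate for beta-hat
se_cluster = np.sqrt(np.diag(V_cluster))
print(beta, se_cluster)
```

Summing the scores within each individual before forming the outer product is what allows arbitrary correlation of the errors across time periods for the same individual.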

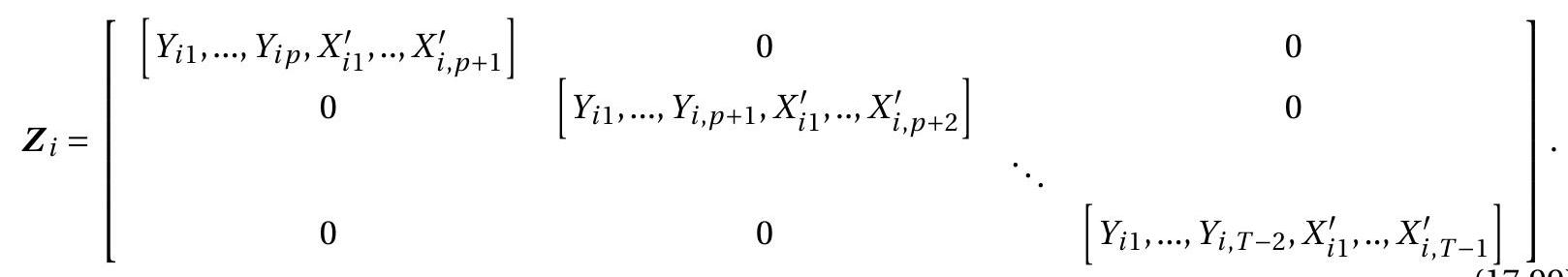

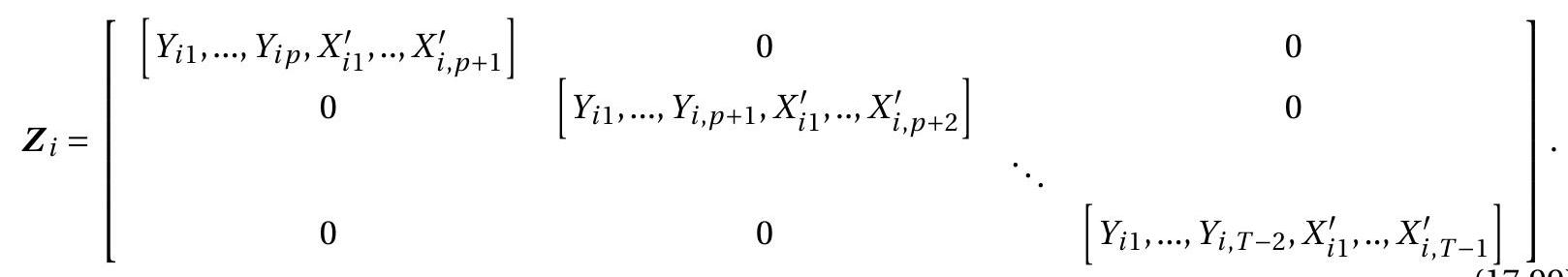

Linear GMM

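Consider the just-identified 2SLS estimator. It solves the equation $\dot{\boldsymbol{Z}}'\left(\dot{\boldsymbol{Y}} - \dot{\boldsymbol{X}}\beta\right) = 0$. These are sample analogs of the population moment condition $\mathbb{E}\left[\dot{\boldsymbol{Z}}_i'\left(\dot{\boldsymbol{Y}}_i - \dot{\boldsymbol{X}}_i\beta\right)\right] = 0$. These population conditions hold at the true $\beta$ because $\dot{\boldsymbol{Z}}_i'\left(\dot{\boldsymbol{Y}}_i - \dot{\boldsymbol{X}}_i\beta\right) = \dot{\boldsymbol{Z}}_i'\boldsymbol{M}_i\left(\boldsymbol{1}_i u_i + \boldsymbol{\varepsilon}_i\right) = \dot{\boldsymbol{Z}}_i'\boldsymbol{\varepsilon}_i$ as $\boldsymbol{1}_i u_i$ lies in the null space of the within operator $\boldsymbol{M}_i$, and $\mathbb{E}\left[\dot{\boldsymbol{Z}}_i'\boldsymbol{\varepsilon}_i\right] = 0$ is implied by Assumption 17.4.3.

The population orthogonality conditions hold in the overidentified case as well. In this case an alternative to 2SLS is GMM. Let $\widehat{\boldsymbol{W}}$ be an estimator of $\boldsymbol{W} = \mathbb{E}\left[\dot{\boldsymbol{Z}}_i'\boldsymbol{\varepsilon}_i\boldsymbol{\varepsilon}_i'\dot{\boldsymbol{Z}}_i\right]$, for example

$$\widehat{\boldsymbol{W}} = \frac{1}{N}\sum_{i=1}^{N}\dot{\boldsymbol{Z}}_i'\widehat{\boldsymbol{\varepsilon}}_i\widehat{\boldsymbol{\varepsilon}}_i'\dot{\boldsymbol{Z}}_i \tag{17.72}$$

where $\widehat{\boldsymbol{\varepsilon}}_i$ are the 2SLS fixed effects residuals. The GMM fixed effects estimator is

$$\widehat{\beta}_{\text{gmm}} = \left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{Z}}\widehat{\boldsymbol{W}}^{-1}\dot{\boldsymbol{Z}}'\dot{\boldsymbol{X}}\right)^{-1}\left(\dot{\boldsymbol{X}}'\dot{\boldsymbol{Z}}\widehat{\boldsymbol{W}}^{-1}\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Y}}\right). \tag{17.73}$$

The estimator (17.73)-(17.72) does not have a Stata command but can be obtained by generating the within-transformed variables $\dot{\boldsymbol{X}}$, $\dot{\boldsymbol{Z}}$, and $\dot{\boldsymbol{Y}}$, and then estimating by GMM a regression of $\dot{\boldsymbol{Y}}$ on $\dot{\boldsymbol{X}}$ using $\dot{\boldsymbol{Z}}$ as instruments, with a weight matrix clustered by individual.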
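The two-step construction (2SLS residuals, clustered weight matrix, GMM) can be sketched as follows on simulated overidentified data. The function name `gmm`, the design with three instruments and one regressor, and the balanced-panel layout are illustrative assumptions. The sketch also uses the standard fact that linear GMM with weight matrix $\dot{\boldsymbol{Z}}'\dot{\boldsymbol{Z}}$ reproduces 2SLS.

```python
import numpy as np

rng = np.random.default_rng(3)
N, T, L = 300, 4, 3                          # hypothetical: 3 instruments, 1 regressor
n = N * T

u = np.repeat(rng.normal(size=N), T)         # individual effect, stacked by individual
Z = rng.normal(size=(n, L)) + u[:, None]
# Heteroskedastic idiosyncratic error (scale depends on the first instrument)
e = rng.normal(size=n) * (0.5 + np.abs(Z[:, 0]))
X = (Z @ np.array([1.0, 0.5, 0.5]) + u + 0.5 * e + rng.normal(size=n))[:, None]
Y = 2.0 * X[:, 0] + u + e                    # true beta = 2

def within(A, N, T):
    """Subtract individual-specific means (balanced panel, stacked by individual)."""
    B = A.reshape(N, T, -1)
    return (B - B.mean(axis=1, keepdims=True)).reshape(A.shape)

def gmm(Y, X, Z, W):
    """Linear GMM estimator (X'Z W^{-1} Z'X)^{-1} (X'Z W^{-1} Z'Y)."""
    XZ_Winv = X.T @ Z @ np.linalg.inv(W)
    return np.linalg.solve(XZ_Winv @ Z.T @ X, XZ_Winv @ Z.T @ Y)

Yd, Xd, Zd = within(Y, N, T), within(X, N, T), within(Z, N, T)

# First step: 2SLS (GMM with weight matrix Z'Z) and its residuals
beta_2sls = gmm(Yd, Xd, Zd, Zd.T @ Zd)
ehat = Yd - Xd @ beta_2sls

# Clustered weight matrix: average over individuals of Z_i' e_i e_i' Z_i
scores = (Zd * ehat[:, None]).reshape(N, T, -1).sum(axis=1)
W_hat = scores.T @ scores / N

# Second step: GMM with the clustered weight matrix
beta_gmm = gmm(Yd, Xd, Zd, W_hat)
print(beta_2sls, beta_gmm)
```

In the just-identified case the weight matrix cancels and the two estimates coincide; they differ only when there are more instruments than regressors, as here.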

Estimation with Time-Invariant Regressors

One of the disappointments with the fixed effects estimator is that it cannot estimate the effect of regressors which are time-invariant. They are not identified separately from the fixed effect and are eliminated by the within transformation. In contrast, the random effects estimator allows for time-invariant regressors but does so only by assuming strict exogeneity which is stronger than typically desired in economic applications.

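It turns out that we can consider an intermediate case which maintains the fixed effects assumptions for the time-varying regressors but uses stronger assumptions on the time-invariant regressors. For our exposition we will denote the time-varying regressors by the vector $X_{it}$ and the time-invariant regressors by the vector $Z_i$.

Consider the linear regression model

$$Y_{it} = X_{it}'\beta + Z_i'\gamma + u_i + \varepsilon_{it}. \tag{17.74}$$

At the level of the individual this can be written as

$$\boldsymbol{Y}_i = \boldsymbol{X}_i\beta + \boldsymbol{Z}_i\gamma + \boldsymbol{1}_i u_i + \boldsymbol{\varepsilon}_i$$

where $\boldsymbol{Z}_i = \boldsymbol{1}_i Z_i'$. For the full sample in matrix notation we can write this as

$$\boldsymbol{Y} = \boldsymbol{X}\beta + \boldsymbol{Z}\gamma + \boldsymbol{u} + \boldsymbol{\varepsilon}.$$

We maintain the assumption that the idiosyncratic errors $\varepsilon_{it}$ are uncorrelated with both $X_{it}$ and $Z_i$ at all time horizons:

$$\mathbb{E}[X_{is}\varepsilon_{it}] = 0 \tag{17.75}$$

$$\mathbb{E}[Z_i\varepsilon_{it}] = 0. \tag{17.76}$$

In this section we consider the case where $Z_i$ is uncorrelated with the individual-level error $u_i$, thus

$$\mathbb{E}[Z_i u_i] = 0 \tag{17.77}$$

but the correlation of $X_{it}$ and $u_i$ is left unrestricted. In this context we say that $Z_i$ is exogenous with respect to the fixed effect $u_i$ while $X_{it}$ is endogenous with respect to $u_i$. Note that this is a different type of endogeneity than considered in the sections on instrumental variables: there endogeneity meant correlation with the idiosyncratic error $\varepsilon_{it}$. Here endogeneity means correlation with the fixed effect $u_i$.

We consider estimation of (17.74) by instrumental variables and thus need instruments which are uncorrelated with the error $u_i + \varepsilon_{it}$. The time-invariant regressors $Z_i$ satisfy this condition due to (17.76) and (17.77), thus

$$\mathbb{E}[Z_i(u_i + \varepsilon_{it})] = 0.$$

While the time-varying regressors $X_{it}$ are correlated with $u_i$, the within-transformed variables $\dot{X}_{it}$ sum to zero within each individual (so $\dot{\boldsymbol{X}}_i'\boldsymbol{1}_i = 0$) and are uncorrelated with $\varepsilon_{it}$ under (17.75), thus

$$\mathbb{E}\left[\dot{\boldsymbol{X}}_i'\left(\boldsymbol{1}_i u_i + \boldsymbol{\varepsilon}_i\right)\right] = 0.$$

Therefore we can estimate $(\beta, \gamma)$ by instrumental variable regression using the instrument set $(\dot{\boldsymbol{X}}, \boldsymbol{Z})$. Specifically, this is a regression of $\boldsymbol{Y}$ on $\boldsymbol{X}$ and $\boldsymbol{Z}$, treating $\boldsymbol{X}$ as endogenous, $\boldsymbol{Z}$ as exogenous, and using the instrument $\dot{\boldsymbol{X}}$. Write this estimator as $(\widehat{\beta}, \widehat{\gamma})$. This can be implemented using the Stata ivregress command after constructing the within-transformed $\dot{\boldsymbol{X}}$.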

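This instrumental variables estimator is algebraically equal to a simple two-step estimator. The first step $\widehat{\beta} = \widehat{\beta}_{\text{fe}}$ is the fixed effects estimator. The second step sets $\widehat{\gamma} = (\boldsymbol{Z}'\boldsymbol{Z})^{-1}\boldsymbol{Z}'\widehat{\boldsymbol{u}}$, the least squares coefficient from the regression of the estimated fixed effect $\widehat{u}_i$ on $Z_i$. To see this equivalence observe that the instrumental variables estimator solves the sample moment equations

$$\dot{\boldsymbol{X}}'\left(\boldsymbol{Y} - \boldsymbol{X}\beta - \boldsymbol{Z}\gamma\right) = 0 \tag{17.78}$$

$$\boldsymbol{Z}'\left(\boldsymbol{Y} - \boldsymbol{X}\beta - \boldsymbol{Z}\gamma\right) = 0. \tag{17.79}$$

Notice that $\dot{\boldsymbol{X}}'\boldsymbol{Z} = 0$ so $\dot{\boldsymbol{X}}'\left(\boldsymbol{Y} - \boldsymbol{X}\beta - \boldsymbol{Z}\gamma\right) = \dot{\boldsymbol{X}}'\left(\dot{\boldsymbol{Y}} - \dot{\boldsymbol{X}}\beta\right)$. Thus (17.78) is the same as $\dot{\boldsymbol{X}}'\left(\dot{\boldsymbol{Y}} - \dot{\boldsymbol{X}}\beta\right) = 0$ whose solution is $\widehat{\beta}_{\text{fe}}$. Plugging this into the left side of (17.79) we obtain

$$\boldsymbol{Z}'\left(\boldsymbol{Y} - \boldsymbol{X}\widehat{\beta}_{\text{fe}} - \boldsymbol{Z}\gamma\right) = \boldsymbol{Z}'\left(\overline{\boldsymbol{Y}} - \overline{\boldsymbol{X}}\widehat{\beta}_{\text{fe}} - \boldsymbol{Z}\gamma\right) = \boldsymbol{Z}'\left(\widehat{\boldsymbol{u}} - \boldsymbol{Z}\gamma\right)$$

where $\overline{\boldsymbol{Y}}$ and $\overline{\boldsymbol{X}}$ are the stacked individual means and $\widehat{\boldsymbol{u}} = \overline{\boldsymbol{Y}} - \overline{\boldsymbol{X}}\widehat{\beta}_{\text{fe}}$. Setting this equal to 0 and solving we obtain the least squares estimator $\widehat{\gamma} = (\boldsymbol{Z}'\boldsymbol{Z})^{-1}\boldsymbol{Z}'\widehat{\boldsymbol{u}}$ as claimed. This equivalence was first observed by Hausman and Taylor (1981).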
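The algebraic equivalence between the instrumental variables estimator and the two-step estimator can be verified numerically. The sketch below assumes a balanced panel and a simulated design in which the time-varying regressors are correlated with the individual effect while the time-invariant regressors (a constant and one covariate) are not; all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
N, T = 100, 4                                  # hypothetical balanced panel
n = N * T

u_i = rng.normal(size=N)                       # individual effect
u = np.repeat(u_i, T)                          # stacked by individual
Zi = np.column_stack([np.ones(N), rng.normal(size=N)])   # time-invariant, independent of u_i
Zs = np.repeat(Zi, T, axis=0)                  # stacked to n rows
X = rng.normal(size=(n, 2)) + u[:, None]       # time-varying, correlated with u_i
Y = X @ np.array([1.0, -1.0]) + Zs @ np.array([0.5, 2.0]) + u + rng.normal(size=n)

def within(A, N, T):
    """Subtract individual-specific means (balanced panel, stacked by individual)."""
    B = A.reshape(N, T, -1)
    return (B - B.mean(axis=1, keepdims=True)).reshape(A.shape)

Xd, Yd = within(X, N, T), within(Y, N, T)

# Just-identified IV: regressors (X, Z), instruments (within-transformed X, Z)
G = np.hstack([Xd, Zs])
R = np.hstack([X, Zs])
theta_iv = np.linalg.solve(G.T @ R, G.T @ Y)   # first 2 entries: beta; last 2: gamma

# Two-step estimator
beta_fe = np.linalg.lstsq(Xd, Yd, rcond=None)[0]          # step 1: fixed effects
u_hat = (Y - X @ beta_fe).reshape(N, T).mean(axis=1)      # estimated fixed effect per individual
gamma_2step = np.linalg.lstsq(Zi, u_hat, rcond=None)[0]   # step 2: regress on Z_i

print(theta_iv, beta_fe, gamma_2step)
```

Note that the estimated fixed effect here is the individual mean of $Y_{it} - X_{it}'\widehat{\beta}_{\text{fe}}$, so it absorbs $Z_i'\gamma$; that is what makes the second-step regression informative about $\gamma$.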

For standard error calculation it is recommended to estimate $(\beta, \gamma)$ jointly by instrumental variable regression and use a cluster-robust covariance matrix clustered at the individual level. Classical and heteroskedasticity-robust estimators are misspecified due to the individual-specific effect $u_i$.

The estimator is a special case of the Hausman-Taylor estimator described in the next section. (For an unknown reason the above estimator cannot be estimated using Stata’s xthtaylor command.)

Hausman-Taylor Model

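Hausman and Taylor (1981) consider a generalization of the previous model. Their model is

$$Y_{it} = X_{1it}'\beta_1 + X_{2it}'\beta_2 + Z_{1i}'\gamma_1 + Z_{2i}'\gamma_2 + u_i + \varepsilon_{it}$$

where $X_{1it}$ and $X_{2it}$ are time-varying and $Z_{1i}$ and $Z_{2i}$ are time-invariant. Let the dimensions of $X_{1it}$, $X_{2it}$, $Z_{1i}$, and $Z_{2i}$ be $k_1$, $k_2$, $\ell_1$, and $\ell_2$, respectively.

Write the model in matrix notation as

$$\boldsymbol{Y} = \boldsymbol{X}_1\beta_1 + \boldsymbol{X}_2\beta_2 + \boldsymbol{Z}_1\gamma_1 + \boldsymbol{Z}_2\gamma_2 + \boldsymbol{u} + \boldsymbol{\varepsilon}. \tag{17.80}$$

Let $\overline{\boldsymbol{X}}_1$ and $\overline{\boldsymbol{X}}_2$ denote conformable matrices of individual-specific means and let $\dot{\boldsymbol{X}}_1 = \boldsymbol{X}_1 - \overline{\boldsymbol{X}}_1$ and $\dot{\boldsymbol{X}}_2 = \boldsymbol{X}_2 - \overline{\boldsymbol{X}}_2$ denote the within-transformed variables.

The Hausman-Taylor model assumes that all regressors are uncorrelated with the idiosyncratic error $\varepsilon_{it}$ at all time horizons and that $X_{1it}$ and $Z_{1i}$ are exogenous with respect to the fixed effect $u_i$ so that

$$\mathbb{E}[X_{1it}u_i] = 0, \qquad \mathbb{E}[Z_{1i}u_i] = 0.$$

The regressors $X_{2it}$ and $Z_{2i}$, however, are allowed to be correlated with $u_i$.

Set $\boldsymbol{X} = (\boldsymbol{X}_1, \boldsymbol{X}_2, \boldsymbol{Z}_1, \boldsymbol{Z}_2)$ and $\beta = (\beta_1', \beta_2', \gamma_1', \gamma_2')'$. The assumptions imply the following population moment conditions

$$\mathbb{E}\left[\dot{\boldsymbol{X}}_1'(\boldsymbol{Y} - \boldsymbol{X}\beta)\right] = 0, \quad \mathbb{E}\left[\dot{\boldsymbol{X}}_2'(\boldsymbol{Y} - \boldsymbol{X}\beta)\right] = 0, \quad \mathbb{E}\left[\overline{\boldsymbol{X}}_1'(\boldsymbol{Y} - \boldsymbol{X}\beta)\right] = 0, \quad \mathbb{E}\left[\boldsymbol{Z}_1'(\boldsymbol{Y} - \boldsymbol{X}\beta)\right] = 0.$$

There are $2k_1 + k_2 + \ell_1$ moment conditions and $k_1 + k_2 + \ell_1 + \ell_2$ coefficients. Identification requires $k_1 \geq \ell_2$: that there are at least as many exogenous time-varying regressors as endogenous time-invariant regressors. (This includes the model of the previous section where $k_1 = \ell_2 = 0$.) Given the moment conditions the coefficients can be estimated by 2SLS regression of (17.80) using the instruments $(\dot{\boldsymbol{X}}_1, \dot{\boldsymbol{X}}_2, \overline{\boldsymbol{X}}_1, \boldsymbol{Z}_1)$ or equivalently $(\boldsymbol{X}_1, \dot{\boldsymbol{X}}_2, \overline{\boldsymbol{X}}_1, \boldsymbol{Z}_1)$. This is 2SLS regression treating $\boldsymbol{X}_1$ and $\boldsymbol{Z}_1$ as exogenous and $\boldsymbol{X}_2$ and $\boldsymbol{Z}_2$ as endogenous using the excluded instruments $\dot{\boldsymbol{X}}_2$ and $\overline{\boldsymbol{X}}_1$.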

It is recommended to use cluster-robust covariance matrix estimation clustered at the individual level. Neither conventional nor heteroskedasticity-robust covariance matrix estimators should be used as they are misspecified due to the individual-specific effect $u_i$.

When the model is just-identified the estimators simplify as follows. $\widehat{\beta}_1$ and $\widehat{\beta}_2$ are the fixed effects estimator. $\widehat{\gamma}_1$ and $\widehat{\gamma}_2$ equal the 2SLS estimator from a regression of the estimated fixed effect $\widehat{\boldsymbol{u}}$ on $\boldsymbol{Z}_1$ and $\boldsymbol{Z}_2$ using $\overline{\boldsymbol{X}}_1$ as an instrument for $\boldsymbol{Z}_2$. (See Exercise 17.14.)

When the model is over-identified the equation can also be estimated by GMM with a cluster-robust weight matrix using the same equations and instruments.

This estimator with cluster-robust standard errors can be calculated using the Stata ivregress cluster(id) command after constructing the transformed variables $\dot{\boldsymbol{X}}_2$ and $\overline{\boldsymbol{X}}_1$.
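The 2SLS version of the estimator, and its simplification in the just-identified case, can be illustrated with a short simulation. The sketch below assumes a balanced panel with one exogenous and one endogenous time-varying regressor, two exogenous time-invariant regressors (including a constant), and one endogenous time-invariant regressor, so that the model is just-identified. The design and all names are hypothetical, and the code computes point estimates only.

```python
import numpy as np

rng = np.random.default_rng(5)
N, T = 150, 4                                   # hypothetical balanced panel
n = N * T
rep = lambda a: np.repeat(a, T, axis=0)         # stack an individual-level array to n rows

a_i = rng.normal(size=N)                        # persistent part of X1, independent of u_i
u_i = rng.normal(size=N)                        # individual effect
X1 = (rep(a_i) + rng.normal(size=n))[:, None]   # time-varying, exogenous for u_i
X2 = (rep(u_i) + rng.normal(size=n))[:, None]   # time-varying, correlated with u_i
Z1i = np.column_stack([np.ones(N), rng.normal(size=N)])   # time-invariant, exogenous
Z2i = (a_i + u_i + rng.normal(size=N))[:, None]            # time-invariant, correlated with u_i
X = np.hstack([X1, X2])
Y = (X @ np.array([1.0, -1.0]) + rep(Z1i) @ np.array([0.5, 2.0])
     + 1.5 * rep(Z2i)[:, 0] + rep(u_i) + rng.normal(size=n))

def within(A, N, T):
    """Subtract individual-specific means (balanced panel, stacked by individual)."""
    B = A.reshape(N, T, -1)
    return (B - B.mean(axis=1, keepdims=True)).reshape(A.shape)

def tsls(Y, X, Z):
    """2SLS: least squares of Y on the projection of X onto the columns of Z."""
    Xhat = Z @ np.linalg.lstsq(Z, X, rcond=None)[0]
    return np.linalg.lstsq(Xhat, Y, rcond=None)[0]

Xd, Yd = within(X, N, T), within(Y, N, T)
X1bar = X1 - within(X1, N, T)                   # stacked individual means of X1

# 2SLS with instruments (within X1, within X2, mean of X1, Z1)
R = np.hstack([X, rep(Z1i), rep(Z2i)])          # regressors: X1, X2, Z1, Z2
G = np.hstack([Xd, X1bar, rep(Z1i)])            # instruments
theta_ht = tsls(Y, R, G)

# Just-identified simplification: fixed effects, then IV on individual-level data
beta_fe = np.linalg.lstsq(Xd, Yd, rcond=None)[0]
u_hat = (Y - X @ beta_fe).reshape(N, T).mean(axis=1)
Zi = np.hstack([Z1i, Z2i])                      # regressors in the second step
Gi = np.hstack([X1bar.reshape(N, T)[:, :1], Z1i])   # instruments: mean of X1, Z1
gamma_iv = np.linalg.solve(Gi.T @ Zi, Gi.T @ u_hat)

print(theta_ht, beta_fe, gamma_iv)
```

The first two entries of `theta_ht` coincide with the fixed effects estimates and the remaining entries with the second-step instrumental variables estimates, in line with the just-identified simplification stated above.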

The 2SLS estimator described above corresponds with the Hausman and Taylor (1981) estimator in the just-identified case with a balanced panel.

Hausman and Taylor derived their estimator under the stronger assumption that the errors and are strictly mean independent and homoskedastic and consequently proposed a GLS-type estimator which is more efficient when these assumptions are correct. Define where and and are the variances of the error components and . Define as well the transformed variables and . The Hausman-Taylor estimator is

Recall from (17.47) that where is defined in (17.46). Thus

It follows that the Hausman-Taylor estimator can be calculated by 2SLS regression of on using the instruments

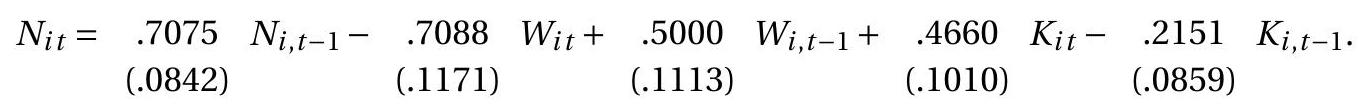

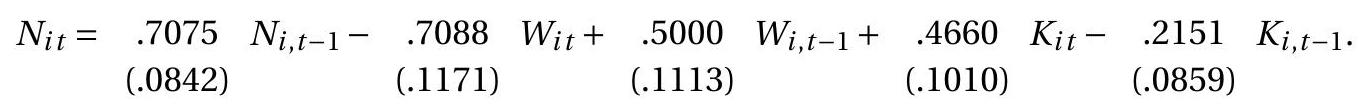

When the panel is balanced the coefficients all equal and scale out from the instruments. Thus the estimator can be calculated by 2SLS regression of on using the instruments