Introduction

We now turn to nonparametric estimation of the conditional expectation function (CEF)

$$\mathbb{E}\left[Y \mid X=x\right]=m(x).$$

Unless an economic model restricts the form of $m(x)$ to a parametric function, $m(x)$ can take any nonlinear shape and is therefore nonparametric. In this chapter we discuss nonparametric kernel smoothing estimators of $m(x)$. These are related to the nonparametric density estimators of Chapter 17 of Probability and Statistics for Economists. In Chapter 20 of this textbook we explore estimation by series methods.

There are many excellent monographs written on nonparametric regression estimation, including Härdle (1990), Fan and Gijbels (1996), Pagan and Ullah (1999), and Li and Racine (2007).

To get started, suppose that there is a single real-valued regressor $X$. We consider the case of vector-valued regressors later. The nonparametric regression model is

$$\begin{aligned}
Y &= m(X)+e\\
\mathbb{E}\left[e \mid X\right] &= 0\\
\mathbb{E}\left[e^{2} \mid X\right] &= \sigma^{2}(X).
\end{aligned}$$

We assume that we have $n$ observations for the pair $(Y, X)$. The goal is to estimate $m(x)$ either at a single point $x$ or at a set of points. For most of our theory we focus on estimation at a single point $x$ which is in the interior of the support of $X$.

In addition to the conventional regression assumptions we assume that both $m(x)$ and $f(x)$ (the marginal density of $X$) are continuous in $x$. For our theoretical treatment we assume that the observations are i.i.d. The methods extend to dependent observations but the theory is more advanced. See Fan and Yao (2003). We discuss clustered observations in Section 19.20.

Binned Means Estimator

For clarity, fix the point $x$ and consider estimation of $m(x)$. This is the expectation of $Y$ for random pairs $(Y, X)$ such that $X=x$. If the distribution of $X$ were discrete then we could estimate $m(x)$ by taking the average of the sub-sample of observations for which $X_i=x$. But when $X$ is continuous then the probability is zero that $X_i$ exactly equals $x$. So there is no sub-sample of observations with $X_i=x$ and this estimation idea is infeasible. However, if $m(x)$ is continuous then it should be possible to get a good approximation by taking the average of the observations for which $X_i$ is close to $x$, perhaps for the observations for which $\left|X_i-x\right| \le h$ for some small $h>0$. As for the case of density estimation we call $h$ a bandwidth. This binned means estimator can be written as

$$\widehat{m}(x)=\frac{\sum_{i=1}^{n} \mathbb{1}\left\{\left|X_i-x\right| \le h\right\} Y_i}{\sum_{i=1}^{n} \mathbb{1}\left\{\left|X_i-x\right| \le h\right\}}. \tag{19.1}$$

This is a step function estimator of the regression function $m(x)$.
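As a minimal sketch (not the code behind Figure 19.1), the binned means estimator (19.1) can be computed in a few lines of NumPy. The function name `binned_means` and the simulated design, with $X$ uniform on $[0, 10]$ and a hypothetical CEF $m(x)=\sin(x)$, are illustrative assumptions.

```python
import numpy as np


def binned_means(x, X, Y, h):
    """Binned means estimate of m(x), eq. (19.1): the average of Y_i over |X_i - x| <= h."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    in_window = np.abs(X - x) <= h        # indicator 1{|X_i - x| <= h}
    if not in_window.any():
        return np.nan                     # empty window: the estimator is undefined
    return Y[in_window].sum() / in_window.sum()


# Hypothetical simulated data (not the data of Figure 19.1)
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=100)
Y = np.sin(X) + rng.normal(scale=0.5, size=100)

# The coarse grid x = 1, 3, 5, 7, 9 with h = 1 gives the five binned means
estimates = [binned_means(x0, X, Y, h=1.0) for x0 in (1, 3, 5, 7, 9)]
```

Calling `binned_means` on a fine grid of $x$ values instead of the five bin midpoints gives the rolling binned means estimator described below.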

- Nadaraya-Watson

.jpg)

- Local Linear

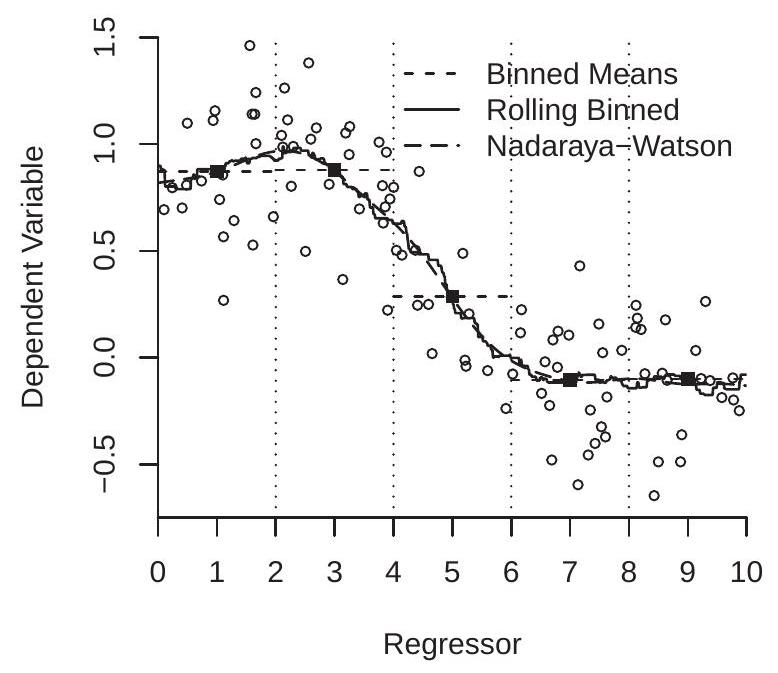

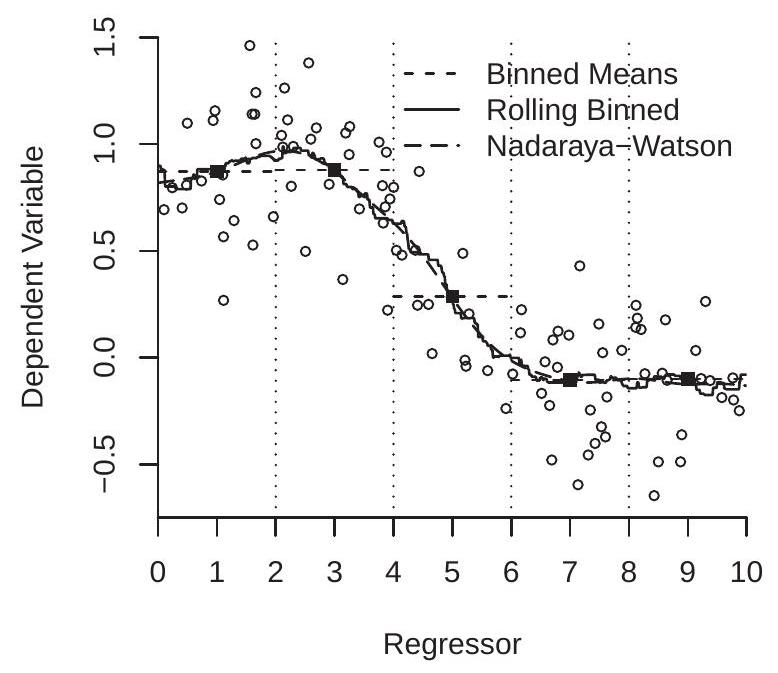

Figure 19.1: Nadaraya-Watson and Local Linear Regression

To visualize, Figure 19.1(a) displays a scatter plot of 100 random pairs $(Y_i, X_i)$ generated by simulation. The observations are displayed as the open circles. The estimator (19.1) of $m(x)$ at $x=1$ with $h=1$ is the average of the $Y_i$ for the observations such that $X_i$ falls in the interval $[0, 2]$. This estimator is $\widehat{m}(1)$ and is shown on Figure 19.1(a) by the first solid square. We repeat the calculation (19.1) for $x=3$, 5, 7, and 9, which is equivalent to partitioning the support of $X$ into the bins $[0,2]$, $[2,4]$, $[4,6]$, $[6,8]$, and $[8,10]$. These bins are shown in Figure 19.1(a) by the vertical dotted lines and the estimates by the five solid squares.

The binned estimator is the step function which is constant within each bin and equals the binned mean. In Figure 19.1(a) it is displayed by the horizontal dashed lines which pass through the solid squares. This estimate roughly tracks the central tendency of the scatter of the observations $(Y_i, X_i)$. However, the huge jumps at the edges of the partitions are disconcerting, counter-intuitive, and clearly an artifact of the discrete binning.

If we take another look at the estimation formula (19.1) there is no reason why we need to evaluate (19.1) only on a coarse grid. We can evaluate $\widehat{m}(x)$ for any set of values of $x$. In particular, we can evaluate (19.1) on a fine grid of values of $x$ and thereby obtain a smoother estimate of the CEF. This estimator is displayed in Figure 19.1(a) with the solid line. We call this estimator “Rolling Binned Means”. This is a generalization of the binned estimator and by construction passes through the solid squares. It turns out that this is a special case of the Nadaraya-Watson estimator considered in the next section. This estimator, while less abrupt than the Binned Means estimator, is still quite jagged.

Kernel Regression

One deficiency with the estimator (19.1) is that it is a step function in $x$ even when evaluated on a fine grid. That is why its plot in Figure 19.1 is jagged. The source of the discontinuity is that the weights are discontinuous indicator functions. If instead the weights are continuous functions then $\widehat{m}(x)$ will also be continuous in $x$. Appropriate weight functions are called kernel functions.

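Definition 19.1 A (second-order) kernel function $K(u)$ satisfies

1. $0 \le K(u) \le \overline{K}<\infty$,
2. $K(u)=K(-u)$,
3. $\int_{-\infty}^{\infty} K(u)\, du=1$,
4. $\int_{-\infty}^{\infty} |u|^{r} K(u)\, du<\infty$ for all positive integers $r$.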

Essentially, a kernel function is a bounded probability density function which is symmetric about zero. Condition 4 of Definition 19.1 is not essential for most results but is a convenient simplification and does not exclude any kernel function used in standard empirical practice. Some of the mathematical expressions are simplified if we restrict attention to kernels whose variance is normalized to unity.

Definition 19.2 A normalized kernel function satisfies $\int_{-\infty}^{\infty} u^{2} K(u)\, du=1$.

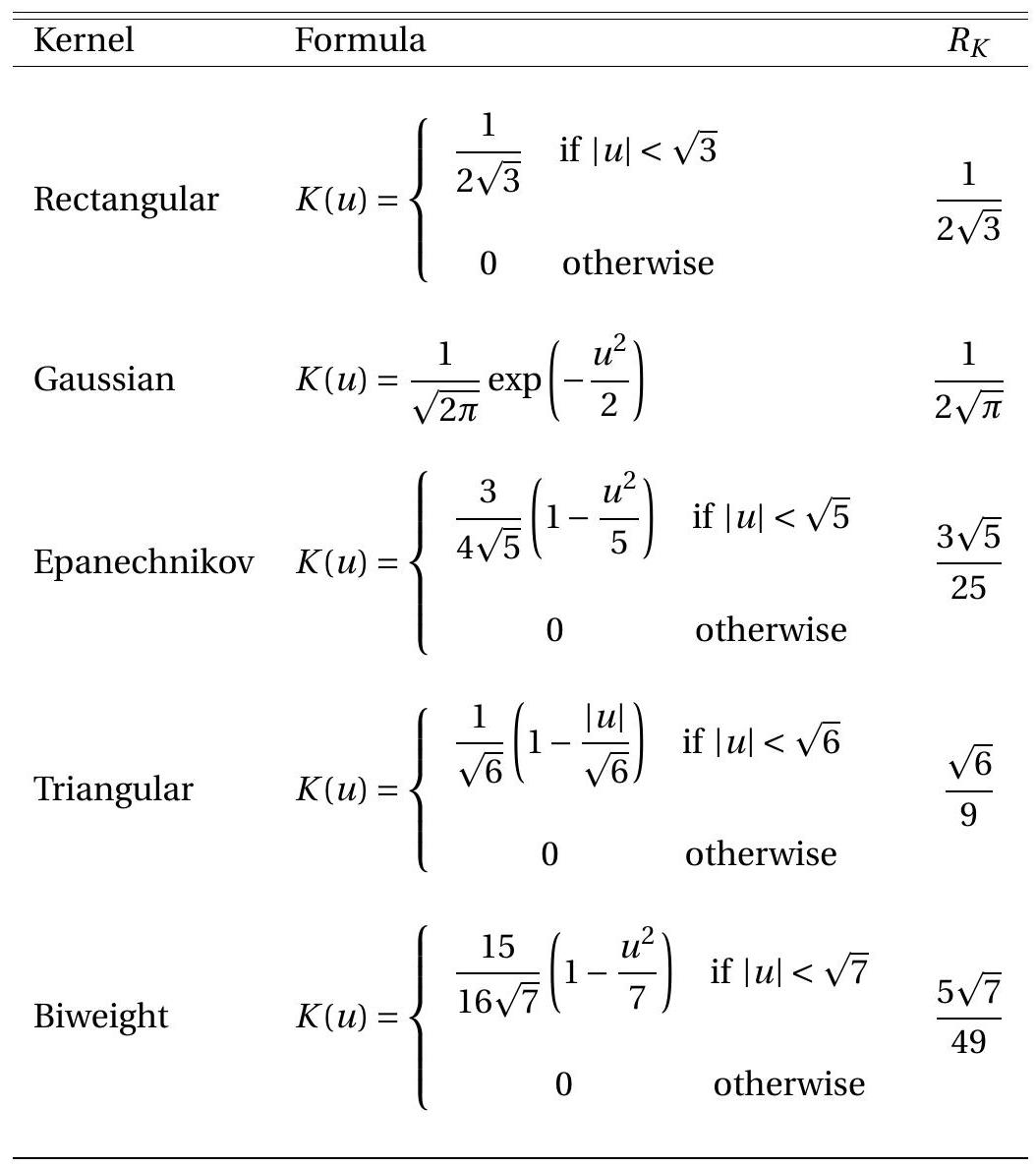

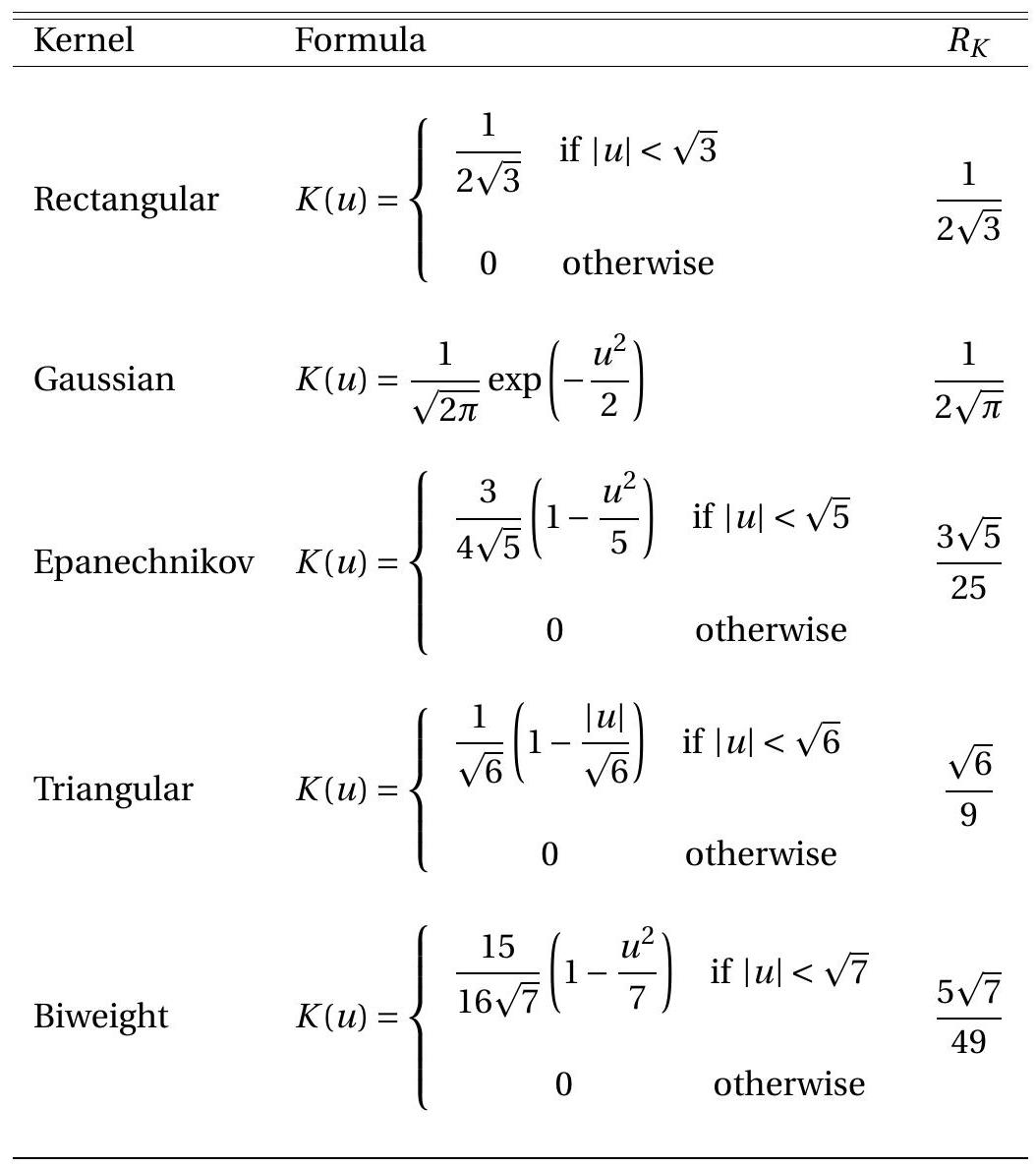

There are a large number of functions which satisfy Definition 19.1, and many are programmed as options in statistical packages. We list the most important in Table 19.1: the Rectangular, Gaussian, Epanechnikov, Triangular, and Biweight kernels. In practice it is unnecessary to consider kernels beyond these five. For nonparametric regression we recommend either the Gaussian or Epanechnikov kernel, and either will give similar results. In Table 19.1 we express the kernels in normalized form.
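The five kernels can be written down directly. The sketch below assumes the standard unit-variance forms (supports $\pm\sqrt{3}$, $\pm\sqrt{5}$, $\pm\sqrt{6}$, $\pm\sqrt{7}$ for the Rectangular, Epanechnikov, Triangular, and Biweight kernels) and checks numerically that each integrates to one, has unit variance, and that the Epanechnikov kernel has the smallest roughness $R_K=\int K(u)^2\,du$ (the quantity defined in Theorem 19.2). The names `KERNELS` and `kernel_moments` are hypothetical.

```python
import numpy as np

SQ3, SQ5, SQ6, SQ7 = np.sqrt([3.0, 5.0, 6.0, 7.0])

# Unit-variance (normalized) forms of the five kernels
KERNELS = {
    "rectangular": lambda u: np.where(np.abs(u) <= SQ3, 1.0 / (2.0 * SQ3), 0.0),
    "gaussian": lambda u: np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi),
    "epanechnikov": lambda u: np.where(np.abs(u) <= SQ5, 3.0 / (4.0 * SQ5) * (1.0 - u**2 / 5.0), 0.0),
    "triangular": lambda u: np.where(np.abs(u) <= SQ6, (1.0 - np.abs(u) / SQ6) / SQ6, 0.0),
    "biweight": lambda u: np.where(np.abs(u) <= SQ7, 15.0 / (16.0 * SQ7) * (1.0 - u**2 / 7.0) ** 2, 0.0),
}


def kernel_moments(K, half_width=10.0, step=1e-4):
    """Riemann sums for (int K, int u^2 K, R_K = int K^2) over [-half_width, half_width]."""
    u = np.arange(-half_width, half_width + step, step)
    k = K(u)
    return k.sum() * step, (u**2 * k).sum() * step, (k**2).sum() * step


moments = {name: kernel_moments(K) for name, K in KERNELS.items()}
```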

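For more discussion on kernel functions see Chapter 17 of Probability and Statistics for Economists. A generalization of (19.1) is obtained by replacing the indicator function with a kernel function:

$$\widehat{m}_{\mathrm{nw}}(x)=\frac{\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right) Y_i}{\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right)}. \tag{19.2}$$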

The estimator (19.2) is known as the Nadaraya-Watson estimator, the kernel regression estimator, or the local constant estimator, and was introduced independently by Nadaraya (1964) and Watson (1964).

The rolling binned means estimator (19.1) is the Nadaraya-Watson estimator with the rectangular kernel. The Nadaraya-Watson estimator (19.2) can be used with any standard kernel and is typically estimated using the Gaussian or Epanechnikov kernel. In general we recommend the Gaussian kernel because it produces an estimator which possesses derivatives of all orders.
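A vectorized sketch of the Nadaraya-Watson estimator (19.2) follows. The function and kernel names are hypothetical and the data are simulated. The rectangular kernel is written as the unnormalized indicator $\mathbb{1}\{|u|\le 1\}$ because the kernel's scale constant cancels in the ratio; with this kernel and bandwidth $h$ the estimator coincides with the rolling binned means estimator (19.1).

```python
import numpy as np


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


def rectangular_kernel(u):
    # Indicator 1{|u| <= 1}; its scale constant cancels in the ratio (19.2)
    return (np.abs(u) <= 1.0).astype(float)


def nadaraya_watson(x, X, Y, h, kernel=gaussian_kernel):
    """Nadaraya-Watson estimate (19.2) at each evaluation point in x."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    W = kernel((X[None, :] - x[:, None]) / h)   # W[j, i] = K((X_i - x_j) / h)
    return (W @ Y) / W.sum(axis=1)


# Hypothetical simulated data, evaluated on a fine grid
rng = np.random.default_rng(1)
X = rng.uniform(0, 10, size=100)
Y = np.sin(X) + rng.normal(scale=0.5, size=100)
grid = np.linspace(0, 10, 201)
m_rolling = nadaraya_watson(grid, X, Y, h=1.0, kernel=rectangular_kernel)  # rolling binned means
m_gauss = nadaraya_watson(grid, X, Y, h=1.0 / np.sqrt(3.0))               # Gaussian, h = 1/sqrt(3)

# Extreme cases: a tiny h reproduces Y_i at the data points, a huge h gives the sample mean
Xs, Ys = np.array([0.0, 1.0, 3.0]), np.array([2.0, 4.0, 100.0])
near_interp = nadaraya_watson(Xs, Xs, Ys, h=1e-3)
near_mean = nadaraya_watson(Xs, Xs, Ys, h=1e6)
```

The last two lines preview the two extreme bandwidth cases discussed next.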

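The bandwidth $h$ plays a similar role in kernel regression as in kernel density estimation. Namely, larger values of $h$ will result in estimates $\widehat{m}_{\mathrm{nw}}(x)$ which are smoother in $x$, and smaller values of $h$ will result in estimates which are more erratic. It might be helpful to consider the two extreme cases $h \rightarrow 0$ and $h \rightarrow \infty$. As $h \rightarrow 0$ we can see that $\widehat{m}_{\mathrm{nw}}(X_i) \rightarrow Y_i$ (if the values of $X_i$ are unique), so that $\widehat{m}_{\mathrm{nw}}(x)$ is simply the scatter of $Y_i$ on $X_i$. In contrast, as $h \rightarrow \infty$ then $\widehat{m}_{\mathrm{nw}}(x) \rightarrow \overline{Y}$, the sample mean. For intermediate values of $h$, $\widehat{m}_{\mathrm{nw}}(x)$ will smooth between these two extreme cases.

Table 19.1: Common Normalized Second-Order Kernels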

The estimator (19.2) using the Gaussian kernel and $h=1/\sqrt{3}$ is also displayed in Figure 19.1(a) with the long dashes. As you can see, this estimator appears to be much smoother than the binned estimator but tracks exactly the same path. The bandwidth $h=1/\sqrt{3}$ for the Gaussian kernel is equivalent to the bandwidth $h=1$ for the binned estimator because the latter is a kernel estimator using the rectangular kernel scaled to have a standard deviation of $1/\sqrt{3}$.

Local Linear Estimator

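The Nadaraya-Watson (NW) estimator is often called a local constant estimator as it locally (about $x$) approximates $m(x)$ as a constant function. One way to see this is to observe that $\widehat{m}_{\mathrm{nw}}(x)$ solves the minimization problem

$$\widehat{m}_{\mathrm{nw}}(x)=\underset{m}{\operatorname{argmin}} \sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right)\left(Y_i-m\right)^{2}.$$

This is a weighted regression of $Y$ on an intercept only.

This means that the NW estimator is making the local approximation $m(X) \simeq m(x)$ for $X \simeq x$, which means it is making the approximation

$$Y=m(X)+e \simeq m(x)+e.$$

The NW estimator is a local estimator of this approximate model using weighted least squares.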

This interpretation suggests that we can construct alternative nonparametric estimators of $m(x)$ by alternative local approximations. Many such local approximations are possible. A popular choice is the Local Linear (LL) approximation. Instead of the approximation $m(X) \simeq m(x)$, LL uses the linear approximation $m(X) \simeq m(x)+m'(x)(X-x)$. Thus

$$Y=m(X)+e \simeq m(x)+m'(x)(X-x)+e.$$

The LL estimator then applies weighted least squares similarly as in NW estimation.

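One way to represent the LL estimator is as the solution to the minimization problem

$$\left\{\widehat{m}_{\mathrm{LL}}(x), \widehat{m}_{\mathrm{LL}}'(x)\right\}=\underset{\alpha, \beta}{\operatorname{argmin}} \sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right)\left(Y_i-\alpha-\beta\left(X_i-x\right)\right)^{2}.$$

Another is to write the approximating model as

$$Y \simeq Z(X, x)^{\prime} \beta(x)+e$$

where $\beta(x)=\left(m(x), m'(x)\right)^{\prime}$ and

$$Z(X, x)=\left(\begin{array}{c}1 \\ X-x\end{array}\right).$$

This is a linear regression with regressor vector $Z_i(x)=Z(X_i, x)$ and coefficient vector $\beta(x)$. Applying weighted least squares with the kernel weights we obtain the LL estimator

$$\widehat{\beta}_{\mathrm{LL}}(x)=\left(\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right) Z_i(x) Z_i(x)^{\prime}\right)^{-1} \sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right) Z_i(x) Y_i=\left(\boldsymbol{Z}^{\prime} \boldsymbol{K} \boldsymbol{Z}\right)^{-1} \boldsymbol{Z}^{\prime} \boldsymbol{K} \boldsymbol{Y}$$

where $\boldsymbol{K}=\operatorname{diag}\left\{K\left((X_1-x)/h\right), \ldots, K\left((X_n-x)/h\right)\right\}$, $\boldsymbol{Z}$ is the stacked $Z_i(x)^{\prime}$, and $\boldsymbol{Y}$ is the stacked $Y_i$. This expression generalizes the Nadaraya-Watson estimator as the latter is obtained by setting $Z_i(x)=1$ or constraining the slope $\beta=0$. Notice that the matrices $\boldsymbol{Z}$ and $\boldsymbol{K}$ depend on $x$ and $h$.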
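The weighted least squares formula $(\boldsymbol{Z}'\boldsymbol{K}\boldsymbol{Z})^{-1}\boldsymbol{Z}'\boldsymbol{K}\boldsymbol{Y}$ translates directly into code. The sketch below (hypothetical function name `local_linear`, Gaussian kernel assumed, simulated data) returns both the level and slope estimates at a single point. It scales the columns of $\boldsymbol{Z}'$ by the kernel weights rather than forming the $n \times n$ diagonal matrix $\boldsymbol{K}$.

```python
import numpy as np


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


def local_linear(x, X, Y, h, kernel=gaussian_kernel):
    """Local linear estimates (m_hat(x), m_hat'(x)) = (Z'KZ)^{-1} Z'KY at a single point x."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    k = kernel((X - x) / h)                          # diagonal of the weight matrix K
    Z = np.column_stack([np.ones_like(X), X - x])    # rows Z_i(x)' = (1, X_i - x)
    ZK = Z.T * k                                     # Z'K: column i of Z' scaled by k_i
    beta = np.linalg.solve(ZK @ Z, ZK @ Y)
    return beta[0], beta[1]


# Hypothetical example: a noise-free linear CEF is recovered exactly
rng = np.random.default_rng(2)
X = rng.uniform(0, 10, size=50)
level, slope = local_linear(4.0, X, 1.5 + 0.7 * X, h=0.8)
```

Because the local fit is linear, any exactly linear CEF is reproduced without bias; with a very large $h$ the weights become equal and the fit reduces to full-sample OLS.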

The local linear estimator was first suggested by Stone (1977) and came into prominence through the work of Fan (1992, 1993).

To visualize, Figure 19.1(b) displays the scatter plot of the same 100 observations from panel (a) divided into the same five bins. A linear regression is fit to the observations in each bin. These five fitted regression lines are displayed by the short dashed lines. This “binned regression estimator” produces a flexible approximation for the CEF but has large jumps at the edges of the partitions. The midpoints of each of these five regression lines are displayed by the solid squares and could be viewed as the target estimate for the binned regression estimator. A rolling version of the binned regression estimator moves these estimation windows continuously across the support of $X$ and is displayed by the solid line. This corresponds to the local linear estimator with a rectangular kernel and a bandwidth of $h=1$. By construction this line passes through the solid squares. To obtain a smoother estimator we replace the rectangular with the Gaussian kernel (using the same bandwidth $h=1/\sqrt{3}$ as in panel (a)). We display these estimates with the long dashes. This has the same shape as the rectangular kernel estimate (rolling binned regression) but is visually much smoother. We label this the “Local Linear” estimator because it is the standard implementation. One interesting feature is that as $h \rightarrow \infty$ the LL estimator approaches the full-sample least squares estimator $\widehat{m}_{\mathrm{LL}}(x) \rightarrow \widehat{\alpha}+\widehat{\beta} x$. That is because as $h \rightarrow \infty$ all observations receive equal weight. In this sense the LL estimator is a flexible generalization of the linear OLS estimator.

Another useful property of the LL estimator is that it simultaneously provides estimates of the regression function $m(x)$ and its slope $m'(x)$ at $x$.

Local Polynomial Estimator

The NW and LL estimators are both special cases of the local polynomial estimator. The idea is to approximate the regression function $m(x)$ by a polynomial of fixed degree $p$, and then estimate locally using kernel weights.

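The approximating model is a $p^{\text{th}}$ order Taylor series approximation

$$Y \simeq m(x)+m'(x)(X-x)+\cdots+m^{(p)}(x)\frac{(X-x)^{p}}{p!}+e=Z(X, x)^{\prime} \beta(x)+e$$

where

$$Z(X, x)=\left(\begin{array}{c}1 \\ X-x \\ \vdots \\ (X-x)^{p}\end{array}\right), \qquad \beta(x)=\left(\begin{array}{c}m(x) \\ m'(x) \\ \vdots \\ \frac{1}{p!} m^{(p)}(x)\end{array}\right).$$

The estimator is

$$\widehat{\beta}_{\mathrm{LP}}(x)=\left(\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right) Z_i(x) Z_i(x)^{\prime}\right)^{-1} \sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right) Z_i(x) Y_i=\left(\boldsymbol{Z}^{\prime} \boldsymbol{K} \boldsymbol{Z}\right)^{-1} \boldsymbol{Z}^{\prime} \boldsymbol{K} \boldsymbol{Y}$$

where $Z_i(x)=Z(X_i, x)$. Notice that this expression includes the Nadaraya-Watson and local linear estimators as special cases with $p=0$ and $p=1$, respectively.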
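Moving from the local linear formula to order $p$ only changes the regressor vector. In this sketch (hypothetical name `local_polynomial`, Gaussian kernel assumed) the columns $1, (X-x), \ldots, (X-x)^p$ are built with `numpy.vander`, and the coefficient on $(X-x)^j$ is multiplied by $j!$ to turn it into an estimate of $m^{(j)}(x)$.

```python
import numpy as np
from math import factorial


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


def local_polynomial(x, X, Y, h, p, kernel=gaussian_kernel):
    """Order-p local polynomial fit at x; returns estimates of (m(x), m'(x), ..., m^(p)(x))."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    k = kernel((X - x) / h)
    Z = np.vander(X - x, N=p + 1, increasing=True)   # columns 1, (X - x), ..., (X - x)^p
    ZK = Z.T * k
    beta = np.linalg.solve(ZK @ Z, ZK @ Y)            # coefficients m^(j)(x) / j!
    return beta * np.array([factorial(j) for j in range(p + 1)], dtype=float)


# Hypothetical example: p = 3 on an exact cubic recovers the level and three derivatives
rng = np.random.default_rng(3)
X = rng.uniform(-2, 2, size=200)
est = local_polynomial(1.0, X, 1 - 2 * X + 0.5 * X**3, h=0.7, p=3)
```

With `p=0` the function returns the Nadaraya-Watson estimate, and with `p=1` the local linear level and slope.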

There is a trade-off between the polynomial order $p$ and the local smoothing bandwidth $h$. By increasing $p$ we improve the model approximation and thereby can use a larger bandwidth $h$. On the other hand, increasing $p$ increases estimation variance.

Asymptotic Bias

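Since $\mathbb{E}[Y \mid X=x]=m(x)$, the conditional expectation of the Nadaraya-Watson estimator is

$$\mathbb{E}\left[\widehat{m}_{\mathrm{nw}}(x) \mid \boldsymbol{X}\right]=\frac{\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right) m\left(X_i\right)}{\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right)}. \tag{19.3}$$

We can simplify this expression as $n \rightarrow \infty$.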

The following regularity conditions will be maintained throughout the chapter. Let $f(x)$ denote the marginal density of $X$ and let $\sigma^{2}(x)=\mathbb{E}\left[e^{2} \mid X=x\right]$ denote the conditional variance of $e=Y-m(X)$.

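Assumption 19.1

1. $h \rightarrow 0$.
2. $n h \rightarrow \infty$.
3. $m(x)$, $f(x)$, and $\sigma^{2}(x)$ are continuous in some neighborhood $\mathcal{N}$ of $x$.
4. $f(x)>0$.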

These conditions are similar to those used for the asymptotic theory for kernel density estimation. The assumptions $h \rightarrow 0$ and $n h \rightarrow \infty$ mean that the bandwidth gets small yet the number of observations in the estimation window diverges to infinity. Assumption 19.1.3 imposes minimal smoothness conditions on the conditional expectation $m(x)$, marginal density $f(x)$, and conditional variance $\sigma^{2}(x)$. Assumption 19.1.4 specifies that the marginal density is non-zero. This is required because we are estimating the conditional expectation at $x$, so there needs to be a non-trivial number of observations for $X_i$ near $x$.

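Theorem 19.1 Suppose Assumption 19.1 holds and $m''(x)$ and $f'(x)$ are continuous in $\mathcal{N}$. Then as $n \rightarrow \infty$ with $h \rightarrow 0$

1. $\mathbb{E}\left[\widehat{m}_{\mathrm{nw}}(x) \mid \boldsymbol{X}\right]=m(x)+h^{2} B_{\mathrm{nw}}(x)+o_p\left(h^{2}\right)+O_p\left(\sqrt{h/n}\right)$

   where $B_{\mathrm{nw}}(x)=\frac{1}{2} m''(x)+f(x)^{-1} f'(x) m'(x)$.

2. $\mathbb{E}\left[\widehat{m}_{\mathrm{LL}}(x) \mid \boldsymbol{X}\right]=m(x)+h^{2} B_{\mathrm{LL}}(x)+o_p\left(h^{2}\right)+O_p\left(\sqrt{h/n}\right)$

   where $B_{\mathrm{LL}}(x)=\frac{1}{2} m''(x)$.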

The proof for the Nadaraya-Watson estimator is presented in Section 19.26. For a proof for the local linear estimator see Fan and Gijbels (1996).

We call the terms $h^{2} B_{\mathrm{nw}}(x)$ and $h^{2} B_{\mathrm{LL}}(x)$ the asymptotic bias of the estimators.

Theorem 19.1 shows that the asymptotic bias of the Nadaraya-Watson and local linear estimators is proportional to the squared bandwidth $h^{2}$ (the degree of smoothing) and to the functions $B_{\mathrm{nw}}(x)$ and $B_{\mathrm{LL}}(x)$. The asymptotic bias of the local linear estimator depends on the curvature (second derivative) of the CEF function $m(x)$ similarly to the asymptotic bias of the kernel density estimator (Chapter 17 of Probability and Statistics for Economists). When $m''(x)<0$ then $\widehat{m}_{\mathrm{LL}}(x)$ is downwards biased. When $m''(x)>0$ then $\widehat{m}_{\mathrm{LL}}(x)$ is upwards biased. Local averaging smooths $m(x)$, inducing bias, and this bias is increasing in the level of curvature of $m(x)$. This is called smoothing bias.

The asymptotic bias of the Nadaraya-Watson estimator adds a second term which depends on the first derivatives of $m(x)$ and $f(x)$. This is because the Nadaraya-Watson estimator is a local average. If the density is upward sloped at $x$ (if $f'(x)>0$) then there are (on average) more observations to the right of $x$ than to the left so a local average will be biased if $m(x)$ has a non-zero slope. In contrast the bias of the local linear estimator does not depend on the local slope $m'(x)$ because it locally fits a linear regression. The fact that the bias of the local linear estimator has fewer terms than the bias of the Nadaraya-Watson estimator (and is invariant to the slope $m'(x)$) justifies the claim that the local linear estimator has generically reduced bias relative to Nadaraya-Watson.

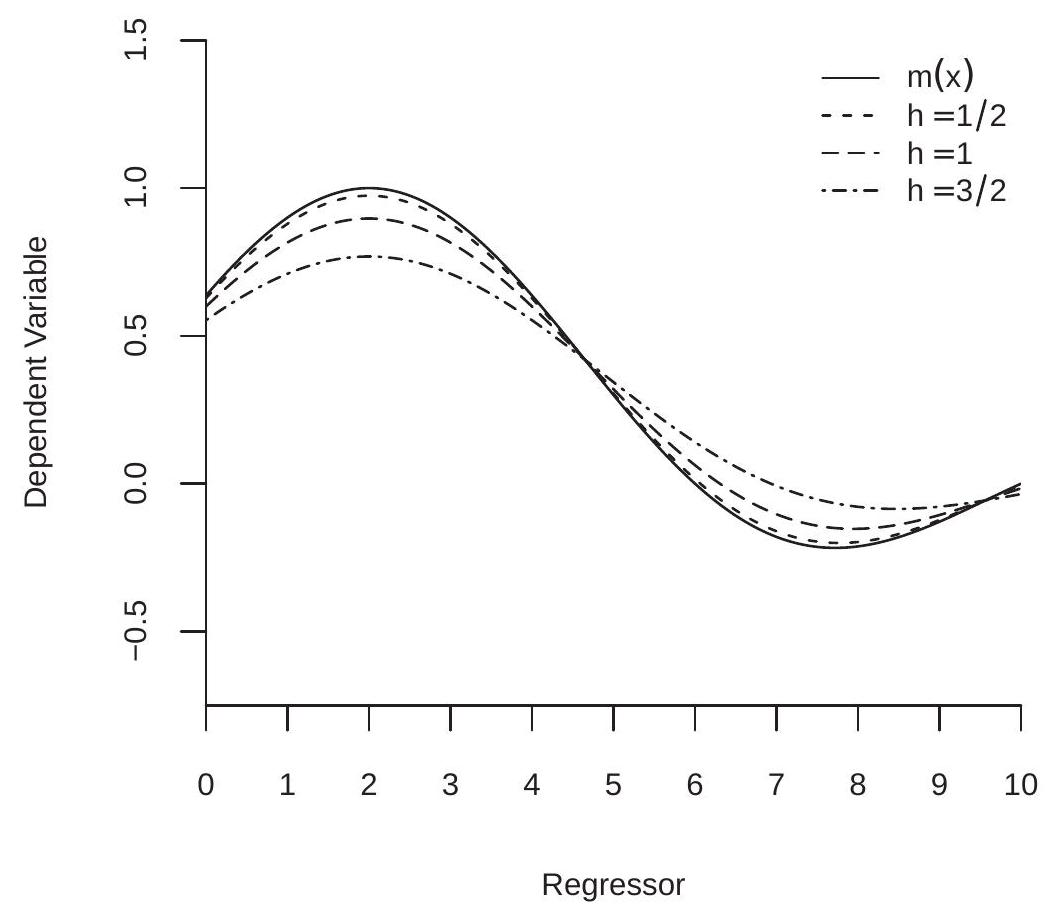

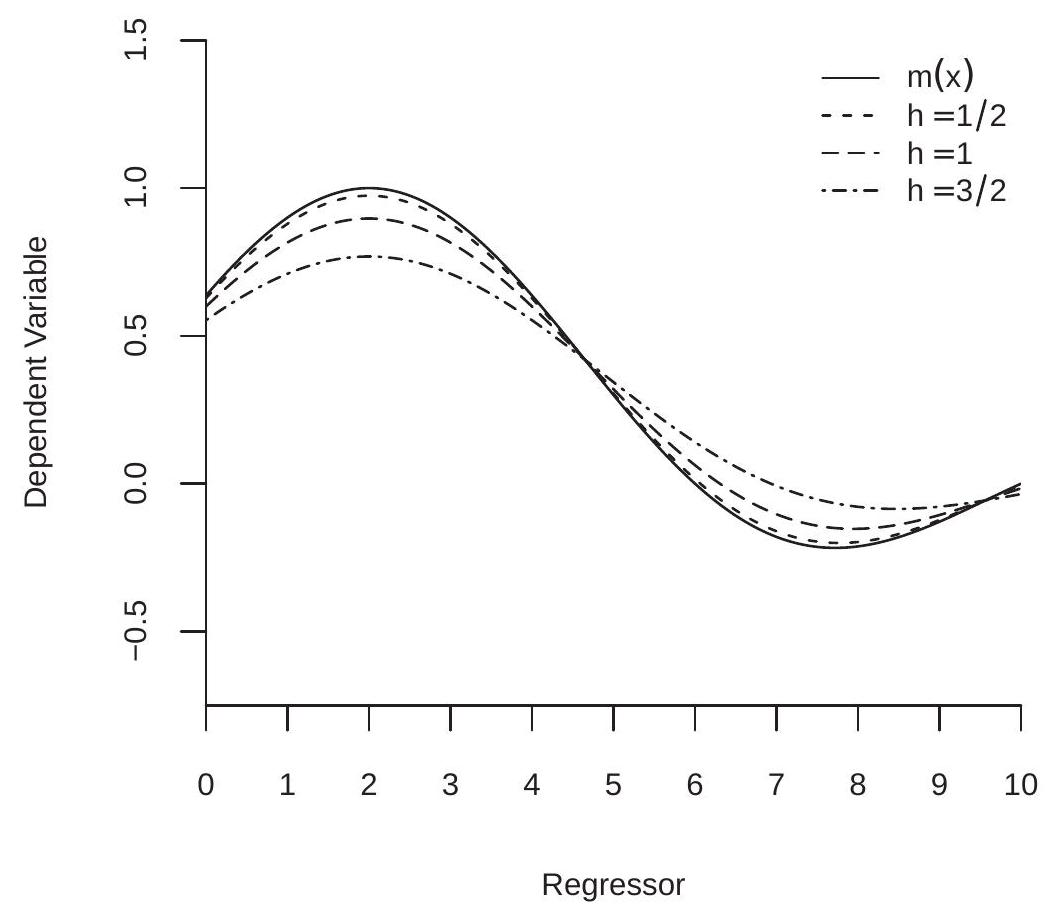

We illustrate asymptotic smoothing bias in Figure 19.2. The solid line is the true CEF for the data displayed in Figure 19.1. The dashed lines are the asymptotic approximations to the expectation $m(x)+h^{2} B(x)$ for three different bandwidths $h$. (The asymptotic biases of the NW and LL estimators are the same because $X$ has a uniform distribution, so $f'(x)=0$.) You can see that there is minimal bias for the smallest bandwidth but considerable bias for the largest. The dashed lines are smoothed versions of the CEF, attenuating the peaks and valleys.

Smoothing bias is a natural by-product of nonparametric estimation of nonlinear functions. It can only be reduced by using a small bandwidth. As we see in the following section this will result in high estimation variance.

Figure 19.2: Smoothing Bias

Asymptotic Variance

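From (19.3) we deduce that

$$\widehat{m}_{\mathrm{nw}}(x)-\mathbb{E}\left[\widehat{m}_{\mathrm{nw}}(x) \mid \boldsymbol{X}\right]=\frac{\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right) e_i}{\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right)}.$$

Since the denominator is a function only of $X_i$ and the numerator is linear in $e_i$ we can calculate that the finite sample variance of $\widehat{m}_{\mathrm{nw}}(x)$ is

$$\operatorname{var}\left[\widehat{m}_{\mathrm{nw}}(x) \mid \boldsymbol{X}\right]=\frac{\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right)^{2} \sigma^{2}\left(X_i\right)}{\left(\sum_{i=1}^{n} K\left(\frac{X_i-x}{h}\right)\right)^{2}}.$$

We can simplify this expression as $n \rightarrow \infty$. Let $\sigma^{2}(x)=\mathbb{E}\left[e^{2} \mid X=x\right]$ denote the conditional variance of $e=Y-m(X)$.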
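The finite-sample variance formula is easy to evaluate for given $X_i$ and a known variance function. The sketch below (hypothetical function name; simulated design with $X \sim U[0,10]$, so $f(x)=0.1$, and $\sigma^2(x)=1$) compares it at an interior point with the leading term $R_K\sigma^2(x)/(f(x)nh)$ stated in Theorem 19.2 for the Gaussian kernel.

```python
import numpy as np


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


def nw_conditional_variance(x, X, h, sigma2, kernel=gaussian_kernel):
    """Finite-sample var[m_nw(x) | X] = sum K_i^2 sigma^2(X_i) / (sum K_i)^2."""
    X = np.asarray(X, dtype=float)
    k = kernel((X - x) / h)                 # K_i = K((X_i - x) / h)
    return (k**2 * sigma2(X)).sum() / k.sum() ** 2


# Hypothetical design: X ~ U[0, 10] (f(x) = 0.1), homoskedastic sigma^2(x) = 1
rng = np.random.default_rng(0)
n, h, x0 = 20000, 0.5, 5.0
X = rng.uniform(0, 10, size=n)
exact = nw_conditional_variance(x0, X, h, lambda z: np.ones_like(z))
R_K = 1.0 / (2.0 * np.sqrt(np.pi))          # roughness of the Gaussian kernel
leading = R_K * 1.0 / (0.1 * n * h)         # R_K sigma^2(x) / (f(x) n h)
```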

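Theorem 19.2 Under Assumption 19.1,

1. $\operatorname{var}\left[\widehat{m}_{\mathrm{nw}}(x) \mid \boldsymbol{X}\right]=\frac{R_K \sigma^{2}(x)}{f(x) n h}+o_p\left(\frac{1}{n h}\right)$.
2. $\operatorname{var}\left[\widehat{m}_{\mathrm{LL}}(x) \mid \boldsymbol{X}\right]=\frac{R_K \sigma^{2}(x)}{f(x) n h}+o_p\left(\frac{1}{n h}\right)$.

In these expressions

$$R_K=\int_{-\infty}^{\infty} K(u)^{2}\, du$$

is the roughness of the kernel $K(u)$.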

The proof for the Nadaraya-Watson estimator is presented in Section 19.26. For the local linear estimator see Fan and Gijbels (1996).

We call the leading terms in Theorem 19.2 the asymptotic variance of the estimators. Theorem 19.2 shows that the asymptotic variances of the two estimators are identical. The asymptotic variance is proportional to the roughness $R_K$ of the kernel $K(u)$ and to the conditional variance $\sigma^{2}(x)$ of the regression error. It is inversely proportional to the effective number of observations $n h$ and to the marginal density $f(x)$. This expression reflects the fact that the estimators are local estimators. The precision of $\widehat{m}(x)$ is low for regions where $e$ has a large conditional variance and/or $X$ has a low density (where there are relatively few observations).

AIMSE

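We define the asymptotic MSE (AMSE) of an estimator $\widehat{m}(x)$ as the sum of its squared asymptotic bias and asymptotic variance. Using Theorems 19.1 and 19.2 for the Nadaraya-Watson and local linear estimators, we obtain

$$\operatorname{AMSE}(x) \stackrel{\text{def}}{=} h^{4} B(x)^{2}+\frac{R_K \sigma^{2}(x)}{n h f(x)}$$

where $B(x)=B_{\mathrm{nw}}(x)$ for the Nadaraya-Watson estimator and $B(x)=B_{\mathrm{LL}}(x)$ for the local linear estimator. This is the asymptotic MSE for the estimator $\widehat{m}(x)$ for a single point $x$.

A global measure of fit can be obtained by integrating $\operatorname{AMSE}(x)$. It is standard to weight the AMSE by $f(x) w(x)$ for some integrable weight function $w(x)$. This is called the asymptotic integrated MSE (AIMSE). Let $S$ be the support of $X$ (the region where $f(x)>0$).

$$\operatorname{AIMSE} \stackrel{\text{def}}{=} \int_S \operatorname{AMSE}(x) f(x) w(x)\, dx=\int_S\left(h^{4} B(x)^{2}+\frac{R_K \sigma^{2}(x)}{n h f(x)}\right) f(x) w(x)\, dx=h^{4} \bar{B}+\frac{R_K}{n h} \bar{\sigma}^{2} \tag{19.5}$$

where

$$\bar{B}=\int_S B(x)^{2} f(x) w(x)\, dx, \qquad \bar{\sigma}^{2}=\int_S \sigma^{2}(x) w(x)\, dx.$$

The weight function $w(x)$ can be omitted if $S$ is bounded. Otherwise, a common choice is $w(x)=\mathbb{1}\left\{\xi_1 \le x \le \xi_2\right\}$. An integrable weight function is needed when $X$ has unbounded support to ensure that $\bar{\sigma}^{2}<\infty$.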

The form of the AIMSE is similar to that for kernel density estimation (Chapter 17 of Probability and Statistics for Economists). It has two terms (squared bias and variance). The first is increasing in the bandwidth $h$ and the second is decreasing in $h$. Thus the choice of $h$ affects AIMSE with a trade-off between these two components. Similarly to density estimation we can calculate the bandwidth which minimizes the AIMSE. (See Exercise 19.2.) The solution is given in the following theorem.

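Theorem 19.3 The bandwidth which minimizes the AIMSE (19.5) is

$$h_0=\left(\frac{R_K \bar{\sigma}^{2}}{4 \bar{B}}\right)^{1/5} n^{-1/5}. \tag{19.6}$$

With $h \sim n^{-1/5}$ then $\operatorname{AIMSE}=O\left(n^{-4/5}\right)$.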

This result characterizes the AIMSE-optimal bandwidth. This bandwidth satisfies the rate $h=c n^{-1/5}$ which is the same rate as for kernel density estimation. The optimal constant $c$ depends on the kernel $K(u)$, the weighted average squared bias $\bar{B}$, and the weighted average variance $\bar{\sigma}^{2}$. The constant $c$ is different, however, from that for density estimation.
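Theorem 19.3 can be checked numerically. The sketch below uses hypothetical values $\bar B=0.05$, $\bar\sigma^2=2.5$, $n=500$ and the Gaussian roughness $R_K=1/(2\sqrt{\pi})$; it evaluates the AIMSE (19.5) on a fine grid of bandwidths and compares the grid minimizer with the closed form (19.6). The function names are illustrative.

```python
import numpy as np


def aimse(h, n, R_K, B_bar, sigma2_bar):
    """AIMSE (19.5): squared-bias term h^4 B_bar plus variance term R_K sigma2_bar / (n h)."""
    return h**4 * B_bar + R_K * sigma2_bar / (n * h)


def optimal_bandwidth(n, R_K, B_bar, sigma2_bar):
    """Closed-form minimizer (19.6): (R_K sigma2_bar / (4 B_bar))^(1/5) n^(-1/5)."""
    return (R_K * sigma2_bar / (4.0 * B_bar)) ** 0.2 * n ** -0.2


# Hypothetical inputs
n, B_bar, sigma2_bar = 500, 0.05, 2.5
R_gauss = 1.0 / (2.0 * np.sqrt(np.pi))
h0 = optimal_bandwidth(n, R_gauss, B_bar, sigma2_bar)

# Brute-force check on a fine grid of bandwidths
grid = np.linspace(0.05, 3.0, 20001)
h_grid = grid[np.argmin(aimse(grid, n, R_gauss, B_bar, sigma2_bar))]
```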

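Inserting (19.6) into (19.5) plus some algebra we find that the AIMSE using the optimal bandwidth is

$$\operatorname{AIMSE}_0 \simeq 1.65\left(R_K^{4} \bar{B} \bar{\sigma}^{8}\right)^{1/5} n^{-4/5}.$$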
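For reference, the algebra behind the constant 1.65 is short: substitute $h_0$ from (19.6) into each of the two terms of (19.5) and collect powers.

$$
\begin{aligned}
h_0^{5}&=\frac{R_K\bar{\sigma}^{2}}{4\bar{B}\,n},\\
h_0^{4}\bar{B}&=\bar{B}^{1/5}\left(\frac{R_K\bar{\sigma}^{2}}{4n}\right)^{4/5}=4^{-4/5}\,\bar{B}^{1/5}\left(\frac{R_K\bar{\sigma}^{2}}{n}\right)^{4/5},\\
\frac{R_K\bar{\sigma}^{2}}{n h_0}&=4^{1/5}\,\bar{B}^{1/5}\left(\frac{R_K\bar{\sigma}^{2}}{n}\right)^{4/5},\\
\operatorname{AIMSE}_0&=\left(4^{-4/5}+4^{1/5}\right)\bar{B}^{1/5}\left(\frac{R_K\bar{\sigma}^{2}}{n}\right)^{4/5}=5\cdot 4^{-4/5}\left(R_K^{4}\bar{B}\,\bar{\sigma}^{8}\right)^{1/5}n^{-4/5}\approx 1.65\left(R_K^{4}\bar{B}\,\bar{\sigma}^{8}\right)^{1/5}n^{-4/5}.
\end{aligned}
$$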

This depends on the kernel $K(u)$ only through the constant $R_K$. Since the Epanechnikov kernel has the smallest value of $R_K$ among normalized kernels (see Chapter 17 of Probability and Statistics for Economists), it is also the kernel which produces the smallest AIMSE. This is true for both the NW and LL estimators.

Theorem 19.4 The AIMSE (19.5) of the Nadaraya-Watson and Local Linear regression estimators is minimized by the Epanechnikov kernel.

The efficiency loss by using the other standard kernels, however, is small. The relative efficiency (measured by root AIMSE) of estimation using another kernel is $\left(R_K / R_K(\text{Epanechnikov})\right)^{2/5}$. Using the values of $R_K$ from Table 19.1 we calculate that the efficiency losses from using the Triangle, Gaussian, and Rectangular kernels are 1%, 2%, and 3%, respectively, which are minimal. Since the Gaussian kernel produces the smoothest estimates, which is important for estimation of marginal effects, our overall recommendation is the Gaussian kernel.
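The efficiency figures can be reproduced from closed-form roughness values. The sketch below assumes the unit-variance kernel forms; the $R_K$ expressions are our own calculation (for example $R_K=3\sqrt{5}/25$ for the Epanechnikov kernel), and the loss is the percentage increase in root AIMSE, $100\left((R_K/R_K(\text{Epanechnikov}))^{2/5}-1\right)$.

```python
import math

# Closed-form roughness R_K of the unit-variance kernels (our own calculation)
ROUGHNESS = {
    "epanechnikov": 3 * math.sqrt(5) / 25,
    "biweight": 5 * math.sqrt(7) / 49,
    "triangular": math.sqrt(6) / 9,
    "gaussian": 1 / (2 * math.sqrt(math.pi)),
    "rectangular": 1 / (2 * math.sqrt(3)),
}


def efficiency_loss(name):
    """Percent increase in root AIMSE relative to Epanechnikov: 100 * ((R_K / R_Epan)^(2/5) - 1)."""
    return 100 * ((ROUGHNESS[name] / ROUGHNESS["epanechnikov"]) ** 0.4 - 1)


losses = {name: round(efficiency_loss(name)) for name in ROUGHNESS}
```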

Reference Bandwidth

The NW, LL and LP estimators depend on a bandwidth and without an empirical rule for selection of $h$ the methods are incomplete. It is useful to have a reference bandwidth which mimics the optimal bandwidth in a simplified setting and provides a baseline for further investigations.

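Theorem 19.3 and a little re-writing reveals that the optimal bandwidth equals

$$h_0=\left(\frac{R_K}{4}\right)^{1/5}\left(\frac{\bar{\sigma}^{2}}{n \bar{B}}\right)^{1/5} \simeq 0.58\left(\frac{\bar{\sigma}^{2}}{n \bar{B}}\right)^{1/5} \tag{19.7}$$

where the approximation holds for all single-peaked kernels by similar calculations as for kernel density estimation in Chapter 17 of Probability and Statistics for Economists.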
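A quick check of the approximation in (19.7): the sketch below evaluates the kernel constant $(R_K/4)^{1/5}$ for each kernel, using closed-form roughness values for the unit-variance kernels (our own calculation).

```python
import math

# Closed-form roughness R_K of the unit-variance kernels (our own calculation)
ROUGHNESS = {
    "rectangular": 1 / (2 * math.sqrt(3)),
    "gaussian": 1 / (2 * math.sqrt(math.pi)),
    "epanechnikov": 3 * math.sqrt(5) / 25,
    "triangular": math.sqrt(6) / 9,
    "biweight": 5 * math.sqrt(7) / 49,
}

# Kernel constant (R_K / 4)^(1/5) multiplying (sigma2_bar / (n B_bar))^(1/5) in (19.7)
constants = {name: (R / 4) ** 0.2 for name, R in ROUGHNESS.items()}
```

The values run from about 0.583 (Epanechnikov) to about 0.591 (Rectangular), so the approximation barely depends on the kernel.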

A reference approach can be used to develop a rule-of-thumb for regression estimation. In particular, Fan and Gijbels (1996, Section 4.2) develop what they call the ROT (rule of thumb) bandwidth for the local linear estimator. We now describe their derivation.

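First, set $w(x)=\mathbb{1}\left\{\xi_1 \le x \le \xi_2\right\}$. Second, form a pilot or preliminary estimator of the regression function $m(x)$ using a $q^{\text{th}}$-order polynomial regression

$$m(x)=\beta_0+\beta_1 x+\beta_2 x^{2}+\cdots+\beta_q x^{q}$$

for $q \ge 2$. (Fan and Gijbels (1996) suggest $q=4$ but this is not essential.) By least squares we obtain the coefficient estimates $\widehat{\beta}_0, \ldots, \widehat{\beta}_q$ and implied second derivative $\widehat{m}''(x)=2 \widehat{\beta}_2+6 \widehat{\beta}_3 x+12 \widehat{\beta}_4 x^{2}+\cdots+q(q-1) \widehat{\beta}_q x^{q-2}$. Third, notice that $\bar{B}$ can be written as an expectation

$$\bar{B}=\mathbb{E}\left[B(X)^{2} w(X)\right]=\mathbb{E}\left[\left(\frac{1}{2} m''(X)\right)^{2} \mathbb{1}\left\{\xi_1 \le X \le \xi_2\right\}\right].$$

A moment estimator is

$$\widehat{B}=\frac{1}{n} \sum_{i=1}^{n}\left(\frac{1}{2} \widehat{m}''(X_i)\right)^{2} \mathbb{1}\left\{\xi_1 \le X_i \le \xi_2\right\}.$$

Fourth, assume that the regression error is homoskedastic so that $\mathbb{E}\left[e^{2} \mid X\right]=\sigma^{2}$, in which case $\bar{\sigma}^{2}=\sigma^{2}\left(\xi_2-\xi_1\right)$. Estimate $\sigma^{2}$ by the error variance estimate $\widehat{\sigma}^{2}$ from the preliminary regression. Plugging these into (19.7) we obtain the reference bandwidth

$$h_{\mathrm{rot}}=0.58\left(\frac{\widehat{\sigma}^{2}\left(\xi_2-\xi_1\right)}{n \widehat{B}}\right)^{1/5}. \tag{19.9}$$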


The constant $(R_K/4)^{1/5}$ in (19.7) lies between 0.58 and 0.59 for the single-peaked kernels of Table 19.1. Fan and Gijbels (1996) call (19.9) the Rule-of-Thumb (ROT) bandwidth.
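The four steps can be collected into one function. This is a sketch under stated assumptions: the name `rot_bandwidth` is hypothetical, the pilot regression is fit by least squares with NumPy's `numpy.polynomial.polynomial` routines, and the residual variance divides by $n-q-1$ (our choice; the text only asks for the error variance estimate from the preliminary regression).

```python
import numpy as np
from numpy.polynomial import polynomial as P


def rot_bandwidth(X, Y, xi1, xi2, q=4):
    """Rule-of-thumb bandwidth (19.9) from a q-th order polynomial pilot regression."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    n = X.size
    coef = P.polyfit(X, Y, q)                         # beta_hat_0, ..., beta_hat_q by least squares
    resid = Y - P.polyval(X, coef)
    sigma2_hat = resid @ resid / (n - q - 1)          # pilot error variance (assumed divisor)
    m2_hat = P.polyval(X, P.polyder(coef, 2))         # implied second derivative m''(X_i)
    in_region = (X >= xi1) & (X <= xi2)               # 1{xi1 <= X_i <= xi2}
    B_hat = np.mean((0.5 * m2_hat) ** 2 * in_region)  # moment estimator of B_bar
    return 0.58 * (sigma2_hat * (xi2 - xi1) / (n * B_hat)) ** 0.2


# Hypothetical simulated data on [0, 10]
rng = np.random.default_rng(4)
X = rng.uniform(0, 10, size=400)
Y = np.sin(X) + rng.normal(scale=0.5, size=400)
h_rot = rot_bandwidth(X, Y, 0.0, 10.0)
```

Re-running with `q=3` or `q=5` is one way to carry out the sensitivity check on the pilot order recommended below.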

Fan and Gijbels developed similar rules for higher-order odd local polynomial estimators but not for the local constant (Nadaraya-Watson) estimator. However, we can derive a ROT for the NW as well by using a reference model for the marginal density $f(x)$. A convenient choice is the uniform density under which $f'(x)=0$ and the optimal bandwidths for NW and LL coincide. This motivates using (19.9) as a ROT bandwidth for both the LL and NW estimators.

As we mentioned above, Fan and Gijbels suggest using a $4^{\text{th}}$-order polynomial for the pilot estimator but this specific choice is not essential. In applications it may be prudent to assess sensitivity of the ROT bandwidth to the choice of $q$ and to examine the estimated pilot regression for precision of the estimated higher-order polynomial terms.

We now comment on the choice of the weight region $[\xi_1, \xi_2]$. When $X$ has bounded support then $[\xi_1, \xi_2]$ can be set equal to this support. Otherwise, $[\xi_1, \xi_2]$ can be set equal to the region of interest for $\widehat{m}(x)$, or the endpoints can be set to equal fixed lower and upper quantiles of the distribution of $X$.

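To illustrate, take the data shown in Figure 19.1. We fit the polynomial pilot regression and compute its implied second derivative $\widehat{m}''(x)$. Setting $[\xi_1, \xi_2]=[0, 10]$ from the support of $X$ we compute $\widehat{B}$. The residuals from the polynomial regression give the variance estimate $\widehat{\sigma}^{2}$. Plugging these into (19.9) we find a value of $h_{\mathrm{rot}}$ which is similar to that used in Figure 19.1.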

Estimation at a Boundary

One advantage of the local linear over the Nadaraya-Watson estimator is that the LL has better performance at the boundary of the support of $X$. The NW estimator has excessive smoothing bias near the boundaries. In many contexts in econometrics the boundaries are of great interest. In such cases it is strongly recommended to use the local linear estimator (or a local polynomial estimator with $p \ge 1$).

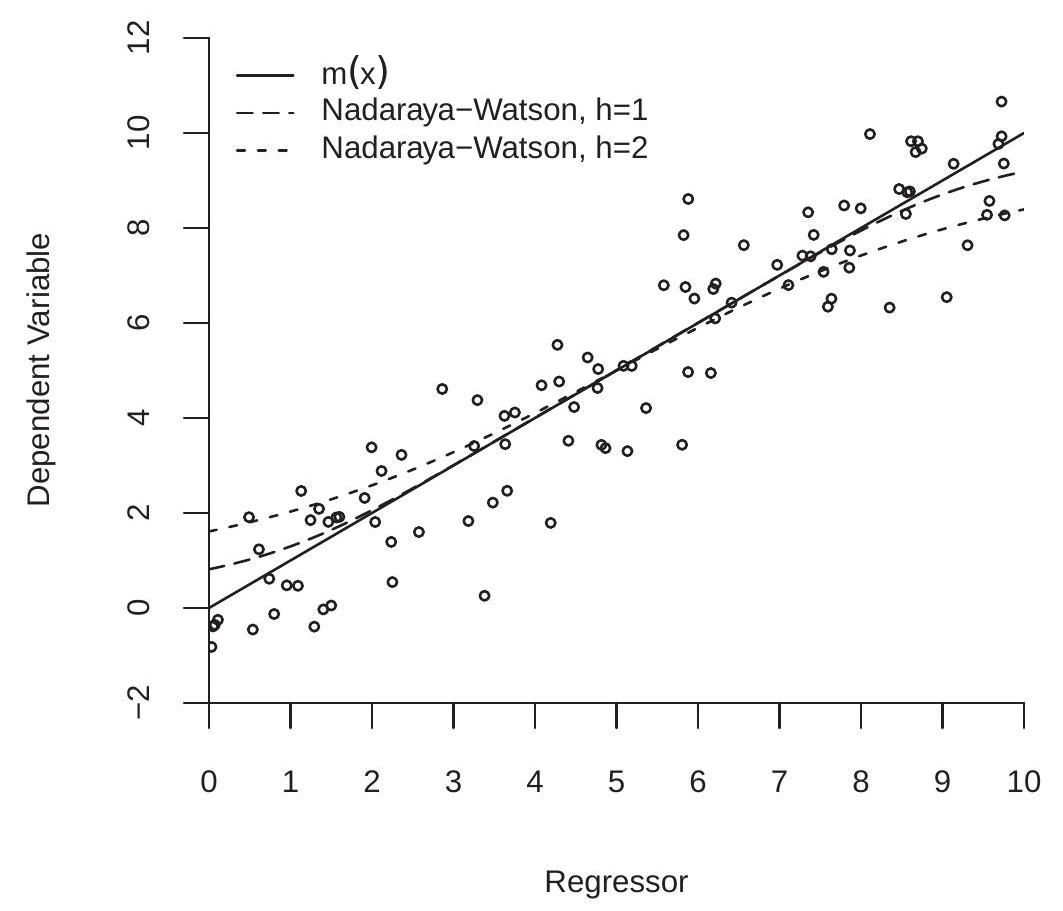

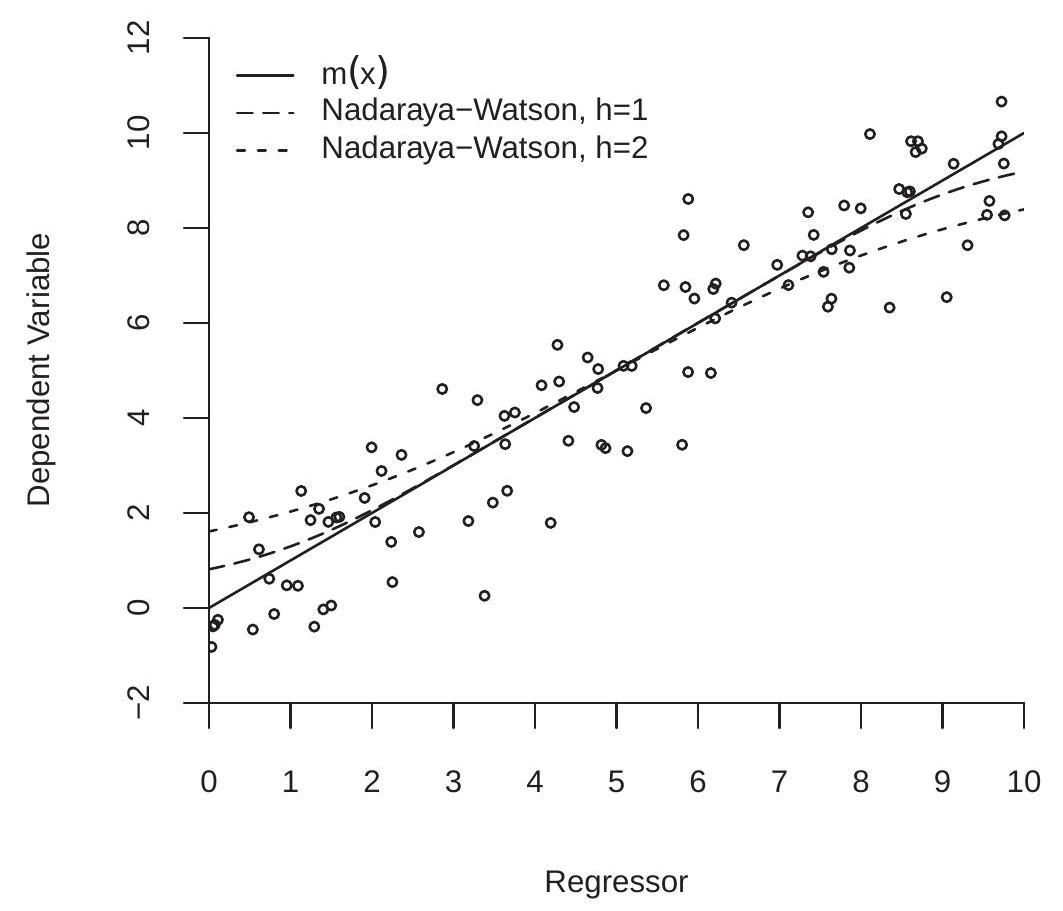

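To understand the problem it may be helpful to examine Figure 19.3. This shows a scatter plot of 100 observations generated from a design in which $X$ has bounded support $[\underline{x}, \overline{x}]$ and the CEF $m(x)$ is linear with a positive slope. Suppose we are interested in the CEF $m(\underline{x})$ at the lower boundary $x=\underline{x}$. The Nadaraya-Watson estimator equals a weighted average of the $Y$ observations for small values of $X_i-\underline{x}$. Since $X_i \ge \underline{x}$, these are all observations for which $m(X_i) \ge m(\underline{x})$, and therefore $\widehat{m}_{\mathrm{nw}}(\underline{x})$ is biased upwards. Symmetrically, the Nadaraya-Watson estimator at the upper boundary $x=\overline{x}$ is a weighted average of observations for which $m(X_i) \le m(\overline{x})$ and therefore $\widehat{m}_{\mathrm{nw}}(\overline{x})$ is biased downwards.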

In contrast, the local linear estimators $\widehat{m}_{\mathrm{LL}}(\underline{x})$ and $\widehat{m}_{\mathrm{LL}}(\overline{x})$ are unbiased in this example because $m(x)$ is linear in $x$. The local linear estimator fits a linear regression line. Since the expectation is correctly specified there is no estimation bias.
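The boundary effect is easy to reproduce without any noise. The sketch below uses a hypothetical noise-free design, $Y_i=X_i$ on an equally spaced grid over $[0,10]$, so $m(x)=x$ and any nonzero error is pure smoothing bias. It compares NW and LL (Gaussian kernel, $h=1$, helper names hypothetical) at the two boundaries and at the midpoint.

```python
import numpy as np


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


def nw_at(x, X, Y, h):
    k = gaussian_kernel((X - x) / h)
    return (k @ Y) / k.sum()


def ll_at(x, X, Y, h):
    k = gaussian_kernel((X - x) / h)
    Z = np.column_stack([np.ones_like(X), X - x])
    ZK = Z.T * k
    return np.linalg.solve(ZK @ Z, ZK @ Y)[0]


# Hypothetical noise-free design: m(x) = x on an equally spaced grid over [0, 10]
X = np.linspace(0.0, 10.0, 1001)
Y = X.copy()
h = 1.0
points = (0.0, 5.0, 10.0)                       # lower boundary, interior, upper boundary
bias_nw = {x0: nw_at(x0, X, Y, h) - x0 for x0 in points}
bias_ll = {x0: ll_at(x0, X, Y, h) - x0 for x0 in points}
```

NW is pulled upward at $x=0$ and downward at $x=10$ but is essentially unbiased at the symmetric interior point, while LL is exact everywhere because the CEF is linear.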

The exact bias of the NW estimator is shown in Figure 19.3 by the dashed lines. The long and short dashes are the expectations $\mathbb{E}\left[\widehat{m}_{\mathrm{nw}}(x)\right]$ for two different bandwidths. We can see that the bias is substantial. For the larger bandwidth the bias is visible for all values of $x$. For the smaller bandwidth the bias is minimal for $x$ in the central range of the support, but is still quite substantial for $x$ near the boundaries.

To calculate the asymptotic smoothing bias we can revisit the proof of Theorem 19.1.1 which calculated the asymptotic bias at interior points. Equation (19.29) calculates the bias of the numerator of the estimator expressed as an integral over the marginal density. Evaluated at a lower boundary the density is positive only for $u \ge 0$ so the integral is over the positive region $[0, \infty)$. This applies as well to equation (19.31) and the equations which follow. In this case the leading term of this expansion is the first term (19.32) which is proportional to $h$ rather than $h^{2}$. Completing the calculations we find the following. For a function $g$ define the one-sided limits $g(x+)=\lim_{z \downarrow x} g(z)$ and $g(x-)=\lim_{z \uparrow x} g(z)$.

Calculated by simulation from 10,000 simulation replications.

Figure 19.3: Boundary Bias

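Theorem 19.5 Suppose Assumption 19.1 holds. Set $\mu_K=2 \int_0^{\infty} u K(u)\, du$. Let the support of $X$ be $S=[\underline{x}, \overline{x}]$.

1. If $m'(\underline{x}+)$ and $f(\underline{x}+)$ exist, and $f(\underline{x}+)>0$, then

   $$\mathbb{E}\left[\widehat{m}_{\mathrm{nw}}(\underline{x}) \mid \boldsymbol{X}\right]=m(\underline{x})+h m'(\underline{x}+) \mu_K+o_p(h)+O_p\left(\sqrt{h/n}\right).$$

2. If $m'(\overline{x}-)$ and $f(\overline{x}-)$ exist, and $f(\overline{x}-)>0$, then

   $$\mathbb{E}\left[\widehat{m}_{\mathrm{nw}}(\overline{x}) \mid \boldsymbol{X}\right]=m(\overline{x})-h m'(\overline{x}-) \mu_K+o_p(h)+O_p\left(\sqrt{h/n}\right).$$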

Theorem 19.5 shows that the asymptotic bias of the NW estimator at the boundary is $O(h)$ and depends on the slope of $m(x)$ at the boundary. When the slope is positive the NW estimator is upward biased at the lower boundary and downward biased at the upper boundary. The standard interpretation of Theorem 19.5 is that the NW estimator has high bias near boundary points.
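For the Gaussian kernel $\mu_K=2\int_0^\infty u\phi(u)\,du=\sqrt{2/\pi}\approx 0.80$. The sketch below computes $\mu_K$ by a Riemann sum and compares the leading bias term $h\,m'(\underline{x}+)\mu_K$ of Theorem 19.5 with the NW estimate at $\underline{x}=0$, in a hypothetical noise-free design with $m(x)=x$ and a dense, equally spaced grid of $X$ values on $[0,10]$.

```python
import numpy as np


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


# mu_K = 2 * int_0^inf u K(u) du by a Riemann sum (the tail beyond u = 12 is negligible)
step = 1e-4
u = np.arange(0.0, 12.0, step)
mu_K = 2.0 * (u * gaussian_kernel(u)).sum() * step

# Hypothetical noise-free design: m(x) = x (slope 1), dense equally spaced X on [0, 10]
X = np.linspace(0.0, 10.0, 100001)
h = 0.5
k = gaussian_kernel(X / h)          # kernel weights at the lower boundary x = 0
nw_at_zero = (k @ X) / k.sum()      # NW estimate of m(0) = 0
leading_bias = h * 1.0 * mu_K       # h * m'(0+) * mu_K from Theorem 19.5
```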

Similarly we can evaluate the performance of the LL estimator. We summarize the results without derivation (as they are more technically challenging) and instead refer interested readers to Cheng, Fan and Marron (1997) and Imbens and Kalyanaraman (2012).

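Define the kernel moments $\nu_j=\int_0^{\infty} u^{j} K(u)\, du$, $\pi_j=\int_0^{\infty} u^{j} K(u)^{2}\, du$, and projected kernel

$$K^{*}(u)=\left[\begin{array}{ll}1 & 0\end{array}\right]\left[\begin{array}{ll}\nu_0 & \nu_1 \\ \nu_1 & \nu_2\end{array}\right]^{-1}\left[\begin{array}{c}1 \\ u\end{array}\right] K(u)=\frac{\nu_2-\nu_1 u}{\nu_0 \nu_2-\nu_1^{2}} K(u).$$

Define its second moment

$$\sigma_{K^{*}}^{2}=\int_0^{\infty} u^{2} K^{*}(u)\, du=\frac{\nu_2^{2}-\nu_1 \nu_3}{\nu_0 \nu_2-\nu_1^{2}}$$

and roughness

$$R_K^{*}=\int_0^{\infty} K^{*}(u)^{2}\, du=\frac{\nu_2^{2} \pi_0-2 \nu_1 \nu_2 \pi_1+\nu_1^{2} \pi_2}{\left(\nu_0 \nu_2-\nu_1^{2}\right)^{2}}.$$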

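Theorem 19.6 Under the assumptions of Theorem 19.5, at a boundary point $\underline{x}$

1. $\mathbb{E}\left[\widehat{m}_{\mathrm{LL}}(\underline{x}) \mid \boldsymbol{X}\right]=m(\underline{x})+\frac{h^{2} m''(\underline{x}+) \sigma_{K^{*}}^{2}}{2}+o_p\left(h^{2}\right)+O_p\left(\sqrt{h/n}\right)$
2. $\operatorname{var}\left[\widehat{m}_{\mathrm{LL}}(\underline{x}) \mid \boldsymbol{X}\right]=\frac{R_K^{*} \sigma^{2}(\underline{x}+)}{f(\underline{x}+) n h}+o_p\left(\frac{1}{n h}\right)$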

Theorem 19.6 shows that the asymptotic bias of the LL estimator at a boundary is $O(h^{2})$, the same as at interior points and is invariant to the slope of $m(x)$. The theorem also shows that the asymptotic variance has the same rate as at interior points.

Taking Theorems 19.1, 19.2, 19.5, and 19.6 together we conclude that the local linear estimator has superior asymptotic properties relative to the NW estimator. At interior points the two estimators have the same asymptotic variance. The bias of the LL estimator is invariant to the slope of $m(x)$ and its asymptotic bias only depends on the second derivative while the bias of the NW estimator depends on both the first and second derivatives. At boundary points the asymptotic bias of the NW estimator is $O(h)$ which is of higher order than the $O(h^{2})$ bias of the LL estimator. For these reasons we recommend the local linear estimator over the Nadaraya-Watson estimator. A similar argument can be made to recommend the local cubic estimator, but this is not widely used.

The asymptotic bias and variance of the LL estimator at the boundary are slightly different than in the interior. The difference is that the bias and variance depend on the moments of the kernel-like function $K^{*}(u)$ rather than the original kernel $K(u)$.
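The boundary quantities $\sigma^2_{K^*}$ and $R_K^*$ can be computed by numerical integration. In the sketch below (hypothetical name `boundary_kernel_moments`) the kernels are written on $[-1,1]$ without unit-variance normalization. For the triangular kernel $1-|u|$ our own hand calculation gives $\sigma^2_{K^*}=-1/10$ and $R_K^*=24/5$, and for the rectangular kernel $\tfrac12\mathbb{1}\{|u|\le1\}$ it gives $-1/6$ and $4$. The negative second moment reflects the fact that $K^*(u)$ takes negative values for large $u$.

```python
import numpy as np


def boundary_kernel_moments(K, upper, step=1e-5):
    """Return (sigma^2_{K*}, R_K^*) for the boundary kernel K*(u) built from K on [0, upper]."""
    u = np.arange(0.0, upper + step, step)
    k = K(u)
    nu = [(u**j * k).sum() * step for j in range(3)]        # nu_0, nu_1, nu_2
    D = nu[0] * nu[2] - nu[1] ** 2
    k_star = (nu[2] - nu[1] * u) / D * k                     # projected kernel K*(u)
    sigma2_star = (u**2 * k_star).sum() * step               # int_0^inf u^2 K*(u) du
    R_star = (k_star**2).sum() * step                        # int_0^inf K*(u)^2 du
    return sigma2_star, R_star


# Kernels on [-1, 1], not rescaled to unit variance (hypothetical illustration)
triangular = lambda u: np.clip(1.0 - np.abs(u), 0.0, None)
rectangular = lambda u: np.where(np.abs(u) <= 1.0, 0.5, 0.0)
sigma2_tri, R_tri = boundary_kernel_moments(triangular, 1.0)
sigma2_rect, R_rect = boundary_kernel_moments(rectangular, 1.0)
```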

An interesting question is to find the optimal kernel function for boundary estimation. By the same calculations as for Theorem 19.4 we find that the optimal kernel $K^{*}(u)$ minimizes the roughness $R_K^{*}$ given the second moment $\sigma_{K^{*}}^{2}$ and as argued for Theorem 19.4 this is achieved when $K^{*}(u)$ equals a quadratic function in $u$. Since $K^{*}(u)$ is the product of $K(u)$ and a linear function this means that $K(u)$ must be linear in $u$, implying that the optimal kernel is the Triangular kernel. See Cheng, Fan, and Marron (1997). Calculations similar to those following Theorem 19.4 show that the efficiency losses (measured by root AIMSE) of estimation using the Epanechnikov, Gaussian, and Rectangular kernels are 1%, 1%, and 3%, respectively.

Nonparametric Residuals and Prediction Errors

Given any nonparametric regression estimator $\widehat{m}(x)$ the fitted regression at $x=X_i$ is $\widehat{m}(X_i)$ and the fitted residual is $\widehat{e}_i=Y_i-\widehat{m}(X_i)$. As a general rule, but especially when the bandwidth $h$ is small, it is hard to view $\widehat{e}_i$ as a good measure of the fit of the regression. For the NW and LL estimators, as $h \rightarrow 0$ then $\widehat{m}(X_i) \rightarrow Y_i$ and therefore $\widehat{e}_i \rightarrow 0$. This is clear overfitting as the true error $e_i$ is not zero. In general, because $\widehat{m}(X_i)$ is a local average which includes $Y_i$, the fitted value will be necessarily close to $Y_i$ and the residual $\widehat{e}_i$ small, and the degree of this overfitting increases as $h$ decreases.

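A standard solution is to measure the fit of the regression at $x=X_i$ by re-estimating the model excluding the $i^{\text{th}}$ observation. Let $\widetilde{m}_{-i}(x)$ be the leave-one-out nonparametric estimator computed without observation $i$. For example, for Nadaraya-Watson regression, this is

$$\widetilde{m}_{-i}(x)=\frac{\sum_{j \neq i} K\left(\frac{X_j-x}{h}\right) Y_j}{\sum_{j \neq i} K\left(\frac{X_j-x}{h}\right)}.$$

Notationally, the “$-i$” subscript is used to indicate that the $i^{\text{th}}$ observation is omitted.

The leave-one-out predicted value for $Y_i$ at $x=X_i$ is $\widetilde{m}_{-i}(X_i)$ and the leave-one-out prediction error is

$$\widetilde{e}_i=Y_i-\widetilde{m}_{-i}(X_i).$$

Since $\widetilde{m}_{-i}(X_i)$ is not a function of $Y_i$ there is no tendency for $\widetilde{m}_{-i}(X_i)$ to overfit for small $h$. Consequently, $\widetilde{e}_i$ is a good measure of the fit of the estimated nonparametric regression.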

When possible the leave-one-out prediction errors $\widetilde{e}_i$ should be used instead of the residuals $\widehat{e}_i$.
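For NW, the leave-one-out errors for all observations can be computed at once by zeroing the diagonal of the kernel weight matrix. In the sketch below (hypothetical name `nw_loo_errors`, Gaussian kernel, simulated data) the checks also confirm an identity that follows from the NW formula: $\widehat e_i=(1-w_{ii})\widetilde e_i$ with $w_{ii}=K(0)/\sum_j K((X_j-X_i)/h)$. The fitted residuals are therefore never larger in magnitude than the leave-one-out errors.

```python
import numpy as np


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


def nw_loo_errors(X, Y, h, kernel=gaussian_kernel):
    """Return NW fitted residuals e_hat_i and leave-one-out prediction errors e_tilde_i."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    W = kernel((X[None, :] - X[:, None]) / h)   # W[i, j] = K((X_j - X_i) / h)
    fitted = (W @ Y) / W.sum(axis=1)            # m_hat(X_i): includes Y_i itself
    W_loo = W.copy()
    np.fill_diagonal(W_loo, 0.0)                # drop observation i from its own fit
    loo = (W_loo @ Y) / W_loo.sum(axis=1)       # m_tilde_{-i}(X_i)
    return Y - fitted, Y - loo


# Hypothetical simulated data
rng = np.random.default_rng(5)
X = rng.uniform(0, 10, size=60)
Y = np.sin(X) + rng.normal(scale=0.3, size=60)
e_hat, e_tilde = nw_loo_errors(X, Y, h=0.3)
```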

Cross-Validation Bandwidth Selection

The most popular method in applied statistics to select bandwidths is cross-validation. The general idea is to estimate the model fit based on leave-one-out estimation. Here we describe the method as typically applied for regression estimation. The method applies to NW, LL, and LP estimation, as well as other nonparametric estimators.

To be explicit about the dependence of the estimator on the bandwidth let us write an estimator of $m(x)$ with a given bandwidth $h$ as $\widehat{m}(x, h)$.

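Ideally, we would like to select $h$ to minimize the integrated mean-squared error (IMSE) of $\widehat{m}(x, h)$ as an estimator of $m(x)$:

$$\operatorname{IMSE}_n(h)=\int_S \mathbb{E}\left[\left(\widehat{m}(x, h)-m(x)\right)^{2}\right] f(x) w(x)\, dx$$

where $f(x)$ is the marginal density of $X$ and $w(x)$ is an integrable weight function. The weight $w(x)$ is the same as used in (19.5) and can be omitted when $X$ has bounded support.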

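The difference $\widehat{m}(x, h)-m(x)$ at $x=X_i$ can be estimated by the leave-one-out prediction errors

$$\widetilde{e}_i(h)=Y_i-\widetilde{m}_{-i}(X_i, h)$$

where we are being explicit about the dependence on the bandwidth $h$. A reasonable estimator of IMSE is the weighted average mean squared prediction errors

$$\operatorname{CV}(h)=\frac{1}{n} \sum_{i=1}^{n} \widetilde{e}_i(h)^{2} w(X_i). \tag{19.11}$$

This function of $h$ is known as the cross-validation criterion. Once again, if $X$ has bounded support then the weights $w(X_i)$ can be omitted and this is typically done in practice.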

It turns out that the cross-validation criterion is an unbiased estimator of the IMSE plus a constant for a sample with $n-1$ observations.

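Theorem 19.7

$$\mathbb{E}\left[\operatorname{CV}(h)\right]=\bar{\sigma}^{2}+\operatorname{IMSE}_{n-1}(h)$$

where $\bar{\sigma}^{2}=\mathbb{E}\left[e^{2} w(X)\right]$.

The proof of Theorem 19.7 is presented in Section 19.26.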

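Since $\bar{\sigma}^{2}$ is a constant independent of the bandwidth, $\mathbb{E}\left[\operatorname{CV}(h)\right]$ is a shifted version of $\operatorname{IMSE}_{n-1}(h)$. In particular, the values of $h$ which minimize $\mathbb{E}\left[\operatorname{CV}(h)\right]$ and $\operatorname{IMSE}_{n-1}(h)$ are identical. When $n$ is large the bandwidths which minimize $\operatorname{IMSE}_{n-1}(h)$ and $\operatorname{IMSE}_n(h)$ are nearly identical so $\operatorname{CV}(h)$ is essentially unbiased as an estimator of $\operatorname{IMSE}_n(h)+\bar{\sigma}^{2}$. These considerations lead to the recommendation to select $h$ as the value which minimizes $\operatorname{CV}(h)$.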

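The cross-validation bandwidth $\widehat{h}$ is the value which minimizes $\operatorname{CV}(h)$

$$\widehat{h}=\underset{h \ge h_{\ell}}{\operatorname{argmin}}\, \operatorname{CV}(h) \tag{19.13}$$

for some $h_{\ell}>0$. The restriction $h \ge h_{\ell}$ can be imposed so that $\operatorname{CV}(h)$ is not evaluated over unreasonably small bandwidths.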

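There is not an explicit solution to the minimization problem (19.13), so it must be solved numerically. One method is grid search. Create a grid of values for $h$, e.g. $[h_1, h_2, \ldots, h_J]$, evaluate $\operatorname{CV}(h_j)$ for $j=1, \ldots, J$, and set

$$\widehat{h}=\underset{h \in\left[h_1, h_2, \ldots, h_J\right]}{\operatorname{argmin}}\, \operatorname{CV}(h).$$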

Evaluation using a coarse grid is typically sufficient for practical application. Plots of $\operatorname{CV}(h)$ against $h$ are a useful diagnostic tool to verify that the minimum of $\operatorname{CV}(h)$ has been obtained. A computationally more efficient method for obtaining the solution (19.13) is Golden-Section Search; see Probability and Statistics for Economists.
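A grid-search sketch of (19.13) for the NW estimator follows. The names `cv_criterion` and `cv_bandwidth`, the simulated data, and the grid are illustrative assumptions; the weights $w(X_i)$ are set to one, as the text suggests for bounded support.

```python
import numpy as np


def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)


def cv_criterion(h, X, Y, kernel=gaussian_kernel):
    """CV(h) for the NW estimator with unit weights w(X_i) = 1."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    W = kernel((X[None, :] - X[:, None]) / h)
    np.fill_diagonal(W, 0.0)                       # leave observation i out of its own fit
    e_tilde = Y - (W @ Y) / W.sum(axis=1)          # leave-one-out prediction errors
    return np.mean(e_tilde**2)


def cv_bandwidth(X, Y, grid, kernel=gaussian_kernel):
    """Grid search: return the CV-minimizing bandwidth and the CV values on the grid."""
    grid = np.asarray(grid, dtype=float)
    cv = np.array([cv_criterion(h, X, Y, kernel) for h in grid])
    return grid[np.argmin(cv)], cv


# Hypothetical simulated data and bandwidth grid
rng = np.random.default_rng(6)
X = rng.uniform(0, 10, size=100)
Y = np.sin(X) + rng.normal(scale=0.5, size=100)
grid = np.linspace(0.1, 3.0, 59)
h_cv, cv_values = cv_bandwidth(X, Y, grid)
```

Plotting `cv_values` against `grid` gives the diagnostic plot of $\operatorname{CV}(h)$ recommended above; if the minimum sits at the largest grid value, the grid should be extended (see the discussion of unbounded solutions that follows).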

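It is possible for the solution (19.13) to be unbounded, that is, $\operatorname{CV}(h)$ is decreasing for large $h$ so that $\widehat{h}=\infty$. This is okay. It simply means that the regression estimator simplifies to its full-sample version. For the Nadaraya-Watson estimator this is $\widehat{m}_{\mathrm{nw}}(x)=\overline{Y}$. For the local linear estimator this is $\widehat{m}_{\mathrm{LL}}(x)=\widehat{\alpha}+\widehat{\beta} x$.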

For NW and LL estimation, the criterion (19.11) requires leave-one-out estimation of the conditional mean at each observation $X_i$. This is different from calculation of the estimator $\widehat{m}(x)$ as the latter is typically done at a set of fixed values of $x$ for purposes of display.

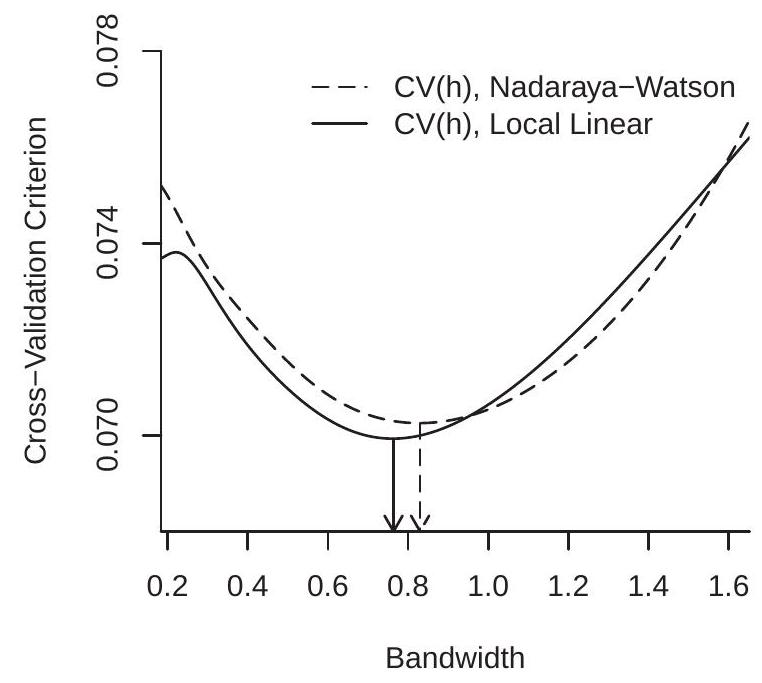

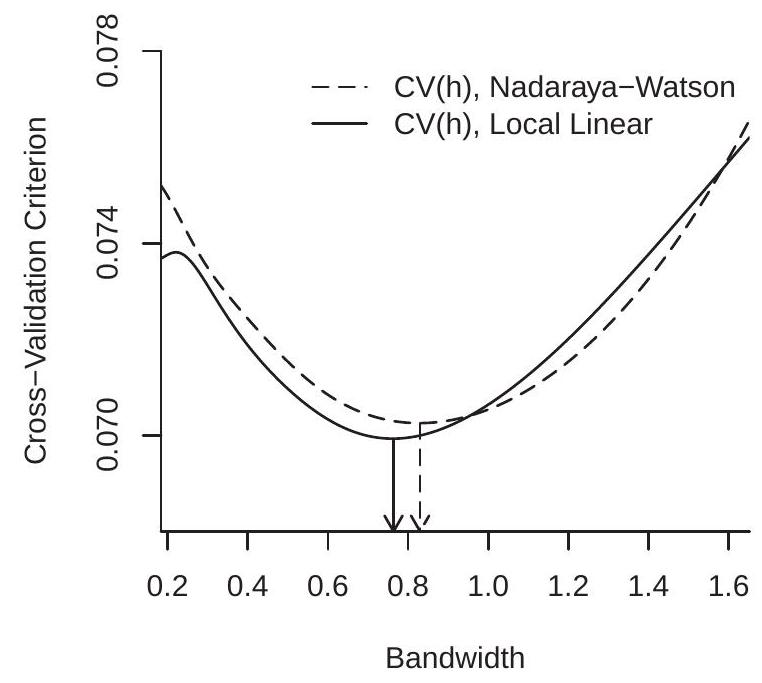

To illustrate, Figure 19.4(a) displays the cross-validation criteria $\operatorname{CV}(h)$ for the Nadaraya-Watson and Local Linear estimators using the data from Figure 19.1, both using the Gaussian kernel. The CV functions are computed on a grid of 200 bandwidth values. The CV-minimizing bandwidths for the Nadaraya-Watson and local linear estimators are shown in Figure 19.4(a) by the arrows. These are somewhat higher than the rule of thumb value calculated earlier.

The CV criterion can also be used to select between different nonparametric estimators. The CV-selected estimator is the one with the lowest minimized CV criterion. For example, in Figure 19.4(a), you can see that the minimized CV criterion of the LL estimator is lower than the minimum obtained by the NW estimator. Since the LL estimator achieves a lower value of the CV criterion, LL is the CV-selected estimator. The difference, however, is small, indicating that the two estimators achieve similar IMSE.

Figure 19.4: Bandwidth Selection. Panel (a): Cross-Validation Criterion; panel (b): Nonparametric Estimates.

Figure 19.4(b) displays the local linear estimates using the ROT and CV bandwidths along with the true conditional mean $m(x)$. The estimators track the true function quite well, and the difference between the bandwidths is relatively minor in this application.

Asymptotic Distribution

We first provide a consistency result.

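Theorem 19.8 Under Assumption 19.1, $\widehat m_{\mathrm{nw}}(x) \xrightarrow{p} m(x)$ and $\widehat m_{\mathrm{LL}}(x) \xrightarrow{p} m(x)$.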

A proof for the Nadaraya-Watson estimator is presented in Section 19.26. For the local linear estimator see Fan and Gijbels (1996).

Theorem 19.8 shows that the estimators are consistent for $m(x)$ under mild continuity assumptions. In particular, no smoothness conditions on $m(x)$ are required beyond continuity.

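We next present an asymptotic distribution result. The following shows that the kernel regression estimators are asymptotically normal with a nonparametric rate of convergence, a non-trivial asymptotic bias, and a non-degenerate asymptotic variance.

Theorem 19.9 Suppose Assumption 19.1 holds. Assume in addition that $m''(x)$ and $f'(x)$ are continuous in a neighborhood $\mathcal{N}$ of $x$, that for some $r > 2$ and $\bar\sigma < \infty$,

$$\mathbb{E}\left[|e|^{r} \mid X = x\right] \le \bar\sigma \quad \text{for } x \in \mathcal{N}, \tag{19.14}$$

and

$$nh^5 = O(1). \tag{19.15}$$

Then

$$\sqrt{nh}\left(\widehat m_{\mathrm{nw}}(x) - m(x) - h^2 B_{\mathrm{nw}}(x)\right) \xrightarrow{d} \mathrm{N}\left(0, \frac{R_K \sigma^2(x)}{f(x)}\right). \tag{19.16}$$

Similarly,

$$\sqrt{nh}\left(\widehat m_{\mathrm{LL}}(x) - m(x) - h^2 B_{\mathrm{LL}}(x)\right) \xrightarrow{d} \mathrm{N}\left(0, \frac{R_K \sigma^2(x)}{f(x)}\right).$$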

A proof for the Nadaraya-Watson estimator appears in Section 19.26. For the local linear estimator see Fan and Gijbels (1996).

Relative to Theorem 19.8, Theorem 19.9 requires stronger smoothness conditions on the conditional mean and marginal density. There are also two technical regularity conditions. The first is a conditional moment bound (19.14) (which is used to verify the Lindeberg condition for the CLT) and the second is the bandwidth bound $nh^5 = O(1)$ (19.15). The latter means that the bandwidth must decline to zero at least at the rate $n^{-1/5}$ and is used to ensure that higher-order bias terms do not enter the asymptotic distribution (19.16).

There are several interesting features about the asymptotic distribution which are noticeably different from those of parametric estimators. First, the estimators converge at the rate $\sqrt{nh}$, not $\sqrt{n}$. Since $h \to 0$, $\sqrt{nh}$ diverges more slowly than $\sqrt{n}$, so the nonparametric estimators converge more slowly than a parametric estimator. Second, the asymptotic distribution contains a non-negligible bias term $h^2 B(x)$. Third, the distribution (19.16) is identical in form to that for the kernel density estimator (see Chapter 17 of Probability and Statistics for Economists).

The fact that the estimators converge at the rate $\sqrt{nh}$ has led to the interpretation of $nh$ as the “effective sample size”. This is because the number of observations being used to construct $\widehat m(x)$ is proportional to $nh$, not $n$ as for a parametric estimator.
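A worked example may help fix the idea. Taking, purely for illustration, $h = n^{-1/5}$ (the optimal rate with the constant set to one) and $n = 10{,}000$:

$$nh = n^{4/5} = 10^{16/5} = 10^{3.2} \approx 1585, \qquad \sqrt{nh} \approx 39.8, \qquad \sqrt{n} = 100.$$

So in this rough sense, although 10,000 observations are available, the precision of $\widehat m(x)$ is governed by roughly 1,600 observations near $x$.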

It is helpful to understand that the nonparametric estimator has a reduced convergence rate relative to parametric asymptotic theory because the object being estimated, $m(x)$, is nonparametric. This is harder than estimating a finite dimensional parameter, and thus comes at a cost.

Unlike parametric estimation, the asymptotic distribution of the nonparametric estimator includes a term representing the bias of the estimator. The asymptotic distribution (19.16) shows the form of this bias. It is proportional to the squared bandwidth $h^2$ (the degree of smoothing) and to the function $B_{\mathrm{nw}}(x)$ or $B_{\mathrm{LL}}(x)$, which depends on the slope and curvature of the CEF $m(x)$. Interestingly, when $m(x)$ is constant then $B_{\mathrm{nw}}(x) = B_{\mathrm{LL}}(x) = 0$ and the kernel estimator has no asymptotic bias. The bias is essentially increasing in the curvature $m''(x)$ of the CEF. This is because the local averaging smooths $m(x)$, and the smoothing induces more bias when $m(x)$ is curved. Since the bias terms are multiplied by $h^2$, which tends to zero, it might be thought that the bias terms are asymptotically negligible and can be omitted. This is mistaken, because they are within the parentheses which are multiplied by the factor $\sqrt{nh}$. The bias terms can only be omitted if $\sqrt{nh}\,h^2 \to 0$, which is known as an undersmoothing condition and is discussed in the next section.

The bandwidth bound (19.15) could be weakened if stronger smoothness conditions are assumed. For example, if higher-order derivatives of $m(x)$ and $f(x)$ are continuous, then (19.15) can be replaced by a weaker bound under which the bandwidth may decline to zero more slowly than $n^{-1/5}$.

The asymptotic variance of $\widehat m(x)$ is inversely proportional to the marginal density $f(x)$. This means that $\widehat m(x)$ has relatively low precision for regions where $X$ has a low density. This makes sense because these are regions where there are relatively few observations. An implication is that the nonparametric estimator will be relatively inaccurate in the tails of the distribution of $X$.

Undersmoothing

The bias term in the asymptotic distribution of the kernel regression estimator can be technically eliminated if the bandwidth is selected to converge to zero faster than the optimal rate $n^{-1/5}$, thus $h = o(n^{-1/5})$. This is called an undersmoothing bandwidth. By using a small bandwidth the bias is reduced and the variance is increased, so that the random component dominates the bias component (asymptotically). The following is the technical statement.

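Theorem 19.10 Under the conditions of Theorem 19.9, and $nh^5 = o(1)$,

$$\sqrt{nh}\left(\widehat m_{\mathrm{nw}}(x) - m(x)\right) \xrightarrow{d} \mathrm{N}\left(0, \frac{R_K \sigma^2(x)}{f(x)}\right)$$

$$\sqrt{nh}\left(\widehat m_{\mathrm{LL}}(x) - m(x)\right) \xrightarrow{d} \mathrm{N}\left(0, \frac{R_K \sigma^2(x)}{f(x)}\right).$$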

Theorem 19.10 has the advantage of no bias term. Consequently this theorem is popular with some authors. There are also several disadvantages. First, the assumption of an undersmoothing bandwidth does not really eliminate the bias; it simply assumes it away. Thus in any finite sample there is always bias. Second, it is not clear how to set a bandwidth so that it is undersmoothing. Third, an undersmoothing bandwidth implies that the estimator has increased variance and is inefficient. Finally, the theory is simply misleading as a characterization of the distribution of the estimator.

Conditional Variance Estimation

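The conditional variance is

$$\sigma^2(x) = \operatorname{var}\left[Y \mid X = x\right] = \mathbb{E}\left[e^2 \mid X = x\right].$$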

There are a number of contexts where it is desirable to estimate $\sigma^2(x)$, including prediction intervals and confidence intervals for the estimated CEF. In general the conditional variance function is nonparametric, as economic models rarely specify the form of $\sigma^2(x)$. Thus estimation of $\sigma^2(x)$ is typically done nonparametrically. Since $\sigma^2(x)$ is the CEF of $e^2$ given $X$, it can be estimated by nonparametric regression. For example, the ideal NW estimator (if $e$ were observed) is

$$\bar\sigma^2(x) = \frac{\sum_{i=1}^n K\left(\frac{X_i - x}{h}\right) e_i^2}{\sum_{i=1}^n K\left(\frac{X_i - x}{h}\right)}.$$

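Since the errors $e_i$ are not observed, we need to replace them with an estimator. A simple choice is the residuals $\widehat e_i = Y_i - \widehat m(X_i)$. A better choice is the leave-one-out prediction errors $\widetilde e_i = Y_i - \widetilde m_{-i}(X_i)$. The latter are recommended for variance estimation as they are not subject to overfitting. With this substitution the NW estimator of the conditional variance is

$$\widehat\sigma^2(x) = \frac{\sum_{i=1}^n K\left(\frac{X_i - x}{h}\right) \widetilde e_i^2}{\sum_{i=1}^n K\left(\frac{X_i - x}{h}\right)}. \tag{19.17}$$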

This estimator depends on a bandwidth $h$, but there is no reason for this bandwidth to be the same as that used to estimate the CEF. The ROT or cross-validation using $\widetilde e_i^2$ as the dependent variable can be used to select the bandwidth for estimation of $\widehat\sigma^2(x)$ separately from the choice for estimation of $\widehat m(x)$.

There is one subtle difference between CEF and conditional variance estimation. The conditional variance is inherently non-negative and it is desirable for the estimator to satisfy this property. The NW estimator (19.17) is necessarily non-negative because it is a smoothed average of the non-negative squared prediction errors. The LL estimator, however, is not guaranteed to be non-negative for all $x$. Furthermore, the NW estimator has as a special case the homoskedastic estimator (the full-sample variance), which may be a relevant selection. For these reasons, the NW estimator may be preferred for conditional variance estimation.
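The two-step procedure can be sketched in a few lines. The example below assumes a Gaussian kernel, a hypothetical heteroskedastic design with $\operatorname{sd}(e \mid X = x) = 0.2 + 0.1x$, and arbitrary bandwidths (0.5 for the mean, 1.5 for the variance); in practice each bandwidth would be chosen by ROT or cross-validation as described above.

```python
import numpy as np


def gauss_kernel(u):
    return np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)


def nw_fit(x_eval, X, Y, h):
    """NW estimates at each point of x_eval."""
    K = gauss_kernel((X[None, :] - x_eval[:, None]) / h)
    return (K @ Y) / K.sum(axis=1)


def loo_errors_nw(X, Y, h):
    """Leave-one-out NW prediction errors."""
    K = gauss_kernel((X[None, :] - X[:, None]) / h)
    np.fill_diagonal(K, 0.0)
    return Y - (K @ Y) / K.sum(axis=1)


# Hypothetical design: sd(e | X = x) = 0.2 + 0.1 x.
rng = np.random.default_rng(2)
X = rng.uniform(0.0, 10.0, 400)
Y = np.sin(X) + (0.2 + 0.1 * X) * rng.normal(size=400)

e_tilde = loo_errors_nw(X, Y, h=0.5)  # step 1: prediction errors from the CEF fit
grid = np.linspace(0.5, 9.5, 19)
# Step 2: NW regression of the squared prediction errors, with its own bandwidth.
sigma2_hat = nw_fit(grid, X, e_tilde ** 2, h=1.5)
```

Because the NW weights are non-negative and the dependent variable is a square, every entry of `sigma2_hat` is non-negative by construction, which is the property that motivates preferring NW here.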

Fan and Yao (1998) derive the asymptotic distribution of the estimator (19.17). They obtain the surprising result that the asymptotic distribution of the two-step estimator $\widehat\sigma^2(x)$ is identical to that of the one-step idealized estimator $\bar\sigma^2(x)$.

Variance Estimation and Standard Errors

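It is relatively straightforward to calculate the exact conditional variance of the Nadaraya-Watson, local linear, or local polynomial estimator. The estimators can be written as

$$\widehat\beta(x) = \left(\mathbf{Z}'\mathbf{K}\mathbf{Z}\right)^{-1}\left(\mathbf{Z}'\mathbf{K}\mathbf{Y}\right) = \left(\mathbf{Z}'\mathbf{K}\mathbf{Z}\right)^{-1}\left(\mathbf{Z}'\mathbf{K}\mathbf{m}\right) + \left(\mathbf{Z}'\mathbf{K}\mathbf{Z}\right)^{-1}\left(\mathbf{Z}'\mathbf{K}\mathbf{e}\right)$$

where $\mathbf{Z}$ stacks the rows $Z_i(x)' = \left(1, X_i - x, \ldots, (X_i - x)^p\right)$ ($p = 0$ for NW and $p = 1$ for LL), $\mathbf{K} = \operatorname{diag}\left\{K\left(\frac{X_1 - x}{h}\right), \ldots, K\left(\frac{X_n - x}{h}\right)\right\}$, and $\mathbf{m}$ is the vector of means $m(X_i)$. The first component is a function only of the regressors and the second is linear in the error $\mathbf{e}$. Thus conditionally on the regressors $\mathbf{X}$,

$$V(x) = \operatorname{var}\left[\widehat\beta(x) \mid \mathbf{X}\right] = \left(\mathbf{Z}'\mathbf{K}\mathbf{Z}\right)^{-1}\left(\mathbf{Z}'\mathbf{K}\mathbf{D}\mathbf{K}\mathbf{Z}\right)\left(\mathbf{Z}'\mathbf{K}\mathbf{Z}\right)^{-1}$$

where $\mathbf{D} = \operatorname{diag}\left\{\sigma^2(X_1), \ldots, \sigma^2(X_n)\right\}$.

A White-type estimator can be formed by replacing $\sigma^2(X_i)$ with the squared residuals $\widehat e_i^2$ or prediction errors $\widetilde e_i^2$:

$$\widehat V(x) = \left(\mathbf{Z}'\mathbf{K}\mathbf{Z}\right)^{-1}\left(\sum_{i=1}^n K\left(\frac{X_i - x}{h}\right)^2 Z_i(x) Z_i(x)' \widetilde e_i^2\right)\left(\mathbf{Z}'\mathbf{K}\mathbf{Z}\right)^{-1}. \tag{19.18}$$

Alternatively, $\sigma^2(X_i)$ could be replaced with an estimator such as (19.17), evaluated either at $X_i$ or at $x$.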

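A simple option is the asymptotic formula

$$\widehat V_{\widehat m(x)} = \frac{R_K \widehat\sigma^2(x)}{nh \widehat f(x)}$$

with $\widehat\sigma^2(x)$ from (19.17) and a density estimator such as

$$\widehat f(x) = \frac{1}{nb}\sum_{i=1}^n K\left(\frac{X_i - x}{b}\right) \tag{19.19}$$

where $b$ is a bandwidth. (See Chapter 17 of Probability and Statistics for Economists.)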

In general we recommend (19.18) calculated with prediction errors as this is the closest analog of the finite sample covariance matrix.

For local linear and local polynomial estimators the estimator $\widehat V_{\widehat m(x)}$ is the first diagonal element of the matrix $\widehat V(x)$. For any of the variance estimators, a standard error for $\widehat m(x)$ is the square root of $\widehat V_{\widehat m(x)}$.
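A sketch of the recommended White-type calculation (19.18) for the local linear estimator follows, assuming a Gaussian kernel, simulated data, and an arbitrary bandwidth $h = 0.8$. The leave-one-out prediction errors are computed by brute-force refitting, which is simple but costs one fit per observation; the helper names are illustrative.

```python
import numpy as np


def gauss_kernel(u):
    return np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)


def ll_design(x0, X, h):
    """Kernel weights and regressors Z_i(x0) = (1, X_i - x0)'."""
    k = gauss_kernel((X - x0) / h)
    Z = np.column_stack([np.ones_like(X), X - x0])
    return k, Z


def ll_fit(x0, X, Y, h):
    k, Z = ll_design(x0, X, h)
    return np.linalg.solve((Z.T * k) @ Z, (Z.T * k) @ Y)[0]


def loo_errors_ll(X, Y, h):
    """Leave-one-out LL prediction errors (one refit per observation)."""
    n = len(X)
    e = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        e[i] = Y[i] - ll_fit(X[i], X[keep], Y[keep], h)
    return e


def ll_white_se(x0, X, Y, h, e):
    """LL estimate and White-type standard error from (19.18)."""
    k, Z = ll_design(x0, X, h)
    A = (Z.T * k) @ Z                     # Z'KZ
    coef = np.linalg.solve(A, (Z.T * k) @ Y)
    meat = (Z.T * (k ** 2 * e ** 2)) @ Z  # sum_i K_i^2 Z_i Z_i' e_i^2
    A_inv = np.linalg.inv(A)
    V = A_inv @ meat @ A_inv
    return coef[0], np.sqrt(V[0, 0])      # first diagonal element


rng = np.random.default_rng(3)
X = rng.uniform(0.0, 10.0, 200)
Y = np.sin(X) + rng.normal(0.0, 0.5, 200)
h = 0.8
e_tilde = loo_errors_ll(X, Y, h)
m_hat, se = ll_white_se(5.0, X, Y, h, e_tilde)
```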

Confidence Bands

We can construct asymptotic confidence intervals. A 95% interval for $m(x)$ is

$$\widehat m(x) \pm 1.96\sqrt{\widehat V_{\widehat m(x)}}. \tag{19.20}$$

This confidence interval can be plotted along with $\widehat m(x)$ to assess precision.

It should be noted, however, that this confidence interval has two unusual properties. First, it is pointwise in $x$, meaning that it is designed to have coverage probability at each $x$, not uniformly across $x$. Thus such intervals are typically called pointwise confidence intervals.

Second, because it does not account for the bias, it is not an asymptotically valid confidence interval for $m(x)$. Rather, it is an asymptotically valid confidence interval for the pseudo-true (smoothed) value, e.g. $m(x) + h^2 B(x)$. One way of thinking about this is that the confidence intervals account for the variance of the estimator but not its bias. A technical trick which solves this problem is to assume an undersmoothing bandwidth. In this case the above confidence intervals are technically asymptotically valid. This is only a technical trick, as it does not really eliminate the bias but only assumes it away. The plain fact is that once we honestly acknowledge that the true CEF is nonparametric, it follows that any finite sample estimator will have finite sample bias, and this bias will be inherently unknown and thus difficult to incorporate into confidence intervals.

Despite these unusual properties we can still use the interval (19.20) to display uncertainty and as a check on the precision of the estimates.

The Local Nature of Kernel Regression

The kernel regression estimators (Nadaraya-Watson, Local Linear, and Local Polynomial) are all essentially local estimators, in that given $x$ the estimator $\widehat m(x)$ is a function only of the sub-sample for which $X$ is close to $x$. The other observations do not directly affect the estimator. This is reflected in the distribution theory as well. Theorem 19.8 shows that $\widehat m(x)$ is consistent for $m(x)$ if the latter is continuous at $x$. Theorem 19.9 shows that the asymptotic distribution of $\widehat m(x)$ depends only on the functions $m(x)$, $f(x)$, and $\sigma^2(x)$ (and their derivatives) at the point $x$. The distribution does not depend on the global behavior of $m(x)$. Global features do affect the estimator $\widehat m(x)$, however, through the bandwidth $h$. The bandwidth selection methods described here are global in nature as they attempt to minimize AIMSE. Local bandwidths (designed to minimize the AMSE at a single point $x$) can alternatively be employed, but these are less commonly used, in part because such bandwidth estimators are highly imprecise. Picking local bandwidths adds extra noise.

Furthermore, selected bandwidths may be large enough that the estimation window covers a large portion of the sample. In this case estimation is neither local nor fully global.

Application to Wage Regression

We illustrate the methods with an application to the CPS data set. We are interested in the nonparametric regression of log(wage) on experience. To illustrate we take the subsample of Black men with 12 years of education (high school graduates). This sample has 762 observations.

We first need to decide on the region of interest (range of experience) for which we will calculate the regression estimator. We select the range $[0, 40]$ because most observations (90%) have experience levels below 40 years.

To avoid boundary bias we use the local linear estimator.

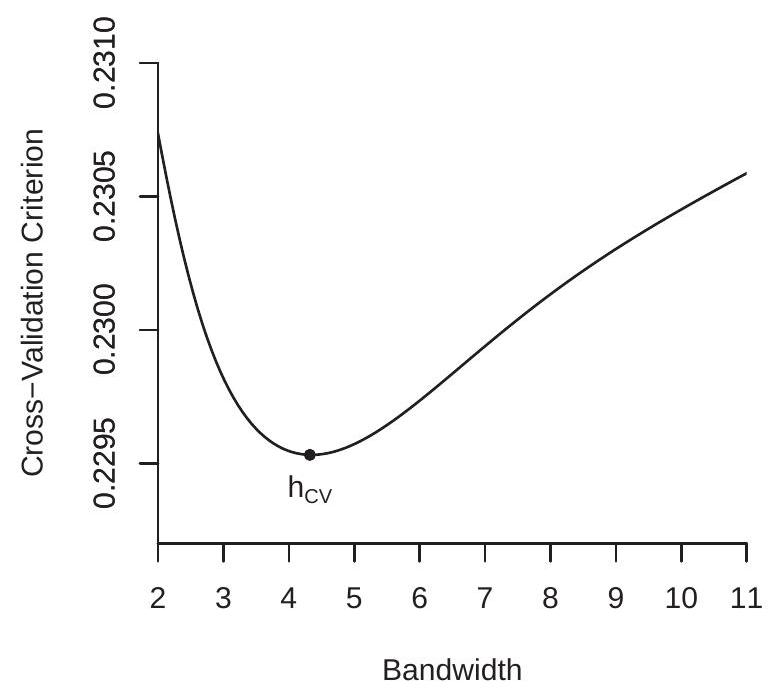

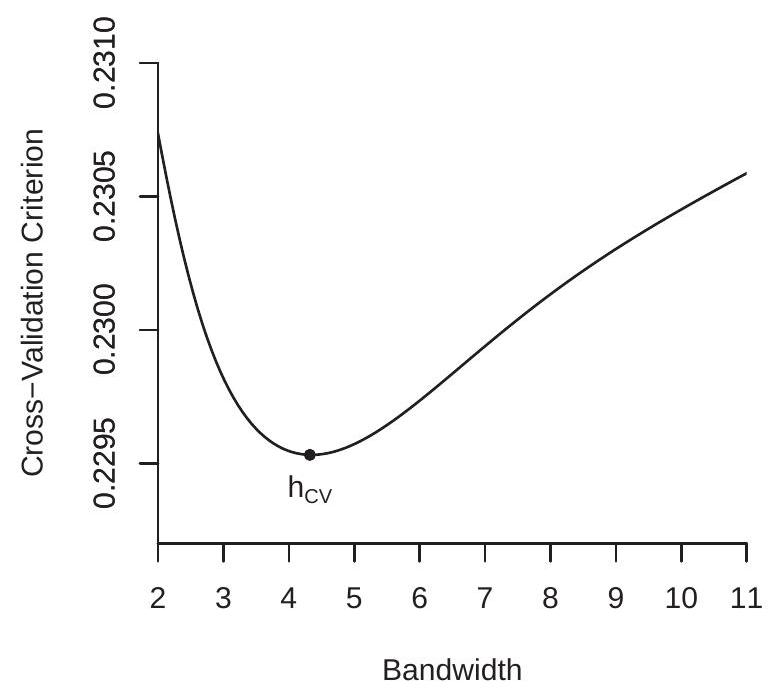

We next calculate the Fan-Gijbels rule-of-thumb bandwidth (19.9). We then calculate the cross-validation criterion, using the rule-of-thumb bandwidth as a baseline. The CV criterion is displayed in Figure 19.5(a). Its minimizer is somewhat smaller than the ROT bandwidth.

We calculate the local linear estimator using both bandwidths and display the estimates in Figure 19.5(b). The regression functions are increasing for experience levels up to 20 years and then become flat. While the functions are roughly concave, they are noticeably different from a traditional quadratic specification. Comparing the estimates, the smaller CV-selected bandwidth produces a regression estimate which is a bit too wavy, while the ROT bandwidth produces a regression estimate which is much smoother yet captures the same essential features. Based on this inspection we select the estimate based on the ROT bandwidth (the solid line in panel (b)).

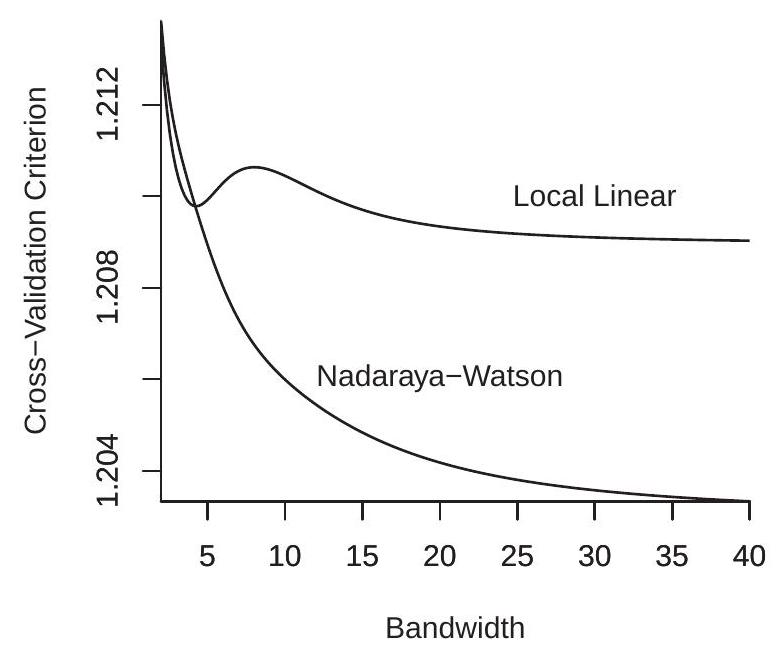

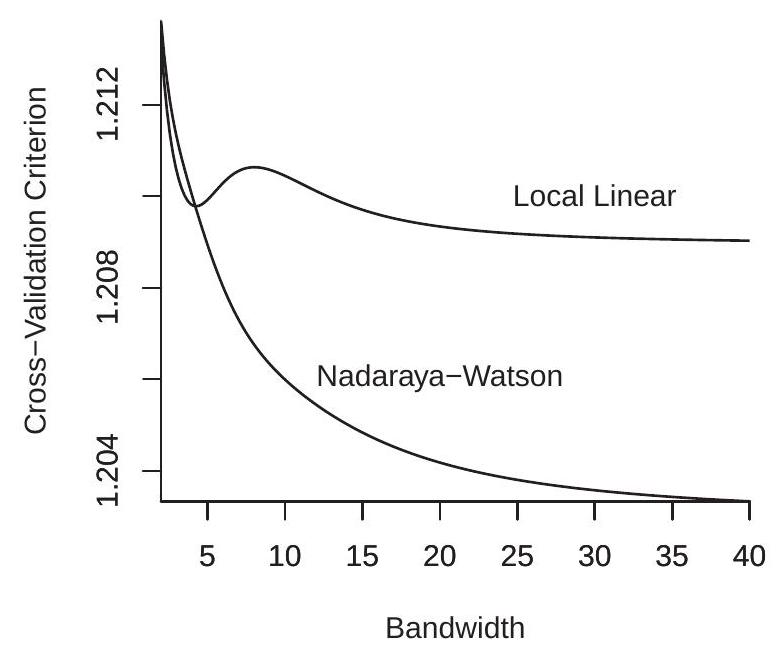

We next consider estimation of the conditional variance function. We calculate the ROT bandwidth for a regression using the squared prediction errors and find that it is larger than the bandwidth used for conditional mean estimation. We next calculate the cross-validation functions for conditional variance estimation (regression of squared prediction errors on experience) using both NW and LL regression. The CV functions are displayed in Figure 19.6(a). The CV plots are quite interesting. For the LL estimator the CV function has a local minimum, but the global minimizer is unbounded. The CV function for the NW estimator is globally decreasing with an unbounded minimizer. The NW estimator also achieves a considerably lower CV value than the LL estimator. This means that the CV-selected variance estimator is the NW estimator with $h = \infty$, which is the simple full-sample estimator $\widehat\sigma^2 = n^{-1}\sum_{i=1}^n \widetilde e_i^2$ calculated with the prediction errors.

We next compute standard errors for the regression function estimates using formula (19.18). In Figure 19.6(b) we display the estimated regression (the same as Figure 19.5(b) using the ROT bandwidth) along with asymptotic confidence bands computed as in (19.20). By displaying the confidence bands we can see that there is considerable imprecision in the estimator for low experience levels. Nevertheless, the estimates and confidence bands show that the experience profile is increasing up to about 20 years of experience and then flattens above 20 years. The estimates imply that for this population (Black men who are high school graduates) the average wage rises for the first 20 years of work experience (from 18 to 38 years of age) and then flattens with no further increases in average wages for the next 20 years of work experience (from 38 to 58 years of age).

Figure 19.5: Log Wage Regression on Experience. (a) Cross-Validation Criterion; (b) Local Linear Regression

Clustered Observations

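Clustered observations are $(Y_{ig}, X_{ig})$ for individuals $i = 1, \ldots, n_g$ in cluster $g = 1, \ldots, G$. The model is

$$Y_{ig} = m(X_{ig}) + e_{ig}, \qquad \mathbb{E}\left[e_{ig} \mid \mathbf{X}_g\right] = 0$$

where $\mathbf{X}_g$ is the stacked $X_{ig}$. The assumption is that the clusters are mutually independent. Dependence within each cluster is unstructured.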

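Write

$$Z_{ig}(x) = \begin{pmatrix} 1 \\ X_{ig} - x \end{pmatrix}, \qquad K_{ig}(x) = K\left(\frac{X_{ig} - x}{h}\right).$$

Stack $Y_{ig}$ and $Z_{ig}(x)$ into cluster-level variables $\mathbf{Y}_g$ and $\mathbf{Z}_g(x)$. Let $\mathbf{K}_g(x) = \operatorname{diag}\left\{K_{ig}(x)\right\}$. The local linear estimator can be written as

$$\widehat\beta(x) = \left(\sum_{g=1}^G \mathbf{Z}_g(x)'\mathbf{K}_g(x)\mathbf{Z}_g(x)\right)^{-1}\left(\sum_{g=1}^G \mathbf{Z}_g(x)'\mathbf{K}_g(x)\mathbf{Y}_g\right). \tag{19.21}$$

The local linear estimator $\widehat m(x)$ is the intercept in (19.21).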

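The natural method to obtain prediction errors is by delete-cluster regression. The delete-cluster estimator of $\beta(x)$ is

$$\widetilde\beta_{(-g)}(x) = \left(\sum_{j \ne g} \mathbf{Z}_j(x)'\mathbf{K}_j(x)\mathbf{Z}_j(x)\right)^{-1}\left(\sum_{j \ne g} \mathbf{Z}_j(x)'\mathbf{K}_j(x)\mathbf{Y}_j\right). \tag{19.22}$$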

Figure 19.6: Confidence Band Construction. (a) Cross-Validation for Conditional Variance; (b) Regression with Confidence Bands

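The delete-cluster estimator of $m(x)$ is the intercept $\widetilde m_{(-g)}(x)$ from (19.22). The delete-cluster prediction error for observation $ig$ is

$$\widetilde e_{ig} = Y_{ig} - \widetilde m_{(-g)}(X_{ig}). \tag{19.23}$$

Let $\widetilde{\mathbf{e}}_g$ be the stacked $\widetilde e_{ig}$ for cluster $g$.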

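The variance of (19.21), conditional on the regressors $\mathbf{X}$, is

$$V(x) = \left(\sum_{g=1}^G \mathbf{Z}_g'\mathbf{K}_g\mathbf{Z}_g\right)^{-1}\left(\sum_{g=1}^G \mathbf{Z}_g'\mathbf{K}_g\mathbf{S}_g\mathbf{K}_g\mathbf{Z}_g\right)\left(\sum_{g=1}^G \mathbf{Z}_g'\mathbf{K}_g\mathbf{Z}_g\right)^{-1} \tag{19.24}$$

where $\mathbf{S}_g = \mathbb{E}\left[\mathbf{e}_g\mathbf{e}_g' \mid \mathbf{X}_g\right]$ and the argument $(x)$ is suppressed. The covariance matrix (19.24) can be estimated by replacing the unobserved errors $\mathbf{e}_g$ with estimates. Based on analogy with regression estimation we suggest the delete-cluster prediction errors $\widetilde{\mathbf{e}}_g$, as they are not subject to over-fitting. The covariance matrix estimator using this choice is

$$\widehat V(x) = \left(\sum_{g=1}^G \mathbf{Z}_g'\mathbf{K}_g\mathbf{Z}_g\right)^{-1}\left(\sum_{g=1}^G \mathbf{Z}_g'\mathbf{K}_g\widetilde{\mathbf{e}}_g\widetilde{\mathbf{e}}_g'\mathbf{K}_g\mathbf{Z}_g\right)\left(\sum_{g=1}^G \mathbf{Z}_g'\mathbf{K}_g\mathbf{Z}_g\right)^{-1}. \tag{19.25}$$

The standard error for $\widehat m(x)$ is the square root of the first diagonal element of $\widehat V(x)$.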

There is no current theory on how to select the bandwidth for nonparametric regression using clustered observations. The Fan-Gijbels ROT bandwidth is designed for independent observations, so it is likely to be a crude choice in the case of clustered observations. Standard cross-validation has similar limitations. A practical alternative is to select the bandwidth to minimize a delete-cluster cross-validation criterion. While there is no formal theory to justify this choice, it seems like a reasonable option. The delete-cluster CV criterion is

$$\mathrm{CV}_c(h) = \frac{1}{n}\sum_{g=1}^G \sum_{i=1}^{n_g} \widetilde e_{ig}^2 \, w(X_{ig})$$

where $\widetilde e_{ig}$ are the delete-cluster prediction errors (19.23). The delete-cluster CV bandwidth is the value which minimizes this function:

$$\widehat h_{\mathrm{cv}} = \operatorname{argmin}_{h} \ \mathrm{CV}_c(h).$$

As for the case of conventional cross-validation, it may be valuable to plot $\mathrm{CV}_c(h)$ against $h$ to verify that the minimum has been obtained and to assess sensitivity.
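A minimal grid-search sketch of the delete-cluster criterion, assuming a Gaussian kernel, unit weights $w(X_{ig}) = 1$, a hypothetical clustered design, and an arbitrary grid of eight bandwidths. Each grid point requires a full set of delete-cluster fits, so the grid is kept coarse.

```python
import numpy as np


def gauss_kernel(u):
    return np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)


def ll_fit(x0, X, Y, h):
    """Local linear estimate of m(x0)."""
    k = gauss_kernel((X - x0) / h)
    Z = np.column_stack([np.ones_like(X), X - x0])
    return np.linalg.solve((Z.T * k) @ Z, (Z.T * k) @ Y)[0]


def delete_cluster_errors(X, Y, cluster, h):
    """Prediction errors with each cluster's own data removed from its fit."""
    e = np.empty(len(Y))
    for g in np.unique(cluster):
        in_g = cluster == g
        for i in np.flatnonzero(in_g):
            e[i] = Y[i] - ll_fit(X[i], X[~in_g], Y[~in_g], h)
    return e


def cv_cluster(X, Y, cluster, h, w=None):
    """Delete-cluster CV criterion: mean of w(X_ig) * e_ig^2."""
    e = delete_cluster_errors(X, Y, cluster, h)
    weights = np.ones_like(X) if w is None else w
    return np.mean(weights * e ** 2)


# Hypothetical design: 30 clusters of 5 with a common cluster effect.
rng = np.random.default_rng(5)
G, n_g = 30, 5
cluster = np.repeat(np.arange(G), n_g)
X = rng.uniform(0.0, 10.0, G * n_g)
Y = np.sin(X) + rng.normal(0.0, 0.4, G)[cluster] + rng.normal(0.0, 0.3, G * n_g)

grid = np.linspace(0.4, 3.0, 8)
cv_c = np.array([cv_cluster(X, Y, cluster, h) for h in grid])
h_cv_c = grid[np.argmin(cv_c)]
cv_c_normalized = cv_c - cv_c.min()  # zero at the minimizer, convenient for plotting
```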

Application to Testscores

We illustrate kernel regression with clustered observations using the Duflo, Dupas, and Kremer (2011) investigation of the effect of student tracking on testscores. Recall that the core question was the effect of the dummy variable tracking on the continuous variable testscore. A set of controls was included, among them a continuous variable percentile which recorded the student’s initial test score (as a percentile). We investigate the authors’ specification of this control using local linear regression.

We took the subsample of 1487 girls who experienced tracking and estimated the regression of testscores on percentile. For this application we used unstandardized test scores, which range from 0 to about 40. We used local linear regression with a Gaussian kernel.

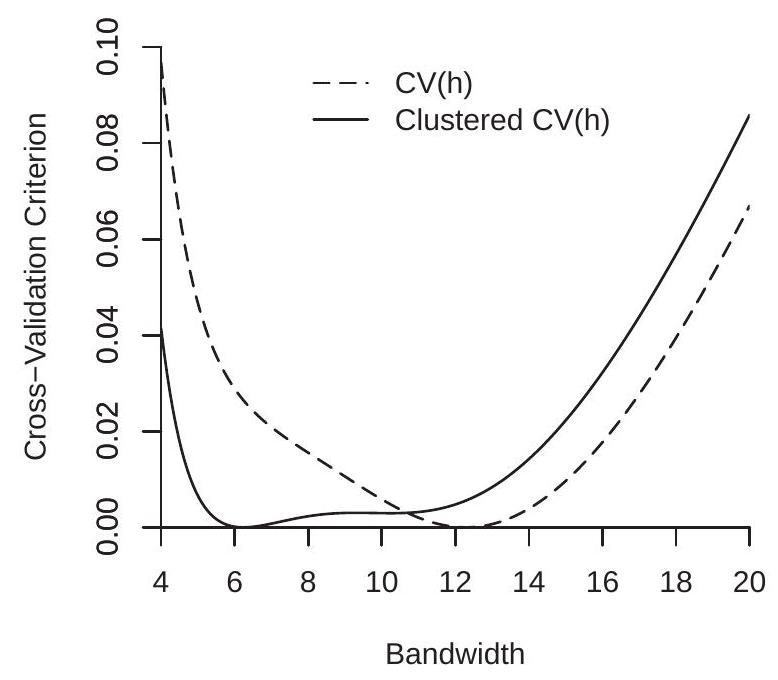

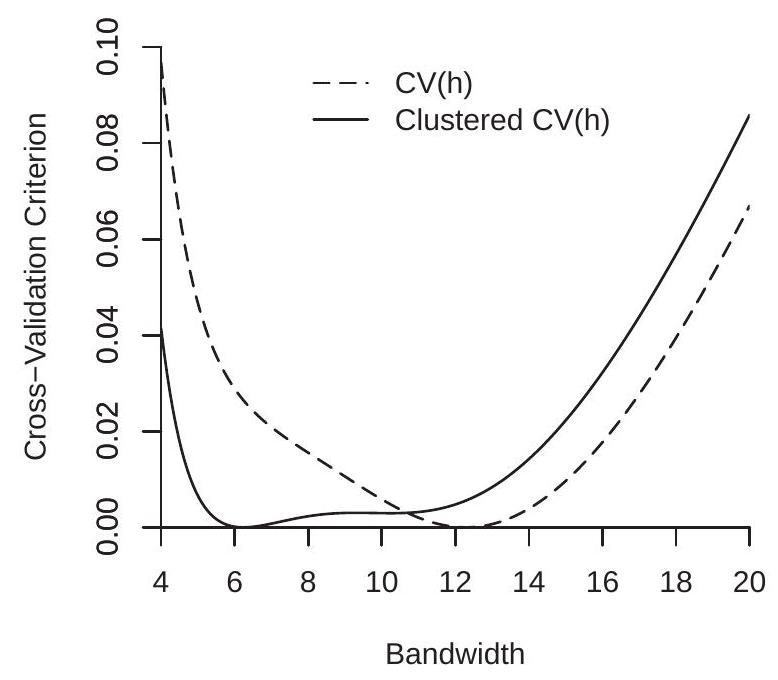

First consider bandwidth selection. We calculated the Fan-Gijbels ROT and conventional cross-validation bandwidths, and then the clustered cross-validation criterion and its minimizer. To understand the differences we plot the standard and clustered cross-validation functions in Figure 19.7(a). In order to plot them on the same graph we normalize each by subtracting its minimized value (so each is minimized at zero). Figure 19.7(a) shows that while the conventional CV criterion is sharply minimized, the clustered CV criterion is essentially flat for bandwidths between 5 and 11. This means that the clustered CV criterion has difficulty discriminating between these bandwidth choices.

Using the bandwidth selected by clustered cross-validation, we calculate the local linear estimator of the regression function. The estimate is plotted in Figure 19.7(b). We calculate the delete-cluster prediction errors and use these to calculate the standard errors for the local linear estimator using formula (19.25). (These standard errors are roughly twice as large as those calculated using the non-clustered formula.) We use the standard errors to calculate asymptotic pointwise confidence bands as in (19.20). These are plotted in Figure 19.7(b) along with the point estimate. Also plotted for comparison is an estimated linear regression line. The local linear estimator is similar to the global linear regression for lower initial percentiles, but the two lines diverge for higher initial percentiles. The confidence bands suggest that these differences are statistically meaningful. Students with initial testscores at the top of the initial distribution have higher final testscores on average than predicted by a linear specification.

In Section 4.21, following Duflo, Dupas and Kremer (2011), the dependent variable was standardized testscores (normalized to have mean zero and variance one).

Figure 19.7: Testscore as a Function of Initial Percentile. (a) Cross-Validation Criterion; (b) Local Linear Regression

Multiple Regressors

Our analysis has focused on the case of real-valued $X$ for simplicity, but the methods of kernel regression extend to the multiple regressor case at the cost of a reduced rate of convergence. In this section we consider the case of estimation of the conditional expectation function $m(x) = \mathbb{E}\left[Y \mid X = x\right]$ where

$$X = \begin{pmatrix} X_1 \\ \vdots \\ X_d \end{pmatrix} \in \mathbb{R}^d.$$

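For any evaluation point $x$ and observation $i$ define the kernel weights

$$K_i(x) = K\left(\frac{X_{1i} - x_1}{h_1}\right) K\left(\frac{X_{2i} - x_2}{h_2}\right) \cdots K\left(\frac{X_{di} - x_d}{h_d}\right),$$

a $d$-fold product kernel. The kernel weights $K_i(x)$ assess if the regressor vector $X_i$ is close to the evaluation point $x$ in the Euclidean space $\mathbb{R}^d$.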

These weights depend on a set of bandwidths, $h_j$, one for each regressor. Given these weights, the Nadaraya-Watson estimator takes the form

$$\widehat m(x) = \frac{\sum_{i=1}^n K_i(x) Y_i}{\sum_{i=1}^n K_i(x)}.$$
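The product-kernel weights vectorize naturally: scale each coordinate by its own bandwidth, apply the univariate kernel elementwise, and multiply across columns. The sketch assumes a Gaussian kernel and a simulated two-regressor design with $m(x) = x_1 + x_2^2$; the bandwidths $h_1 = h_2 = 0.1$ are arbitrary illustrative values.

```python
import numpy as np


def gauss_kernel(u):
    return np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)


def product_kernel_weights(x, X, h):
    """K_i(x) = prod_j K((X_ji - x_j) / h_j) for an (n, d) array X."""
    U = (X - x) / h  # broadcasts x and h, each of length d
    return np.prod(gauss_kernel(U), axis=1)


def nw_multi(x, X, Y, h):
    """Multivariate Nadaraya-Watson estimate of m(x)."""
    w = product_kernel_weights(x, X, h)
    return (w @ Y) / w.sum()


rng = np.random.default_rng(6)
n = 5000
X = rng.uniform(0.0, 1.0, size=(n, 2))
Y = X[:, 0] + X[:, 1] ** 2 + rng.normal(0.0, 0.1, n)  # m(x) = x1 + x2^2

x = np.array([0.5, 0.5])
h = np.array([0.1, 0.1])
m_hat = nw_multi(x, X, Y, h)  # target value m(x) = 0.75
```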

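For the local-linear estimator, define

$$Z_i(x) = \begin{pmatrix} 1 \\ X_i - x \end{pmatrix}$$

and then the local-linear estimator can be written as $\widehat m(x) = \widehat\alpha(x)$ where

$$\begin{pmatrix} \widehat\alpha(x) \\ \widehat\beta(x) \end{pmatrix} = \left(\sum_{i=1}^n K_i(x) Z_i(x) Z_i(x)'\right)^{-1} \sum_{i=1}^n K_i(x) Z_i(x) Y_i = \left(\mathbf{Z}'\mathbf{K}\mathbf{Z}\right)^{-1}\mathbf{Z}'\mathbf{K}\mathbf{Y}$$

where $\mathbf{K} = \operatorname{diag}\left\{K_1(x), \ldots, K_n(x)\right\}$.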

In multiple regressor kernel regression, cross-validation remains a recommended method for bandwidth selection. The leave-one-out residuals $\widetilde e_i$ and cross-validation criterion $\mathrm{CV}(h_1, \ldots, h_d)$ are defined identically as in the single regressor case. The only difference is that now the CV criterion is a function over the $d$ bandwidths $h_1, \ldots, h_d$. This means that numerical minimization needs to be done more efficiently than by a simple grid search.

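The asymptotic distribution of the estimators in the multiple regressor case is an extension of the single regressor case. Let $f(x)$ denote the marginal density of $X$, $\sigma^2(x) = \mathbb{E}\left[e^2 \mid X = x\right]$ denote the conditional variance of $e = Y - m(X)$, and set $|h| = h_1 h_2 \cdots h_d$.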

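Proposition 19.1 Let $\widehat m(x)$ denote either the Nadaraya-Watson or Local Linear estimator of $m(x)$. As $n \to \infty$ and $h_j \to 0$ such that $n|h| \to \infty$,

$$\sqrt{n|h|}\left(\widehat m(x) - m(x) - \sum_{j=1}^d h_j^2 B_j(x)\right) \xrightarrow{d} \mathrm{N}\left(0, \frac{R_K^d \sigma^2(x)}{f(x)}\right).$$

For the Nadaraya-Watson estimator

$$B_j(x) = \frac{1}{2}\frac{\partial^2}{\partial x_j^2} m(x) + f(x)^{-1}\frac{\partial}{\partial x_j} f(x) \frac{\partial}{\partial x_j} m(x)$$

and for the Local Linear estimator

$$B_j(x) = \frac{1}{2}\frac{\partial^2}{\partial x_j^2} m(x).$$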

We do not provide regularity conditions or a formal proof but instead refer interested readers to Fan and Gijbels (1996).

Curse of Dimensionality

The term “curse of dimensionality” is used to describe the phenomenon that the convergence rate of nonparametric estimators slows as the dimension increases.

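When $X$ is vector-valued we define the AIMSE as the integral of the squared bias plus variance, integrating with respect to $f(x)w(x)$ where $w(x)$ is an integrable weight function. For notational simplicity consider the case that there is a single common bandwidth $h$. In this case the AIMSE of $\widehat m(x)$ equals

$$\mathrm{AIMSE} = h^4 \int \left(\sum_{j=1}^d B_j(x)\right)^2 f(x) w(x)\, dx + \frac{R_K^d}{nh^d}\int \sigma^2(x) w(x)\, dx.$$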

We see that the squared bias is of order $h^4$, the same as in the single regressor case. The variance, however, is of larger order $(nh^d)^{-1}$.

If we pick the bandwidth to minimize the AIMSE we find that it equals $h = c n^{-1/(4+d)}$ for some constant $c$. This generalizes the formula $h = c n^{-1/5}$ for the one-dimensional case. The rate $n^{-1/(4+d)}$ is slower than the $n^{-1/5}$ rate. This effectively means that with multiple regressors a larger bandwidth is required. When the bandwidth is set as $h = c n^{-1/(4+d)}$ then the AIMSE is of order $O\left(n^{-4/(4+d)}\right)$. This is a slower rate of convergence than the $O\left(n^{-4/5}\right)$ rate in the one-dimensional case.

Theorem 19.11 For vector-valued $X$ the bandwidth which minimizes the AIMSE is of order $h \sim n^{-1/(4+d)}$. With $h \sim n^{-1/(4+d)}$, $\mathrm{AIMSE} = O\left(n^{-4/(4+d)}\right)$.
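The practical force of Theorem 19.11 is easiest to see by asking how many observations a $d$-dimensional problem needs to match the AIMSE order $n_1^{-4/5}$ attained with a single regressor. Solving $n_d^{-4/(4+d)} = n_1^{-4/5}$ gives $n_d = n_1^{(4+d)/5}$. The sketch below ignores all constants, so it only compares orders of magnitude; the function names are illustrative.

```python
def optimal_bandwidth_exponent(d):
    """Exponent a in h ~ n^a for the AIMSE-optimal bandwidth."""
    return -1.0 / (4 + d)


def aimse_exponent(d):
    """Exponent a in AIMSE ~ n^a at the optimal bandwidth."""
    return -4.0 / (4 + d)


def equivalent_sample_size(n1, d):
    """n_d such that n_d^(-4/(4+d)) equals n1^(-4/5), the d = 1 AIMSE order."""
    return n1 ** ((4 + d) / 5)


for d in range(1, 5):
    print(d, round(aimse_exponent(d), 3), round(equivalent_sample_size(1000, d)))
```

Starting from 1,000 observations with one regressor, the equivalent sample sizes for $d = 2, 3, 4$ are roughly 3,981, 15,849 and 63,096.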

See Exercise 19.6.

We see that the optimal AIMSE rate depends on the dimension $d$. As $d$ increases this rate slows. Thus the precision of kernel regression estimators worsens with multiple regressors. The reason is that the estimator is a local average of $Y$ for observations such that $X$ is close to $x$, and when there are multiple regressors the number of such observations is inherently smaller.

This phenomenon, that the rate of convergence of nonparametric estimation decreases as the dimension increases, is called the curse of dimensionality. It is common across most nonparametric estimation problems and is not specific to kernel regression.

The curse of dimensionality has led to the practical rule that most applications of nonparametric regression have a single regressor. Some have two regressors; on occasion, three. More is uncommon.

Partially Linear Regression

To handle discrete regressors and/or reduce the dimensionality, we can separate the regression function into a nonparametric and a parametric part. Let the regressors be partitioned as $(X, Z)$ where $X$ and $Z$ are $d$- and $k$-dimensional, respectively. A partially linear regression model is

$$Y = m(X) + Z'\beta + e, \qquad \mathbb{E}\left[e \mid X, Z\right] = 0. \tag{19.26}$$

This model combines two elements. One, it specifies that the CEF is separable between $X$ and $Z$ (there are no nonparametric interactions). Two, it specifies that the CEF is linear in the regressors $Z$. These are assumptions which may be true or may be false. In practice it is best to think of the assumptions as approximations.

When some regressors are discrete (as is common in econometric applications) they belong in $Z$. The regressors $X$ must be continuously distributed. In typical applications $X$ is either scalar or two-dimensional. This may not be a restriction in practice, as many econometric applications only have a small number of continuously distributed regressors.

The seminal contribution for estimation of (19.26) is Robinson (1988), who proposed a nonparametric version of residual regression. His key insight was to see that the nonparametric component can be eliminated by transformation. Take the expectation of equation (19.26) conditional on $X$. This is

$$\mathbb{E}\left[Y \mid X\right] = m(X) + \mathbb{E}\left[Z \mid X\right]'\beta.$$

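Subtract this from (19.26), obtaining

$$Y - \mathbb{E}\left[Y \mid X\right] = \left(Z - \mathbb{E}\left[Z \mid X\right]\right)'\beta + e.$$

The model is now a linear regression of the nonparametric regression error $e_Y = Y - \mathbb{E}\left[Y \mid X\right]$ on the vector of nonparametric regression errors $e_Z = Z - \mathbb{E}\left[Z \mid X\right]$.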

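Robinson’s estimator replaces the infeasible regression errors by nonparametric counterparts. The result is a three-step estimator.

1. Using nonparametric regression (NW or LL), regress $Y_i$ on $X_i$, $Z_{1i}$ on $X_i$, $Z_{2i}$ on $X_i$, ..., and $Z_{ki}$ on $X_i$, obtaining the fitted values $\widehat g_{0i}$, $\widehat g_{1i}$, ..., and $\widehat g_{ki}$.
2. Regress $Y_i - \widehat g_{0i}$ on $Z_{1i} - \widehat g_{1i}, \ldots, Z_{ki} - \widehat g_{ki}$ to obtain the coefficient estimate $\widehat\beta$ and standard errors.
3. Use nonparametric regression to regress $Y_i - Z_i'\widehat\beta$ on $X_i$ to obtain the nonparametric estimator $\widehat m(x)$ and confidence intervals.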

The resulting estimators and standard errors have conventional asymptotic distributions under specific assumptions on the bandwidths. A full proof is provided by Robinson (1988). Andrews (2004) provides a more general treatment with insight into the general structure of semiparametric estimators.

The most difficult challenge is to show that the asymptotic distribution of $\widehat\beta$ is unaffected by the first-step estimation. Briefly, these are the steps of the argument. First, the first-step error $e_Z = Z - \mathbb{E}\left[Z \mid X\right]$ has zero covariance with the regression error $e$. Second, the asymptotic distribution will be unaffected by the first-step estimation if replacing (in this covariance) the expectation $\mathbb{E}\left[Z \mid X\right]$ with its first-step nonparametric estimator induces an error of order $o_p(n^{-1/2})$. Third, because the covariance is a product, this holds when the first-step estimator has a convergence rate of $o_p(n^{-1/4})$. Fourth, by Theorem 19.9 this holds if $h = o(n^{-1/8})$ and $nh^2 \to \infty$, since then both the bias $O(h^2)$ and the standard deviation $O\left((nh)^{-1/2}\right)$ are $o(n^{-1/4})$.

The reason why the third-step estimator has a conventional asymptotic distribution is a bit simpler to explain. The estimator $\widehat\beta$ converges at the conventional rate $n^{-1/2}$. The nonparametric estimator $\widehat m(x)$ converges at a rate slower than $n^{-1/2}$. Thus the sampling error for $\widehat\beta$ is of lower order and does not affect the first-order asymptotic distribution of $\widehat m(x)$.

Once again, the theory is advanced, so the above two paragraphs should not be taken as a complete explanation. The good news is that the estimation method is straightforward.

Computation

Stata has two commands which implement kernel regression: lpoly and npregress. lpoly implements local polynomial estimation for any $p$, including Nadaraya-Watson (the default) and local linear estimation, and selects the bandwidth using the Fan-Gijbels ROT method. It uses the Epanechnikov kernel by default but the Gaussian can be selected as an option. The lpoly command automatically displays the estimated CEF along with 95% confidence bands with standard errors computed using (19.18).

The Stata command npregress estimates local linear (the default) or Nadaraya-Watson regression. By default it selects the bandwidth by cross-validation. It uses the Epanechnikov kernel by default but the Gaussian can be selected as an option. Confidence intervals may be calculated using the percentile bootstrap. A display of the estimated CEF and 95% confidence bands at specific points (computed using the percentile bootstrap) may be obtained with the postestimation command margins.

There are several R packages which implement kernel regression. One flexible choice is npreg available in the np package. Its default method is Nadaraya-Watson estimation using a Gaussian kernel with bandwidth selected by cross-validation. There are options which allow local linear and local polynomial estimation, alternative kernels, and alternative bandwidth selection methods.

Technical Proofs*

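For all technical proofs we make the simplifying assumption that the kernel function $K(u)$ has bounded support, thus $K(u) = 0$ for $|u| > a$ for some $a < \infty$. The results extend to the Gaussian kernel but with additional technical arguments.

Proof of Theorem 19.1.1. Equation (19.3) shows that

$$\mathbb{E}\left[\widehat m_{\mathrm{nw}}(x) \mid \mathbf{X}\right] = m(x) + \frac{\widehat b(x)}{\widehat f(x)} \tag{19.27}$$

where $\widehat f(x)$ is the kernel density estimator (19.19) of $f(x)$ with $b = h$ and

$$\widehat b(x) = \frac{1}{nh}\sum_{i=1}^n K\left(\frac{X_i - x}{h}\right)\left(m(X_i) - m(x)\right). \tag{19.28}$$

Chapter 17 of Probability and Statistics for Economists established that $\widehat f(x) \xrightarrow{p} f(x)$. The proof is completed by showing that $\widehat b(x) = h^2 f(x) B_{\mathrm{nw}}(x) + o(h^2) + O_p\left(\sqrt{h/n}\right)$.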

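Since $\widehat b(x)$ is a sample average it has the expectation

$$\mathbb{E}\left[\widehat b(x)\right] = \frac{1}{h}\mathbb{E}\left[K\left(\frac{X - x}{h}\right)\left(m(X) - m(x)\right)\right] = \int \frac{1}{h} K\left(\frac{v - x}{h}\right)\left(m(v) - m(x)\right) f(v)\, dv = \int K(u)\left(m(x + hu) - m(x)\right) f(x + hu)\, du. \tag{19.29}$$

The second equality writes the expectation as an integral with respect to the density of $X$. The third uses the change-of-variables $v = x + hu$. We next use the two Taylor series expansions

$$m(x + hu) - m(x) = m'(x) hu + \frac{1}{2} m''(x) h^2 u^2 + o(h^2) \tag{19.30}$$

$$f(x + hu) = f(x) + f'(x) hu + o(h).$$

Inserted into (19.29) we find that (19.29) equals

$$\int K(u)\left(m'(x) hu + \frac{1}{2} m''(x) h^2 u^2\right)\left(f(x) + f'(x) hu\right) du + o(h^2)$$

$$= h\left(\int u K(u)\, du\right) m'(x) f(x) + h^2\left(\int u^2 K(u)\, du\right)\left(\frac{1}{2} m''(x) f(x) + m'(x) f'(x)\right) + h^3\left(\int u^3 K(u)\, du\right)\frac{1}{2} m''(x) f'(x) + o(h^2)$$

$$= h^2\left(\frac{1}{2} m''(x) f(x) + m'(x) f'(x)\right) + o(h^2) = h^2 f(x) B_{\mathrm{nw}}(x) + o(h^2).$$

The second equality uses the facts that the odd moments of the kernel are zero and the kernel variance is one. We have shown that $\mathbb{E}\left[\widehat b(x)\right] = h^2 f(x) B_{\mathrm{nw}}(x) + o(h^2)$.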

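Now consider the variance of $\widehat b(x)$. Since $\widehat b(x)$ is a sample average of independent components and the variance is smaller than the second moment,

$$\operatorname{var}\left[\widehat b(x)\right] = \frac{1}{n}\operatorname{var}\left[\frac{1}{h}K\left(\frac{X - x}{h}\right)\left(m(X) - m(x)\right)\right] \le \frac{1}{nh^2}\mathbb{E}\left[K\left(\frac{X - x}{h}\right)^2\left(m(X) - m(x)\right)^2\right]$$

$$= \frac{1}{nh}\int K(u)^2\left(m(x + hu) - m(x)\right)^2 f(x + hu)\, du \tag{19.33}$$

$$= \frac{1}{nh}\int K(u)^2\left(u^2 h^2 m'(x)^2 + o(h^2)\right)\left(f(x) + o(1)\right) du$$

$$\le \frac{h}{n}\bar K m'(x)^2 f(x) + o\left(\frac{h}{n}\right).$$

The second equality writes the expectation as an integral. The third uses (19.30). The final inequality uses $K(u) \le \bar K$ from Definition 19.1.1 and the fact that the kernel variance is one. This shows that

$$\operatorname{var}\left[\widehat b(x)\right] \le O\left(\frac{h}{n}\right).$$

Together we conclude that

$$\widehat b(x) = h^2 f(x) B_{\mathrm{nw}}(x) + o(h^2) + O_p\left(\sqrt{\frac{h}{n}}\right)$$

and

$$\frac{\widehat b(x)}{\widehat f(x)} = h^2 B_{\mathrm{nw}}(x) + o_p(h^2) + O_p\left(\sqrt{\frac{h}{n}}\right). \tag{19.34}$$

Together with (19.27) this implies Theorem 19.1.1.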

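Proof of Theorem 19.2.1. Equation (19.4) states that

$$\operatorname{var}\left[\widehat m_{\mathrm{nw}}(x) \mid \mathbf{X}\right] = \frac{1}{nh}\frac{\widehat v(x)}{\widehat f(x)^2}$$

where

$$\widehat v(x) = \frac{1}{nh}\sum_{i=1}^n K\left(\frac{X_i - x}{h}\right)^2 \sigma^2(X_i)$$

and $\widehat f(x)$ is the estimator (19.19) of $f(x)$ with $b = h$. Chapter 17 of Probability and Statistics for Economists established $\widehat f(x) \xrightarrow{p} f(x)$. The proof is completed by showing $\widehat v(x) \xrightarrow{p} R_K \sigma^2(x) f(x)$.

First, writing the expectation as an integral with respect to $f(x)$, making the change-of-variables $u = (v - x)/h$, and appealing to the continuity of $\sigma^2(x)$ and $f(x)$ at $x$,

$$\mathbb{E}\left[\widehat v(x)\right] = \int \frac{1}{h} K\left(\frac{v - x}{h}\right)^2 \sigma^2(v) f(v)\, dv = \int K(u)^2 \sigma^2(x + hu) f(x + hu)\, du = R_K \sigma^2(x) f(x) + o(1).$$

Second, because $\widehat v(x)$ is an average of independent random variables and the variance is smaller than the second moment,

$$nh \operatorname{var}\left[\widehat v(x)\right] \le \frac{1}{h}\int K\left(\frac{v - x}{h}\right)^4 \sigma^4(v) f(v)\, dv = \int K(u)^4 \sigma^4(x + hu) f(x + hu)\, du \le \bar K^2 R_K \sigma^4(x) f(x) + o(1)$$

so $\operatorname{var}\left[\widehat v(x)\right] \to 0$.

We deduce from Markov’s inequality that $\widehat v(x) \xrightarrow{p} R_K \sigma^2(x) f(x)$, completing the proof.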

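Proof of Theorem 19.7. Observe that $m(X_i) - \widetilde m_{-i}(X_i)$ is a function only of $(X_1, \ldots, X_n)$ and $(e_1, \ldots, e_n)$ excluding $e_i$, and is thus uncorrelated with $e_i$. Since $\widetilde e_i(h) = m(X_i) - \widetilde m_{-i}(X_i) + e_i$, then

$$\mathbb{E}\left[\mathrm{CV}(h)\right] = \mathbb{E}\left[\widetilde e_i(h)^2 w(X_i)\right] = \mathbb{E}\left[e_i^2 w(X_i)\right] + \mathbb{E}\left[\left(\widetilde m_{-i}(X_i) - m(X_i)\right)^2 w(X_i)\right] + 2\mathbb{E}\left[\left(m(X_i) - \widetilde m_{-i}(X_i)\right) w(X_i) e_i\right]$$

$$= \mathbb{E}\left[e^2 w(X)\right] + \mathbb{E}\left[\left(\widetilde m_{-i}(X_i) - m(X_i)\right)^2 w(X_i)\right]. \tag{19.35}$$

The second term is an expectation over the random variables $X_i$ and $\widetilde m_{-i}(x)$, which are independent as the second is not a function of the $i^{th}$ observation. Thus taking the conditional expectation given the sample excluding the $i^{th}$ observation, this is the expectation over $X_i$ only, which is the integral with respect to its density

$$\mathbb{E}_{-i}\left[\left(\widetilde m_{-i}(X_i) - m(X_i)\right)^2 w(X_i)\right] = \int \left(\widetilde m_{-i}(x) - m(x)\right)^2 f(x) w(x)\, dx.$$

Taking the unconditional expectation yields

$$\mathbb{E}\left[\left(\widetilde m_{-i}(X_i) - m(X_i)\right)^2 w(X_i)\right] = \mathbb{E}\left[\int \left(\widetilde m_{-i}(x) - m(x)\right)^2 f(x) w(x)\, dx\right] = \mathrm{IMSE}_{n-1}(h)$$

where this is the IMSE of a sample of size $n - 1$, as the estimator $\widetilde m_{-i}$ uses $n - 1$ observations. Combined with (19.35) we obtain (19.12), as desired.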

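Proof of Theorem 19.8. We can write the Nadaraya-Watson estimator as

$$\widehat m_{\mathrm{nw}}(x) = m(x) + \frac{\widehat b(x)}{\widehat f(x)} + \frac{\widehat g(x)}{\widehat f(x)} \tag{19.36}$$

where $\widehat f(x)$ is the estimator (19.19), $\widehat b(x)$ is defined in (19.28), and

$$\widehat g(x) = \frac{1}{nh}\sum_{i=1}^n K\left(\frac{X_i - x}{h}\right) e_i. \tag{19.37}$$

Since $\widehat f(x) \xrightarrow{p} f(x) > 0$ by Chapter 17 of Probability and Statistics for Economists, the proof is completed by showing $\widehat b(x) \xrightarrow{p} 0$ and $\widehat g(x) \xrightarrow{p} 0$. Take $\widehat b(x)$. From (19.29) and the continuity of $m(x)$ and $f(x)$,

$$\mathbb{E}\left[\widehat b(x)\right] = \int K(u)\left(m(x + hu) - m(x)\right) f(x + hu)\, du = o(1)$$

as $h \to 0$. From (19.33),

$$\operatorname{var}\left[\widehat b(x)\right] \le \frac{1}{nh}\int K(u)^2\left(m(x + hu) - m(x)\right)^2 f(x + hu)\, du = o(1)$$

as $h \to 0$. Thus $\mathbb{E}\left[\widehat b(x)^2\right] \to 0$. By Markov’s inequality we conclude $\widehat b(x) \xrightarrow{p} 0$.

Take $\widehat g(x)$. Since $\widehat g(x)$ is linear in $e_i$ and $\mathbb{E}\left[e \mid X\right] = 0$, we find $\mathbb{E}\left[\widehat g(x)\right] = 0$. Since $\widehat g(x)$ is an average of independent random variables, the variance is smaller than the second moment, and by the definition $\sigma^2(X) = \mathbb{E}\left[e^2 \mid X\right]$,

$$nh \operatorname{var}\left[\widehat g(x)\right] \le \frac{1}{h}\mathbb{E}\left[K\left(\frac{X - x}{h}\right)^2 e^2\right] = \frac{1}{h}\mathbb{E}\left[K\left(\frac{X - x}{h}\right)^2 \sigma^2(X)\right] = \int K(u)^2 \sigma^2(x + hu) f(x + hu)\, du = R_K \sigma^2(x) f(x) + o(1) \tag{19.38}$$

because $\sigma^2(x)$ and $f(x)$ are continuous in $x$. Thus $\operatorname{var}\left[\widehat g(x)\right] \to 0$. By Markov’s inequality we conclude $\widehat g(x) \xrightarrow{p} 0$, completing the proof.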

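Proof of Theorem 19.9. From (19.36), the consistency of $\widehat f(x)$ (Chapter 17 of Probability and Statistics for Economists), and (19.34) we have

$$\sqrt{nh}\left(\widehat m_{\mathrm{nw}}(x) - m(x) - h^2 B_{\mathrm{nw}}(x)\right) = \sqrt{nh}\,\frac{\widehat g(x)}{\widehat f(x)} + \sqrt{nh}\left(\frac{\widehat b(x)}{\widehat f(x)} - h^2 B_{\mathrm{nw}}(x)\right)$$

$$= \sqrt{nh}\,\frac{\widehat g(x)}{f(x)}\left(1 + o_p(1)\right) + \sqrt{nh}\left(o_p(h^2) + O_p\left(\sqrt{h/n}\right)\right) = \sqrt{nh}\,\frac{\widehat g(x)}{f(x)} + o_p(1)$$

where the final equality holds because $\sqrt{nh}\,\widehat g(x) = O_p(1)$ by (19.38) and the assumption $nh^5 = O(1)$. The proof is completed by showing $\sqrt{nh}\,\widehat g(x) \xrightarrow{d} \mathrm{N}\left(0, R_K \sigma^2(x) f(x)\right)$.

Define $Y_{ni} = h^{-1/2} K\left(\frac{X_i - x}{h}\right) e_i$, which are independent and mean zero. We can write $\sqrt{nh}\,\widehat g(x) = \sqrt{n}\,\bar Y_n$ as a standardized sample average. We verify the conditions for the Lindeberg CLT (Theorem 6.4). In the notation of Theorem 6.4, set $\bar\sigma_n^2 = \operatorname{var}\left[Y_{ni}\right] \to R_K f(x) \sigma^2(x)$ as $h \to 0$. The CLT holds if we can verify the Lindeberg condition.

This is an advanced calculation and will not interest most readers. It is provided for those interested in a complete derivation. Fix $\epsilon > 0$ and $\delta > 0$. Since $K(u)$ is bounded we can write $K(u) \le \bar K$. Let $n$ be sufficiently large so that

$$\left(\frac{\epsilon nh}{\bar K^2}\right)^{(r-2)/2} \ge \frac{\bar\sigma}{\delta}.$$

The conditional moment bound (19.14) implies that for $x \in \mathcal{N}$,

$$\mathbb{E}\left[e^2 \mathbf{1}\left\{e^2 > \frac{\epsilon nh}{\bar K^2}\right\} \mid X = x\right] \le \mathbb{E}\left[\frac{|e|^r}{\left(\epsilon nh/\bar K^2\right)^{(r-2)/2}} \mid X = x\right] \le \delta.$$

Since $\left\{Y_{ni}^2 > \epsilon n\right\} = \left\{K\left(\frac{X_i - x}{h}\right)^2 e_i^2 > \epsilon nh\right\} \subseteq \left\{e_i^2 > \epsilon nh/\bar K^2\right\}$, we find

$$\mathbb{E}\left[Y_{ni}^2 \mathbf{1}\left\{Y_{ni}^2 > \epsilon n\right\}\right] \le \frac{1}{h}\mathbb{E}\left[K\left(\frac{X - x}{h}\right)^2 e^2 \mathbf{1}\left\{e^2 > \frac{\epsilon nh}{\bar K^2}\right\}\right] = \int K(u)^2\, \mathbb{E}\left[e^2 \mathbf{1}\left\{e^2 > \frac{\epsilon nh}{\bar K^2}\right\} \mid X = x + hu\right] f(x + hu)\, du$$

$$\le \delta \int K(u)^2 f(x + hu)\, du = \delta R_K f(x) + o(1).$$

Because $\delta$ is arbitrary, $\mathbb{E}\left[Y_{ni}^2 \mathbf{1}\left\{Y_{ni}^2 > \epsilon n\right\}\right] \to 0$. This is the Lindeberg condition (6.2). The Lindeberg CLT (Theorem 6.4) shows that

$$\sqrt{nh}\,\widehat g(x) = \sqrt{n}\,\bar Y_n \xrightarrow{d} \mathrm{N}\left(0, R_K f(x) \sigma^2(x)\right).$$

This completes the proof.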

Exercises

Exercise 19.1 For kernel regression suppose you rescale $Y$, for example replace $Y$ with $cY$ for a constant $c$. How should the bandwidth $h$ change? To answer this, first address how the functions $m(x)$ and $\sigma^2(x)$ change under rescaling, and then calculate how the bias and variance components of the AIMSE change. Deduce how the optimal $h$ changes due to rescaling $Y$. Does your answer make intuitive sense?

Exercise 19.2 Show that (19.6) minimizes the AIMSE (19.5).

Exercise 19.3 Describe in words how the bias of the local linear estimator changes over regions of convexity and concavity in . Does this make intuitive sense?

Exercise 19.4 Suppose the true regression function is linear, $m(x) = \alpha + \beta x$, and we estimate the function using the Nadaraya-Watson estimator. Calculate the bias function $B(x)$. Suppose $\beta > 0$. For which regions is $B(x) > 0$ and for which regions is $B(x) < 0$? Now suppose that $\beta < 0$ and re-answer the question. Can you intuitively explain why the NW estimator is positively and negatively biased for these regions?

Exercise 19.5 Suppose $m(x) = \alpha$ is a constant function. What is the AIMSE-optimal bandwidth (19.6) for NW estimation? Explain.

Exercise 19.6 Prove Theorem 19.11: Show that when the AIMSE-optimal bandwidth takes the form and AIMSE is .

Exercise 19.7 Take the DDK2011 dataset and the subsample of boys who experienced tracking. As in Section use the Local Linear estimator to estimate the regression of testscores on percentile but now with the subsample of boys. Plot with confidence intervals. Comment on the similarities and differences with the estimate for the subsample of girls.

Exercise 19.8 Take the cps09mar dataset and the subsample of individuals with education = 20 (professional degree or doctorate) and experience between 0 and 40 years.

Use Nadaraya-Watson to estimate the regression of log(wage) on experience, separately for men and women. Plot with confidence intervals. Comment on how the estimated wage profiles vary with experience. In particular, do you think the evidence suggests that expected wages fall for experience levels above 20 for this education group?
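A minimal sketch of Nadaraya-Watson estimation with pointwise 95% confidence intervals follows. Assumptions: a Gaussian kernel, a user-supplied bandwidth h (no data-driven selection), and a heteroskedasticity-robust standard error sqrt(Σ w_i(x)² ẽ_i²), where w_i(x) are the NW weights and ẽ_i are leave-one-out prediction errors. The data are simulated stand-ins, not the cps09mar extract; replace `X` and `Y` with experience and log(wage) for the relevant subsample.

```python
import numpy as np

def gauss(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)

def nw_weights(x0, X, h):
    # Row j holds the NW weights w_i(x0[j]); each row sums to one
    K = gauss((X[None, :] - np.atleast_1d(x0)[:, None]) / h)
    return K / K.sum(axis=1, keepdims=True)

def nw_loo_errors(X, Y, h):
    # Leave-one-out prediction errors: drop observation i when predicting Y_i
    K = gauss((X[None, :] - X[:, None]) / h)
    np.fill_diagonal(K, 0.0)
    return Y - K @ Y / K.sum(axis=1)

def nw_ci(grid, X, Y, h, z=1.96):
    W = nw_weights(grid, X, h)
    m_hat = W @ Y
    e_loo = nw_loo_errors(X, Y, h)
    se = np.sqrt((W**2) @ (e_loo**2))   # robust standard error at each grid point
    return m_hat, m_hat - z * se, m_hat + z * se

# Simulated stand-in data (not cps09mar)
rng = np.random.default_rng(2)
X = rng.uniform(0.0, 40.0, 500)
Y = 2.5 + 0.05 * X - 0.001 * X**2 + rng.normal(0.0, 0.5, 500)
grid = np.linspace(0.0, 40.0, 41)
m_hat, lower, upper = nw_ci(grid, X, Y, h=4.0)
```

With matplotlib, `plt.plot(grid, m_hat)` and `plt.fill_between(grid, lower, upper, alpha=0.3)` draw the estimate and its band. These intervals are centered on the estimate and ignore the smoothing bias, as is common in practice.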

Repeat using the Local Linear estimator. How do the estimates and confidence intervals change?
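The local linear estimate at x is the intercept of a kernel-weighted regression of Y on (1, X − x), so it can be written as a weighted sum of the Y_i with closed-form weights. The sketch below uses those weights and the same assumed standard-error construction as a NW sketch would (squared weights times squared leave-one-out errors); the Gaussian kernel, bandwidth, and simulated data are again illustrative assumptions.

```python
import numpy as np

def ll_weights(x0, X, h):
    # Weights l_i(x0) with m_hat(x0) = sum_i l_i * Y_i for local linear regression.
    # They sum to one and satisfy sum_i l_i (X_i - x0) = 0, so a linear m is fit exactly.
    d = X - x0
    k = np.exp(-0.5 * (d / h) ** 2)
    s0, s1, s2 = k.sum(), (k * d).sum(), (k * d**2).sum()
    return k * (s2 - s1 * d) / (s0 * s2 - s1**2)

def ll_loo_errors(X, Y, h):
    # Leave-one-out prediction errors Y_i - m_hat_{-i}(X_i)
    idx = np.arange(len(X))
    e = np.empty(len(X))
    for i in idx:
        keep = idx != i
        e[i] = Y[i] - ll_weights(X[i], X[keep], h) @ Y[keep]
    return e

def ll_ci(grid, X, Y, h, z=1.96):
    W = np.array([ll_weights(x0, X, h) for x0 in grid])
    m_hat = W @ Y
    se = np.sqrt((W**2) @ (ll_loo_errors(X, Y, h) ** 2))
    return m_hat, m_hat - z * se, m_hat + z * se

# Simulated stand-in data (not cps09mar)
rng = np.random.default_rng(3)
X = rng.uniform(0.0, 40.0, 500)
Y = 2.5 + 0.05 * X - 0.001 * X**2 + rng.normal(0.0, 0.5, 500)
grid = np.linspace(0.0, 40.0, 41)
m_hat, lower, upper = ll_ci(grid, X, Y, h=4.0)
```

Because the weights reproduce linear functions exactly, the local linear estimator does not carry the design-density bias term that affects NW, and it typically behaves better near the edges of the support (here, 0 and 40 years of experience).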

Exercise 19.9 Take the Invest1993 dataset and the subsample of observations with . (In the dataset is the variable vala.)

Use Nadaraya-Watson to estimate the regression of investment (in the dataset, the variable inva) on Q (the variable vala). Plot with confidence intervals.

Repeat using the Local Linear estimator.

Is there evidence to suggest that the regression function is nonlinear?

Exercise 19.10 The RR2010 dataset is from Reinhart and Rogoff (2010). It contains observations on annual U.S. GDP growth rates, inflation rates, and the debt/GDP ratio for the long time span 1791-2009. The paper made the strong claim that GDP growth slows as debt/GDP increases, and in particular that this relationship is nonlinear, with debt negatively affecting growth for debt ratios exceeding 90%. Their full dataset includes 44 countries; our extract includes only the United States.

Use Nadaraya-Watson to estimate the regression of GDP growth on the debt ratio. Plot with 95% confidence intervals.

Repeat using the Local Linear estimator.

Do you see evidence of nonlinearity and/or a change in the relationship at a debt ratio of 90%?

Now estimate a regression of GDP growth on the inflation rate. Comment on what you find.

Exercise 19.11 We will consider a nonlinear AR(1) model for GDP growth rates

$$Y_t = m(Y_{t-1}) + e_t.$$

Create GDP growth rates . Extract the level of real U.S. GDP from FRED-QD and make the above transformation to growth rates.
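The sketch below assumes one common convention for quarterly data, annualized growth in percent, 400·log(Z_t/Z_{t−1}), where Z_t is the GDP level; if the intended transformation differs (for example, compounded annualization), only the one line in `annualized_growth` needs to change. It then lines up lagged and current growth rates, which serve as the regressor and response for Nadaraya-Watson or local linear estimation. The level values are made up for illustration.

```python
import numpy as np

def annualized_growth(level):
    # Assumed convention: annualized quarterly growth in percent, 400 * log(Z_t / Z_{t-1})
    z = np.asarray(level, dtype=float)
    return 400.0 * np.diff(np.log(z))

def ar1_pairs(y):
    # Regressor Y_{t-1} and response Y_t for the nonlinear AR(1) regression
    y = np.asarray(y, dtype=float)
    return y[:-1], y[1:]

# Made-up GDP levels for illustration (not FRED-QD data)
levels = [100.0, 101.0, 102.01, 101.0]
g = annualized_growth(levels)
x_lag, y_now = ar1_pairs(g)
print(g, x_lag, y_now)
```

Note that each differencing or lagging step drops one observation, so the regression sample is two quarters shorter than the level series.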

Use Nadaraya-Watson to estimate . Plot with 95% confidence intervals.

Repeat using the Local Linear estimator.

Do you see evidence of nonlinearity?
