Introduction

So far in this textbook we have primarily focused on estimators which have explicit algebraic expressions. However, many econometric estimators need to be calculated by numerical methods. These estimators are collectively described as nonlinear. Many fall in a broad class known as m-estimators. In this part of the textbook we describe a number of m-estimators in wide use in econometrics. They have a common structure which allows for a unified treatment of estimation and inference.

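An m-estimator is defined as a minimizer of a sample average

$$\widehat{\theta} = \underset{\theta \in \Theta}{\operatorname{argmin}}\; S_n(\theta), \qquad S_n(\theta) = \frac{1}{n}\sum_{i=1}^{n} \rho(Y_i, X_i, \theta)$$

where $\rho(Y, X, \theta)$ is some function of $(Y, X)$ and a parameter $\theta \in \Theta$. The function $S_n(\theta)$ is called the criterion function or objective function. For notational simplicity set $\rho_i(\theta) = \rho(Y_i, X_i, \theta)$.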

This includes maximum likelihood when $\rho_i(\theta)$ is the negative log-density function. “m-estimators” are a broader class; the prefix “m” stands for “maximum likelihood-type”.

The issues we focus on in this chapter are: (1) identification; (2) estimation; (3) consistency; (4) asymptotic distribution; and (5) covariance matrix estimation.

Examples

There are many m-estimators in common econometric usage. Some examples include the following.

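Ordinary Least Squares: $\rho_i(\theta) = (Y_i - X_i'\theta)^2$.

Nonlinear Least Squares: $\rho_i(\theta) = (Y_i - m(X_i, \theta))^2$ (Chapter 23).

Least Absolute Deviations: $\rho_i(\theta) = |Y_i - X_i'\theta|$ (Chapter 24).

Quantile Regression: $\rho_i(\theta) = (Y_i - X_i'\theta)\left(\tau - \mathbb{1}\{Y_i - X_i'\theta < 0\}\right)$ (Chapter 24).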

Maximum Likelihood: $\rho_i(\theta) = -\log f(Y_i \mid X_i, \theta)$. The final category, maximum likelihood estimation, includes many estimators as special cases. This includes many standard estimators of limited-dependent-variable models (Chapters 25-27). To illustrate, the probit model for a binary dependent variable is

$$P[Y = 1 \mid X] = \Phi(X'\theta)$$

where $\Phi(u)$ is the normal cumulative distribution function. We will study probit estimation in detail in Chapter 25. The negative log-density function is

$$\rho_i(\theta) = -Y_i \log \Phi(X_i'\theta) - (1 - Y_i)\log\left(1 - \Phi(X_i'\theta)\right).$$

Not all nonlinear estimators are m-estimators. Examples include method of moments, GMM, and minimum distance.

Identification and Estimation

A parameter vector is identified if it is uniquely determined by the probability distribution of the observations. This is a property of the probability distribution, not of the estimator.

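However, when discussing a specific estimator it is common to describe identification in terms of the criterion function. Assume $E|\rho(Y, X, \theta)| < \infty$. Define

$$S(\theta) = E\left[S_n(\theta)\right] = E\left[\rho(Y, X, \theta)\right]$$

and its population minimizer

$$\theta_0 = \underset{\theta \in \Theta}{\operatorname{argmin}}\; S(\theta).$$

We say that $\theta$ is identified (or point identified) by $S(\theta)$ if the minimizer $\theta_0$ is unique.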

In nonlinear models it is difficult to provide general conditions under which a parameter is identified. Identification needs to be examined on a model-by-model basis.

An m-estimator by definition minimizes $S_n(\theta)$. When there is no explicit algebraic expression for the solution the minimization is done numerically. Such numerical methods are reviewed in Chapter 12 of Probability and Statistics for Economists.
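Here is a minimal sketch of such a numerical minimization. It fits the probit criterion on simulated data, not the CPS data, using Fisher scoring: a Newton-type iteration that replaces the Hessian of $S_n(\theta)$ with its expected value. This is only one of many possible algorithms. The function names, random seed, sample size, and true parameter values are illustrative assumptions.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)  # elementwise error function from the standard library


def norm_cdf(u):
    return 0.5 * (1.0 + _erf(u / math.sqrt(2.0)))


def norm_pdf(u):
    return np.exp(-0.5 * u**2) / math.sqrt(2.0 * math.pi)


def probit_criterion(theta, y, X):
    """S_n(theta): average negative log-density of the probit model."""
    p = np.clip(norm_cdf(X @ theta), 1e-12, 1.0 - 1e-12)
    return -np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))


def probit_fisher_scoring(y, X, tol=1e-10, max_iter=100):
    """Minimize the probit criterion by Fisher scoring, starting from theta = 0."""
    n, k = X.shape
    theta = np.zeros(k)
    for _ in range(max_iter):
        xb = X @ theta
        p = np.clip(norm_cdf(xb), 1e-12, 1.0 - 1e-12)
        d = norm_pdf(xb)
        # gradient of S_n(theta), i.e. (1/n) sum_i psi_i(theta)
        grad = -X.T @ ((y - p) * d / (p * (1.0 - p))) / n
        # expected Hessian (1/n) sum_i X_i X_i' phi^2 / (Phi (1 - Phi)), positive definite
        w = d**2 / (p * (1.0 - p))
        H = (X * w[:, None]).T @ X / n
        step = np.linalg.solve(H, grad)
        theta = theta - step
        if np.max(np.abs(step)) < tol:
            break
    return theta


# Simulated data: P[Y = 1 | X] = Phi(X'theta0) with an intercept and one regressor.
rng = np.random.default_rng(0)
n = 5000
X = np.column_stack([np.ones(n), rng.normal(size=n)])
theta0 = np.array([-0.5, 1.0])
y = (X @ theta0 + rng.normal(size=n) > 0).astype(float)
theta_hat = probit_fisher_scoring(y, X)
print(theta_hat)
```

The probit criterion is convex in $\theta$, which is why a simple Newton-type iteration from $\theta = 0$ is adequate here. Less well-behaved criteria may need line searches or several starting values.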

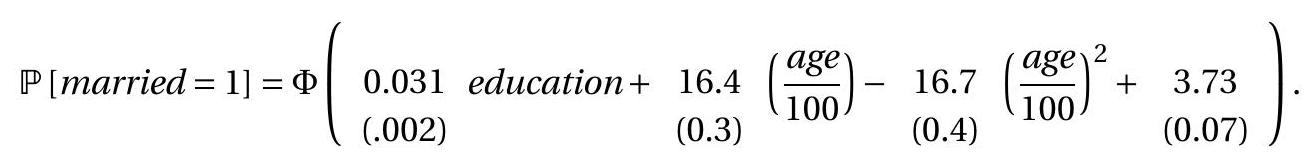

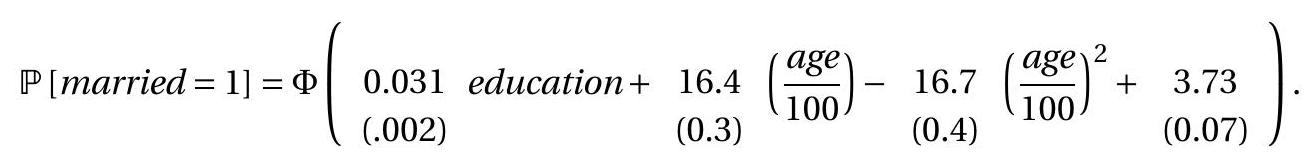

We illustrate using the probit model of the previous section. We use the CPS dataset for $Y$ equal to an indicator that the individual is married (we define married if marital equals 1, 2, or 3), and set the regressors equal to years of education, age, and age squared. We obtain the following estimates

Standard error calculation will be discussed in Section 22.8. In this application we see that the probability of marriage is increasing in years of education and is an increasing yet concave function of age.

Consistency

It seems reasonable to expect that if a parameter is identified then we should be able to estimate the parameter consistently. For linear estimators we demonstrated consistency by applying the WLLN to the explicit algebraic expressions for the estimators. This is not possible for nonlinear estimators because they do not have explicit algebraic expressions.

Instead, what is available to us is that an m-estimator minimizes the criterion function $S_n(\theta)$, which is itself a sample average. For any given $\theta$ the WLLN shows that $S_n(\theta) \to_p S(\theta)$. It is intuitive that the minimizer of $S_n(\theta)$ (the m-estimator $\widehat{\theta}$) will converge in probability to the minimizer of $S(\theta)$ (the parameter $\theta_0$). However, the WLLN by itself is not sufficient to make this extension.

Figure 22.1: Non-Uniform vs. Uniform Convergence. Panel (a): Non-Uniform Convergence; Panel (b): Uniform Convergence.

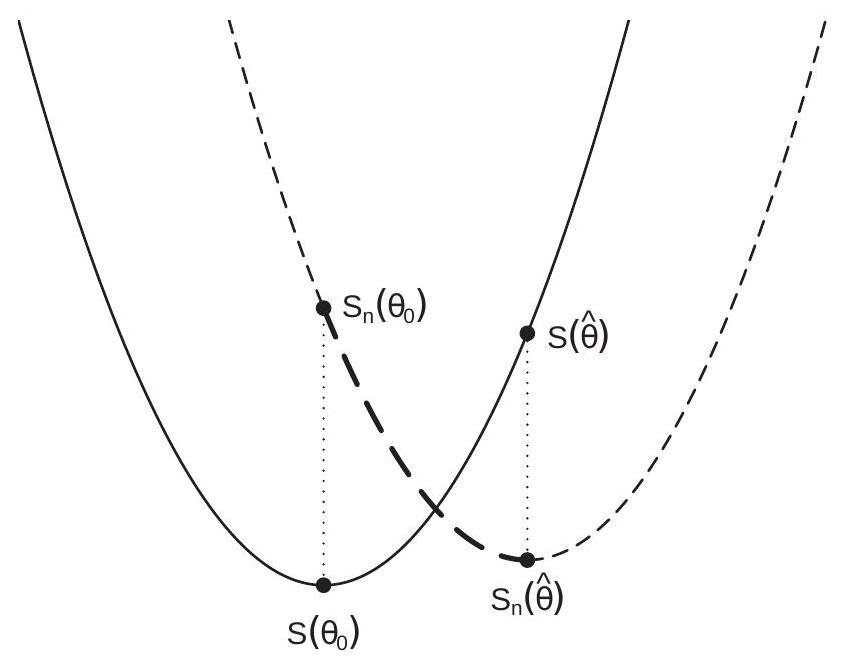

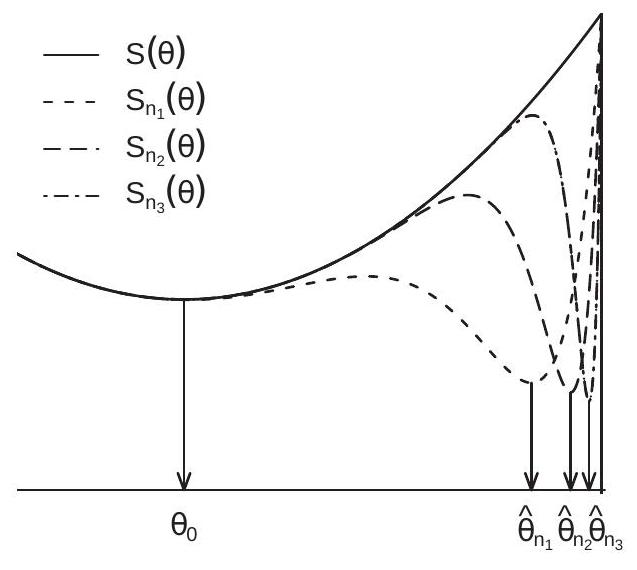

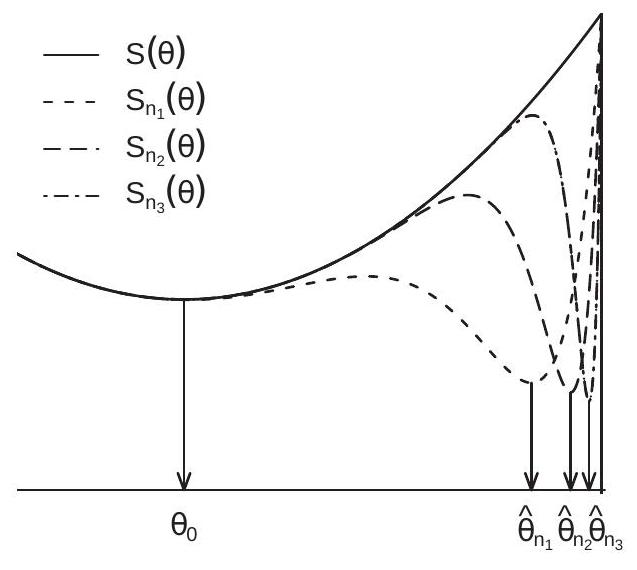

To see the problem examine Figure 22.1(a). This displays a sequence of functions $S_n(\theta)$ (the dashed lines) for three values of $n$. What is illustrated is that for each $\theta$ the function $S_n(\theta)$ converges towards the limit function $S(\theta)$. However, for each $n$ the function $S_n(\theta)$ has a severe dip in the right-hand region. The result is that the sample minimizer $\widehat{\theta}_n$ converges to the right limit of the parameter space. In contrast, the minimizer $\theta_0$ of the limit criterion $S(\theta)$ is in the interior of the parameter space. What we observe is that $S_n(\theta)$ converges to $S(\theta)$ for each $\theta$ but the minimizer $\widehat{\theta}_n$ does not converge to $\theta_0$.
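The pathology in Figure 22.1(a) can be reproduced with a deterministic example; a sequence of constants is a special case of convergence in probability. In this hypothetical construction (not the functions plotted in the figure), $\Theta = [0, 1]$ and $S(\theta) = (\theta - 0.5)^2$. The sample criterion $S_n(\theta)$ subtracts a V-shaped dip of depth one and half-width $1/(2n)$ centred at $1 - 1/n$. For each fixed $\theta$ the dip eventually vanishes, yet $\sup_\theta |S_n(\theta) - S(\theta)| = 1$ for every $n$, and the grid minimizer of $S_n(\theta)$ drifts to the boundary.

```python
import numpy as np


def S(theta):
    """Limit criterion with unique interior minimizer theta_0 = 0.5."""
    return (theta - 0.5) ** 2


def S_n(theta, n):
    """Hypothetical S(theta) minus a V-shaped dip of depth 1 centred at 1 - 1/n."""
    centre = 1.0 - 1.0 / n
    dip = np.maximum(0.0, 1.0 - 2.0 * n * np.abs(theta - centre))
    return S(theta) - dip


grid = np.linspace(0.0, 1.0, 100_001)  # Theta = [0, 1] with spacing 1e-5
results = {}
for n in (10, 100, 1000):
    values = S_n(grid, n)
    argmin = grid[np.argmin(values)]               # minimizer of S_n on the grid
    sup_gap = np.max(np.abs(values - S(grid)))     # sup over the grid of |S_n - S|
    gap_at_09 = abs(S_n(0.9, n) - S(0.9))          # pointwise gap at theta = 0.9
    results[n] = (argmin, sup_gap, gap_at_09)
    print(n, argmin, sup_gap, gap_at_09)
```

For $n = 1000$ the grid minimizer sits at $0.999$, far from $\theta_0 = 0.5$, even though the pointwise gap at $\theta = 0.9$ is already zero. The supremum gap never shrinks, so uniform convergence fails.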

A sufficient condition to exclude this pathological behavior is uniform convergence, that is, convergence uniformly over the parameter space $\Theta$. As we show in Theorem 22.1, uniform convergence in probability of $S_n(\theta)$ to $S(\theta)$ is sufficient to establish that the m-estimator $\widehat{\theta}$ is consistent for $\theta_0$.

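Definition 22.1 $S_n(\theta)$ converges in probability to $S(\theta)$ uniformly over $\theta \in \Theta$ if

$$\sup_{\theta \in \Theta}\left|S_n(\theta) - S(\theta)\right| \to_p 0$$

as $n \to \infty$.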

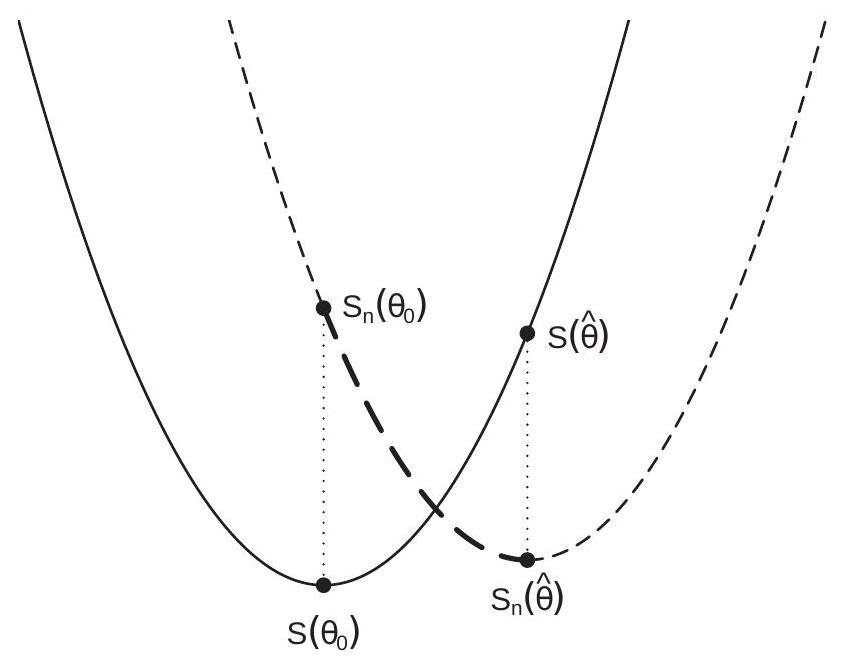

Uniform convergence excludes erratic wiggles in $S_n(\theta)$ uniformly across $\theta$ and $n$ (e.g., what occurs in Figure 22.1(a)). The idea is illustrated in Figure 22.1(b). The heavy solid line is the function $S(\theta)$. The dashed lines are $S(\theta) + \varepsilon$ and $S(\theta) - \varepsilon$. The thin solid line is the sample criterion $S_n(\theta)$. The figure illustrates a situation where the sample criterion satisfies $\sup_{\theta \in \Theta}|S_n(\theta) - S(\theta)| < \varepsilon$. The sample criterion as displayed weaves up and down but stays within $\varepsilon$ of $S(\theta)$. Uniform convergence holds if the event shown in Figure 22.1(b) holds with high probability for $n$ sufficiently large, for any arbitrarily small $\varepsilon$.
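By contrast, for a well-behaved criterion the supremum gap shrinks as $n$ grows. The sketch below assumes $Y_i \sim N(0, 1)$, $\rho_i(\theta) = (Y_i - \theta)^2$ and $\Theta = [-1, 1]$, so that $S(\theta) = 1 + \theta^2$. Because $S_n(\theta) - S(\theta)$ is linear in $\theta$, its supremum over $\Theta$ is attained at an endpoint. The grid contains both endpoints, so it computes the supremum exactly. The seed and sample sizes are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(1)
grid = np.linspace(-1.0, 1.0, 2001)  # Theta = [-1, 1], endpoints included


def sup_gap(n):
    """sup over Theta of |S_n(theta) - S(theta)| for one simulated sample of size n."""
    y = rng.normal(size=n)
    # S_n(theta) = mean((y - theta)^2), expanded so it is cheap to evaluate on the grid
    S_n = np.mean(y**2) - 2.0 * grid * np.mean(y) + grid**2
    S = 1.0 + grid**2
    return np.max(np.abs(S_n - S))


gaps = {n: sup_gap(n) for n in (100, 10_000, 1_000_000)}
print(gaps)
```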

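Theorem 22.1 $\widehat{\theta} \to_p \theta_0$ as $n \to \infty$ if

1. $S_n(\theta)$ converges in probability to $S(\theta)$ uniformly over $\theta \in \Theta$.

2. $\theta_0$ uniquely minimizes $S(\theta)$ in the sense that for all $\varepsilon > 0$,
$$\inf_{\theta : \|\theta - \theta_0\| \ge \varepsilon} S(\theta) > S(\theta_0).$$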

Theorem 22.1 shows that an m-estimator is consistent for its population parameter. There are only two conditions. First, the criterion function converges uniformly in probability to its expected value; second, the minimizer is unique. The uniqueness assumption excludes the possibility that $\lim_{j \to \infty} S(\theta_j) = S(\theta_0)$ for some sequence $\theta_j \in \Theta$ not converging to $\theta_0$.

The proof of Theorem 22.1 is provided in Section 22.9.

Asymptotic Distribution

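We now establish an asymptotic distribution theory. We start with an informal demonstration, present a general result under high-level conditions, and then discuss the assumptions and conditions. Define

$$\psi(Y, X, \theta) = \frac{\partial}{\partial \theta}\rho(Y, X, \theta), \qquad \bar{\psi}_n(\theta) = \frac{\partial}{\partial \theta}S_n(\theta) = \frac{1}{n}\sum_{i=1}^{n}\psi(Y_i, X_i, \theta), \qquad \psi(\theta) = E\left[\psi(Y, X, \theta)\right].$$

Also define $\psi_i(\theta) = \psi(Y_i, X_i, \theta)$ and $\psi_i = \psi_i(\theta_0)$.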

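Since the m-estimator $\widehat{\theta}$ minimizes $S_n(\theta)$, it satisfies the first-order condition $0 = \bar{\psi}_n(\widehat{\theta})$ if $\widehat{\theta}$ is an interior solution. Since $\widehat{\theta}$ is consistent, this occurs with probability approaching one if $\theta_0$ is in the interior of the parameter space $\Theta$. Expand the right-hand side as a first-order Taylor expansion about $\theta_0$. This is valid when $\widehat{\theta}$ is in a neighborhood of $\theta_0$, which holds for $n$ sufficiently large by Theorem 22.1. This yields

$$0 = \bar{\psi}_n(\widehat{\theta}) \simeq \bar{\psi}_n(\theta_0) + \frac{\partial^2}{\partial \theta \partial \theta'}S_n(\theta_0)\left(\widehat{\theta} - \theta_0\right). \tag{22.1}$$

Rewriting, we obtain

$$\sqrt{n}\left(\widehat{\theta} - \theta_0\right) \simeq -\left(\frac{\partial^2}{\partial \theta \partial \theta'}S_n(\theta_0)\right)^{-1}\left(\sqrt{n}\,\bar{\psi}_n(\theta_0)\right).$$

Consider the two components. First, by the WLLN

$$\frac{\partial^2}{\partial \theta \partial \theta'}S_n(\theta_0) = \frac{1}{n}\sum_{i=1}^{n}\frac{\partial^2}{\partial \theta \partial \theta'}\rho_i(\theta_0) \to_p E\left[\frac{\partial^2}{\partial \theta \partial \theta'}\rho_i(\theta_0)\right] \stackrel{\text{def}}{=} Q.$$

Second,

$$\sqrt{n}\,\bar{\psi}_n(\theta_0) = \frac{1}{\sqrt{n}}\sum_{i=1}^{n}\psi_i. \tag{22.2}$$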

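Since $\theta_0$ minimizes $S(\theta) = E\left[\rho_i(\theta)\right]$, it satisfies the first-order condition

$$0 = \psi(\theta_0) = E\left[\psi_i\right]. \tag{22.3}$$

Thus the summands in (22.2) are mean zero. Applying a CLT, this sum converges in distribution to $N(0, \Omega)$ where $\Omega = E\left[\psi_i \psi_i'\right]$. We deduce that

$$\sqrt{n}\left(\widehat{\theta} - \theta_0\right) \to_d -Q^{-1}N(0, \Omega) = N\left(0, Q^{-1}\Omega Q^{-1}\right).$$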

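The technical hurdle in making this derivation rigorous is justifying the Taylor expansion (22.1). This can be done through smoothness of the second derivative of $\rho_i(\theta)$. An alternative (more advanced) argument based on empirical process theory uses weaker assumptions. Set

$$Q(\theta) = \frac{\partial}{\partial \theta'}\psi(\theta) = \frac{\partial^2}{\partial \theta \partial \theta'}S(\theta), \qquad Q = Q(\theta_0), \qquad \Omega = E\left[\psi_i \psi_i'\right].$$

Let $\mathcal{N}$ be some neighborhood of $\theta_0$.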

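Theorem 22.4 Assume the conditions of Theorem 22.1 hold, plus

1. $E\left\|\psi(Y, X, \theta_0)\right\|^2 < \infty$.

2. $Q > 0$.

3. $Q(\theta)$ is continuous in $\theta \in \mathcal{N}$.

4. For all $\theta_1, \theta_2 \in \mathcal{N}$, $\left\|\psi(Y, X, \theta_1) - \psi(Y, X, \theta_2)\right\| \le B(Y, X)\left\|\theta_1 - \theta_2\right\|$ where $E\left[B(Y, X)^2\right] < \infty$.

5. $\theta_0$ is in the interior of $\Theta$.

Then as $n \to \infty$, $\sqrt{n}\left(\widehat{\theta} - \theta_0\right) \to_d N(0, V)$ where $V = Q^{-1}\Omega Q^{-1}$.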

The proof of Theorem 22.4 is presented in Section 22.9.

In some cases the asymptotic covariance matrix simplifies. The leading case is correctly specified maximum likelihood estimation, where $Q = \Omega$ so $V = Q^{-1} = \Omega^{-1}$.

Assumption 1 states that the scores $\psi_i$ have a finite second moment. This is necessary in order to apply the CLT. Assumption 2 is a full-rank condition and is related to identification. A sufficient condition for Assumption 3 is that the scores $\psi(Y, X, \theta)$ are continuously differentiable, but this is not necessary. Assumption 3 is broader, allowing for discontinuous $\psi(Y, X, \theta)$ so long as its expectation $\psi(\theta)$ is continuous and differentiable. Assumption 4 states that $\psi(Y, X, \theta)$ is Lipschitz-continuous for $\theta$ near $\theta_0$. Assumption 5 is required in order to justify the application of the mean-value expansion.
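The simplification $Q = \Omega$ for correctly specified maximum likelihood can be checked by simulation. The sketch below assumes a hypothetical Poisson regression with a single regressor $X \sim U(0, 1)$ and $\theta_0 = 1$. The negative log-density is $\rho_i(\theta) = \exp(X_i\theta) - Y_iX_i\theta$ up to a constant, so $\psi_i = X_i(\exp(X_i\theta_0) - Y_i)$ and the second derivative is $X_i^2\exp(X_i\theta_0)$.

Under the Poisson model the sample analogues of $Q$ and $\Omega$ nearly coincide. With overdispersed (gamma-mixed) counts, which keep the same conditional mean, $\Omega$ is roughly twice $Q$.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 200_000
theta0 = 1.0
x = rng.uniform(size=n)
mu = np.exp(x * theta0)          # conditional mean exp(X theta0)


def q_and_omega(y):
    """Sample analogues of Q and Omega at theta0 for the Poisson criterion."""
    psi = x * (mu - y)           # score psi_i(theta0)
    Q = np.mean(x**2 * mu)       # average second derivative of rho_i at theta0
    Omega = np.mean(psi**2)      # average squared score
    return Q, Omega


# Correctly specified: Y | X ~ Poisson(mu)
Q_ml, Omega_ml = q_and_omega(rng.poisson(mu))
# Misspecified: gamma mixing with mean 1 and variance 0.5 keeps E[Y | X] = mu but inflates the variance
y_over = rng.poisson(mu * rng.gamma(shape=2.0, scale=0.5, size=n))
Q_mis, Omega_mis = q_and_omega(y_over)
print(Omega_ml / Q_ml, Omega_mis / Q_mis)
```

In the misspecified case, using $Q^{-1}$ in place of $Q^{-1}\Omega Q^{-1}$ would understate the sampling variance.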

Asymptotic Distribution Under Broader Conditions*

Assumption 4 in Theorem 22.4 requires that $\psi(Y, X, \theta)$ is Lipschitz-continuous. While this holds in most applications, it is violated in some important applications including quantile regression. In such cases we can appeal to alternative regularity conditions. These are more flexible, but less intuitive.

The following result is a simple generalization of Lipschitz-continuity.

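Theorem 22.5 The results of Theorem 22.4 hold if Assumption 4 is replaced with the following condition: For all $\theta \in \mathcal{N}$ and all $\delta > 0$,

$$\left(E\left[\sup_{\|\theta_1 - \theta\| < \delta}\left\|\psi(Y, X, \theta_1) - \psi(Y, X, \theta)\right\|^2\right]\right)^{1/2} \le C\delta^{\lambda} \tag{22.4}$$

for some $C < \infty$ and $0 < \lambda < \infty$.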

See Theorem of Probability and Statistics for Economists or Theorem 5 of Andrews (1994).

The bound (22.4) holds for many examples with discontinuous $\psi(Y, X, \theta)$ when the discontinuities occur with zero probability.
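To see how (22.4) accommodates a discontinuous score, consider the location version of least absolute deviations, $\rho(Y, \theta) = |Y - \theta|$, with score $\psi(Y, \theta) = -\operatorname{sgn}(Y - \theta)$. For illustration, assume $Y \sim N(0, 1)$.

The supremum in (22.4) equals 2 when $Y$ lies within $\delta$ of $\theta$ and 0 otherwise. The left side is therefore $2\,P\left[|Y - \theta| < \delta\right]^{1/2} \le 2\left(2\delta\phi(0)\right)^{1/2}$, which gives (22.4) with $\lambda = 1/2$ and $C = 2(2\phi(0))^{1/2}$. The sketch below evaluates this exactly; the helper names are hypothetical.

```python
import math


def Phi(u):
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))


def lhs_22_4(theta, delta):
    """Left side of (22.4) for psi(Y, theta) = -sgn(Y - theta) with Y ~ N(0, 1).

    The supremum over |theta1 - theta| < delta of |psi(Y, theta1) - psi(Y, theta)|
    is 2 when |Y - theta| < delta (Y = theta has probability zero) and 0 otherwise,
    so E[sup^2] = 4 P[|Y - theta| < delta].
    """
    prob = Phi(theta + delta) - Phi(theta - delta)
    return math.sqrt(4.0 * prob)


phi0 = 1.0 / math.sqrt(2.0 * math.pi)   # maximum of the N(0, 1) density
C = 2.0 * math.sqrt(2.0 * phi0)          # constant in (22.4) with lambda = 1/2
for delta in (1.0, 0.1, 0.01, 1e-4):
    ratio = lhs_22_4(0.0, delta) / math.sqrt(delta)
    print(delta, ratio, ratio <= C)
```

The ratio stays below $C \approx 1.79$ and approaches it as $\delta \to 0$. Although the score jumps, it does so only on a set of probability proportional to $\delta$.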

We next present a set of flexible results.

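Theorem 22.6 The results of Theorem 22.4 hold if Assumption 4 is replaced with the following. First, $\left\|\psi(Y, X, \theta)\right\| \le G(Y, X)$ for $\theta \in \mathcal{N}$ with $E\left[G(Y, X)^2\right] < \infty$. Second, one of the following holds.

1. $\psi(Y, X, \theta)$ is Lipschitz-continuous.

2. $\psi(Y, X, \theta) = h(\theta'\zeta(Y, X))$ where $h(u)$ has finite total variation.

3. $\psi(Y, X, \theta)$ is a combination of functions of the form in parts 1 and 2 obtained by addition, multiplication, minimum, maximum, and composition.

4. $\{\psi(Y, X, \theta) : \theta \in \Theta\}$ is a Vapnik-Červonenkis (VC) class.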

See Theorem 18.6 of Probability and Statistics for Economists or Theorems 2 and 3 of Andrews (1994).

The function $h(u)$ in part 2 allows for discontinuous functions, including the indicator and sign functions. Part 3 shows that combinations of smooth (Lipschitz) functions and discontinuous functions satisfying the condition of part 2 are allowed. This covers many relevant applications, including quantile regression. Part 4 states a general condition, that $\{\psi(Y, X, \theta)\}$ is a VC class. As we will not be using this property in this textbook we do not discuss it further, but refer the interested reader to any textbook on empirical processes.

Theorems 22.5 and 22.6 provide alternative conditions on $\psi(Y, X, \theta)$ (other than Lipschitz-continuity) which can be used to establish asymptotic normality of an m-estimator.

Covariance Matrix Estimation

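The standard estimator for $V$ takes the sandwich form. We estimate $\Omega$ by

$$\widehat{\Omega} = \frac{1}{n}\sum_{i=1}^{n}\widehat{\psi}_i \widehat{\psi}_i'$$

where $\widehat{\psi}_i = \psi_i(\widehat{\theta})$. When $\rho_i(\theta)$ is twice differentiable an estimator of $Q$ is

$$\widehat{Q} = \frac{1}{n}\sum_{i=1}^{n}\frac{\partial^2}{\partial \theta \partial \theta'}\rho_i(\widehat{\theta}).$$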

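When $\rho_i(\theta)$ is not twice differentiable, estimators of $Q$ are constructed on a case-by-case basis.

Given $\widehat{\Omega}$ and $\widehat{Q}$, an estimator for $V$ is

$$\widehat{V} = \widehat{Q}^{-1}\widehat{\Omega}\widehat{Q}^{-1}. \tag{22.5}$$

It is possible to adjust $\widehat{V}$ by multiplying by a degree-of-freedom scaling such as $n/(n-k)$ where $k = \dim(\theta)$. There is no formal guidance.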

For maximum likelihood estimators the standard covariance matrix estimator is $\widehat{V} = \widehat{Q}^{-1}$. This choice is not robust to misspecification. Therefore it is recommended to use the robust version (22.5), for example by using the “, r” option in Stata. This is unfortunately not uniformly done in practice.

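For clustered and time-series observations the estimator $\widehat{Q}$ is unaltered but the estimator $\widehat{\Omega}$ changes. For clustered samples it is

$$\widehat{\Omega} = \frac{1}{n}\sum_{g=1}^{G}\left(\sum_{\ell=1}^{n_g}\widehat{\psi}_{\ell g}\right)\left(\sum_{\ell=1}^{n_g}\widehat{\psi}_{\ell g}\right)'.$$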

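For time-series data the estimator $\widehat{\Omega}$ is unaltered if the scores $\psi_i$ are serially uncorrelated (which occurs when a model is dynamically correctly specified). Otherwise a Newey-West covariance matrix estimator can be used. With lag truncation $M$ it equals

$$\widehat{\Omega} = \sum_{\ell=-M}^{M}\left(1 - \frac{|\ell|}{M+1}\right)\frac{1}{n}\sum_{1 \le i - \ell \le n}\widehat{\psi}_{i-\ell}\widehat{\psi}_i'.$$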
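Here is a minimal NumPy sketch of the three estimators of $\Omega$. It takes as given an $n \times k$ array `psi` of estimated scores $\widehat{\psi}_i$ (rows ordered in time for the Newey-West version) and, for clustering, an array of cluster labels. The function names are hypothetical.

```python
import numpy as np


def omega_iid(psi):
    """(1/n) sum_i psi_i psi_i'."""
    n = psi.shape[0]
    return psi.T @ psi / n


def omega_cluster(psi, groups):
    """(1/n) sum_g (sum of psi within g)(sum of psi within g)'."""
    n, k = psi.shape
    omega = np.zeros((k, k))
    for g in np.unique(groups):
        s = psi[groups == g].sum(axis=0)
        omega += np.outer(s, s)
    return omega / n


def omega_newey_west(psi, M):
    """Bartlett-weighted sum of sample autocovariances of the scores up to lag M."""
    n = psi.shape[0]
    omega = psi.T @ psi / n                   # lag 0 term
    for lag in range(1, M + 1):
        gamma = psi[lag:].T @ psi[:-lag] / n  # (1/n) sum_i psi_i psi_{i-lag}'
        w = 1.0 - lag / (M + 1.0)
        omega += w * (gamma + gamma.T)        # lags +lag and -lag together
    return omega
```

With $M = 0$ the Newey-West estimator reduces to the standard one, and with every observation in its own cluster the clustered estimator does as well.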

Standard errors for the parameter estimates are formed by taking the square roots of the diagonal elements of $n^{-1}\widehat{V}$.
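The following sketch assembles (22.5) and the standard errors for least squares treated as an m-estimator with $\rho_i(\theta) = (Y_i - X_i'\theta)^2$. It uses simulated heteroskedastic data, which is an assumption for illustration only. Here $\widehat{\psi}_i = -2X_i\widehat{e}_i$ and $\widehat{Q} = 2n^{-1}\sum_{i=1}^{n} X_iX_i'$. The factors of 2 cancel, so the result coincides with the familiar HC0 robust covariance matrix. The helper name `sandwich` is hypothetical.

```python
import numpy as np


def sandwich(psi_hat, Q_hat):
    """Return V_hat = Q^{-1} Omega Q^{-1} and standard errors sqrt(diag(V_hat) / n)."""
    n = psi_hat.shape[0]
    Omega_hat = psi_hat.T @ psi_hat / n
    Q_inv = np.linalg.inv(Q_hat)
    V_hat = Q_inv @ Omega_hat @ Q_inv
    se = np.sqrt(np.diag(V_hat) / n)
    return V_hat, se


rng = np.random.default_rng(3)
n = 500
X = np.column_stack([np.ones(n), rng.normal(size=n)])
e = rng.normal(size=n) * (1.0 + np.abs(X[:, 1]))      # heteroskedastic errors
y = X @ np.array([1.0, 2.0]) + e

theta_hat = np.linalg.solve(X.T @ X, X.T @ y)          # minimizer of S_n(theta)
resid = y - X @ theta_hat
psi_hat = -2.0 * X * resid[:, None]                    # psi_i = -2 X_i (Y_i - X_i' theta_hat)
Q_hat = 2.0 * X.T @ X / n                              # average second derivative of rho_i
V_hat, se = sandwich(psi_hat, Q_hat)
print(theta_hat, se)
```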

Technical Proofs*

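Proof of Theorem 22.1 The proof proceeds in two steps. First, we show that $S(\widehat{\theta}) \to_p S(\theta_0)$. Second, we show that this implies $\widehat{\theta} \to_p \theta_0$.

Since $\theta_0$ minimizes $S(\theta)$, we have $S(\theta_0) \le S(\widehat{\theta})$. Hence

$$\begin{aligned}
0 \le S(\widehat{\theta}) - S(\theta_0) &= \left[S(\widehat{\theta}) - S_n(\widehat{\theta})\right] + \left[S_n(\widehat{\theta}) - S_n(\theta_0)\right] + \left[S_n(\theta_0) - S(\theta_0)\right] \\
&\le 2\sup_{\theta \in \Theta}\left|S_n(\theta) - S(\theta)\right| \to_p 0.
\end{aligned}$$

The second inequality uses the fact that $\widehat{\theta}$ minimizes $S_n(\theta)$, so $S_n(\widehat{\theta}) \le S_n(\theta_0)$, and replaces the other two pairwise comparisons by the supremum. The final convergence is the assumed uniform convergence in probability.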

Figure 22.2: Consistency of M-Estimator

The preceding argument is illustrated in Figure 22.2. The figure displays the expected criterion $S(\theta)$ with the solid line, and the sample criterion $S_n(\theta)$ with the dashed line. The distances between the two functions at the true value $\theta_0$ and at the estimator $\widehat{\theta}$ are marked by the two dash-dotted lines. The sum of these two lengths is greater than the vertical distance between $S(\widehat{\theta})$ and $S(\theta_0)$, because the latter distance equals the sum of the two dash-dotted lines less the vertical height of the thick section of the dashed line (between $S_n(\theta_0)$ and $S_n(\widehat{\theta})$), which is positive because $S_n(\widehat{\theta}) \le S_n(\theta_0)$. The lengths of the dash-dotted lines converge to zero under the assumption of uniform convergence. Hence $S(\widehat{\theta})$ converges to $S(\theta_0)$. This completes the first step.

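In the second step of the proof we show $\widehat{\theta} \to_p \theta_0$. Fix $\varepsilon > 0$. The unique minimum assumption implies there is a $\delta > 0$ such that $\|\theta - \theta_0\| \ge \varepsilon$ implies $S(\theta) - S(\theta_0) \ge \delta$. This means that $\|\widehat{\theta} - \theta_0\| \ge \varepsilon$ implies $S(\widehat{\theta}) - S(\theta_0) \ge \delta$. Hence

$$P\left[\|\widehat{\theta} - \theta_0\| \ge \varepsilon\right] \le P\left[S(\widehat{\theta}) - S(\theta_0) \ge \delta\right].$$

The right-hand side converges to zero because $S(\widehat{\theta}) \to_p S(\theta_0)$. Thus the left-hand side converges to zero as well. Since $\varepsilon$ is arbitrary this implies that $\widehat{\theta} \to_p \theta_0$ as stated.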

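To illustrate, again examine Figure 22.2. We see $S(\widehat{\theta})$ marked on the graph of $S(\theta)$. Since $S(\widehat{\theta})$ converges to $S(\theta_0)$, the point $S(\widehat{\theta})$ slides down the graph of $S(\theta)$ towards the minimum. The only way for $\widehat{\theta}$ to not converge to $\theta_0$ would be if the function $S(\theta)$ were flat at the minimum. This is excluded by the assumption of a unique minimum.

Proof of Theorem 22.4 Expanding the population first-order condition $0 = \psi(\theta_0)$ around $\widehat{\theta}$ using the mean value theorem we find

$$0 = \psi(\widehat{\theta}) + Q(\theta_n^*)\left(\theta_0 - \widehat{\theta}\right)$$

where $\theta_n^*$ is intermediate between $\theta_0$ and $\widehat{\theta}$. Solving, we find

$$\sqrt{n}\left(\widehat{\theta} - \theta_0\right) = Q(\theta_n^*)^{-1}\sqrt{n}\,\psi(\widehat{\theta}).$$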

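The assumption that $\psi(\theta)$ is continuously differentiable means that $Q(\theta)$ is continuous in $\mathcal{N}$. Since $\theta_n^*$ is intermediate between $\theta_0$ and $\widehat{\theta}$, and the latter converges in probability to $\theta_0$, it follows that $\theta_n^*$ converges in probability to $\theta_0$ as well. Thus by the continuous mapping theorem $Q(\theta_n^*) \to_p Q(\theta_0) = Q$.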

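We next examine the asymptotic distribution of $\sqrt{n}\,\psi(\widehat{\theta})$. Define

$$\nu_n(\theta) = \sqrt{n}\left(\bar{\psi}_n(\theta) - \psi(\theta)\right).$$

An implication of the sample first-order condition $\bar{\psi}_n(\widehat{\theta}) = 0$ is

$$\sqrt{n}\,\psi(\widehat{\theta}) = \sqrt{n}\left(\psi(\widehat{\theta}) - \bar{\psi}_n(\widehat{\theta})\right) = -\nu_n(\widehat{\theta}) = -\nu_n(\theta_0) + r_n$$

where $r_n = \nu_n(\theta_0) - \nu_n(\widehat{\theta})$.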

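Since $\psi_i$ is mean zero (see (22.3)) and has a finite covariance matrix $\Omega$ by assumption, it satisfies the multivariate central limit theorem. Thus

$$\nu_n(\theta_0) = \frac{1}{\sqrt{n}}\sum_{i=1}^{n}\psi_i \to_d N(0, \Omega).$$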

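The final step is to show that $r_n = o_p(1)$. Pick any $\eta > 0$ and $\varepsilon > 0$. As shown by Theorem of Probability and Statistics for Economists, Assumption 4 implies that $\nu_n(\theta)$ is asymptotically equicontinuous, which means that (see Definition in Probability and Statistics for Economists) given $\varepsilon$ and $\eta$ there is a $\delta > 0$ such that

$$\limsup_{n \to \infty} P\left[\sup_{\|\theta - \theta_0\| \le \delta}\left\|\nu_n(\theta) - \nu_n(\theta_0)\right\| > \eta\right] \le \varepsilon. \tag{22.6}$$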

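Theorem 22.1 implies that $\widehat{\theta} \to_p \theta_0$, or

$$\lim_{n \to \infty} P\left[\|\widehat{\theta} - \theta_0\| > \delta\right] = 0. \tag{22.7}$$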

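We calculate that

$$\begin{aligned}
\limsup_{n \to \infty} P\left[\|r_n\| > \eta\right]
&\le \limsup_{n \to \infty} P\left[\left\|\nu_n(\theta_0) - \nu_n(\widehat{\theta})\right\| > \eta,\ \|\widehat{\theta} - \theta_0\| \le \delta\right] + \limsup_{n \to \infty} P\left[\|\widehat{\theta} - \theta_0\| > \delta\right] \\
&\le \limsup_{n \to \infty} P\left[\sup_{\|\theta - \theta_0\| \le \delta}\left\|\nu_n(\theta_0) - \nu_n(\theta)\right\| > \eta\right] \\
&\le \varepsilon.
\end{aligned}$$

The second inequality is (22.7) and the final inequality is (22.6). Since $\eta$ and $\varepsilon$ are arbitrary we deduce that $r_n = o_p(1)$. We conclude that

$$\sqrt{n}\,\psi(\widehat{\theta}) = -\nu_n(\theta_0) + r_n \to_d N(0, \Omega).$$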

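Together, we have shown that

$$\sqrt{n}\left(\widehat{\theta} - \theta_0\right) = Q(\theta_n^*)^{-1}\sqrt{n}\,\psi(\widehat{\theta}) \to_d Q^{-1}N(0, \Omega) = N\left(0, Q^{-1}\Omega Q^{-1}\right)$$

as claimed.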

Technically, since $\psi(\theta)$ is a vector, the expansion is done separately for each element of the vector, so the intermediate value varies by the rows of $Q(\theta_n^*)$. This doesn't affect the conclusion.

Exercises

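Exercise 22.1 Take the model $Y = X'\theta + e$ where $e$ is independent of $X$ and has known density function $f(e)$ which is continuously differentiable.

Show that the conditional density of $Y$ given $X = x$ is $f(y - x'\theta)$.

Find the functions $\rho(Y, X, \theta)$ and $\psi(Y, X, \theta)$.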

Calculate the asymptotic covariance matrix.

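Exercise 22.2 Take the model $Y = X'\theta + e$. Consider the m-estimator of $\theta$ with $\rho(Y, X, \theta) = g(Y - X'\theta)$ where $g(u)$ is a known function.

Find the functions $\rho(Y, X, \theta)$ and $\psi(Y, X, \theta)$.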

Calculate the asymptotic covariance matrix.

Exercise 22.3 For the estimator described in Exercise 22.2 set .

Sketch $g(u)$. Is $g(u)$ continuous? Differentiable? Second differentiable?

Find the functions $\rho(Y, X, \theta)$ and $\psi(Y, X, \theta)$.

Calculate the asymptotic covariance matrix.

Exercise 22.4 For the estimator described in Exercise 22.2 set .

Sketch $g(u)$. Is $g(u)$ continuous? Differentiable? Second differentiable?

Find the functions $\rho(Y, X, \theta)$ and $\psi(Y, X, \theta)$.

Calculate the asymptotic covariance matrix.
